Cross-audiovisual information conversion method based on semantic preservation

A technology in the area of audio-visual information and semantics, applied to cross-audiovisual information conversion. It addresses problems such as limitations in generating sound waveforms, and is reported to achieve strong sound intelligibility, high robustness and accuracy, and fast training speed.

Active Publication Date: 2021-05-14

NORTHWESTERN POLYTECHNICAL UNIV


AI Technical Summary

Problems solved by technology

The invention addresses the limitation of existing vision-to-auditory cross-modal information conversion methods in accurately generating sound waveforms based on human language in an unconstrained environment.


Examples


Specific Embodiment

[0073] 1. Simulation conditions

[0074] This embodiment was simulated using Python and related toolkits on a machine with an Intel(R) Xeon(R) CPU E5-2650 V4 @ 2.20GHz, 500 GB of memory, and the Ubuntu 14 operating system.

[0075] The data used in the simulation is an image-audio description dataset that the inventors built by adding audio descriptions to an existing dataset.

[0076] 2. Simulation content


Abstract

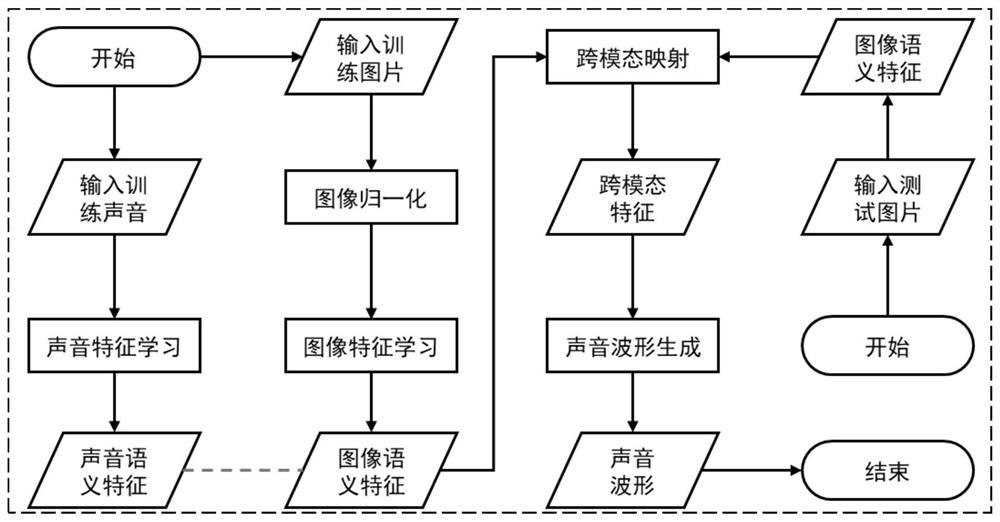

The invention discloses a cross-audiovisual information conversion method based on semantic preservation. Audio-visual information conversion is treated as a representation similarity learning problem in a low-dimensional space: semantic features are extracted from an image, the features are converted across modalities in the low-dimensional space, and the resulting low-dimensional cross-modal features are finally mapped into sound waveforms based on human language. The invention addresses the limitation of existing visual-to-auditory cross-modal information conversion methods in accurately generating human-language sound waveforms in an unconstrained environment. Generating human-language sound waveforms for an unconstrained environment is more in line with practical use.
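The abstract above frames conversion as similarity learning in a low-dimensional space. The following is a minimal sketch of that idea only, not the patented method: the linear projections, the cosine-based loss, the dimensions, and all names (`linear`, `similarity_loss`, `w_img`, `w_aud`) are assumptions made for illustration, since the source does not specify the network or the loss.

```python
import math
import random


def linear(weights, vec):
    """Apply a dense linear map (a list of rows) to a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def random_matrix(rows, cols, rng):
    """Random projection weights; a real system would learn these."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def similarity_loss(image_feat, audio_feat, w_img, w_aud):
    """Project both modalities into a shared space and score their mismatch.

    Assumption: the loss is 1 - cosine similarity, so it is 0 when the two
    low-dimensional embeddings point in the same direction.
    """
    z_img = linear(w_img, image_feat)
    z_aud = linear(w_aud, audio_feat)
    return 1.0 - cosine_similarity(z_img, z_aud)


# Hypothetical feature sizes: 16-d image features, 12-d audio features,
# both mapped into a 4-d shared space.
rng = random.Random(0)
IMAGE_DIM, AUDIO_DIM, SHARED_DIM = 16, 12, 4
w_img = random_matrix(SHARED_DIM, IMAGE_DIM, rng)
w_aud = random_matrix(SHARED_DIM, AUDIO_DIM, rng)
image_feat = [rng.random() for _ in range(IMAGE_DIM)]
audio_feat = [rng.random() for _ in range(AUDIO_DIM)]

loss = similarity_loss(image_feat, audio_feat, w_img, w_aud)
print(f"similarity loss: {loss:.4f}")
```

Training would adjust the two projections so that paired image and audio descriptions score a low loss; a separate decoder, not shown here, would then map the shared-space features to a waveform.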

Description

Technical field

[0001] The invention belongs to the technical field of machine learning, and in particular relates to a cross-audiovisual information conversion method.

Background

[0002] Cross-modal information conversion from vision to hearing helps visually impaired people better perceive the world around them, so it is of strong practical value for this group. However, because of the pervasive heterogeneous semantic gap between the audio and visual modalities, and the complex data structures within the auditory modality, effective cross-audiovisual information conversion is very difficult to achieve. At present, there are relatively few studies on cross-modal information conversion from vision to hearing. The workflow of these works is roughly as follows: first, extract the semantic features of the visual data; then predict the spectrogram of the auditory data; and finally generate the sound waveform. These studies generally generat...


Application Information


Patent Type & Authority: Applications (China)

IPC(8): G10L13/027, G10L25/27

CPC: G10L13/027, G10L25/27, Y02T10/40

Inventors: 袁媛, 宁海龙

Owner: NORTHWESTERN POLYTECHNICAL UNIV
