Input coding method for constructing modeling unit of neural machine translation model

A machine translation and model-building technique, applied in the field of machine translation, that addresses problems such as reduced readability of translation results and unregistered (out-of-vocabulary) words in neural translation models.

Embodiment Construction

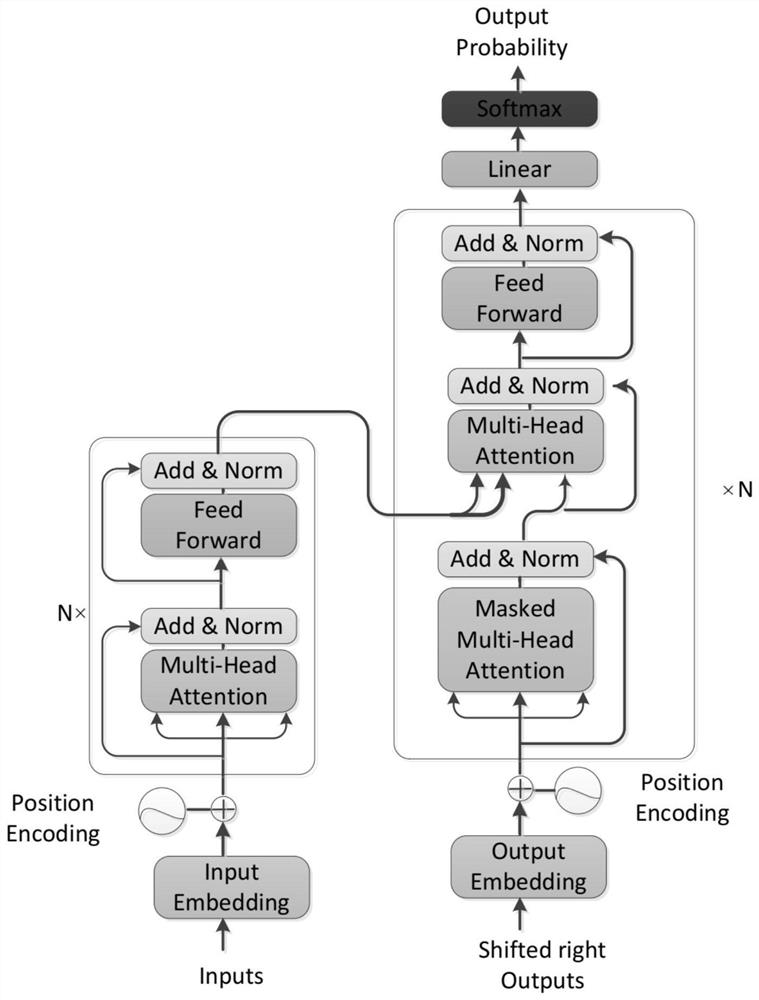

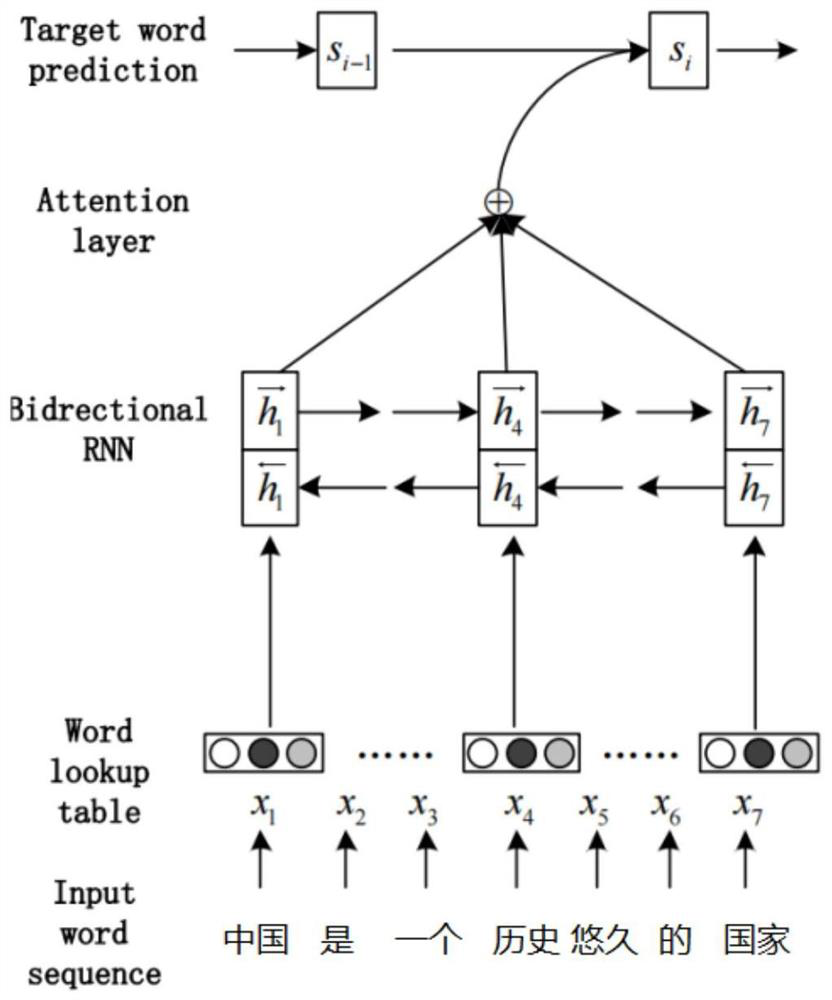

[0023] The present invention is described in detail below with reference to the accompanying drawings. The input encoding method for constructing the modeling unit of a neural machine translation model is based on an encoder-decoder architecture. The encoder relies entirely on the attention mechanism and uses neither a recurrent neural network (RNN) nor a convolutional neural network (CNN). It consists of six identical stacked layers, each containing a multi-head self-attention network and a position-wise feed-forward network; the decoder is also composed of six layers of identical modules. The decoder has the same structure as the LSTM-based decoder in the RNNSearch model: it uses a single LSTM layer to read the encoder's hidden-layer vectors and predict the target word sequence.
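As an illustration of the encoder described in [0023], the following is a minimal NumPy sketch of six identical layers, each combining multi-head self-attention with a position-wise feed-forward network. It is not the patented implementation. The residual connections, layer normalization, ReLU activation, random weights, and all dimensions (`D_MODEL`, `D_FF`, `NUM_HEADS`) are assumptions borrowed from common attention-based encoders, and every function name is hypothetical. Positional encoding and the LSTM decoder are omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, eps=1e-6):
    # Assumption: normalization without learned gain or bias.
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)


def multi_head_self_attention(x, p, num_heads):
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ p["wq"]), split(x @ p["wk"]), split(x @ p["wv"])
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, L, L)
    heads = softmax(scores, axis=-1) @ v                  # (heads, L, d_head)
    merged = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return merged @ p["wo"]


def feed_forward(x, p):
    # Applied independently at every position (hence "position-wise").
    return np.maximum(0.0, x @ p["w1"] + p["b1"]) @ p["w2"] + p["b2"]


def encoder_layer(x, p, num_heads):
    # Assumption: residual connection + layer norm around each sub-layer.
    x = layer_norm(x + multi_head_self_attention(x, p, num_heads))
    return layer_norm(x + feed_forward(x, p))


def init_layer(rng, d_model, d_ff, scale=0.1):
    # Random illustrative weights; a real model would learn these.
    return {
        "wq": rng.normal(scale=scale, size=(d_model, d_model)),
        "wk": rng.normal(scale=scale, size=(d_model, d_model)),
        "wv": rng.normal(scale=scale, size=(d_model, d_model)),
        "wo": rng.normal(scale=scale, size=(d_model, d_model)),
        "w1": rng.normal(scale=scale, size=(d_model, d_ff)),
        "b1": np.zeros(d_ff),
        "w2": rng.normal(scale=scale, size=(d_ff, d_model)),
        "b2": np.zeros(d_model),
    }


def encode(x, layers, num_heads):
    # Pass the input through each identical layer in turn.
    for p in layers:
        x = encoder_layer(x, p, num_heads)
    return x


D_MODEL, D_FF, NUM_HEADS, NUM_LAYERS = 16, 32, 4, 6  # illustrative sizes
rng = np.random.default_rng(0)
layers = [init_layer(rng, D_MODEL, D_FF) for _ in range(NUM_LAYERS)]
source = rng.normal(size=(5, D_MODEL))  # 5 input units, already embedded
hidden = encode(source, layers, NUM_HEADS)
print(hidden.shape)  # (5, 16)
```

The output `hidden` holds one vector per input unit. In the architecture described above, these are the encoder hidden-layer vectors that the LSTM decoder would read when predicting the target sequence.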

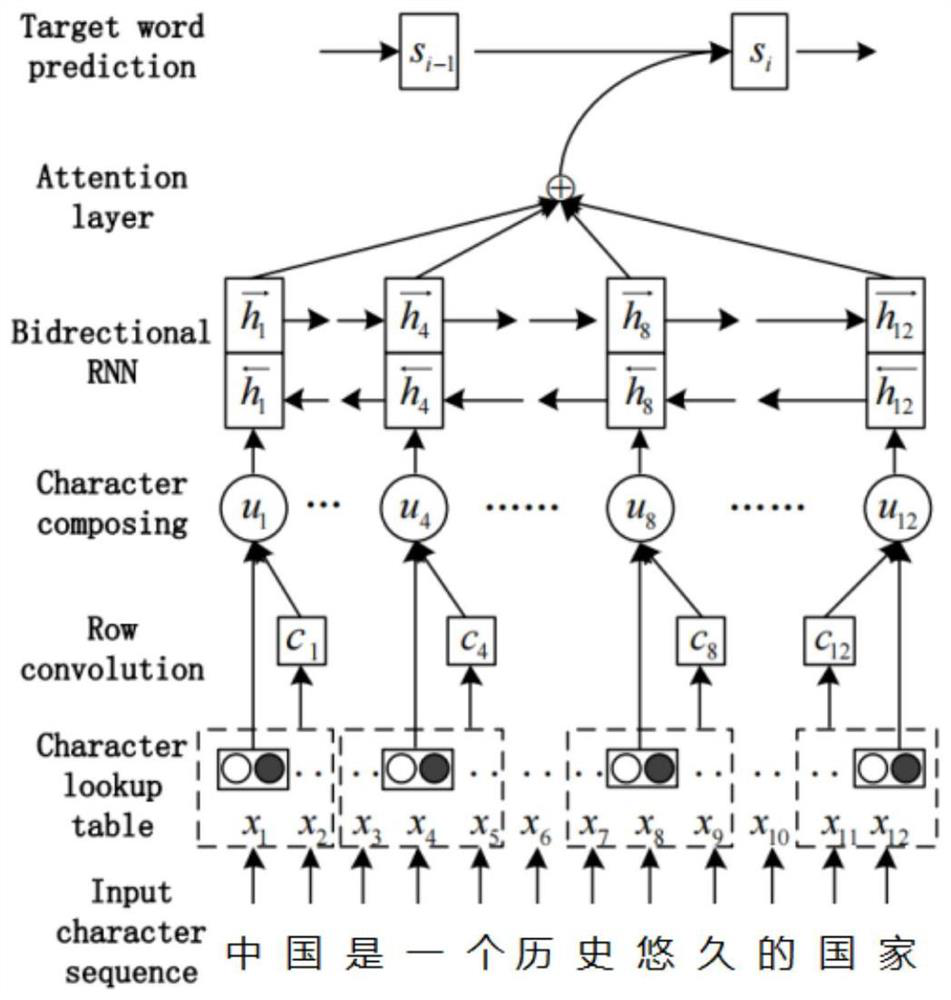

[0024] Before the encoder, the word-based modeling unit of the typical neural machine translation model with attention mechanism is reconstructed, and the modeling...
