Three-dimensional human body virtualization reconstruction method and device

A three-dimensional human body virtualization reconstruction technology, applied in the field of computer vision, that addresses problems such as the inability to restore human body information and the lack of external texture information for the clothed human body, and achieves robust results.



Examples

Embodiment 1

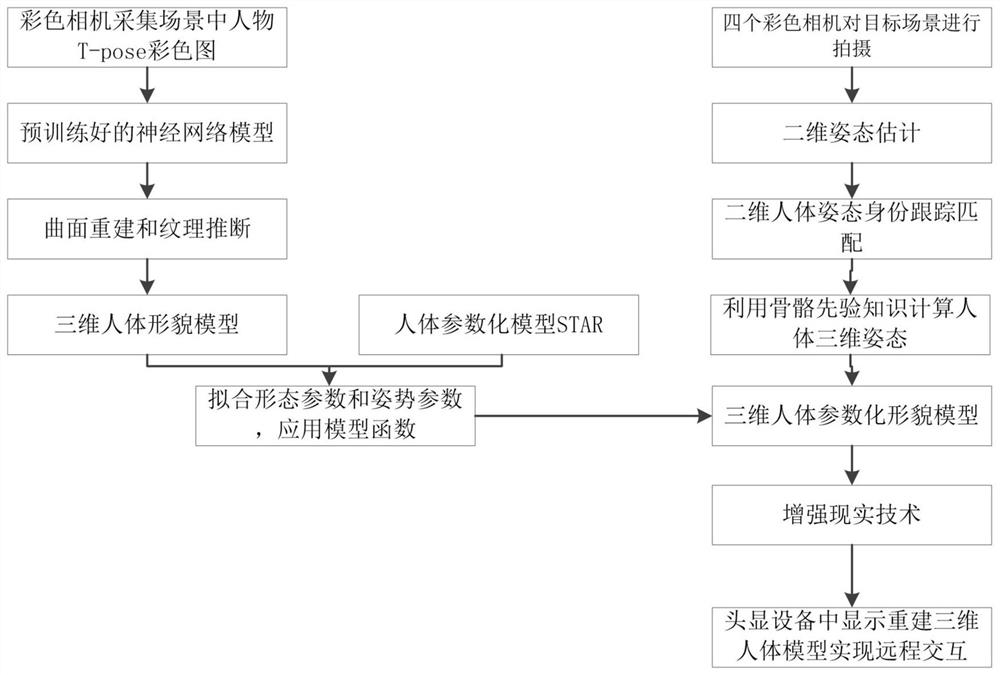

[0033] Figure 1 is a flowchart of a three-dimensional human body virtualization reconstruction method provided by an embodiment of the present invention.

[0034] As shown in Figure 1, the method of this embodiment of the present invention mainly includes the following steps:

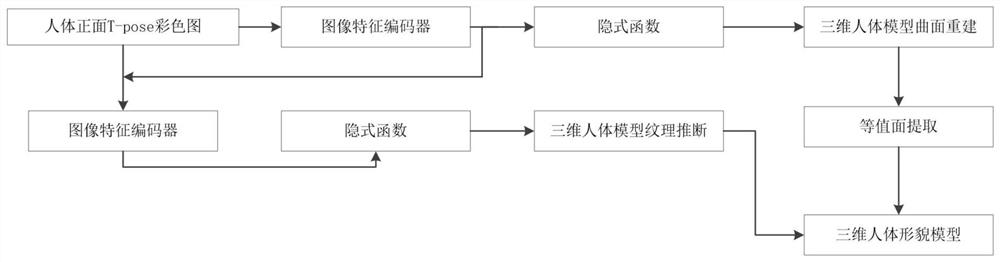

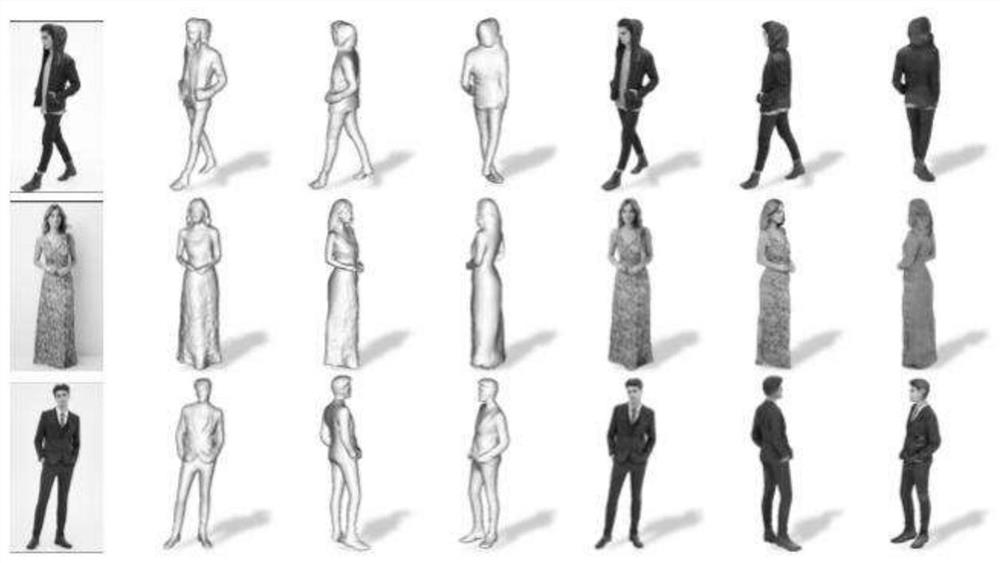

[0035] S1: Use a camera to take a picture of the human body in a standard T-pose, and input the T-pose picture into the first neural network model to obtain a three-dimensional human body shape model, wherein the first neural network model is trained in advance on a large number of real human body posture images.
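The data flow of step S1 can be sketched as follows. This is only a minimal illustration under stated assumptions: the source does not describe the architecture or output format of the first neural network model, so `FirstNetworkStub`, `BodyShapeModel`, the 64x64 input size, and the 10 shape coefficients are all hypothetical, and random weights stand in for the weights that would be learned from real human body posture images.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class BodyShapeModel:
    """Hypothetical container for the output of step S1."""
    shape_params: np.ndarray  # low-dimensional body shape coefficients (assumed)


class FirstNetworkStub:
    """Stand-in for the pre-trained first neural network (not the patent's model)."""

    def __init__(self, image_shape=(64, 64, 3), n_shape_params=10, seed=0):
        rng = np.random.default_rng(seed)
        self.image_shape = image_shape
        n_inputs = int(np.prod(image_shape))
        # Random weights stand in for weights learned from real posture images.
        self.weights = rng.normal(
            scale=1.0 / np.sqrt(n_inputs), size=(n_inputs, n_shape_params)
        )

    def predict(self, image: np.ndarray) -> BodyShapeModel:
        if image.shape != self.image_shape:
            raise ValueError(f"expected image of shape {self.image_shape}")
        # Flatten and scale pixel values to [0, 1] before the linear map.
        pixels = image.astype(np.float32).reshape(-1) / 255.0
        return BodyShapeModel(shape_params=pixels @ self.weights)


def reconstruct_shape_from_t_pose(image: np.ndarray, network: FirstNetworkStub) -> BodyShapeModel:
    """Step S1: feed the T-pose picture to the first network, get a shape model."""
    return network.predict(image)


# A blank placeholder image stands in for a real T-pose photograph.
t_pose_image = np.zeros((64, 64, 3), dtype=np.uint8)
body = reconstruct_shape_from_t_pose(t_pose_image, FirstNetworkStub())
```

In a real system the stub would be replaced by a trained model; only the interface (one T-pose picture in, one shape model out) is taken from the text.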

[0036] In this embodiment, four color cameras are placed at arbitrary edge positions in the scene, so that they capture four color images of the human body from different viewing angles. In some other embodiments, a different number of camera devices may be provided according to the viewing angles required. The photographic equipment includes cameras, video cameras ...
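One way to describe such a four-camera arrangement in code is to store each camera's position and compute the rotation that points it at the subject. The sketch below is an assumption-laden illustration, not the patent's calibration procedure: the camera positions, the subject location, the z-up world frame, and the x-right, y-down, z-forward camera convention are all chosen here for the example.

```python
import numpy as np


def look_at_rotation(camera_pos, target, world_up=(0.0, 0.0, 1.0)):
    """Return a world-to-camera rotation whose rows are the camera's
    right (x), down (y), and forward (z) axes, with z pointing at the target."""
    camera_pos = np.asarray(camera_pos, dtype=float)
    target = np.asarray(target, dtype=float)

    forward = target - camera_pos
    distance = np.linalg.norm(forward)
    if distance == 0.0:
        raise ValueError("camera and target coincide")
    forward = forward / distance

    right = np.cross(forward, np.asarray(world_up, dtype=float))
    right_norm = np.linalg.norm(right)
    if right_norm < 1e-9:
        raise ValueError("view direction is parallel to world_up")
    right = right / right_norm

    # forward x right gives the downward image axis, keeping the frame right-handed.
    down = np.cross(forward, right)
    return np.stack([right, down, forward])


# Hypothetical layout: subject at the scene centre, one camera on each edge.
SUBJECT = np.array([0.0, 0.0, 1.0])
CAMERA_POSITIONS = {
    "cam0": (2.0, 0.0, 1.5),
    "cam1": (-2.0, 0.0, 1.5),
    "cam2": (0.0, 2.0, 1.5),
    "cam3": (0.0, -2.0, 1.5),
}

# One rotation per camera, each aimed at the subject.
rig = {name: look_at_rotation(pos, SUBJECT) for name, pos in CAMERA_POSITIONS.items()}
```

Supporting a different number of cameras, as the other embodiments allow, only requires adding or removing entries in `CAMERA_POSITIONS`.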

Embodiment 2

[0075] Furthermore, as an implementation of the methods shown in the above embodiments, another embodiment of the present invention provides a three-dimensional human body virtualization reconstruction device. This device embodiment corresponds to the foregoing method embodiment. For ease of reading, this device embodiment does not repeat every detail of the foregoing method embodiment, but it should be clear that the device in this embodiment can correspondingly implement everything in the foregoing method embodiment. The device of this embodiment includes the following modules:

[0076] 1. Human body three-dimensional appearance model acquisition module: uses camera equipment to take a picture of the human body in a standard T-pose, and inputs the T-pose picture into the first neural network model to obtain the human body three-dimensional appearance model; the first neural network model uses a large number of real human body posture images fo...

