A Human Pose Estimation Method Based on Joint Relationship

A human pose estimation technique based on joint relationships, classified under computing, computer components, and instruments. It addresses problems such as the inability to train, heavy computational cost, and increased prediction difficulty, and achieves good recognition performance, high computational efficiency, and accurate joint localization.

Embodiment

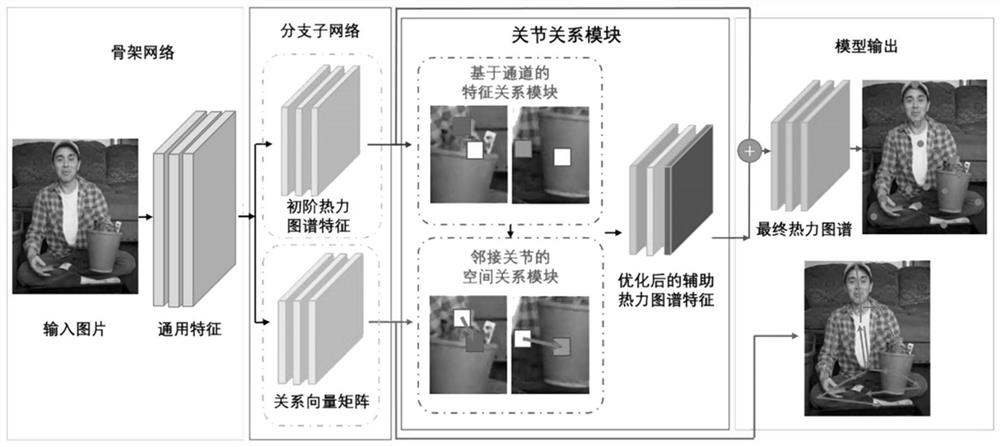

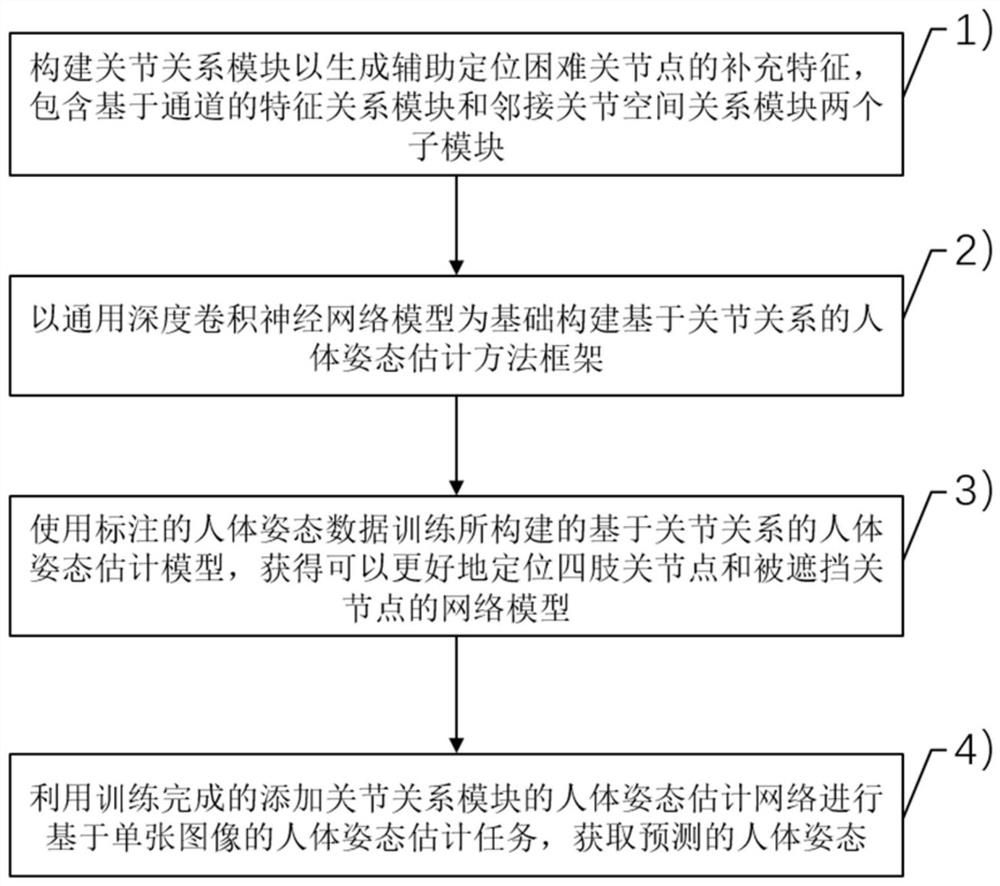

[0063] As shown in Figure 2, the human pose estimation method based on joint relationships provided by the present invention mainly comprises the following four steps:

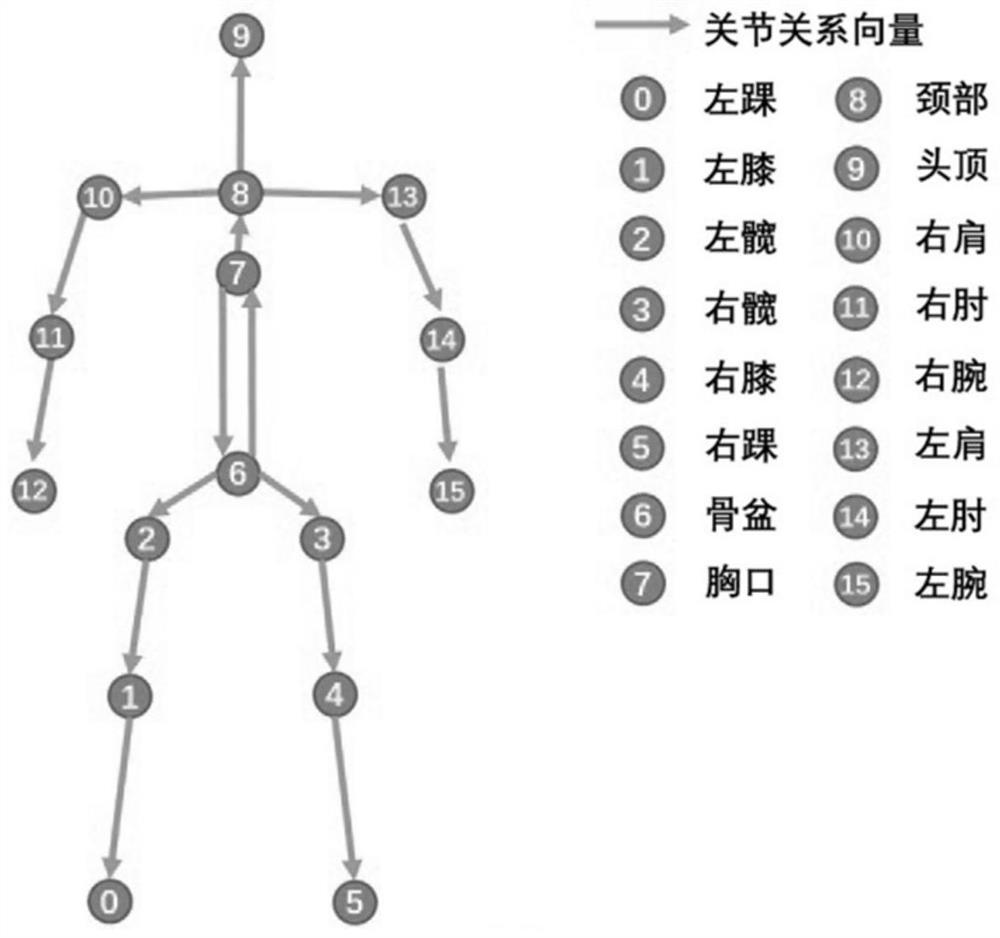

[0064] 1) Construct a joint relationship module that generates supplementary features to help localize difficult joints. It comprises two sub-modules: a channel-based feature relationship module and an adjacent-joint spatial relationship module;

[0065] 2) Build a joint-relationship-based human pose estimation model on top of a general-purpose deep convolutional neural network;

[0066] 3) Train the constructed joint-relationship-based pose estimation model on annotated human pose data, obtaining a network that better localizes limb joints and occluded joints;

[0067] 4) For an input image to be processed, use the human pose estimation network with the joint relationship module trained in step 3) to perform the human bo...
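The text available here names the two sub-modules but does not describe their internals, so the following pure-Python sketch is only one hypothetical reading of how step 1) could supplement the backbone output and how step 4) could decode joint locations. It assumes the backbone (step 2) emits one H x W heatmap per joint; the channel-based relationship is modelled as a cosine-similarity softmax mix across joint channels, in the style of channel self-attention; the adjacent-joint spatial relationship is modelled as the box-blurred mean of each joint's skeleton neighbours; and the fusion weight `alpha` and argmax decoding are likewise assumptions. All function names (`channel_relation`, `adjacent_joint_spatial`, `refine_and_locate`) and the `neighbors` skeleton map are hypothetical, and training (step 3) is not shown.

```python
import math
from typing import Dict, List, Tuple

Heatmap = List[List[float]]  # H x W grid of confidence scores for one joint


def _flatten(h: Heatmap) -> List[float]:
    return [v for row in h for v in row]


def _box_blur(h: Heatmap) -> Heatmap:
    """3x3 mean filter with zero padding, spreading evidence to nearby cells."""
    rows, cols = len(h), len(h[0])
    return [[sum(h[yy][xx]
                 for yy in range(max(0, y - 1), min(rows, y + 2))
                 for xx in range(max(0, x - 1), min(cols, x + 2))) / 9.0
             for x in range(cols)]
            for y in range(rows)]


def channel_relation(heatmaps: List[Heatmap]) -> List[Heatmap]:
    """Hypothetical channel-based feature relationship: each joint channel is
    re-expressed as a softmax-weighted mix of all channels, weighted by the
    cosine similarity between flattened channels."""
    flat = [_flatten(h) for h in heatmaps]
    norms = [math.sqrt(sum(v * v for v in f)) or 1.0 for f in flat]  # avoid /0
    k = len(heatmaps)
    rows, cols = len(heatmaps[0]), len(heatmaps[0][0])
    out = []
    for i in range(k):
        sims = [sum(a * b for a, b in zip(flat[i], flat[j])) / (norms[i] * norms[j])
                for j in range(k)]
        top = max(sims)
        exps = [math.exp(s - top) for s in sims]  # numerically stable softmax
        z = sum(exps)
        weights = [e / z for e in exps]
        out.append([[sum(weights[j] * heatmaps[j][y][x] for j in range(k))
                     for x in range(cols)] for y in range(rows)])
    return out


def adjacent_joint_spatial(heatmaps: List[Heatmap],
                           neighbors: Dict[int, List[int]]) -> List[Heatmap]:
    """Hypothetical adjacent-joint spatial relationship: each joint receives
    the blurred mean of its skeleton neighbours' heatmaps."""
    rows, cols = len(heatmaps[0]), len(heatmaps[0][0])
    out = []
    for k in range(len(heatmaps)):
        nbrs = neighbors.get(k, [])
        acc = [[0.0] * cols for _ in range(rows)]
        for n in nbrs:
            for y in range(rows):
                for x in range(cols):
                    acc[y][x] += heatmaps[n][y][x] / len(nbrs)
        out.append(_box_blur(acc))
    return out


def refine_and_locate(heatmaps: List[Heatmap], neighbors: Dict[int, List[int]],
                      alpha: float = 0.5) -> List[Tuple[int, int]]:
    """Add both supplementary features to the backbone heatmaps, then take the
    per-joint argmax as the predicted (row, col) location."""
    ch = channel_relation(heatmaps)
    sp = adjacent_joint_spatial(heatmaps, neighbors)
    coords = []
    for k, base in enumerate(heatmaps):
        best, best_yx = -math.inf, (0, 0)
        for y, row in enumerate(base):
            for x, v in enumerate(row):
                score = v + alpha * (ch[k][y][x] + sp[k][y][x])
                if score > best:
                    best, best_yx = score, (y, x)
        coords.append(best_yx)
    return coords
```

As a small illustration, take a three-joint chain 0-1-2 on a 5 x 5 grid where joint 0 peaks at (1, 1), joint 2 peaks at (3, 3), and joint 1 is fully occluded (all zeros). `adjacent_joint_spatial` then gives joint 1 a supplementary map whose maximum lies at (2, 2), between its two neighbours, which is the kind of cue the adjacent-joint module is meant to supply for a hard joint. In an actual network both sub-modules would be learned layers inside the CNN rather than fixed rules like these.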
