Caching of potential search results

A caching and cache-group technology, applied in fields such as network data retrieval, other database retrieval, and special data processing applications, that can address problems such as latency.


Embodiment Construction

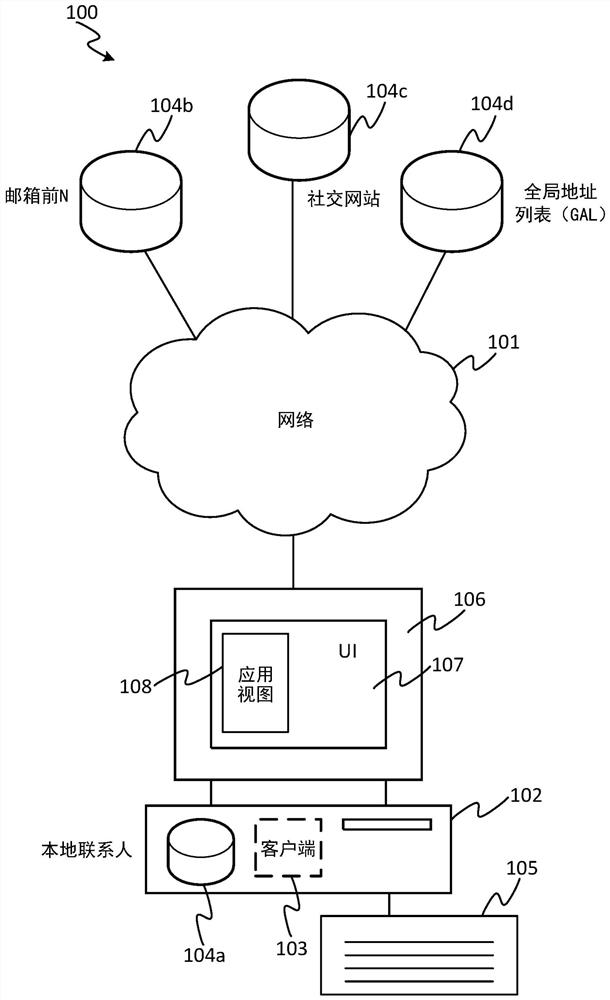

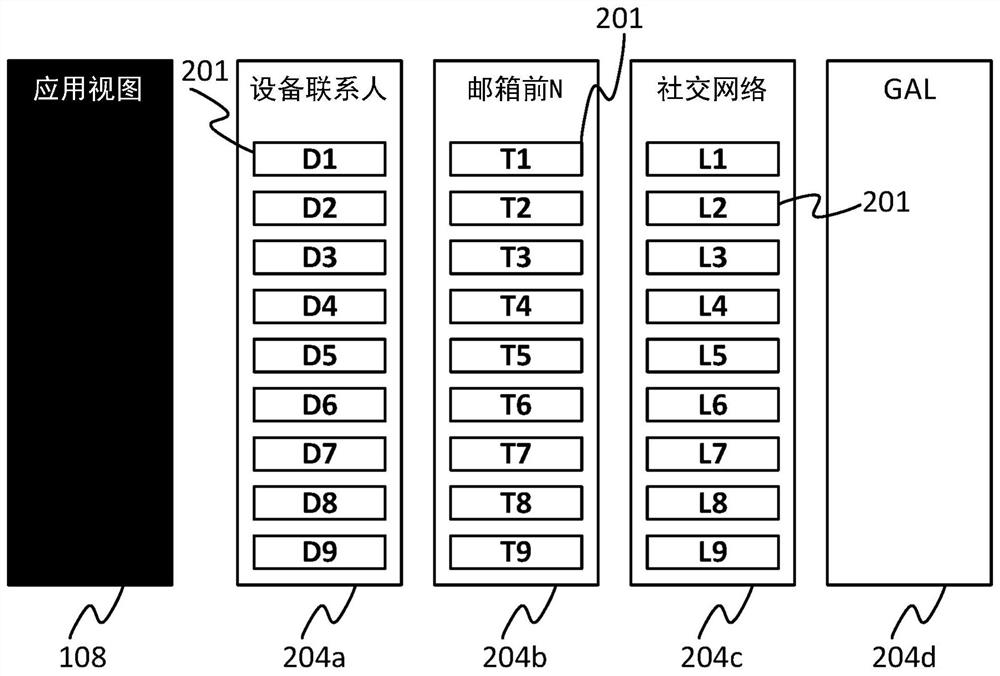

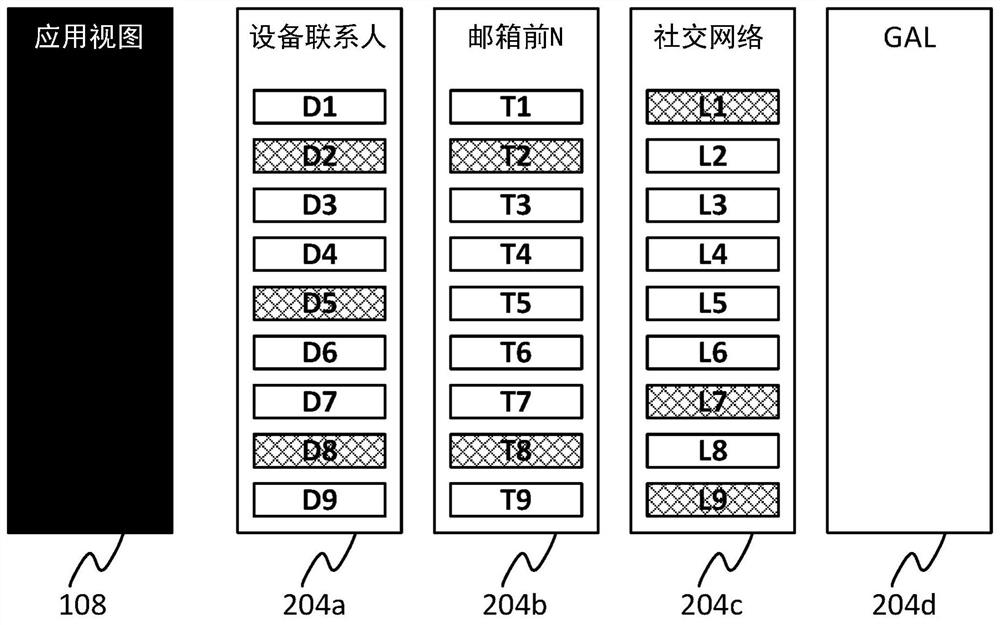

[0015] There are various scenarios in which a user may wish to search for entity records from different data sources. It is desirable to display results to the end user quickly (performance) while also ensuring that the correct entities are available for selection (integrity). Common solutions each have trade-offs: caching is memory intensive, while streaming is CPU and bandwidth intensive. The conventional way to execute queries is to evict the entire cache for each query so that the results are optimized for the query string. The approach disclosed below is a compromise between these solutions: it uses segmented streams drawn from different segments and/or sources, with frequent updates for the different segments. It can provide significant latency improvements in a variety of application scenarios, whether the user is searching for people they frequently work with or for someone they are interacting with for the first time.
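As a rough illustration of the compromise described in [0015], the following minimal sketch keeps one cache segment per data source and refreshes each segment on its own schedule instead of evicting the whole cache for every query. It is not the patented implementation: the class names `Segment` and `SegmentedCache`, the per-segment `ttl_seconds` refresh interval, the `loader` callables, and the substring matching are all assumptions introduced here for illustration.

```python
import time
from typing import Callable, Dict, List, Optional


class Segment:
    """One cache segment tied to a single (hypothetical) data source."""

    def __init__(self, loader: Callable[[], List[str]], ttl_seconds: float):
        self.loader = loader            # assumed callable that fetches records from the source
        self.ttl_seconds = ttl_seconds  # assumed per-segment refresh interval
        self.records: List[str] = []
        self.loaded_at: Optional[float] = None  # None means "never loaded"


class SegmentedCache:
    """Searches across segments, refreshing only the segments that are stale."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock  # injectable clock so refresh behaviour can be tested
        self.segments: Dict[str, Segment] = {}

    def add_segment(self, name: str, loader: Callable[[], List[str]],
                    ttl_seconds: float) -> None:
        self.segments[name] = Segment(loader, ttl_seconds)

    def _refresh_if_stale(self, segment: Segment) -> None:
        now = self.clock()
        never_loaded = segment.loaded_at is None
        if never_loaded or now - segment.loaded_at >= segment.ttl_seconds:
            segment.records = list(segment.loader())
            segment.loaded_at = now

    def search(self, query: str) -> List[str]:
        """Return records containing the query, in segment insertion order."""
        needle = query.casefold()
        results: List[str] = []
        for segment in self.segments.values():
            self._refresh_if_stale(segment)  # other segments stay cached
            for record in segment.records:
                if needle in record.casefold() and record not in results:
                    results.append(record)
        return results
```

In this sketch, a segment holding people the user frequently works with could be given a short refresh interval, while a larger directory segment could be refreshed less often, so a query only pays the cost of reloading whichever segments have actually gone stale.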

[0016] User jobs can be expressed as follows. A user wants...
