Target detection tracking method based on feature fusion and environment self-adaption

This feature fusion and target detection technology is applied in character and pattern recognition, image data processing, instruments, and related fields. It addresses problems such as tracking failure and interference, achieving good robustness, faster tracking, and reduced complexity.


Embodiment Construction

[0044] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings and examples. The following examples illustrate the present invention but are not intended to limit its scope.

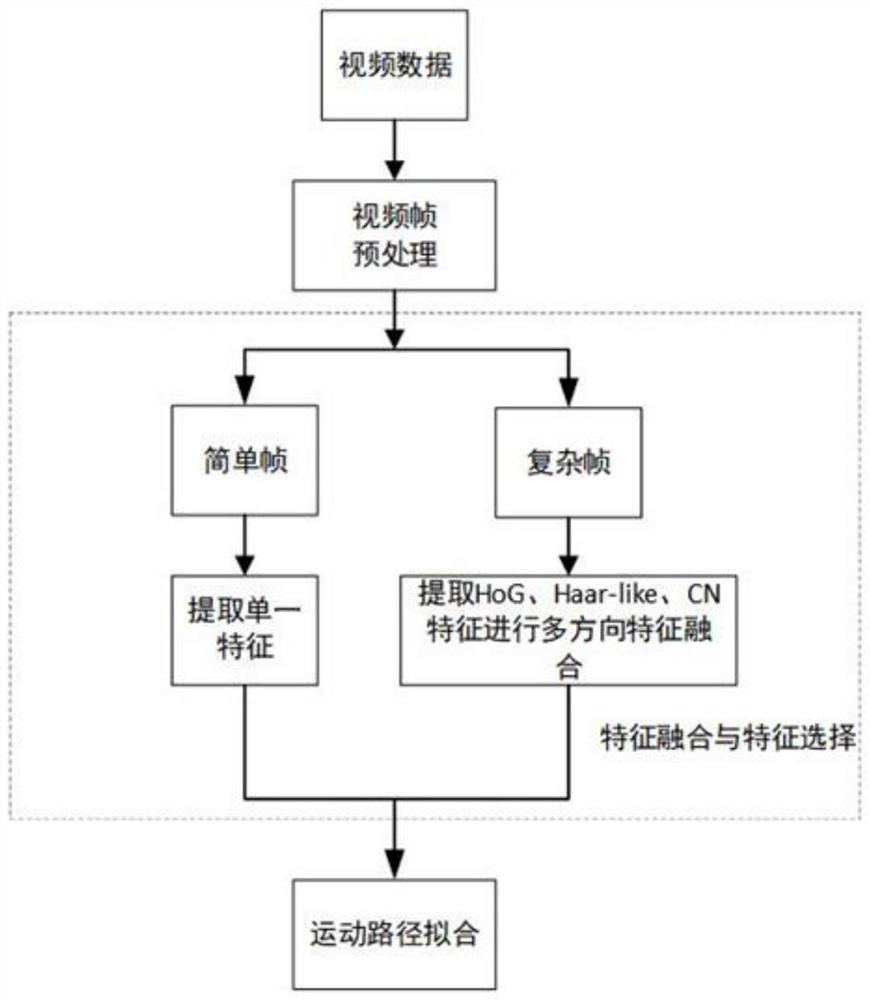

[0045] As shown in Figure 1, this embodiment provides a target detection and tracking method based on feature fusion and environment self-adaptation, comprising the following steps:

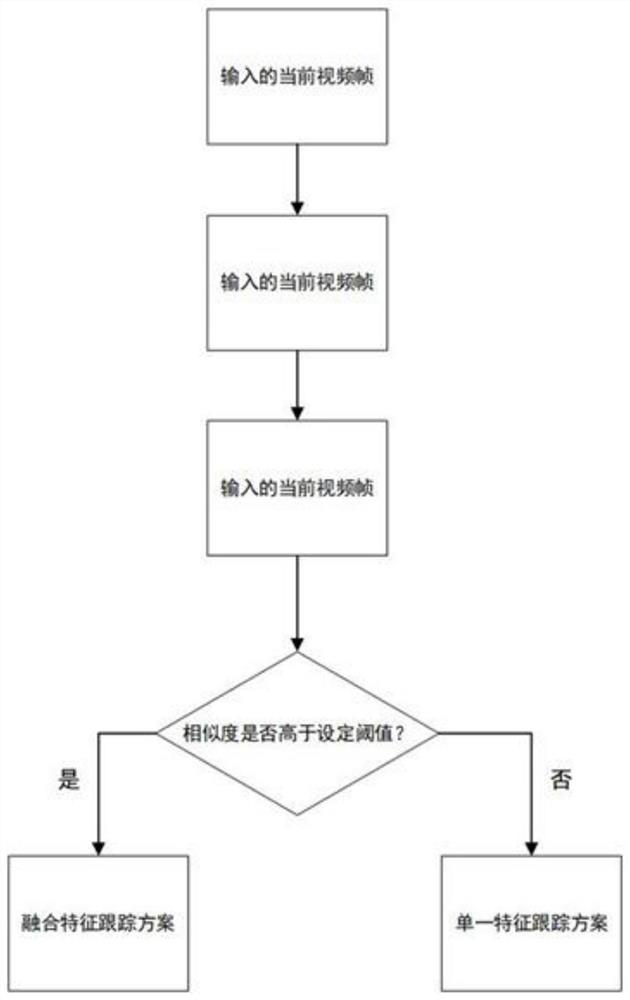

[0046] Step 1, use a pre-classifier to preprocess the video data, and divide the video data into simple frames and complex frames;
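The embodiment does not state which criterion the pre-classifier uses. The following is a minimal Python sketch, assuming a hypothetical rule in which a frame counts as complex when its mean inter-frame change or its intensity variance exceeds a threshold. The names `classify_frames`, `motion_thresh`, and `var_thresh`, and the threshold values, are illustrative assumptions, not part of the patent.

```python
from typing import List, Optional

# A grayscale frame as rows of pixel intensities in [0, 1] (assumed format).
Frame = List[List[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel change between two equally sized frames."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    return total / (len(a) * len(a[0]))


def intensity_variance(frame: Frame) -> float:
    """Population variance of pixel intensities, used here as a crude clutter measure."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def classify_frames(frames: List[Frame],
                    motion_thresh: float = 0.1,
                    var_thresh: float = 0.05) -> List[str]:
    """Label each frame 'simple' or 'complex' with a hypothetical threshold rule."""
    labels: List[str] = []
    prev: Optional[Frame] = None
    for frame in frames:
        # The first frame has no predecessor, so its motion score is zero.
        motion = mean_abs_diff(frame, prev) if prev is not None else 0.0
        is_complex = motion > motion_thresh or intensity_variance(frame) > var_thresh
        labels.append("complex" if is_complex else "simple")
        prev = frame
    return labels


flat = [[0.5, 0.5], [0.5, 0.5]]
checker = [[0.0, 1.0], [1.0, 0.0]]
bright = [[0.9, 0.9], [0.9, 0.9]]
print(classify_frames([flat, flat, checker, bright]))  # ['simple', 'simple', 'complex', 'complex']
```

A learned pre-classifier could replace the threshold rule without changing the interface, since the later steps only need a per-frame label.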

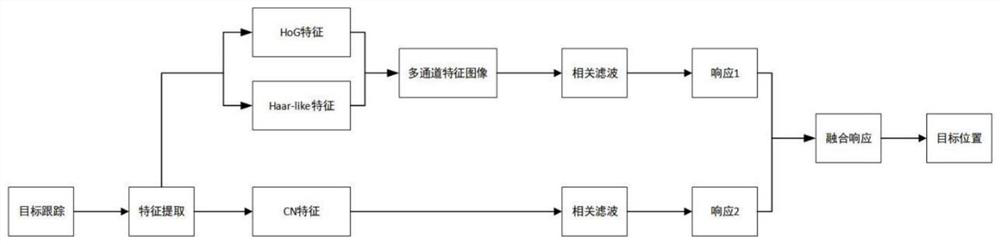

[0047] Step 2, for simple frames, extract a single feature; for complex frames, extract HOG, Haar, and CN features and perform multi-directional feature fusion;
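One possible reading of Step 2 is early fusion of per-frame descriptors. The sketch below assumes the HOG, Haar, and CN vectors have already been extracted, L2-normalizes each one, and concatenates them with weights. The equal default weights, the choice of HOG as the single feature for simple frames, and the names `fuse_features` and `weights` are all assumptions; the patent's "multi-directional" fusion may work differently.

```python
import math
from typing import Dict, List, Optional

# Fixed order so fused vectors from different frames line up element by element.
FUSED_ORDER = ("hog", "haar", "cn")


def l2_normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit L2 norm; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def fuse_features(features: Dict[str, List[float]],
                  frame_label: str,
                  weights: Optional[Dict[str, float]] = None,
                  single: str = "hog") -> List[float]:
    """Return the descriptor for one frame.

    Simple frames use one normalized feature (`single`, assumed to be HOG here).
    Complex frames concatenate the weighted, normalized HOG, Haar, and CN vectors.
    """
    if frame_label == "simple":
        return l2_normalize(features[single])
    if weights is None:
        weights = {name: 1.0 for name in FUSED_ORDER}  # placeholder equal weights
    fused: List[float] = []
    for name in FUSED_ORDER:
        # Normalizing first keeps a long descriptor from dominating the shorter ones.
        fused.extend(weights[name] * x for x in l2_normalize(features[name]))
    return fused


feats = {"hog": [3.0, 4.0], "haar": [0.0, 2.0], "cn": [1.0, 0.0, 0.0]}
print(fuse_features(feats, "simple"))   # [0.6, 0.8]
print(fuse_features(feats, "complex"))  # [0.6, 0.8, 0.0, 1.0, 1.0, 0.0, 0.0]
```

Raising one entry of `weights` lets a tracker lean on, for example, color cues in frames where gradients are unreliable, which is one way an environment-adaptive scheme could tune the fusion.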

[0048] Step 3, using a sample screening mechanism based on motion prediction, fit the target's motion trajectory with the least squares method and use the fitted trajectory as a parameter in the weight calculation to re-evalua...
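A sketch of the motion-prediction idea in Step 3, assuming a constant-velocity model: x(t) and y(t) are each fitted with an ordinary least-squares line over the recent track, the next position is extrapolated, and candidate samples are re-weighted by a Gaussian of their distance to the prediction. The linear model, the Gaussian weighting, `sigma`, and all function names are assumptions for illustration, since the source text is cut off before the weight formula.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def fit_line(ts: Sequence[float], vs: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit v ~ a*t + b; needs at least two distinct t values."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_v = sum(vs) / n
    s_tt = sum((t - mean_t) ** 2 for t in ts)
    s_tv = sum((t - mean_t) * (v - mean_v) for t, v in zip(ts, vs))
    slope = s_tv / s_tt
    return slope, mean_v - slope * mean_t


def predict_next(track: List[Point]) -> Point:
    """Extrapolate the next position from past positions observed at t = 0, 1, ..., n-1."""
    ts = list(range(len(track)))
    ax, bx = fit_line(ts, [p[0] for p in track])
    ay, by = fit_line(ts, [p[1] for p in track])
    t_next = len(track)
    return ax * t_next + bx, ay * t_next + by


def motion_weights(candidates: List[Point], predicted: Point, sigma: float = 5.0) -> List[float]:
    """Gaussian weight per candidate by distance to the prediction, normalized to sum to 1."""
    px, py = predicted
    raw = [math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2 * sigma ** 2)) for x, y in candidates]
    total = sum(raw)
    if total == 0.0:
        # Every candidate is extremely far away: fall back to uniform weights.
        return [1.0 / len(candidates)] * len(candidates)
    return [w / total for w in raw]


track = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
pred = predict_next(track)
print(pred)  # (4.0, 8.0)
print(motion_weights([(4.0, 8.0), (10.0, 8.0)], pred))
```

Candidates near the extrapolated position keep most of the weight, so samples that contradict the target's recent motion are screened out before appearance scoring.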
