Abstractive text summarization method based on graph knowledge and topic awareness

A topic-aware abstractive summarization technology in the field of natural language processing. It addresses problems such as fragmentation and the difficulty of capturing semantic-level relationships, and it achieves good robustness and adaptability.

Embodiment Construction

[0018] The present invention will be further described below in conjunction with drawings and embodiments.

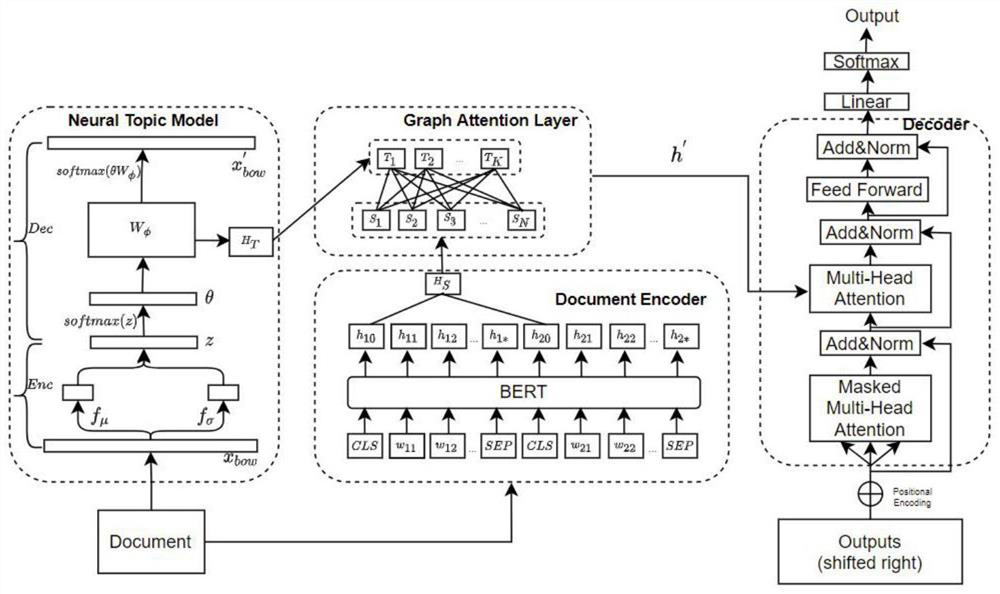

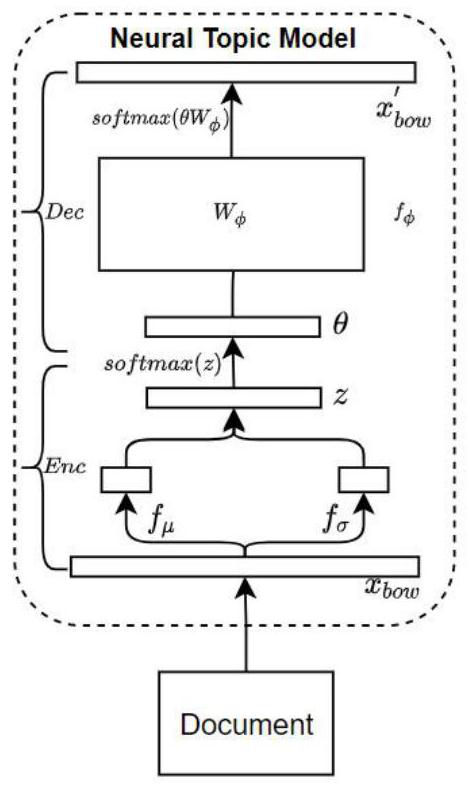

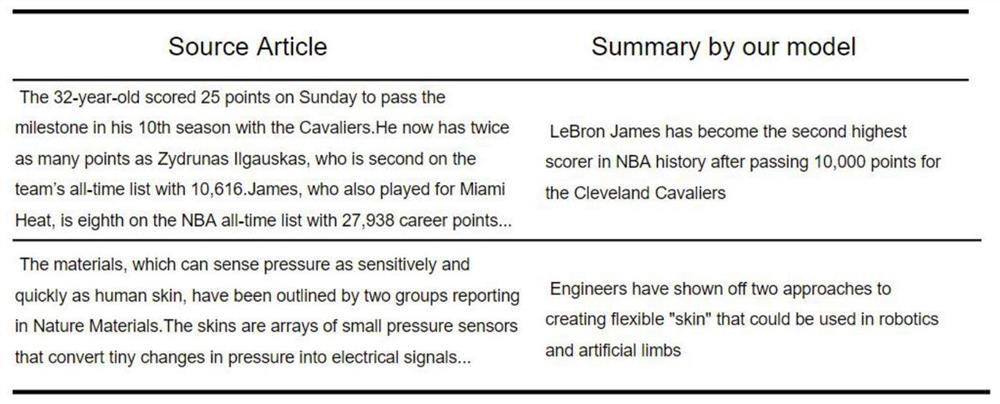

[0019] The present invention proposes an abstractive text summarization method based on graph knowledge and topic awareness. First, we encode the input documents with the pretrained language model BERT to learn contextual sentence representations, and we discover latent topics with a neural topic model (NTM). Second, we construct a heterogeneous document graph consisting of sentence nodes and topic nodes, and we update its node representations with an improved graph attention network (GAT). Third, we take the updated sentence-node representations and compute the latent semantics from them. Finally, the latent semantics are fed into a Transformer-based decoder, which generates the final summary. We conduct extensive experiments on two real-world datasets, CNN/DailyMail and XSum.
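The graph-update step in [0019] can be illustrated with a minimal sketch. It assumes that small hand-written vectors stand in for BERT sentence representations and NTM topic embeddings, that a sentence is linked to a topic when its topic proportion exceeds a hypothetical threshold of 0.1, and that one standard single-head GAT layer does the update. The invention's "improved" GAT is not described in this section, so the sketch does not reproduce it. The names `build_hetero_graph` and `gat_layer` are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (out_dim x in_dim) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def leaky_relu(z: float, slope: float = 0.2) -> float:
    return z if z > 0 else slope * z


def build_hetero_graph(topic_dist: List[Vector], threshold: float = 0.1) -> Dict[str, List[str]]:
    """Hypothetical construction: link sentence s_i to topic t_k when the
    sentence's topic proportion exceeds `threshold`. Every node gets a self-loop."""
    n_sent, n_topic = len(topic_dist), len(topic_dist[0])
    adj: Dict[str, List[str]] = {f"s{i}": [f"s{i}"] for i in range(n_sent)}
    adj.update({f"t{k}": [f"t{k}"] for k in range(n_topic)})
    for i, dist in enumerate(topic_dist):
        for k, p in enumerate(dist):
            if p > threshold:
                adj[f"s{i}"].append(f"t{k}")  # sentence -> topic edge
                adj[f"t{k}"].append(f"s{i}")  # topic -> sentence edge
    return adj


def gat_layer(h: Dict[str, Vector], adj: Dict[str, List[str]],
              w: Matrix, a: Vector) -> Dict[str, Vector]:
    """One standard single-head GAT update (not the patent's improved variant):
    alpha_ij = softmax_j(LeakyReLU(a^T [W h_i || W h_j])), h_i' = sum_j alpha_ij W h_j."""
    wh = {node: matvec(w, vec) for node, vec in h.items()}
    out: Dict[str, Vector] = {}
    for i, neighbors in adj.items():
        # List concatenation wh[i] + wh[j] forms [W h_i || W h_j].
        scores = [leaky_relu(sum(ak * v for ak, v in zip(a, wh[i] + wh[j])))
                  for j in neighbors]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        dim = len(wh[i])
        out[i] = [sum(alpha * wh[j][d] for alpha, j in zip(alphas, neighbors))
                  for d in range(dim)]
    return out


# Toy inputs: 2-d stand-ins for BERT sentence vectors and NTM topic vectors.
sent_vecs = {"s0": [1.0, 0.0], "s1": [0.0, 1.0]}
topic_vecs = {"t0": [0.5, 0.5]}
topic_dist = [[0.9], [0.05]]  # s0 is strongly on topic t0; s1 barely is
adj = build_hetero_graph(topic_dist)
h = {**sent_vecs, **topic_vecs}
identity = [[1.0, 0.0], [0.0, 1.0]]
# With a zero attention vector, attention is uniform over each neighborhood.
updated = gat_layer(h, adj, w=identity, a=[0.0, 0.0, 0.0, 0.0])
print(updated["s0"])  # [0.75, 0.25]
```

In this toy run, sentence `s0` absorbs half of topic `t0`'s vector, while `s1` stays unchanged because it has no topic edge. Topic information reaches a sentence only through the graph edges. A learned `a` would make the weights non-uniform. In the full method, the updated sentence vectors would then be used to compute the latent semantics passed to the Transformer decoder.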

[0020] A model based on BERT, a neural topic model, and a graph neural network proposed by the present ...
