Image description generation method based on external triple and abstract relationship

An image description generation technology based on external triples and abstract relations, applied in neural learning methods, computer parts, character and pattern recognition, etc. It addresses the problem of overly simple descriptions and achieves more accurate descriptions.

Embodiment Construction

[0013] The present invention will be further described below in conjunction with the accompanying drawings.

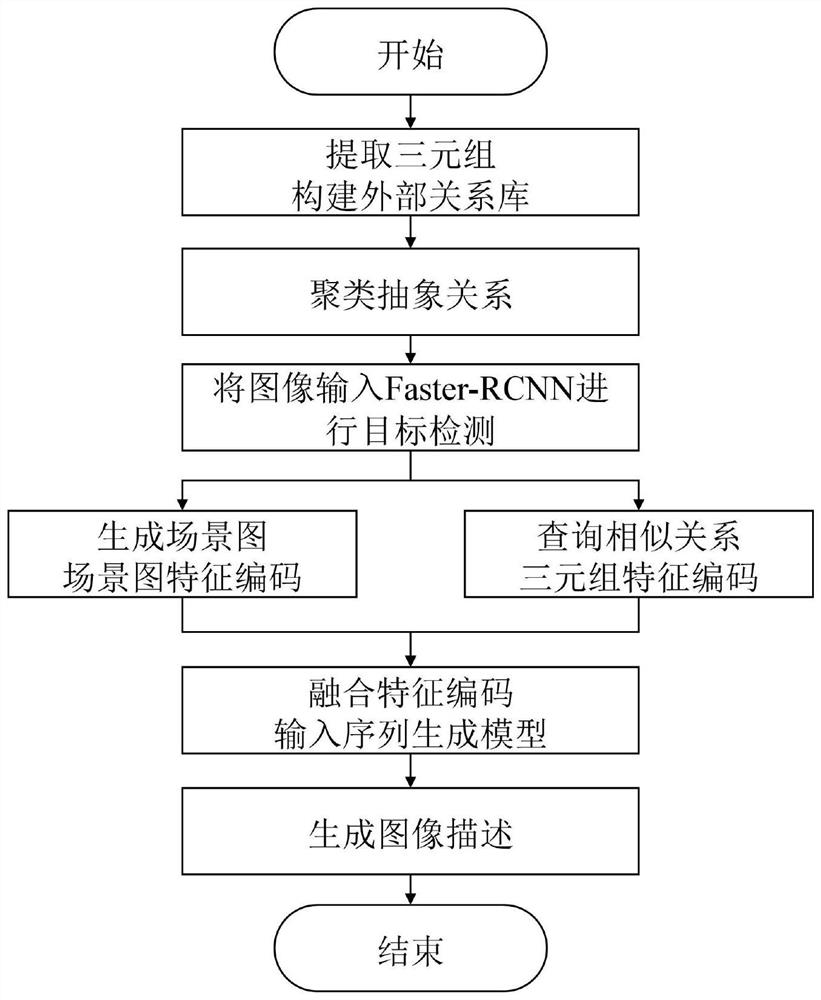

[0014] Figures 1 and 5 show a flow diagram of an overall embodiment of the present invention.

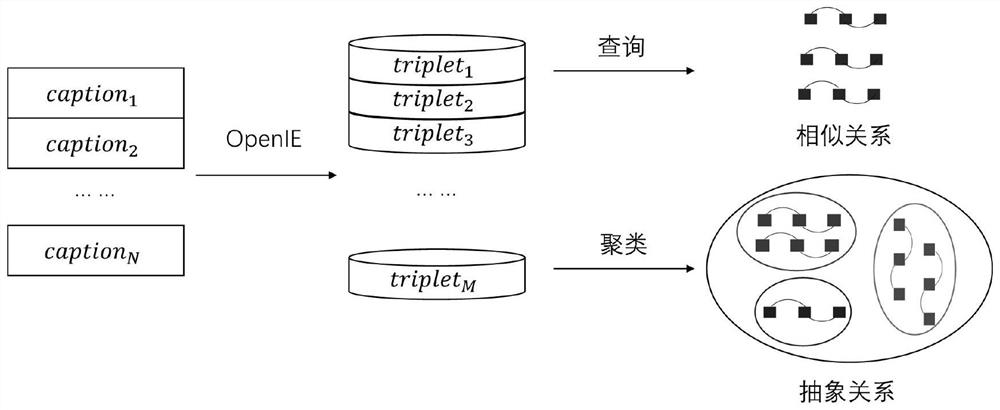

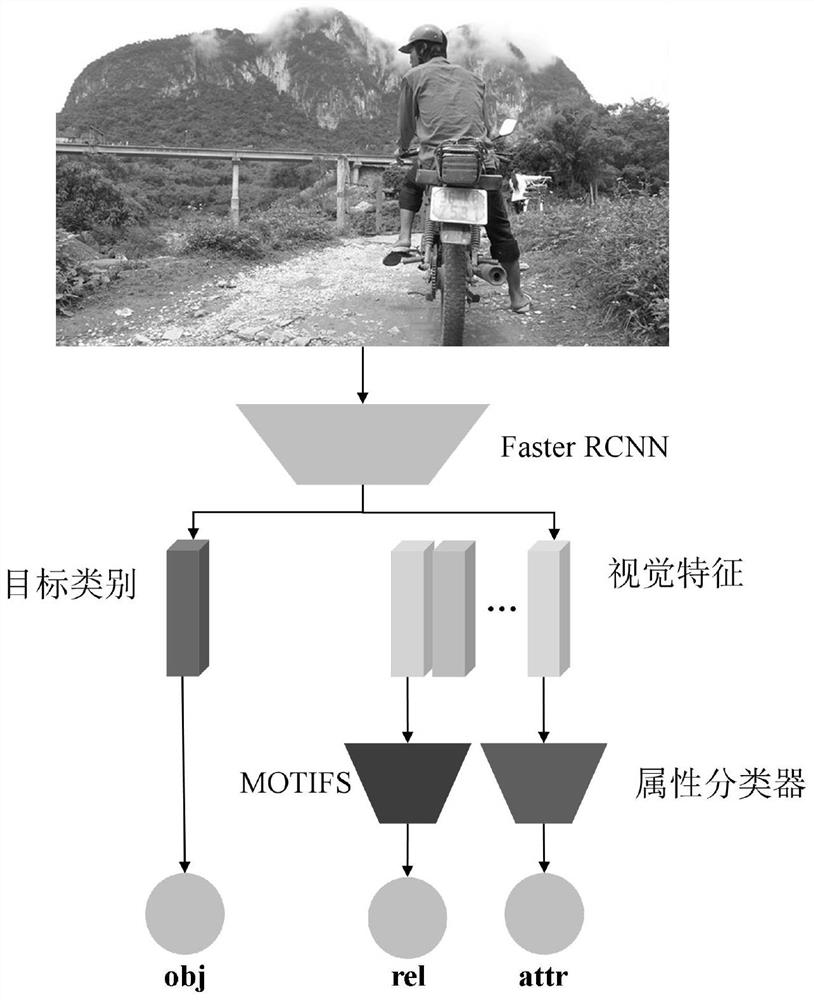

[0015] To solve these problems, the present invention constructs an external relation library, retrieves similar relations and abstract relations from the library according to the image target categories, and fuses them with scene graph features. Specifically, an open-domain knowledge extraction tool is first used to extract triples from image description texts, build the external relation library, and encode the features of the triples. Triples whose relations have high text similarity are clustered into one class, which is called an abstract relation. At the same time, the model performs target detection on the image to obtain target visual features and semantic labels. According to the text similari...
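The clustering and lookup steps described in [0015] can be illustrated with a minimal sketch. It is not the patented implementation: the text does not name the similarity measure, the clustering algorithm, or the threshold, so this sketch assumes character-level similarity from Python's `difflib`, a greedy single-pass grouping, and a threshold of 0.65. The sample triples and the function names `relation_similarity`, `cluster_relations`, and `lookup_by_category` are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical triples (subject, relation, object), standing in for the output
# of an open-domain extraction tool run over image description texts.
TRIPLES = [
    ("man", "riding", "horse"),
    ("woman", "is riding", "bike"),
    ("dog", "sitting on", "sofa"),
    ("cat", "sits on", "chair"),
    ("man", "next to", "car"),
]


def relation_similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1].

    Assumption: a stand-in for the text-similarity measure, which the
    source paragraph does not specify.
    """
    return SequenceMatcher(None, a, b).ratio()


def cluster_relations(triples, threshold=0.65):
    """Greedily group triples by relation text.

    A triple joins the first cluster whose representative relation (the
    relation of the cluster's first triple) is similar enough; otherwise
    it starts a new cluster. Each cluster plays the role of one
    "abstract relation". The threshold value is an assumption.
    """
    clusters = []
    for triple in triples:
        for cluster in clusters:
            if relation_similarity(triple[1], cluster[0][1]) >= threshold:
                cluster.append(triple)
                break
        else:
            clusters.append([triple])
    return clusters


def lookup_by_category(clusters, category):
    """Return clusters containing a triple whose subject or object equals the category."""
    return [c for c in clusters if any(category in (s, o) for s, _, o in c)]


clusters = cluster_relations(TRIPLES)
for cluster in clusters:
    print([relation for _, relation, _ in cluster])
```

Calling `lookup_by_category(clusters, "man")` mimics retrieving candidate relations for a detected target label. A real system would more likely compare learned text embeddings than raw characters, since character overlap can group unrelated verbs that share a suffix.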
