A nested meta-learning method and system based on federated architecture

The method combines meta-learning and federated learning and is applied in the field of machine learning. It addresses the slow aggregation of the global model and excessive communication overhead, among other problems, and aims to improve the model's generalization and performance while keeping the approach highly flexible.


Embodiment approach

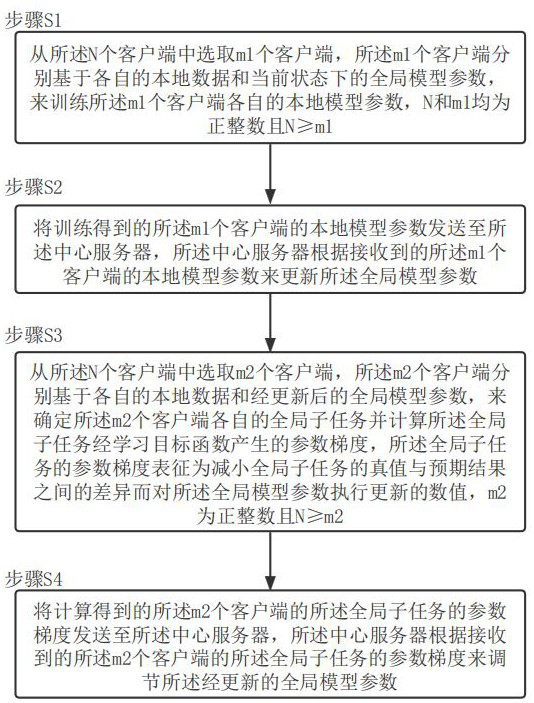

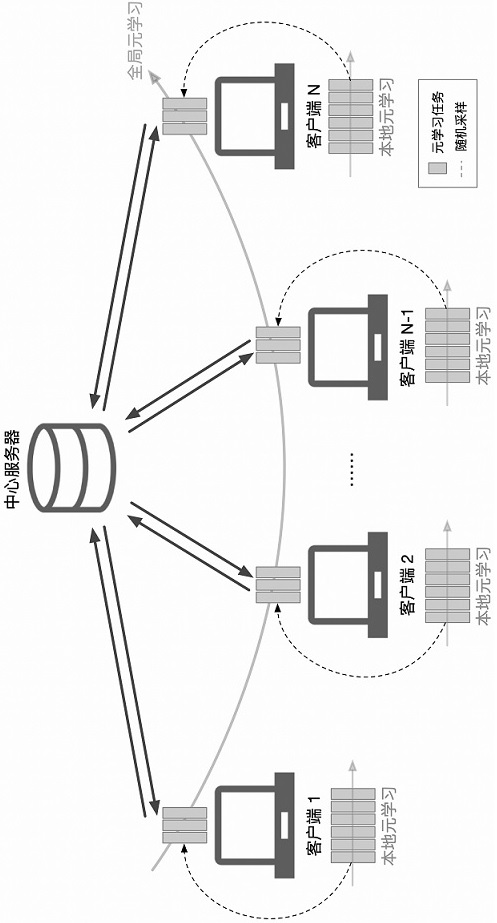

[0084] Nested Meta-Learning Algorithm Flow

[0085] Input: N, the number of users; the global meta-learning algorithm; the local meta-learning algorithm; the global learning rate; the local learning rate; the number of global training episodes; the number of local training episodes.

[0086] Output: the parameters of the global model.

[0087] for each global training episode do

[0088] # Perform local meta-learning

[0089] Select a subset of users from the set of all users U;

[0090] for each selected user u do

[0091] Assign the global model parameters to the local model of user u;

[0092] for each local training episode do

[0093] User u samples from its own data to construct a local meta-learning task;

[0094] Compute the gradient for training on the meta-learning task;

[0095] Update the local meta-learning model parameters;

[0096] end for

[0097] end for

[0098] First set the global model parameters to the average of all local model parameters;

[0099] # Perform global meta-learning

[0...
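The local phase and the averaging step in paragraphs [0087] to [0098] can be illustrated with a minimal Python sketch. It is not the patented implementation: the text does not name the local meta-learning algorithm, so a first-order MAML-style update on a toy linear model is swapped in as an assumption, and all function names, the data generator, and the hyperparameter values are hypothetical. The global meta-learning step is truncated in the text above and is deliberately left out.

```python
import random

def mse_grad(params, batch):
    """Gradient of the mean squared error for the toy model y = w * x + b."""
    w, b = params
    gw = gb = 0.0
    for x, y in batch:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    return [gw / len(batch), gb / len(batch)]

def sgd_step(params, grad, lr):
    """One plain gradient-descent step."""
    return [p - lr * g for p, g in zip(params, grad)]

def local_meta_learning(global_params, user_data, local_episodes, local_lr, rng):
    """Steps [0091]-[0096] for one user (assumed first-order MAML-style rule)."""
    params = list(global_params)                    # [0091] start from the global model
    for _ in range(local_episodes):                 # [0092]
        task = rng.sample(user_data, 8)             # [0093] task built from the user's own data
        support, query = task[:4], task[4:]
        adapted = sgd_step(params, mse_grad(params, support), local_lr)
        grad = mse_grad(adapted, query)             # [0094] first-order meta-gradient
        params = sgd_step(params, grad, local_lr)   # [0095]
    return params

def average_params(param_list):
    """[0098] element-wise mean of the local parameter vectors."""
    return [sum(vals) / len(param_list) for vals in zip(*param_list)]

def nested_training(global_params, users, global_episodes, users_per_round,
                    local_episodes, local_lr, rng):
    """Outer loop [0087]; the global meta-learning step is not shown in the text and is omitted."""
    params = list(global_params)
    for _ in range(global_episodes):
        selected = rng.sample(sorted(users), users_per_round)   # [0089]
        local_models = [
            local_meta_learning(params, users[u], local_episodes, local_lr, rng)
            for u in selected                                    # [0090]
        ]
        params = average_params(local_models)                    # [0098]
    return params

def make_users(n_users, n_points, rng):
    """Hypothetical data: each user has its own slope and a shared intercept of 1."""
    users = {}
    for u in range(n_users):
        slope = rng.uniform(1.5, 2.5)
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n_points)]
        users[u] = [(x, slope * x + 1.0) for x in xs]
    return users

def mean_loss(params, users):
    """Mean squared error of the model over every user's data."""
    w, b = params
    errs = [((w * x + b) - y) ** 2 for data in users.values() for x, y in data]
    return sum(errs) / len(errs)

if __name__ == "__main__":
    rng = random.Random(0)
    users = make_users(6, 20, rng)
    trained = nested_training([0.0, 0.0], users, 20, 3, 5, 0.05, rng)
    print("loss before:", mean_loss([0.0, 0.0], users))
    print("loss after: ", mean_loss(trained, users))
```

In this sketch only model parameters leave a user: `nested_training` never reads another user's data directly, which mirrors the federated setting. Any global meta-learning step would run on the averaged parameters returned by `average_params`.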
