Image classification method, electronic equipment, storage medium and program product

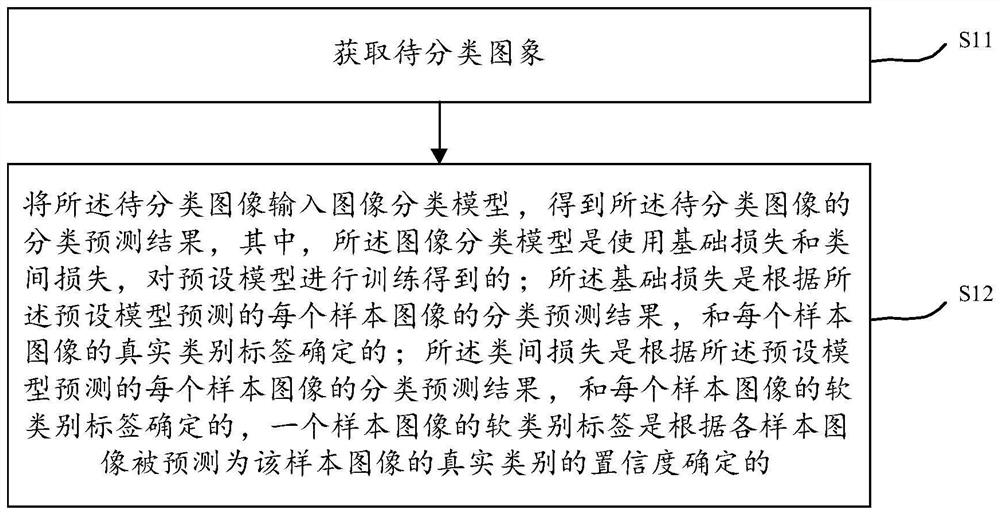

An image classification method, applied in the field of image processing, addresses the following problems: the number of sample images is unbalanced across categories, the image classification model therefore cannot learn to distinguish the categories well, and the accuracy of the model suffers.



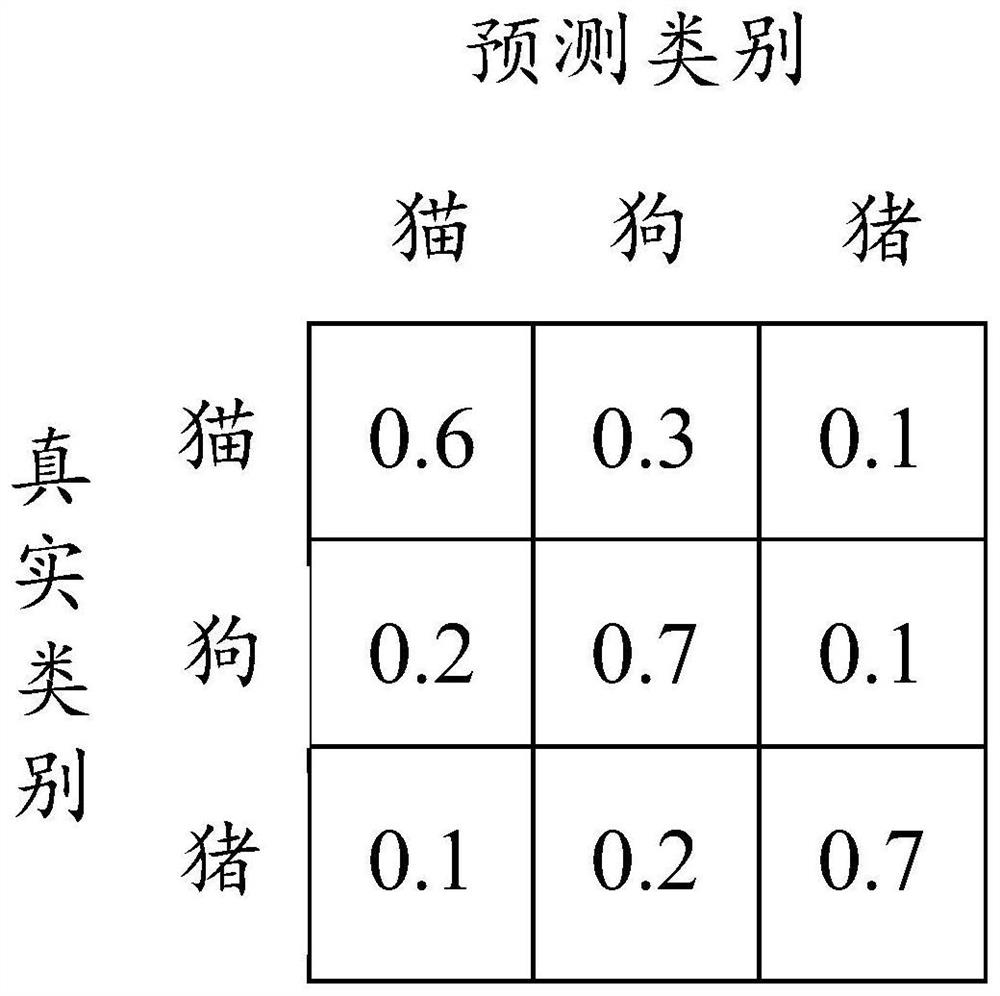


Embodiment Construction

[0060] To make the above objects, features, and advantages of the present application clearer and easier to understand, the application is described in further detail below with reference to the accompanying drawings and specific embodiments.

[0061] In recent years, artificial intelligence-based technologies such as computer vision, deep learning, machine learning, image processing, and image recognition have made important progress. Artificial intelligence (AI) is an emerging field of science and technology that researches and develops theories, methods, technologies, and application systems for simulating and extending human intelligence. It is a comprehensive discipline that involves many technologies, such as chips, big data, cloud computing, the Internet of Things, distributed storage, deep learning, machine learning, and neural networks. As an important branch of artificial intelligence, computer vision is specifically t...
