A UAV multi-stage visual precision landing method and device

A multi-stage UAV technology, applied in 3D position/course control, vehicle position/route/height control, instruments, etc. It addresses the problems that accurate, safe landing cannot be achieved from a large landing height, that detection and positioning of the UAV landing target point can fail, and that such failures create safety hazards during landing. It achieves the effects of low cost, convenient operation, and strong generalization ability.


Embodiment Construction

[0029] The following description of at least one exemplary embodiment is merely illustrative in nature and is in no way intended to limit the invention, its application, or uses. Based on the embodiments of the present invention, all other embodiments obtained by those of ordinary skill in the art without creative efforts shall fall within the protection scope of the present invention.

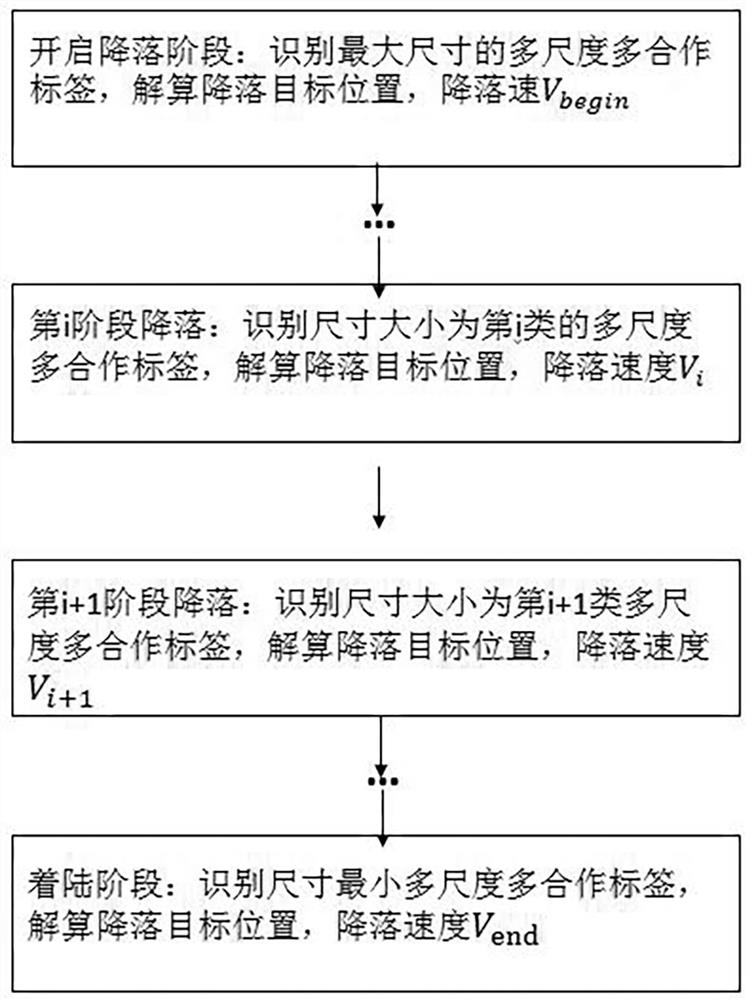

[0030] A multi-stage visual precision landing method for an unmanned aerial vehicle, comprising the following steps:

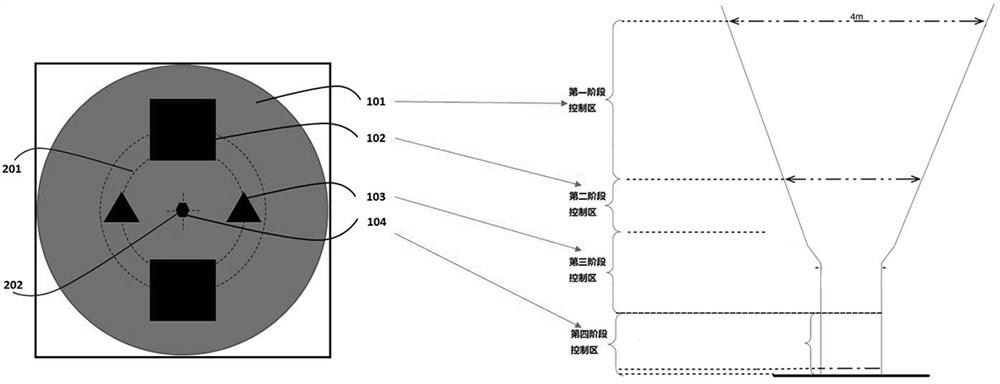

[0031] Step S1: Obtain the intrinsic parameters of the airborne bird's-eye-view camera and the actual landing height requirement of the UAV, and construct a ground visual landing marker composed of multi-scale, multi-cooperative tags;
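The relationship between the camera parameters, the landing height, and the physical tag size in Step S1 can be illustrated with a minimal sketch. It assumes an ideal pinhole camera looking straight down at a flat tag, with the focal length expressed in pixels; the function names and the minimum pixel threshold are hypothetical and are not taken from the patent text, which does not state a sizing formula.

```python
def projected_size_px(tag_size_m: float, height_m: float, focal_px: float) -> float:
    """Apparent side length (pixels) of a flat tag seen from directly above.

    Assumption: ideal pinhole model, image plane parallel to the ground,
    so image size = focal length * object size / distance.
    """
    if height_m <= 0:
        raise ValueError("height_m must be positive")
    return focal_px * tag_size_m / height_m


def min_tag_size_m(max_height_m: float, focal_px: float, min_pixels: float) -> float:
    """Smallest physical tag side (metres) that still spans min_pixels
    in the image at max_height_m (hypothetical detection threshold)."""
    if focal_px <= 0:
        raise ValueError("focal_px must be positive")
    return min_pixels * max_height_m / focal_px


if __name__ == "__main__":
    # Illustrative numbers only: 800 px focal length, 10 m landing height,
    # and a detector assumed to need at least 40 px per tag side.
    size = min_tag_size_m(max_height_m=10.0, focal_px=800.0, min_pixels=40.0)
    print(f"minimum tag side: {size} m")
    print(f"apparent size at 10 m: {projected_size_px(size, 10.0, 800.0)} px")
```

Under these assumptions, a larger landing height calls for a larger outer tag, while smaller tags remain fully inside the field of view as the UAV descends, which is one plausible reason for combining several scales in one landing marker.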

[0032] The multi-scale, multi-cooperative tags comprise multi-scale tags and multi-cooperative tags. The multi-cooperative tags are tags of different shapes, and each type of multi-cooperative tag corresponds to one landing stage; the multi-scale...
