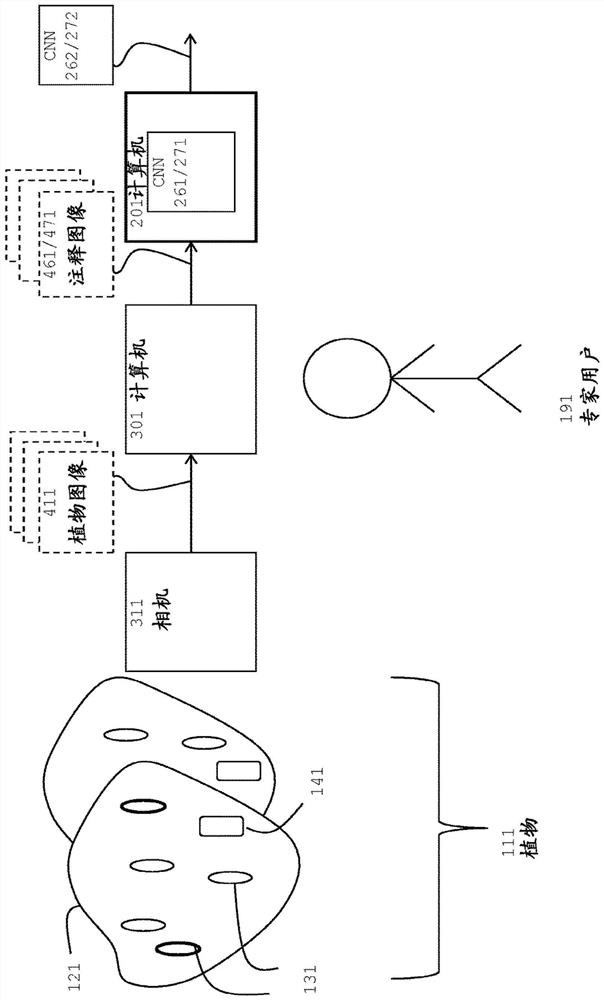

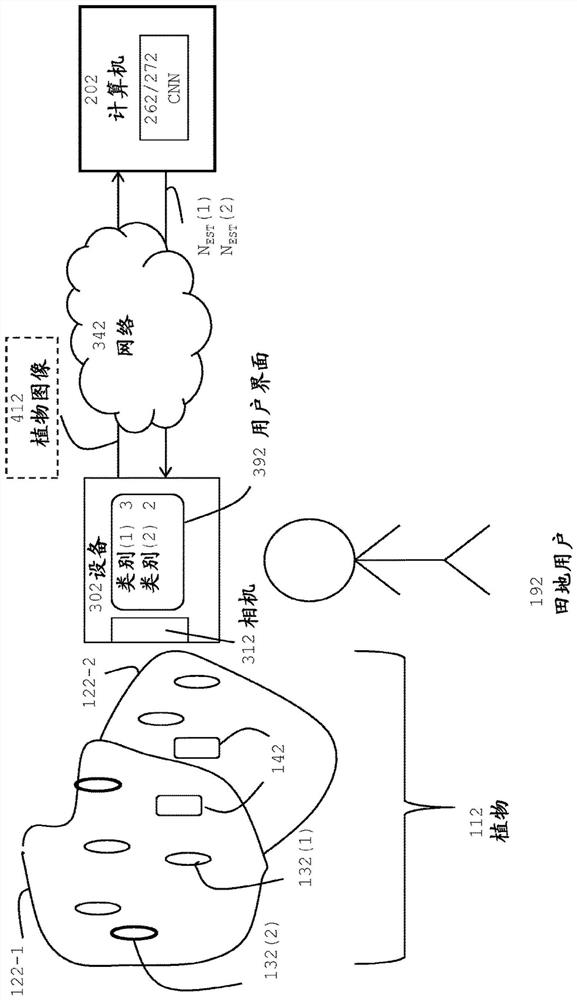

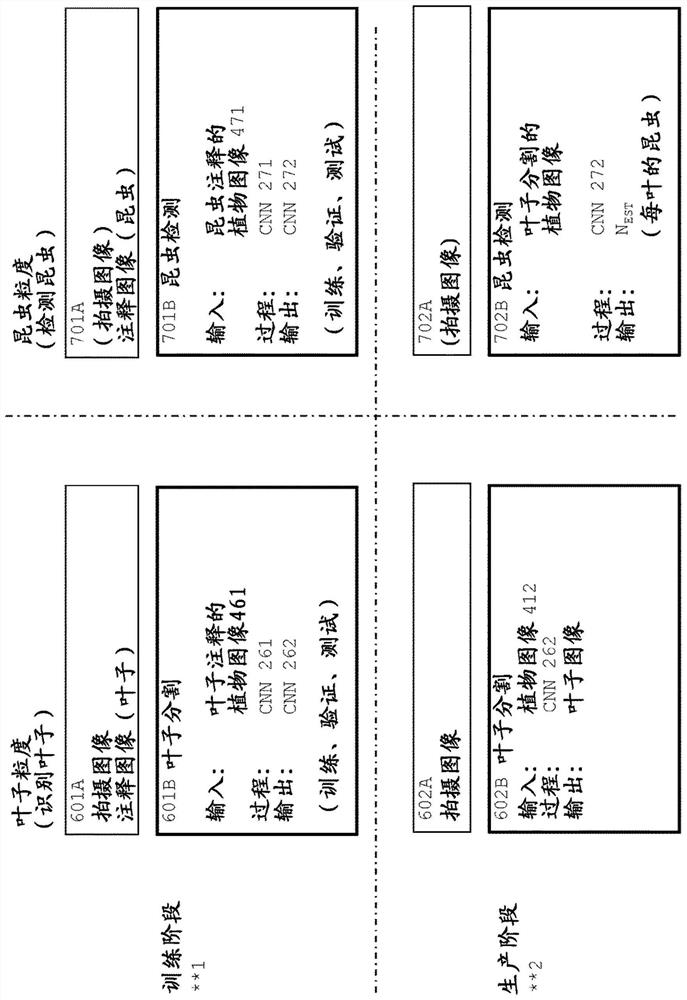

Quantifying objects on plant by estimating number of objects on plant portion, such as leaf, through convolutional neural network providing density map

A technology involving convolutional neural networks and density maps, applied in the field of computer image processing.
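In density-map counting approaches generally, the estimated number of objects in an image region is the sum (the discrete integral) of the density map over that region. The sketch below illustrates only that counting step. It does not show a convolutional neural network. Instead, it builds a synthetic density map by placing one normalized Gaussian kernel per object location, a common way of constructing training targets in the density-map counting literature. The function names, the `sigma` value, and the Gaussian construction are illustrative assumptions. They are not taken from this patent.

```python
import math
from typing import List, Tuple

# A density map is a 2-D grid of non-negative floats (rows x columns).
DensityMap = List[List[float]]


def gaussian_density_map(height: int, width: int,
                         points: List[Tuple[int, int]],
                         sigma: float = 1.5) -> DensityMap:
    """Hypothetical helper: place one Gaussian kernel per (row, col) point.

    Each kernel is normalized over the grid so that it contributes exactly
    1.0 to the map's total, even when it is truncated at the image border.
    """
    dmap = [[0.0] * width for _ in range(height)]
    for (py, px) in points:
        kernel = [[math.exp(-((y - py) ** 2 + (x - px) ** 2) / (2 * sigma ** 2))
                   for x in range(width)]
                  for y in range(height)]
        total = sum(sum(row) for row in kernel)
        for y in range(height):
            for x in range(width):
                dmap[y][x] += kernel[y][x] / total
    return dmap


def estimate_count(dmap: DensityMap) -> float:
    """Estimated object count = sum of all density values in the map."""
    return sum(sum(row) for row in dmap)


if __name__ == "__main__":
    # Three hypothetical objects (e.g. insects) on a 20x20 leaf patch.
    leaf_map = gaussian_density_map(20, 20, [(2, 3), (10, 12), (15, 4)])
    print(round(estimate_count(leaf_map), 6))
```

In a CNN-based system, a trained network would output the density map directly for a new image. Only the summation in `estimate_count` would then be applied to that prediction, so the estimate can be fractional rather than an integer.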


Embodiment Construction

[0049] Writing conventions

[0050] The specification begins by explaining some writing conventions.

[0051] The term "image" refers to the data structure of a digital photograph (i.e., a data structure using a file format such as JPEG, TIFF, BMP, or RAW). The phrase "taking an image" denotes the act of pointing a camera at an object, such as a plant or a part of a plant, and having the camera store the image.

[0052] This specification uses the term "show" when explaining the content (i.e., the semantics) of an image, for example in a phrase such as "the image shows a plant". However, a human user does not need to view the image. Where a human user does view an image, this computer-user interaction is expressed by the term "display", as in "the computer displays the plant image to an expert", where the expert user looks at a screen to see the plant in the image.

[0053] The term "annotation" represents metadata received by a computer when an expert user views a display of images and interacts with the computer. The term "ann...
