Temporally stable neural rendering method for hair point clouds

A temporally stable point cloud rendering technology, applied in the fields of neural rendering and computer graphics. It addresses slow rendering speed, high computational overhead, and poor shading quality. Its effects are improved temporal stability and reduced rendering time, while maintaining rendering quality and speed.


Embodiment Construction

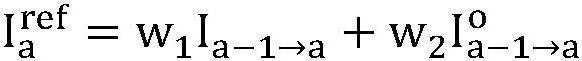

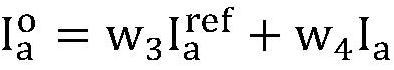

[0026] The temporally stable hair point cloud neural rendering method of the present invention comprises the following steps:

[0027] Step 1: Use a ray tracing algorithm to generate a hair rendering dataset containing both local illumination and global illumination results. Then build a baseline neural rendering network following the framework of point cloud-based neural rendering: project the point cloud to obtain feature maps, use a U-Net to extract features from the feature maps and render them, and constrain the network's rendered output with a perceptual loss function.
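The projection step ("point cloud projection to obtain feature maps") can be illustrated with a minimal sketch. It assumes each point carries a learnable feature vector, that the points are already expressed in camera coordinates, and that a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` is used; the function name `project_point_features` and the one-pixel, nearest-depth splatting rule are illustrative assumptions, not details given in the source.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_point_features(
    points_cam: Sequence[Vec3],
    features: Sequence[Sequence[float]],
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Tuple[List[List[List[float]]], List[List[float]]]:
    """Hypothetical sketch: splat per-point features into an H x W x C feature map.

    points_cam: points in camera coordinates (z > 0 means in front of the camera).
    Returns (feature_map, depth_buffer). Pixels hit by no point keep zero
    features and infinite depth.
    """
    channels = len(features[0]) if features else 0
    # Independent per-pixel lists so writes do not alias each other.
    feature_map = [[[0.0] * channels for _ in range(width)] for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for (x, y, z), feat in zip(points_cam, features):
        if z <= 0.0:
            continue  # point is behind the camera
        # Pinhole projection to the nearest pixel.
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z  # z-buffer test: keep only the nearest point
            feature_map[v][u] = list(feat)
    return feature_map, depth


if __name__ == "__main__":
    pts = [(0.0, 0.0, 2.0), (0.0, 0.0, 1.0), (0.5, 0.0, 1.0)]
    feats = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    fmap, zbuf = project_point_features(pts, feats, 4.0, 4.0, 2.0, 2.0, 5, 5)
    print(fmap[2][2], zbuf[2][2])
```

In this sketch the resulting H x W x C map is what a U-Net would consume. Point-based neural renderers often rasterize at several resolutions to fill holes between sparse points, but the source does not say whether this method does so.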

[0028] This step is the technical basis of the present invention and is divided into the following sub-steps.

[0029] 1) Use Blender to build hair models, each composed of hundreds of thousands of strands. For each model, generate local illumination and global illumination rendering results from 540 cameras surrounding the model, with corresponding transparency masks. The resul...
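The source does not say how the 540 surrounding cameras are arranged. One plausible arrangement, shown here as a hedged sketch, places the camera centers roughly uniformly on a sphere around the model using a golden-angle (Fibonacci) spiral. The function name `fibonacci_sphere_cameras`, the radius, and the full-sphere coverage are assumptions; a real dataset might restrict the elevation range.

```python
import math
from typing import List, Tuple


def fibonacci_sphere_cameras(
    n_views: int = 540, radius: float = 3.0
) -> List[Tuple[float, float, float]]:
    """Hypothetical sketch: n_views camera centers spread over a sphere.

    The hair model is assumed to sit at the origin and every camera is assumed
    to look at it. Z is treated as the up axis, matching Blender's convention.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    cams = []
    for i in range(n_views):
        z = 1.0 - 2.0 * (i + 0.5) / n_views  # heights evenly spaced in (-1, 1)
        ring = math.sqrt(1.0 - z * z)        # radius of the horizontal ring at height z
        theta = golden_angle * i             # rotate each sample by the golden angle
        cams.append((radius * ring * math.cos(theta),
                     radius * ring * math.sin(theta),
                     radius * z))
    return cams


if __name__ == "__main__":
    positions = fibonacci_sphere_cameras()
    print(len(positions), positions[0])
```

Each returned position would then receive a camera aimed at the model before rendering the local and global illumination passes.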
