Automatic driving lane changing scene classification method and recognition method based on clustering

A scene classification and automatic driving technology, applied in character and pattern recognition, instrumentation, computing, and related fields. It addresses problems such as the labor intensity of manual scene classification and the difficulty of classifying scenes automatically, and it avoids classification confusion, saves computing time and storage space, and covers a wide range of scenes.
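The title states that lane-change scenes are classified by clustering, but this excerpt does not name the clustering algorithm. As a hedged illustration only, the sketch below uses plain k-means (Lloyd's algorithm) with deterministic farthest-first seeding, assuming each scene has already been reduced to a numeric feature vector. The names `kmeans`, `_farthest_first`, and `max_iters` are hypothetical and are not taken from the patent.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def _dist2(a: Sequence[float], b: Sequence[float]) -> float:
    # Squared Euclidean distance between two scene feature vectors.
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _mean(points: List[Vector]) -> Vector:
    # Component-wise mean of a non-empty list of vectors.
    n = len(points)
    return tuple(sum(col) / n for col in zip(*points))


def _farthest_first(points: List[Vector], k: int) -> List[Vector]:
    # Deterministic seeding: start at the first scene, then keep adding the
    # scene farthest from every centroid chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(_dist2(p, c) for c in centroids)))
    return centroids


def kmeans(points: List[Vector], k: int, max_iters: int = 100) -> Tuple[List[int], List[Vector]]:
    """Lloyd's k-means (a stand-in technique): returns (label per scene, centroids)."""
    if not 0 < k <= len(points):
        raise ValueError("k must satisfy 0 < k <= len(points)")
    centroids = _farthest_first(points, k)
    labels: List[int] = []
    for _ in range(max_iters):
        # Assignment step: each scene joins its nearest centroid.
        new_labels = [min(range(k), key=lambda j: _dist2(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members
        # (a cluster that lost all members keeps its previous centroid).
        centroids = [
            _mean([p for p, lab in zip(points, labels) if lab == j]) if j in labels else centroids[j]
            for j in range(k)
        ]
    return labels, centroids


# Toy 2-D "scene" vectors forming two well-separated groups.
scenes = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
labels, _ = kmeans(scenes, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Each resulting cluster would correspond to one scene class. In practice, features measured on different scales (metres, seconds, metres per second) are normally standardised before clustering, and the number of clusters `k` has to be chosen separately; the sketch omits both steps.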


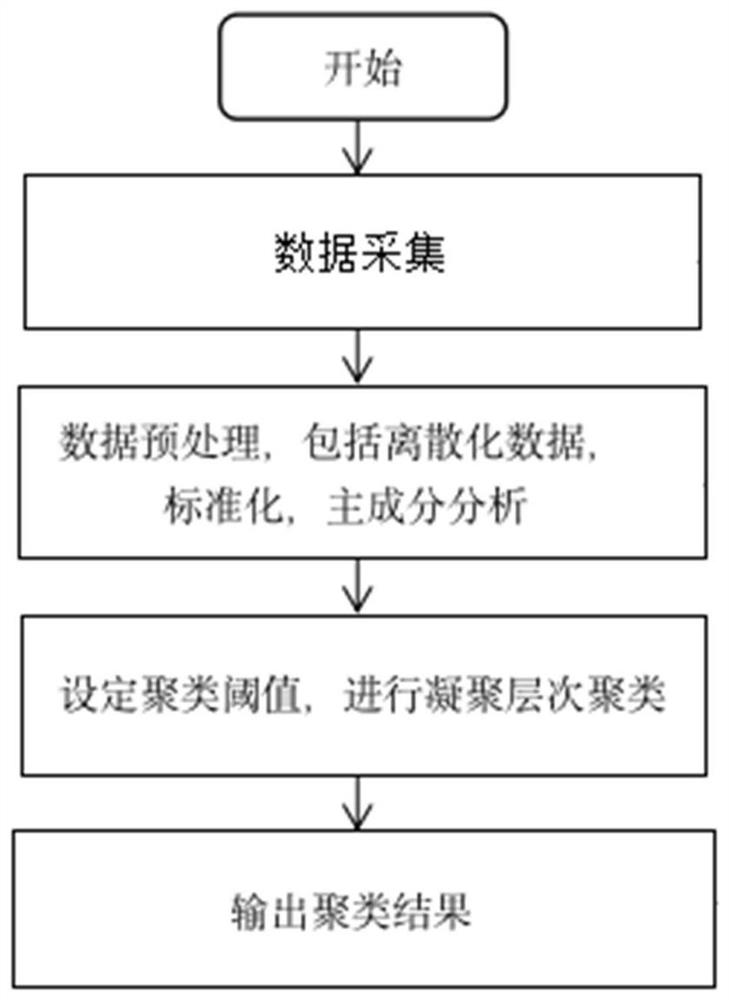

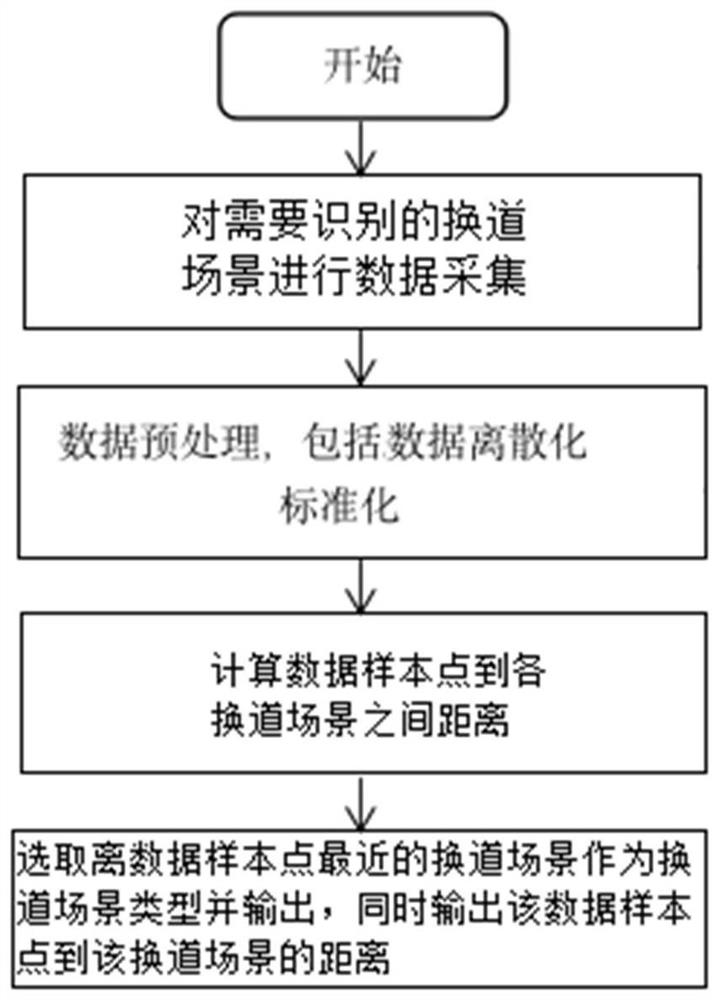


Embodiment Construction

[0049] The present invention will be further described below with reference to the accompanying drawings and embodiments.

[0050] The factors acting on an automatic driving scene take into account relevant information such as driving capability, functional characteristics, the physical environment, the behavior of traffic participants, and evaluation criteria. Scenes are divided into static scenes and dynamic scenes. Static scenes usually include road facilities, traffic accessories, and the surrounding environment; dynamic scenes include traffic management and control, motor vehicles, non-motor vehicles, and pedestrians. In the present invention, multiple parameters are usually needed to describe a scene object accurately. The parameters considered in the data description of a scene include: the geometric structure and topology of the driving road and the interaction data with other traffic participants; the relative positions and relative motion trends of traffic participants such as surround...

