Feature point matching and screening method for adaptive region motion statistics

A feature point matching and screening method, applied in the field of computer vision, that addresses the low accuracy of existing feature point match screening methods and yields screening that is accurate and convenient to compute.

Examples

Embodiment 1

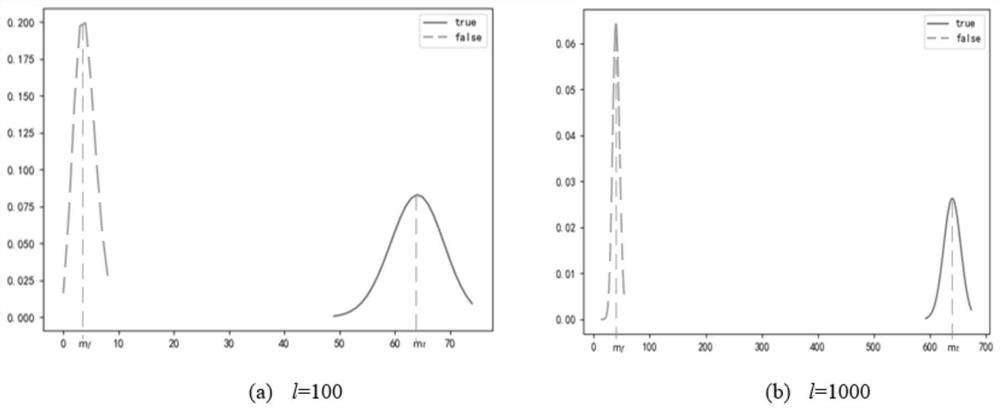

[0054] This embodiment provides a feature point matching and screening method based on adaptive region motion statistics, including the following steps:

[0055] Step 1: Based on the pixel gray-value relationships in the images in which the matched feature points lie, use the watershed algorithm to divide each image into several regions;

[0056] Step 2: For the regions obtained in Step 1, apply the maximally stable extremal region (MSER) constraint to select the regions whose area changes little as the pixel grayscale threshold changes;

[0057] Step 3: Remove the overlapping parts of the maximally stable extremal regions, and label each region with a serial number;

[0058] Step 4: Count the number of feature point matches in each region and the region correspondences they imply;

[0059] Step 5: According to the region motion statistics constraint, select the correct region correspondences, and retain the feature point matches that fall within them.
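Steps 4 and 5 can be illustrated with a minimal Python sketch. It assumes that Steps 1 to 3 have already produced, for each image, a label map giving the region serial number of every pixel (0 meaning "no region"). The function name `screen_matches_by_region`, the `min_support` threshold, and the rule "keep the best-supported partner region if it has enough matches" are hypothetical stand-ins for the region motion statistics constraint, which the text here does not spell out.

```python
from collections import Counter


def screen_matches_by_region(matches, labels_a, labels_b, min_support=3):
    """Keep matches whose region pair is supported by enough matches.

    matches     : list of ((xa, ya), (xb, yb)) integer pixel coordinates
    labels_a/_b : 2D lists, region serial number per pixel (0 = no region)
    min_support : hypothetical threshold, not taken from the source
    """
    # Step 4: count the matches falling in each (region in A, region in B) pair.
    pair_of = []
    counts = Counter()
    for (xa, ya), (xb, yb) in matches:
        ra = labels_a[ya][xa]
        rb = labels_b[yb][xb]
        pair = (ra, rb) if ra and rb else None
        pair_of.append(pair)
        if pair is not None:
            counts[pair] += 1

    # Step 5 (assumed rule): for each region in A, accept its best-supported
    # partner region in B, provided the support reaches min_support.
    best = {}
    for (ra, rb), n in counts.items():
        if n >= min_support and n > best.get(ra, (None, 0))[1]:
            best[ra] = (rb, n)
    accepted = {(ra, rb) for ra, (rb, _) in best.items()}

    # Retain only the matches that lie in an accepted region correspondence.
    kept = [m for m, p in zip(matches, pair_of) if p in accepted]
    return kept, accepted
```

The design mirrors the intuition behind motion statistics: a correct match tends to be accompanied by other matches that map the same region to the same partner region, whereas an isolated match between unrelated regions is more likely to be wrong.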

Embodiment 2

[0061] This embodiment provides a feature point matching and screening method based on adaptive region motion statistics, including the following steps:

[0062] Step 1: Based on the pixel gray-value relationships in the images in which the matched feature points lie, use the watershed method [Vincent L, Soille P. Watersheds in digital spaces: an efficient algorithm based on immersion simulations[J]. IEEE Transactions on Pattern Analysis & Machine Intelligence, 1991, 13(06): 583-598.] to divide the image into regions under different thresholds. The specific steps are as follows:

[0063] Step 11: Initialize the data structures: a region stack, a history stack, and a boundary heap. The region stack stores the pixel information of image regions under different grayscale thresholds; the history stack records the threshold-raising process of the region stack; and the boundary heap stores the set of region boundary pixels;

[0064] A mark...
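The three data structures named in Step 11 can be sketched in Python as follows. This is only an illustration under stated assumptions: the class names `Region` and `MserState`, the tuple layouts, and the choice of a min-heap keyed on gray value for the boundary heap are hypothetical and are not taken from the source.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Region:
    level: int                                   # current gray threshold of the region
    pixels: list = field(default_factory=list)   # (x, y) pixels in the region


@dataclass
class MserState:
    # Regions under different grayscale thresholds (top = region being grown).
    region_stack: list = field(default_factory=list)
    # (level, area) records written each time the top region's threshold is raised.
    history_stack: list = field(default_factory=list)
    # Boundary pixels as (gray, x, y); assumed to be ordered by gray value.
    boundary_heap: list = field(default_factory=list)

    def push_boundary(self, gray, x, y):
        heapq.heappush(self.boundary_heap, (gray, x, y))

    def pop_boundary(self):
        # Returns the boundary pixel with the lowest gray value.
        return heapq.heappop(self.boundary_heap)

    def raise_threshold(self, new_level):
        # Record the region's area at its old level, then raise the level.
        top = self.region_stack[-1]
        self.history_stack.append((top.level, len(top.pixels)))
        top.level = new_level
```

Recording the area at each level in the history stack is what would later allow a check of how much a region's area changes as the threshold changes, which is the quantity the maximally stable extremal region constraint depends on.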
