Cache-conscious concurrency control scheme for database systems

A cache and database technology, applied in electric digital data processing, special data processing applications, and digital data information retrieval, that addresses problems such as poor update performance and poor scalability.

Embodiment Construction

[0029] I. Concurrency Control

[0030] Coherence Cache Miss

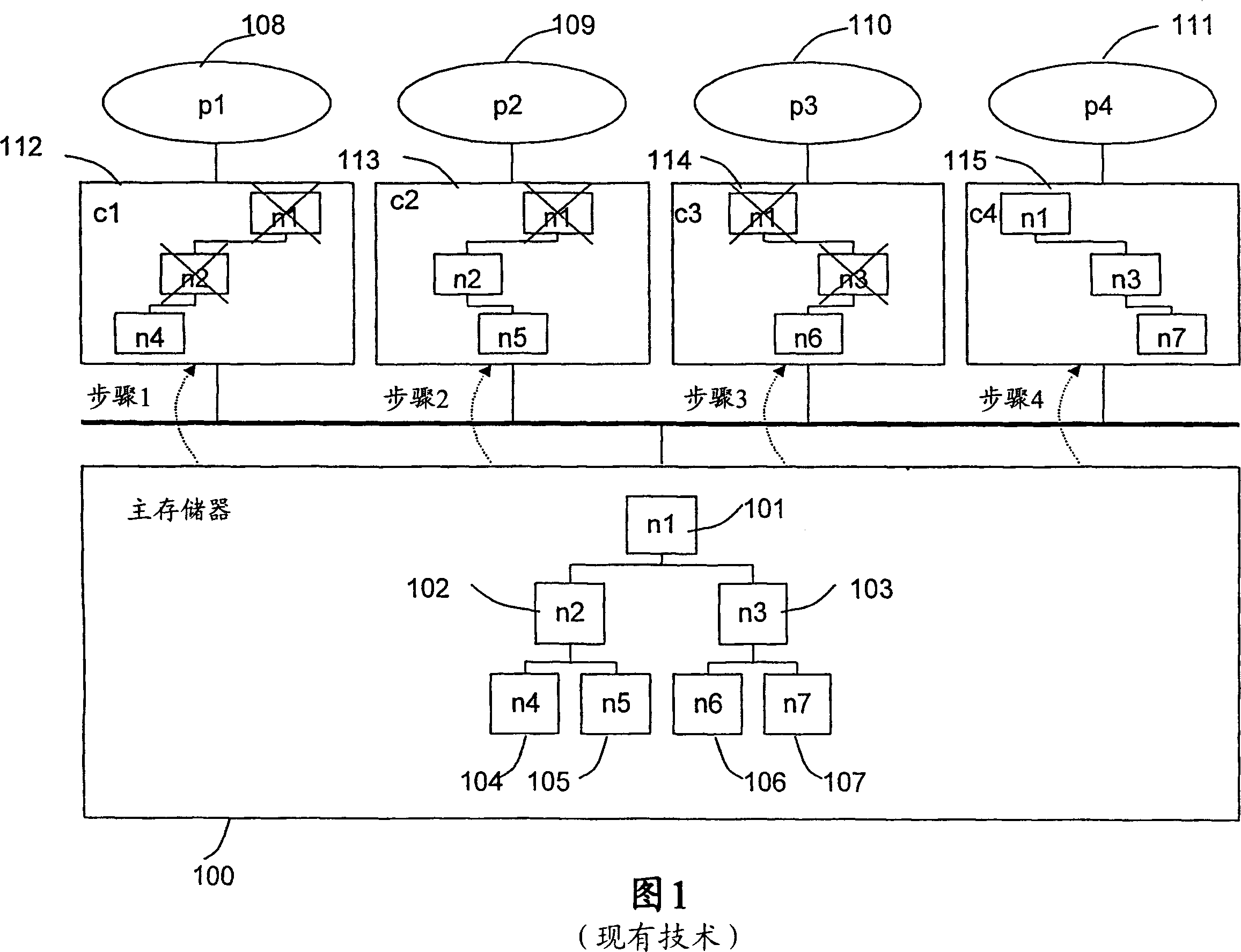

[0031] Figure 1 shows how coherence cache misses occur in a query processing system built on a conventional database management system (DBMS). A DBMS is a collection of programs that manages the structure of a database and controls access to it. Main memory 100 holds an index tree of nodes n1 to n7 (101 to 107), used to access a database on disk or in main memory. For simplicity, assume that each node corresponds to one cache block and contains a latch. As mentioned above, a latch can guarantee a transaction exclusive access to a data item. Four processors (108 to 111) access main memory 100 through their caches (112 to 115).
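
To make the interaction between per-node latches and per-processor caches concrete, here is a toy simulation sketch. It assumes, as a simplification for illustration and not as the patent's own model, unbounded private caches, a bare write-invalidate coherence protocol rather than full MESI, and a read-only lookup that acquires a node's latch, reads the node, and then releases the latch. Because the latch sits in the same cache block as the node, both latch operations are writes to that block and invalidate other processors' copies. All names (`Processor`, `CoherentMemory`, `traverse`, `run`) and the second path (n1, n2, n5) are hypothetical. A miss on a block that a cache held before is counted as a coherence miss, since in this model only another processor's write can remove it. Requires Python 3.9 or later.

```python
from dataclasses import dataclass, field


@dataclass
class Processor:
    """A processor with a private cache (unbounded capacity in this toy model)."""
    name: str
    cache: set[str] = field(default_factory=set)        # blocks currently valid here
    ever_cached: set[str] = field(default_factory=set)  # blocks loaded at least once
    cold_misses: int = 0
    coherence_misses: int = 0


class CoherentMemory:
    """Simplified write-invalidate protocol: a write by one processor
    invalidates every other processor's copy of the same block."""

    def __init__(self, processors: list[Processor]) -> None:
        self.processors = processors

    def _load(self, p: Processor, block: str) -> None:
        if block in p.cache:
            return  # cache hit
        if block in p.ever_cached:
            p.coherence_misses += 1  # lost only through another processor's write
        else:
            p.cold_misses += 1       # first-ever access to this block
        p.cache.add(block)
        p.ever_cached.add(block)

    def read(self, p: Processor, block: str) -> None:
        self._load(p, block)

    def write(self, p: Processor, block: str) -> None:
        self._load(p, block)
        for other in self.processors:
            if other is not p:
                other.cache.discard(block)  # invalidate remote copies


def traverse(mem: CoherentMemory, p: Processor, path: list[str],
             latching: bool = True) -> None:
    """Read-only lookup. Each node is one cache block that also holds its
    latch (hypothetical layout), so acquiring or releasing the latch writes it."""
    for node in path:
        if latching:
            mem.write(p, node)  # acquire latch
        mem.read(p, node)       # read keys and child pointers
        if latching:
            mem.write(p, node)  # release latch


def run(latching: bool) -> tuple[Processor, Processor]:
    procs = [Processor(f"p{i}") for i in range(1, 5)]  # four processors, as in Figure 1
    p1, p2 = procs[0], procs[1]
    mem = CoherentMemory(procs)
    traverse(mem, p1, ["n1", "n2", "n4"], latching)  # cold start: p1 loads its path
    traverse(mem, p2, ["n1", "n2", "n5"], latching)  # hypothetical path sharing n1, n2
    traverse(mem, p1, ["n1", "n2", "n4"], latching)  # p1 repeats its lookup
    return p1, p2


for latching in (True, False):
    p1, _ = run(latching)
    print(f"latching={latching}: p1 coherence misses = {p1.coherence_misses}")
# latching=True: p1 coherence misses = 2
# latching=False: p1 coherence misses = 0
```

With latching enabled, p1's repeated lookup misses on n1 and n2 even though no key or pointer changed, because p2's latch writes invalidated those blocks in p1's cache; n4 still hits. Without latch writes, p2's reads leave p1's copies valid and the same lookup hits on every node.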

[0032] Consider a situation in which, on a cold start of the main-memory query processing system, processor p1 108 traverses the path (n1 101, n2 102, n4 104), so that these nodes are copied into cache c1 112 of p1 108. During this process, the latches on n1 and n2 are he...
