Refining Synthetic Data With A Generative Adversarial Network Using Auxiliary Inputs

Embodiment Construction

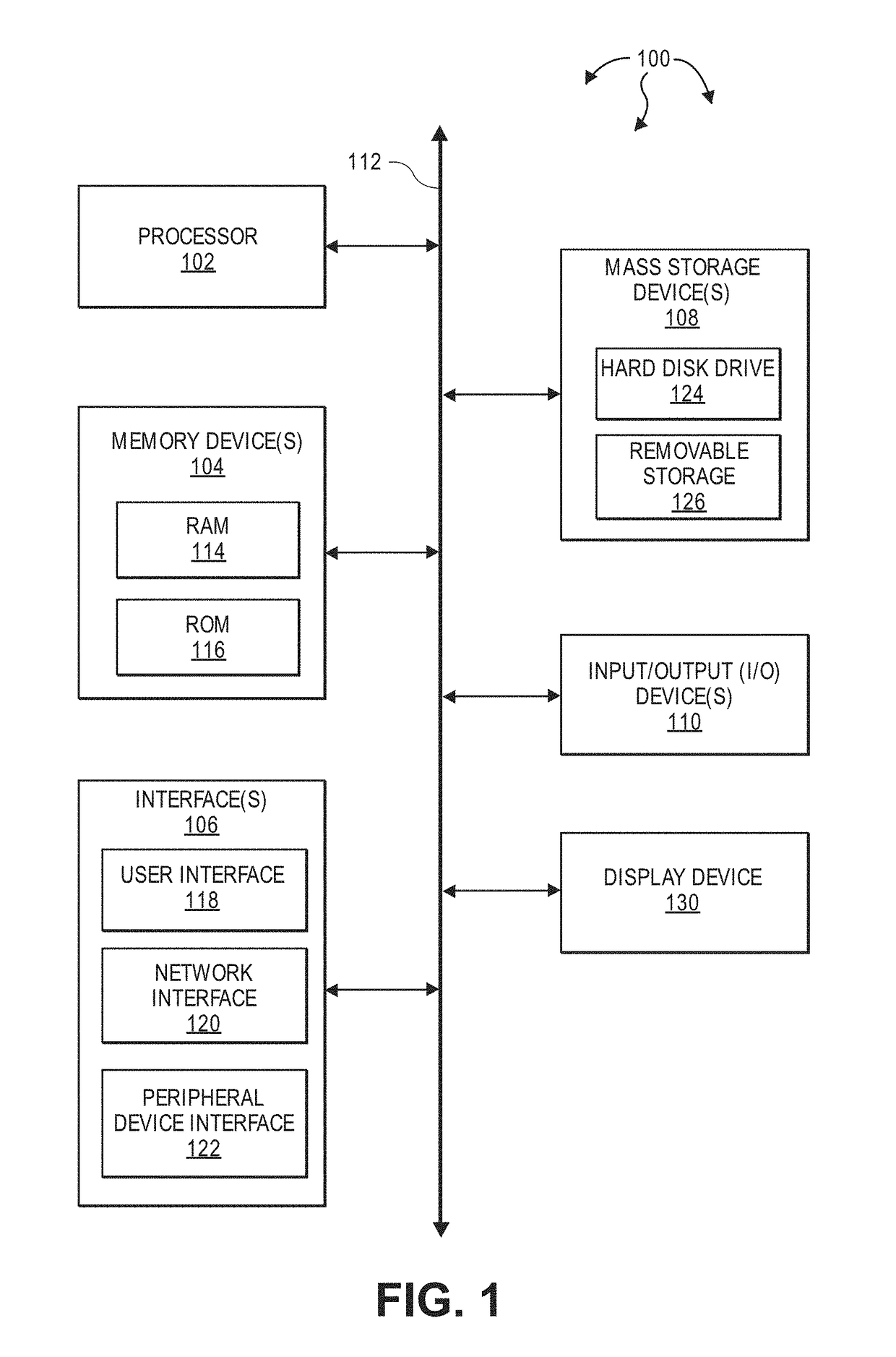

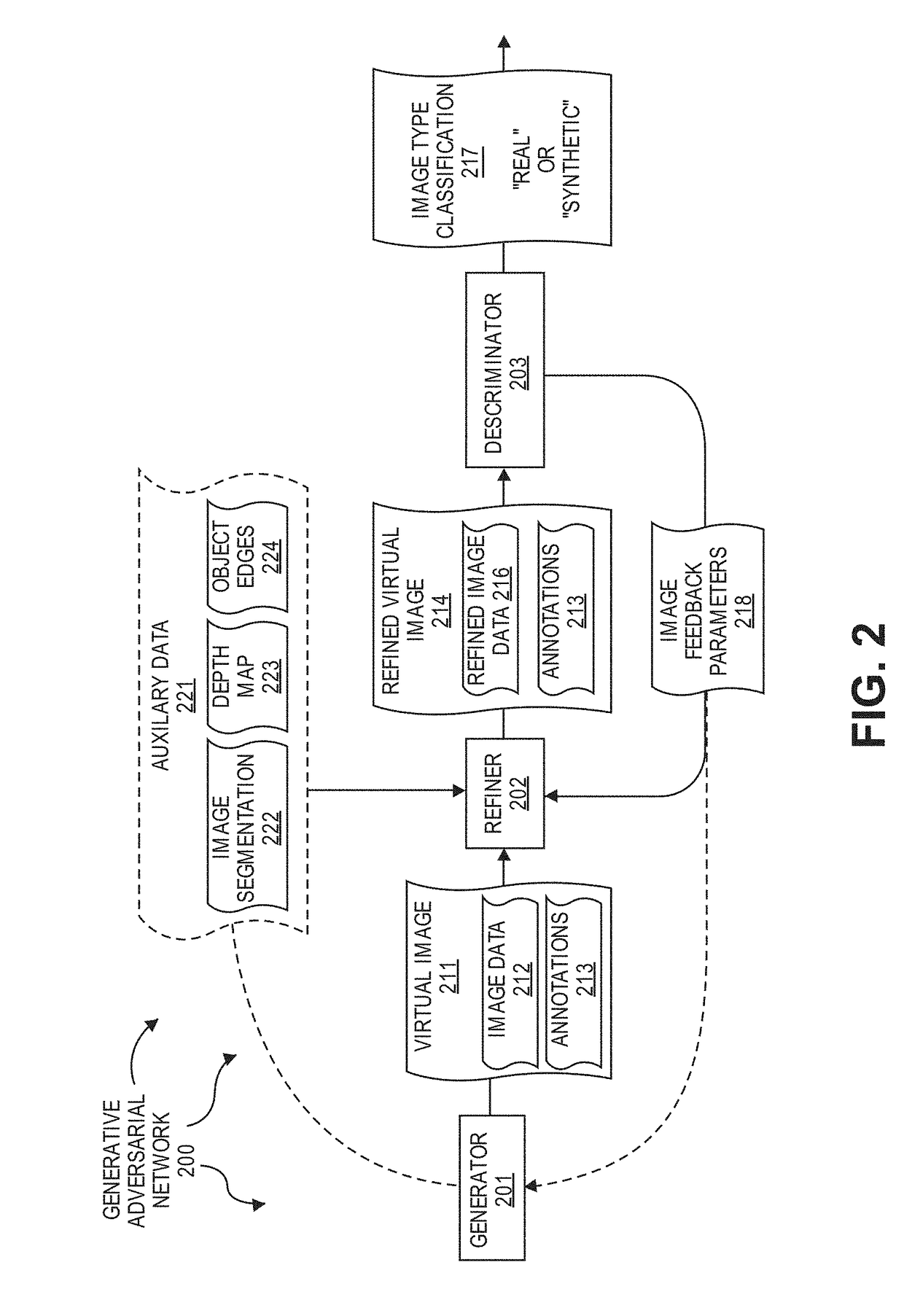

[0009] The present invention extends to methods, systems, and computer program products for refining synthetic data with a Generative Adversarial Network using auxiliary inputs.

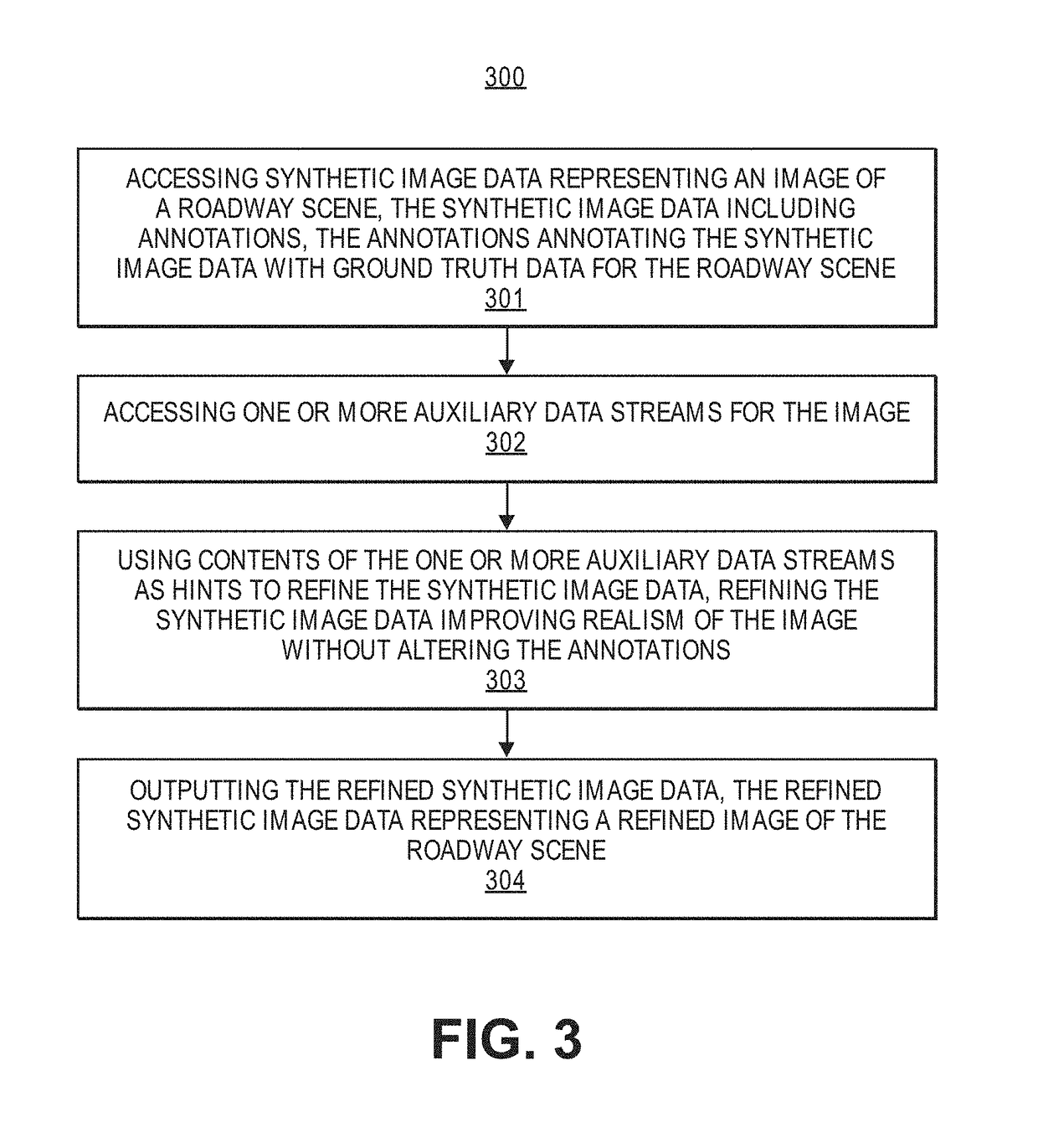

[0010] Aspects of the invention include using Generative Adversarial Networks (“GANs”) to refine synthetic data. Refined synthetic data can be rendered more realistically than the original synthetic data. Refined synthetic data also retains annotation metadata and labeling metadata used for training of machine learning models. GANs can be extended to use auxiliary channels as inputs to a refiner network to provide hints about increasing the realism of synthetic data. Refinement of synthetic data enhances the use of synthetic data for additional applications.
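The data flow described above can be illustrated with a minimal sketch. It is not the claimed implementation: the names `SyntheticSample`, `build_refiner_input`, `refine`, and `placeholder_refiner` are hypothetical, the choice of a depth plane as the auxiliary channel is an assumption, and the placeholder stands in for a trained refiner network. The sketch only shows auxiliary channels being stacked with the image channels as refiner input, and annotation metadata passing through refinement unchanged. Plain Python lists are used so that the example has no dependencies.

```python
from dataclasses import dataclass, field

# One H x W plane of pixel values (hypothetical representation).
Channel = list[list[float]]


@dataclass
class SyntheticSample:
    image: list[Channel]        # image planes, e.g. R, G, B
    auxiliary: list[Channel]    # assumed hint planes, e.g. a depth map
    annotations: dict = field(default_factory=dict)  # labels for training


def build_refiner_input(sample: SyntheticSample) -> list[Channel]:
    """Stack image and auxiliary channels into one multi-channel input."""
    height = len(sample.image[0])
    width = len(sample.image[0][0])
    for plane in sample.image + sample.auxiliary:
        if len(plane) != height or any(len(row) != width for row in plane):
            raise ValueError("all channels must share the same height and width")
    return sample.image + sample.auxiliary


def refine(sample: SyntheticSample, refiner) -> SyntheticSample:
    """Run the refiner on the stacked input and carry annotations over as-is."""
    refined_image = refiner(build_refiner_input(sample))
    return SyntheticSample(refined_image, sample.auxiliary, sample.annotations)


def placeholder_refiner(channels: list[Channel]) -> list[Channel]:
    """Stand-in for a trained refiner network: returns the first three planes.

    A real refiner would use the auxiliary planes as hints; this one ignores them.
    """
    return [[row[:] for row in plane] for plane in channels[:3]]


# Usage: a 2 x 2 RGB image with one auxiliary plane and one label.
sample = SyntheticSample(
    image=[[[0.1, 0.2], [0.3, 0.4]] for _ in range(3)],
    auxiliary=[[[1.0, 2.0], [3.0, 4.0]]],
    annotations={"label": "car"},
)
refined = refine(sample, placeholder_refiner)
```

Because `refine` copies the `annotations` dictionary reference rather than regenerating labels, the refined sample can be used for training with the same labels as the original synthetic sample, which is the property the paragraph above attributes to refined synthetic data.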

[0011] In one aspect, a GAN is used to refine a synthetic (or virtual) image, for example, an image generated by a gaming engine, into a more realistic refined synthetic (or virtual) image. The more realistic refined synthetic image retains annotation metadat...
