Method and apparatus for sound object following

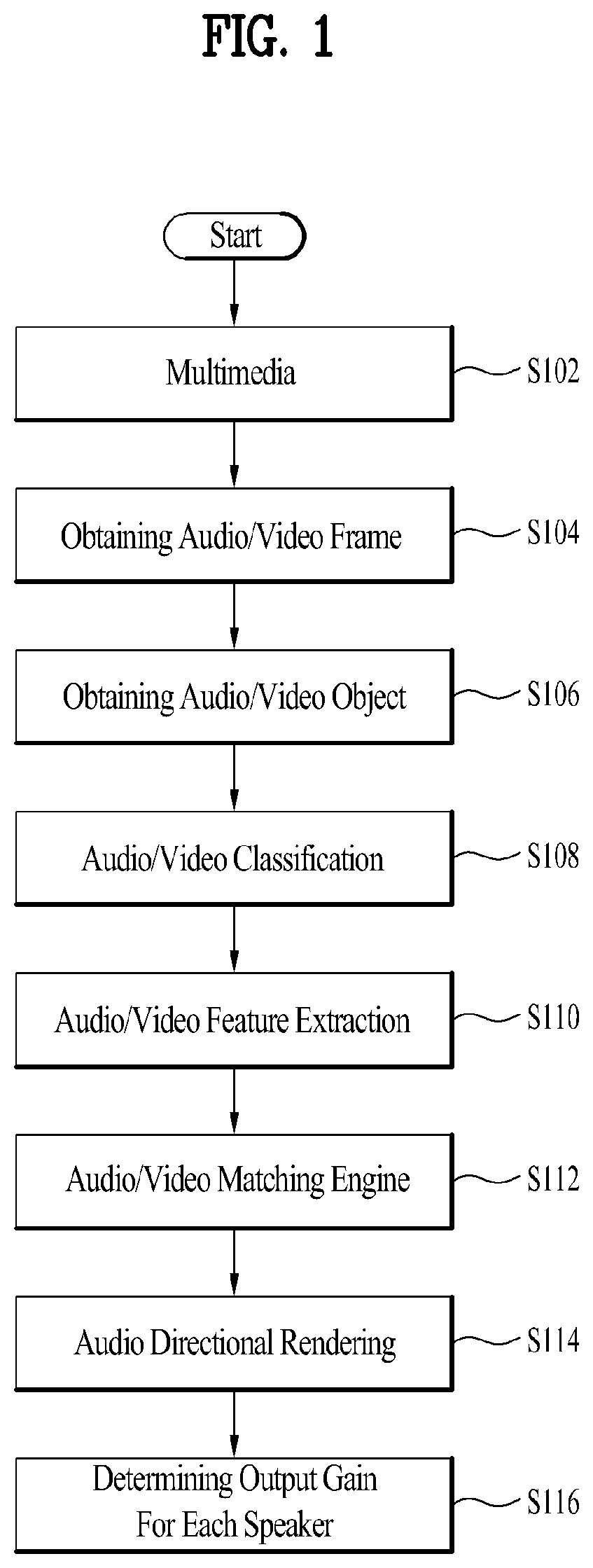

The sound object following technology, applied in the field of multimedia signal processing, addresses the difficulty of applying 3D audio processing technology to broadcast and real-time streaming content, the time and cost involved, and the resulting limited use of 3D audio processing technology such as Dolby Atmos, and thereby improves the sense of immersion.

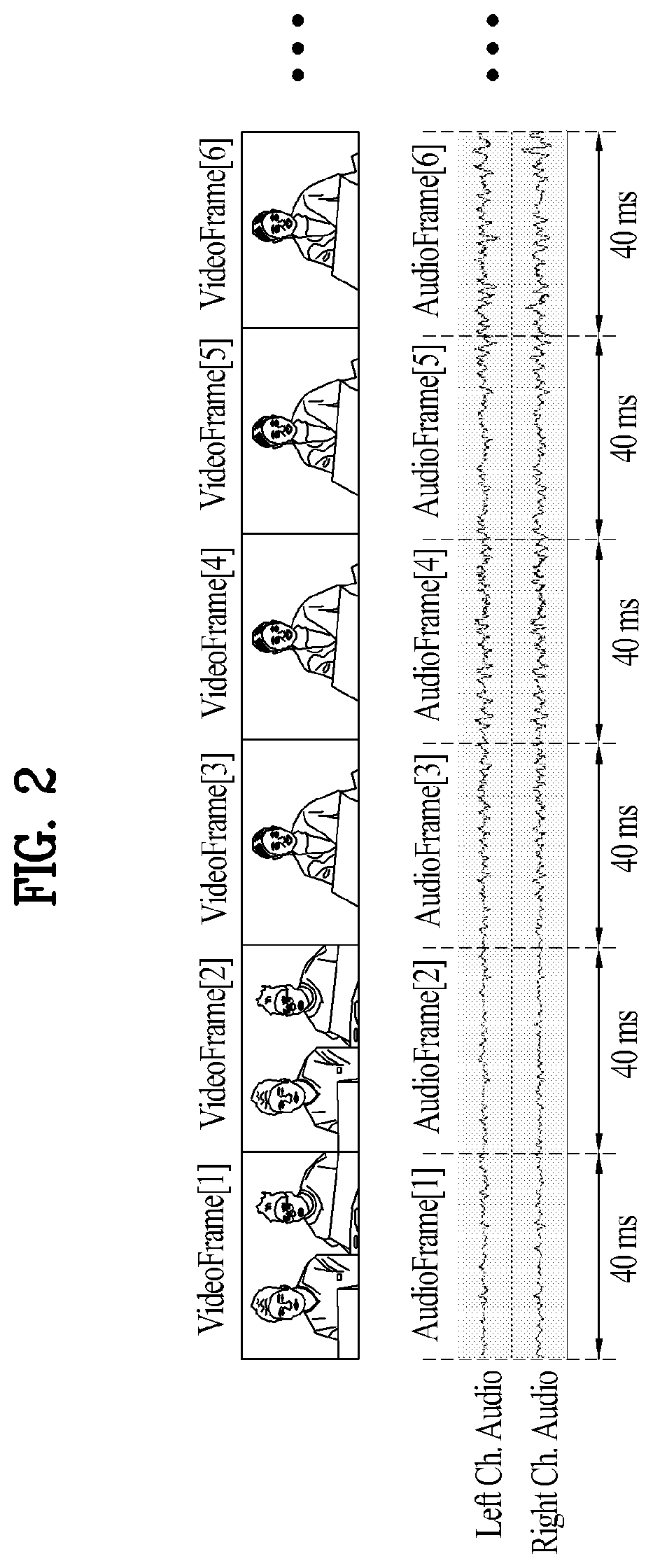

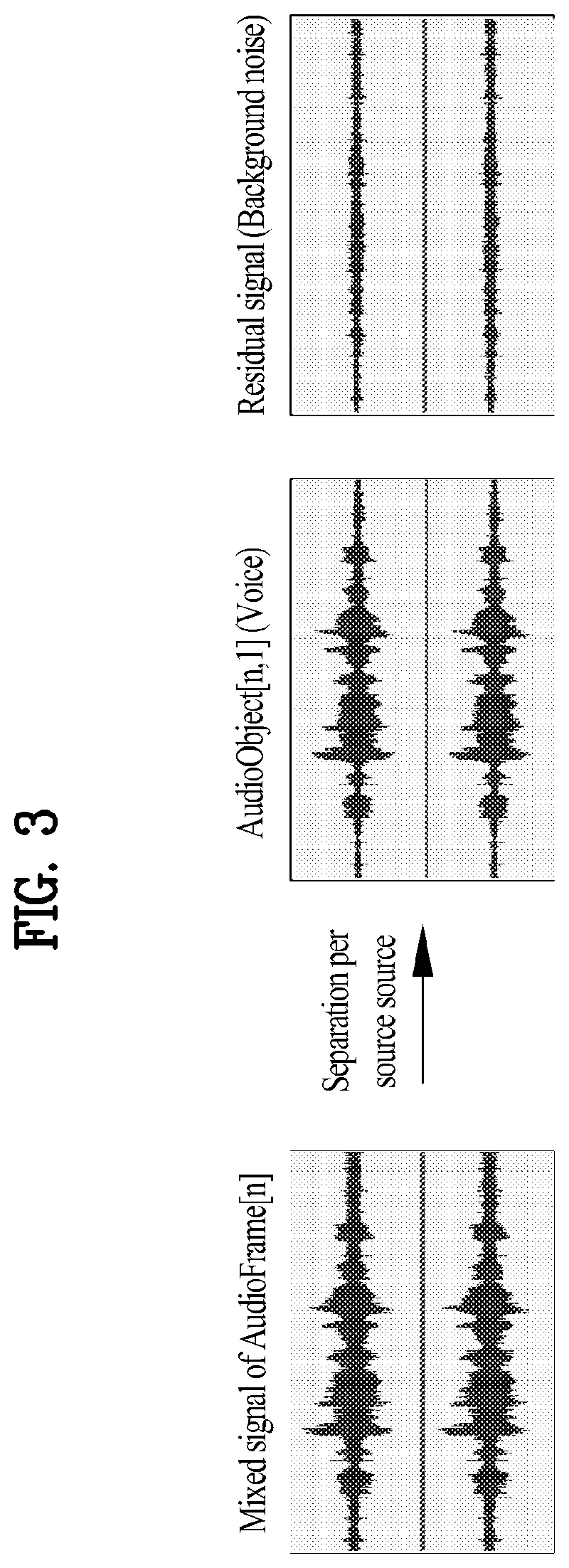

Embodiment Construction

[0048] Reference will now be made in detail to the preferred embodiments of the present disclosure, examples of which are illustrated in the accompanying drawings. Wherever possible, the same reference numbers will be used throughout the drawings to refer to the same or like parts.

[0049] In order to apply 3D audio processing technologies such as Dolby Atmos to broadcast or real-time streaming content, audio mixing technicians need to generate and transmit mixing parameters for 3D effects in real time. Current technology has difficulty performing this processing in real time. In particular, in order to properly apply 3D audio processing technology such as Dolby Atmos, it is necessary to accurately identify the location of the loudspeaker set on the user (or decoder) side. However, it is rarely possible for the content producer and supplier (or encoder) to identify all the information on the loudspeaker locations in a typical house. Therefore, there is a technical difficulty in applyi...
