Information acquisition and transfer method of auxiliary vision system

An auxiliary vision system method based on stereoscopic vision technology, applied in the information field. It addresses problems such as heavy computational load, errors, and the immaturity of pattern recognition and intelligent systems, and achieves a small amount of computation.

Embodiment Construction

[0027] The present invention will be described in further detail below with reference to the accompanying drawings.

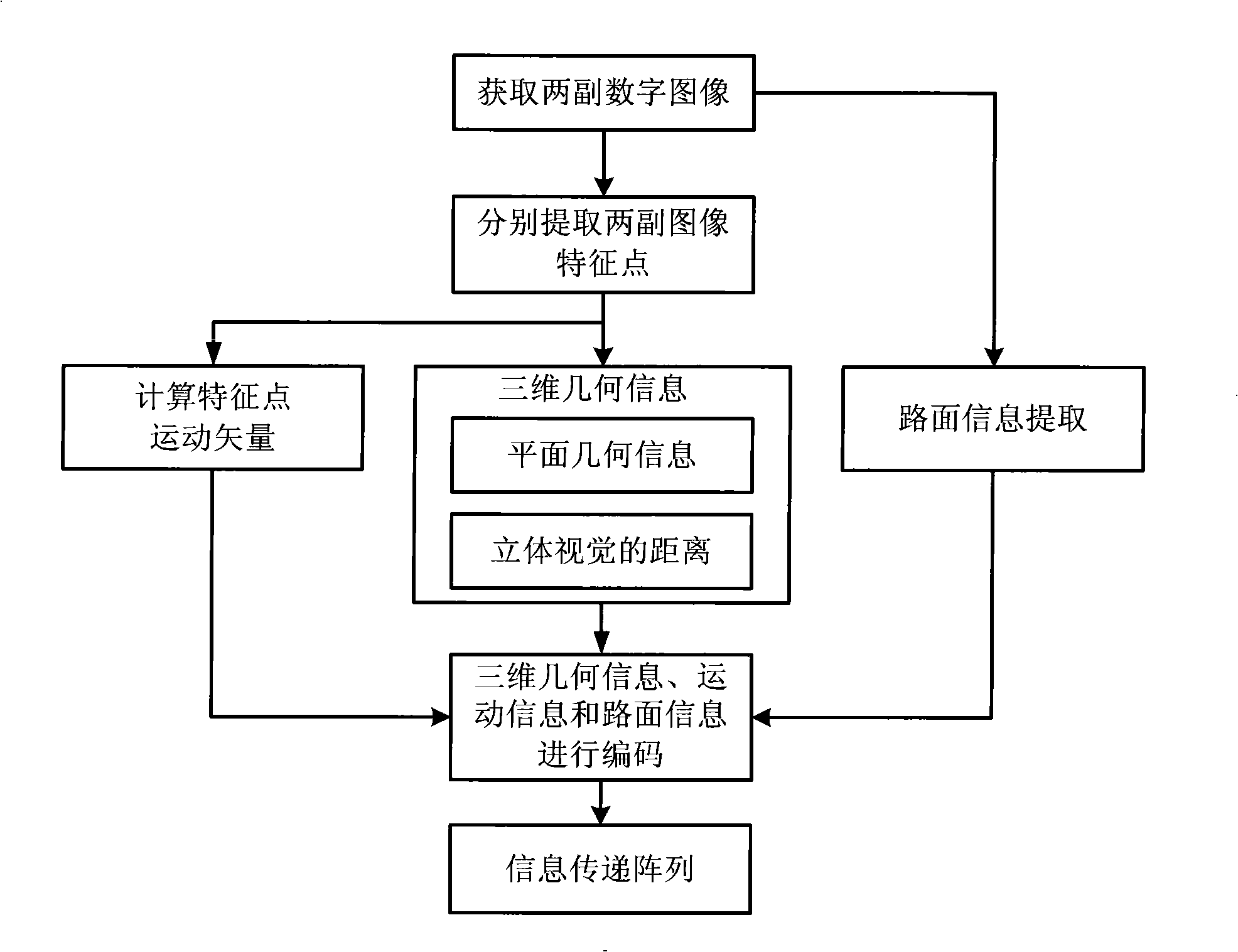

[0028] Referring to Figure 1, the information acquisition steps of the present invention are as follows:

[0029] Step 1: Obtain image information.

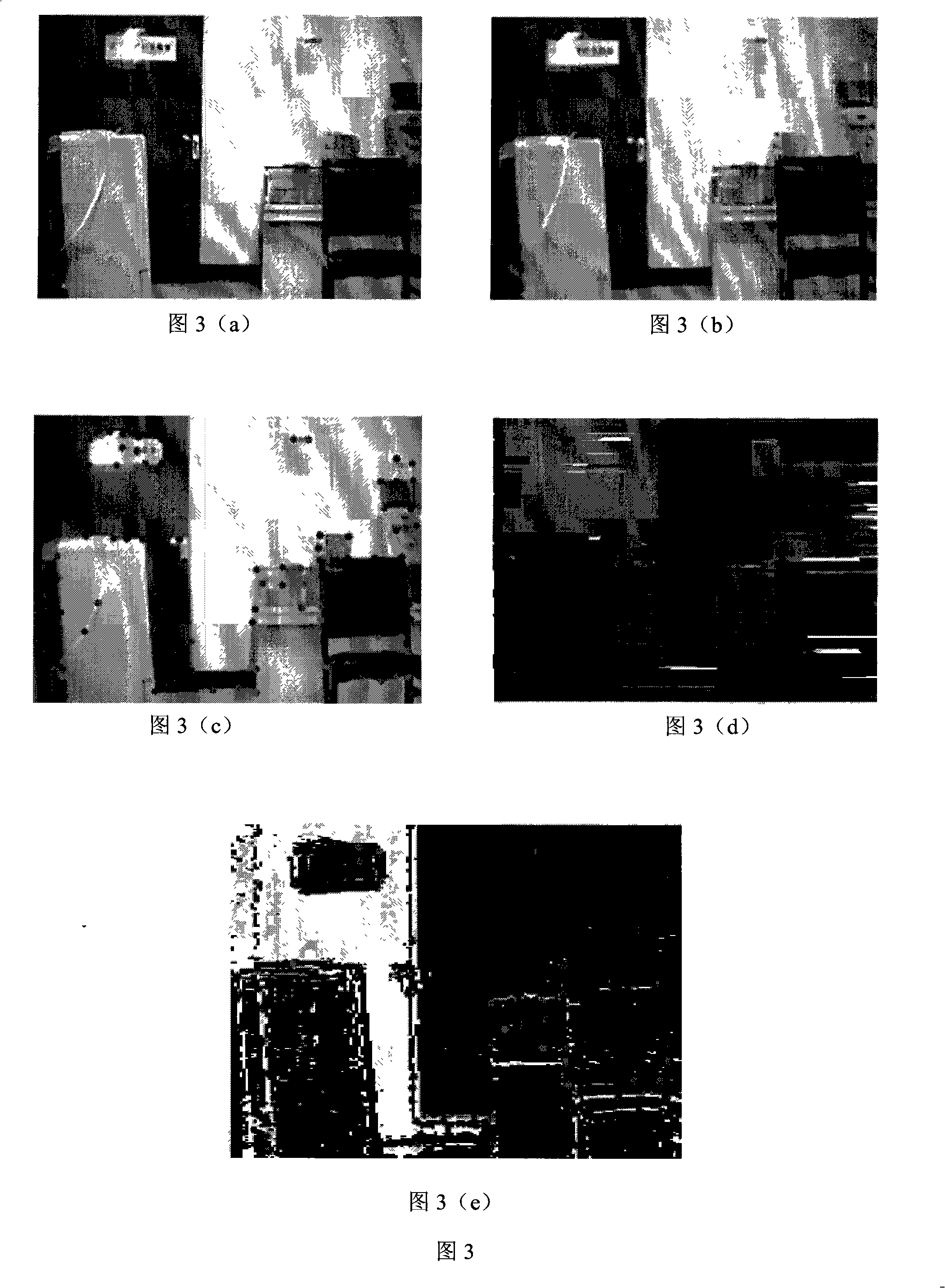

[0030] Two cameras simultaneously capture two original digital images, I₁ and I₂, of the measured object from different angles, as shown in Figure 3(a) and Figure 3(b).

[0031] Step 2: Extract the feature points of the image information.

[0032] The feature points in Figure 3(a) and Figure 3(b) are extracted separately using the Harris corner detection method. The extraction steps are as follows:

[0033] 2.1. Use the following formula to calculate the gradient image of image I₁:

[0034] X₁ = I₁ ⊗ ...
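
The gradient formula above is cut off in this excerpt, so the kernel the patent actually uses is not known here. As a rough illustration of how Harris corner extraction typically proceeds from the gradient images, the following is a minimal NumPy sketch. It assumes a central-difference kernel `[-1, 0, 1]` for the gradients, a Gaussian window with `sigma = 1.0`, the common constant `k = 0.04`, and a relative threshold of 1% of the peak response. These values and the function names `correlate_same`, `harris_response`, and `harris_corners` are illustrative assumptions, not taken from the patent.

```python
import numpy as np


def correlate_same(img, kernel):
    """Same-size 2-D cross-correlation with edge-replicated borders."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def harris_response(img, k=0.04, sigma=1.0):
    """Harris response R = det(M) - k * trace(M)^2 at every pixel."""
    img = np.asarray(img, dtype=float)
    # ASSUMPTION: central-difference kernel; the patent's kernel is elided.
    # Correlation vs. convolution only flips the gradient sign, which
    # cancels in the products below.
    d = np.array([[-1.0, 0.0, 1.0]])
    X = correlate_same(img, d)       # horizontal gradient image
    Y = correlate_same(img, d.T)     # vertical gradient image
    # ASSUMPTION: Gaussian smoothing window with radius 3 * sigma.
    r = int(3 * sigma)
    ax = np.arange(-r, r + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    # Smoothed entries of the 2x2 structure matrix M = [[A, C], [C, B]].
    A = correlate_same(X * X, g)
    B = correlate_same(Y * Y, g)
    C = correlate_same(X * Y, g)
    return (A * B - C * C) - k * (A + B) ** 2


def harris_corners(img, k=0.04, sigma=1.0, rel_threshold=0.01):
    """Return (row, col) positions of thresholded 3x3 local maxima of R."""
    R = harris_response(img, k, sigma)
    h, w = R.shape
    padded = np.pad(R, 1, mode="constant", constant_values=-np.inf)
    neigh_max = np.full((h, w), -np.inf)
    for dy in range(3):
        for dx in range(3):
            neigh_max = np.maximum(neigh_max, padded[dy:dy + h, dx:dx + w])
    threshold = rel_threshold * max(R.max(), 0.0)
    return np.argwhere((R == neigh_max) & (R > threshold))
```

Under these assumptions, calling `harris_corners` on each of the two camera images would yield one candidate feature-point set per image. The response is positive near corners, negative along straight edges, and zero in flat regions, which is why a simple positive threshold followed by local-maximum selection is enough for this sketch.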
