Perceptual video compression method based on JND and AR model

A perceptual video compression technique based on JND and an AR model, applied in the fields of digital video signal modification, television, and electrical components. It addresses problems such as poor robustness and insufficient effect in existing approaches, and it reduces bit rate, improves compression efficiency, and improves real-time performance.


Embodiment Construction

[0043] The technical scheme of the invention is described in detail below with reference to the accompanying drawings:

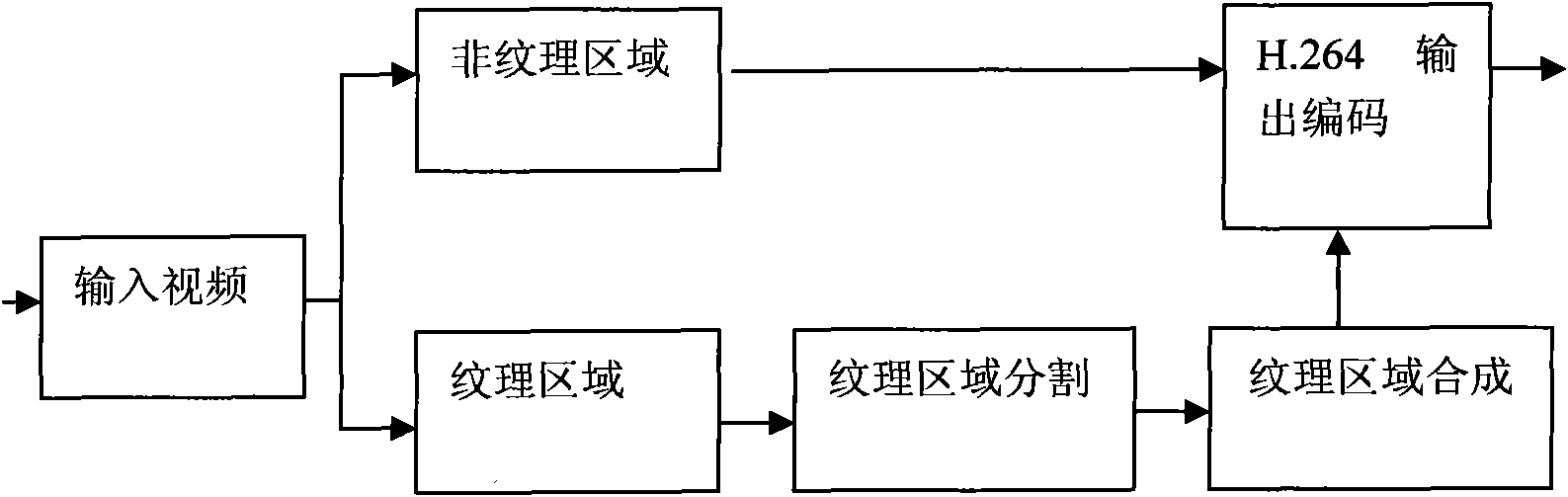

[0044] For stereoscopic video, which carries a very large amount of information, removing perceptual redundancy has a pronounced effect on coding efficiency. Research on the human visual system (HVS) began in psychophysiology and was later widely applied to vision-related fields. In stereoscopic video processing, perceptual redundancy must be eliminated in addition to temporal and spatial redundancy. The invention proposes a perceptual video compression method based on just noticeable distortion (JND) and autoregressive (AR) models, comprising a segmentation algorithm for texture regions and a synthesis algorithm based on an autoregressive model. The JND-based segmentation algorithm first segments the texture regions in the video, and the autoregressive model then synthesizes those texture regions, as shown in Figure 1.
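
The two-stage flow in paragraph [0044] (segment the texture blocks, then synthesize them with an autoregressive model) can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the block variance used here is a crude stand-in for the JND model, which this passage does not specify, and the AR coefficients are taken as given rather than estimated. All function names, the block size, and the threshold are hypothetical and do not come from the patent.

```python
from statistics import pvariance


def segment_texture_blocks(frame, block_size, threshold):
    """Return the set of (block_row, block_col) indices flagged as texture.

    ASSUMPTION: population variance of the block's pixels is used as a
    crude proxy for texture masking; the patent's JND model is not given.
    """
    height, width = len(frame), len(frame[0])
    texture = set()
    for top in range(0, height - block_size + 1, block_size):
        for left in range(0, width - block_size + 1, block_size):
            pixels = [
                frame[y][x]
                for y in range(top, top + block_size)
                for x in range(left, left + block_size)
            ]
            if pvariance(pixels) > threshold:
                texture.add((top // block_size, left // block_size))
    return texture


def ar_predict_pixel(prev_frames, y, x, coeffs):
    """AR prediction: weighted sum of co-located pixels in earlier frames.

    ASSUMPTION: a purely temporal AR model with known coefficients;
    a real system would estimate them and could add spatial neighbors.
    """
    return sum(c * f[y][x] for c, f in zip(coeffs, prev_frames))


def synthesize_texture(prev_frames, decoded, texture, block_size, coeffs):
    """Copy the decoded frame and fill every texture block by AR prediction."""
    out = [row[:] for row in decoded]
    for block_row, block_col in texture:
        for y in range(block_row * block_size, (block_row + 1) * block_size):
            for x in range(block_col * block_size, (block_col + 1) * block_size):
                out[y][x] = ar_predict_pixel(prev_frames, y, x, coeffs)
    return out
```

In this sketch, blocks outside the texture set keep their decoded values, while blocks inside it are regenerated from earlier frames, which mirrors the idea that texture regions need not be coded pixel by pixel.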

[0045] The present invention's perceptual video compression method based on JND a...
