Expression interaction method based on face tracking and analysis

An expression interaction technique based on face tracking and analysis, applied in the field of face tracking and expression interaction. It addresses problems such as incompatibility and achieves strong versatility, robust face tracking, and fast processing speed.

Embodiment Construction

[0018] The present invention will be described in detail below with reference to the accompanying drawings. It should be noted that the described embodiments are only intended to facilitate the understanding of the present invention and do not have any limiting effect on it. The present invention is illustrated by the following embodiments:

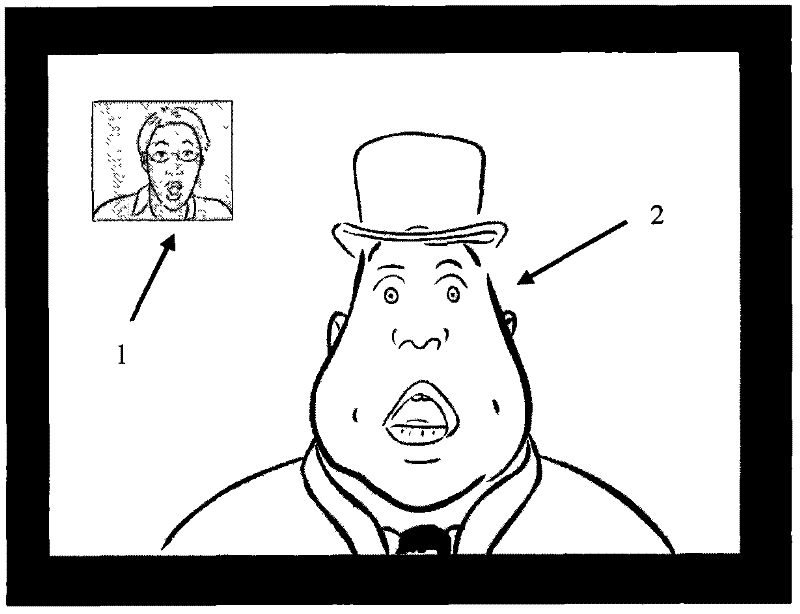

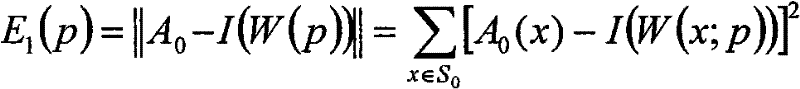

[0019] The method comprises the following steps: making a 3D model, training an active appearance model, initializing face tracking, setting the energy function used in face tracking and expression analysis, detecting whether the eyes are open or closed, and driving the 3D model. The specific implementation process is as follows:

[0020] 1. Make a 3D model

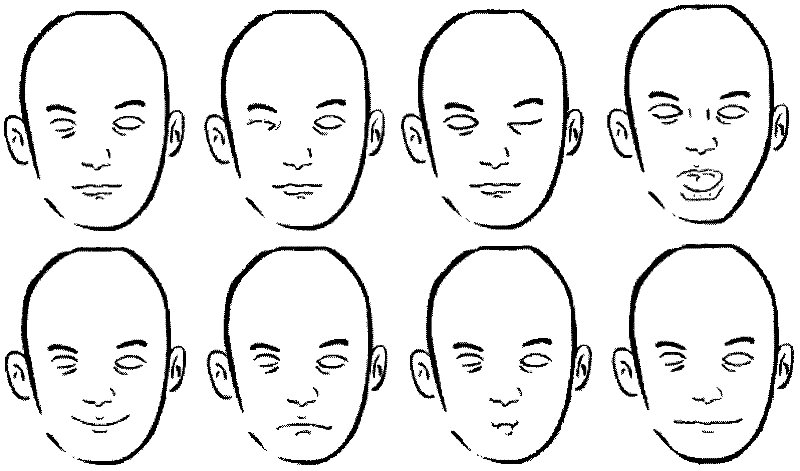

[0021] The production of the 3D models belongs to the offline preprocessing stage. The purpose is to design a 3D face model and its corresponding models in 14 expression states; some of these models are shown in Figure 2. During expression interaction, the model simulates the performer's expression in real time. In the present invention, 14 basic expressions are divided...
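
As an illustration of how a neutral face model and 14 expression-state models might be combined at run time, the following minimal sketch uses linear blending of vertex offsets. This is an assumption: the text above does not state how the expression models are mixed, and the names `blend_expressions`, `neutral`, `targets`, and `weights` are hypothetical and do not come from the patent.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]

# From the text: one model per expression state, 14 in total.
NUM_EXPRESSIONS = 14


def blend_expressions(
    neutral: Sequence[Vertex],
    targets: Sequence[Sequence[Vertex]],
    weights: Sequence[float],
) -> List[Vertex]:
    """Linearly blend expression models onto a neutral model.

    Assumed formula (not stated in the source):
        result = neutral + sum_k weights[k] * (targets[k] - neutral)
    All models are assumed to share the same vertex count and ordering.
    """
    if len(targets) != len(weights):
        raise ValueError("one weight is required per expression model")

    blended = [list(v) for v in neutral]
    for target, w in zip(targets, weights):
        if len(target) != len(neutral):
            raise ValueError("expression model and neutral model differ in size")
        for i, (tv, nv) in enumerate(zip(target, neutral)):
            for c in range(3):
                # Add the weighted offset of this expression from the neutral pose.
                blended[i][c] += w * (tv[c] - nv[c])
    return [(v[0], v[1], v[2]) for v in blended]


# Toy data: a two-vertex "face" where only the first expression moves vertex 0.
neutral_model = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
expression_models = [list(neutral_model) for _ in range(NUM_EXPRESSIONS)]
expression_models[0] = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]

# Hypothetical weights, e.g. as produced by an expression-analysis step.
expression_weights = [0.5] + [0.0] * (NUM_EXPRESSIONS - 1)

result = blend_expressions(neutral_model, expression_models, expression_weights)
```

In this sketch a weight of 0 leaves the neutral pose unchanged and a weight of 1 reproduces the corresponding expression model exactly, so a tracker that outputs per-expression weights each frame could drive the model in real time.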
