Method and device for identifying action types, and method and device for broadcasting programs

An action type recognition technology, applied in character and pattern recognition, user/computer interaction input/output, television, and related fields, that addresses problems such as inflexible program broadcasting and program broadcast errors.


Examples

Embodiment 1

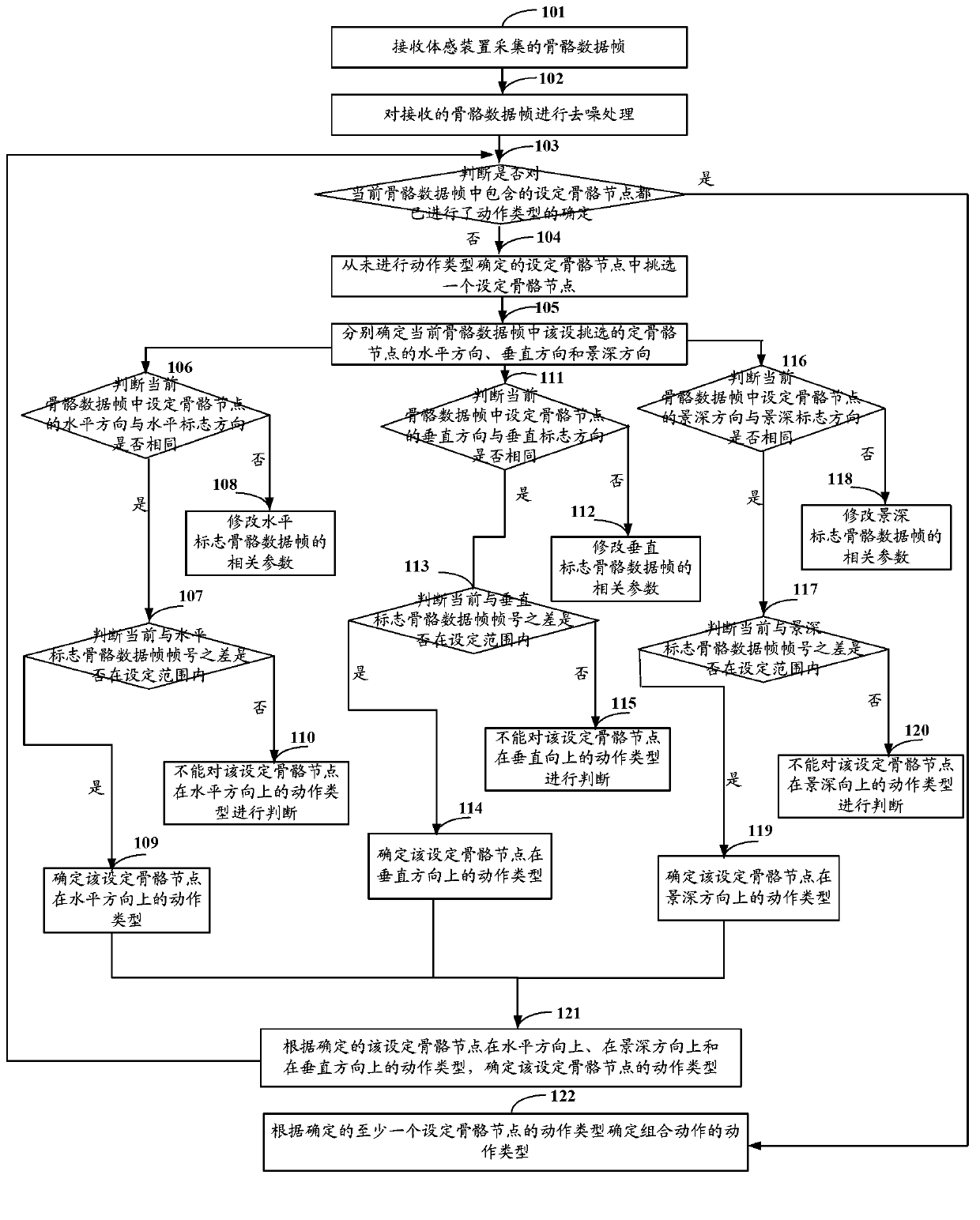

[0045] As shown in Figure 3, which is a flow chart of the action type recognition method in Embodiment 1 of the present invention, the method includes the following steps:

[0046] Step 101: Receive the skeletal data frame collected by the somatosensory device.

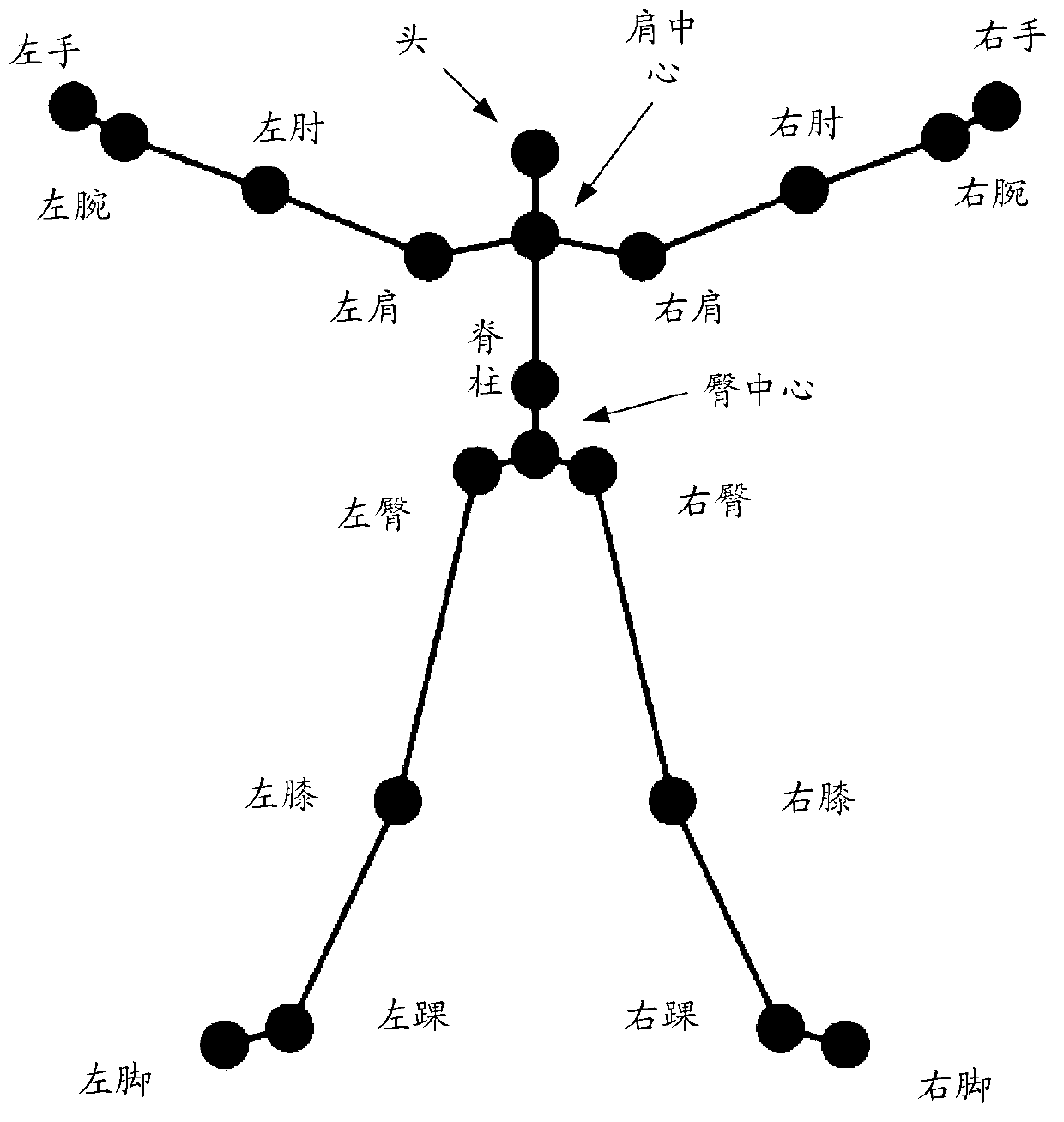

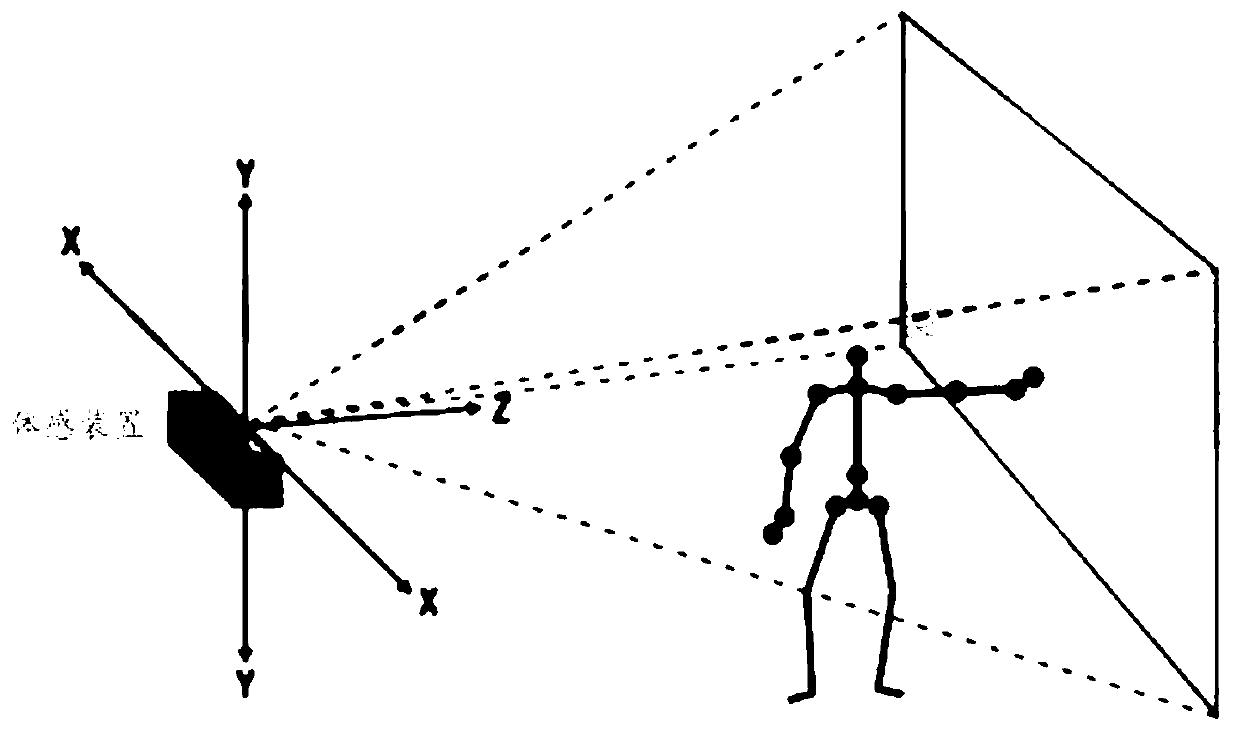

[0047] The skeletal data frame includes the coordinates of a reference skeletal node and of at least one set skeletal node in a three-dimensional coordinate space formed by the mutually perpendicular horizontal, vertical, and depth-of-field directions.

[0048] The set skeletal node is chosen according to the needs of the actual action recognition task and may be any one of the 20 joint points in the skeletal data frame mentioned above.
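As a minimal sketch of the data described in [0047] and [0048], a frame can be modeled as one reference node plus a named collection of set skeletal nodes. The class name `SkeletalDataFrame`, the joint name `hand_right`, and the (horizontal, vertical, depth-of-field) tuple layout are assumptions for illustration, not the device's actual data format.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Assumed layout for every node: (horizontal, vertical, depth_of_field).
Coord = Tuple[float, float, float]


@dataclass
class SkeletalDataFrame:
    """One skeletal data frame from the somatosensory device (hypothetical model)."""

    reference: Coord  # coordinates of the reference skeletal node
    # Set skeletal nodes keyed by a hypothetical joint name; at least one is required.
    set_nodes: Dict[str, Coord] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if not self.set_nodes:
            raise ValueError("a skeletal data frame needs at least one set skeletal node")

    def relative(self, joint: str) -> Coord:
        """Offset of a set node from the reference node along each axis."""
        x, y, z = self.set_nodes[joint]
        rx, ry, rz = self.reference
        return (x - rx, y - ry, z - rz)
```

For example, `SkeletalDataFrame(reference=(0.0, 1.0, 2.0), set_nodes={"hand_right": (0.5, 1.5, 1.75)}).relative("hand_right")` returns `(0.5, 0.5, -0.25)`. Expressing set nodes relative to a reference node is one common way to make features independent of where the user stands; whether the patent does exactly this is not stated in this excerpt.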

[0049] Step 102: Denoise the received skeletal data frame.

[0050] It should be noted that step 102 is an optional, preferred step in Embodiment 1 of the present invention. Salt-and-pepper noise and other types of noise are generated during image acquisition ...
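The denoising procedure itself is cut off in this excerpt. As a hedged illustration only: a median filter is a standard way to suppress salt-and-pepper (impulse) noise, and the sketch below applies one over a sliding window of recent frames, per joint and per axis. Filtering joint coordinates rather than raw images, the class name `TemporalMedianFilter`, and the default window of 5 are all assumptions, not details taken from the patent.

```python
from collections import deque
from statistics import median
from typing import Deque, Dict, Tuple

Coord = Tuple[float, float, float]  # assumed (horizontal, vertical, depth_of_field)


class TemporalMedianFilter:
    """Sliding-window median over recent frames, applied per joint and per axis.

    Hypothetical: assumes noise is removed from joint coordinates across
    consecutive frames rather than from the acquired images.
    """

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.window = window
        self.history: Dict[str, Deque[Coord]] = {}

    def update(self, joints: Dict[str, Coord]) -> Dict[str, Coord]:
        """Add one frame's joints and return their smoothed coordinates."""
        smoothed: Dict[str, Coord] = {}
        for name, coord in joints.items():
            # Keep only the most recent `window` samples for this joint.
            buf = self.history.setdefault(name, deque(maxlen=self.window))
            buf.append(coord)
            # Median of each axis independently; with a window of 3 or more,
            # a single isolated spike is outvoted by its neighbouring samples.
            smoothed[name] = (
                median(c[0] for c in buf),
                median(c[1] for c in buf),
                median(c[2] for c in buf),
            )
        return smoothed
```

With `window=3`, feeding the coordinates `(0.0, 1.0, 2.0)` twice and then a spiked `(9.0, 1.0, 2.0)` still yields `(0.0, 1.0, 2.0)` for the third frame. The trade-off is a lag of roughly half a window, so a small window suits real-time recognition.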

Embodiment 2

[0151] As shown in Figure 5, which is a flow chart of the program broadcasting method in Embodiment 2 of the present invention, the program broadcasting method includes the following steps:

[0152] Step 201: Use the action type recognition method of Embodiment 1 to determine the action type of at least one combined action of the set skeletal nodes in the currently received skeletal data frame.

[0153] Step 202: Determine whether the action type of the combined action is a valid action type; if so, execute step 203; if not, return to step 201.

[0154] In step 202, if the determined action type of the combined action belongs to the preset action type set, it is determined to be a valid action type; otherwise, it is determined to be an invalid action type.

[0155] Step 203: According to the stored correspondence between action types of combined actions and special-effect animations, ...
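Steps 201 to 203 form a simple loop. The sketch below assumes that recognition (step 201) is supplied as a callable returning an action type string or `None`, and that the preset valid set and the action-to-animation correspondence are plain in-memory collections. The function name `broadcast_effects`, the action names, and the animation file names are hypothetical placeholders.

```python
from typing import AbstractSet, Callable, Iterable, Iterator, Mapping, Optional

# Hypothetical preset action type set (step 202) and stored
# action-type-to-animation correspondence (step 203).
PRESET_ACTION_TYPES: AbstractSet[str] = frozenset({"raise_both_hands", "wave_right_hand"})
ANIMATION_FOR_ACTION: Mapping[str, str] = {
    "raise_both_hands": "fireworks.anim",
    "wave_right_hand": "confetti.anim",
}


def broadcast_effects(
    frames: Iterable[object],
    recognize: Callable[[object], Optional[str]],
    valid_types: AbstractSet[str] = PRESET_ACTION_TYPES,
    animations: Mapping[str, str] = ANIMATION_FOR_ACTION,
) -> Iterator[str]:
    """Yield the special-effect animation to play for each valid combined action."""
    for frame in frames:
        action_type = recognize(frame)  # step 201: recognize the combined action
        if action_type is None or action_type not in valid_types:
            continue  # step 202: invalid action type, go back to step 201
        # Step 203: look up the stored correspondence
        # (assumed to contain an entry for every valid action type).
        yield animations[action_type]
```

For instance, with a stand-in recognizer that returns each frame's label unchanged, `list(broadcast_effects(["wave_right_hand", None, "jump", "raise_both_hands"], recognize=lambda f: f))` gives `["confetti.anim", "fireworks.anim"]`: `None` and `"jump"` are not in the preset set, so control returns to step 201 for the next frame.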

Embodiment 3

[0168] Based on the same inventive concept as Embodiment 1 of the present invention, Embodiment 3 provides an action type recognition device. As shown in Figure 6, the device includes a receiving module 101, a first determining module 102, a first judging module 103, a second determining module 104, an action type determining module 105, and a combined action type determining module 106, wherein:

[0169] The receiving module 101 is configured to receive the skeletal data frame collected by the somatosensory device, where the skeletal data frame includes the coordinates of the reference skeletal node and of at least one set skeletal node in a three-dimensional coordinate space formed by the mutually perpendicular horizontal, vertical, and depth-of-field directions;

[0170] The first determining module 102 is configured to determine the horizontal direction, vertical direction, and depth direction of the set skeletal node in the current skeletal data ...
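The description of module 102 is truncated here, so exactly what it computes is not known from this excerpt. Purely as a hypothetical illustration of a per-axis determination, the function below reports, for each of the horizontal, vertical, and depth axes, whether a set node lies on the positive side (+1) or negative side (-1) of the reference node, or within a dead zone (0). The function name `axis_directions`, the sign convention, and the 0.05 dead zone (in the same units as the coordinates) are assumptions.

```python
from typing import Dict, Tuple

Coord = Tuple[float, float, float]  # assumed (horizontal, vertical, depth)
AXES = ("horizontal", "vertical", "depth")


def axis_directions(set_node: Coord, reference: Coord, dead_zone: float = 0.05) -> Dict[str, int]:
    """Hypothetical per-axis direction of a set node relative to the reference node.

    Returns +1 if the set node is more than `dead_zone` beyond the reference
    node in the positive direction, -1 if in the negative direction, else 0.
    """
    if dead_zone < 0:
        raise ValueError("dead_zone must be non-negative")
    result: Dict[str, int] = {}
    for axis, s, r in zip(AXES, set_node, reference):
        d = s - r
        if d > dead_zone:
            result[axis] = 1
        elif d < -dead_zone:
            result[axis] = -1
        else:
            result[axis] = 0  # small offsets are treated as "no direction"
    return result
```

`axis_directions((0.3, 1.02, 1.5), (0.0, 1.0, 2.0))` returns `{"horizontal": 1, "vertical": 0, "depth": -1}`; the dead zone keeps small jitter, such as the 0.02 vertical offset, from flipping the result.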
