Quasi-three-dimensional reconstruction method for two-dimensional videos of static scenes

A quasi-three-dimensional reconstruction technology for two-dimensional video, applied in 3D modeling, image data processing, instruments, and related fields, that addresses problems such as the loss of edge information in depth maps.

Embodiment Construction

[0036] The technical scheme of the present invention is described in detail below with reference to the accompanying drawings:

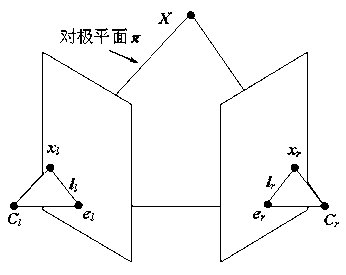

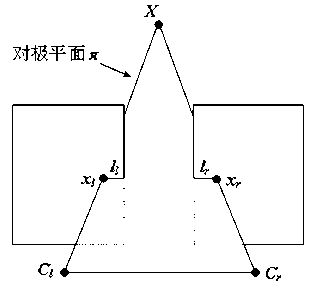

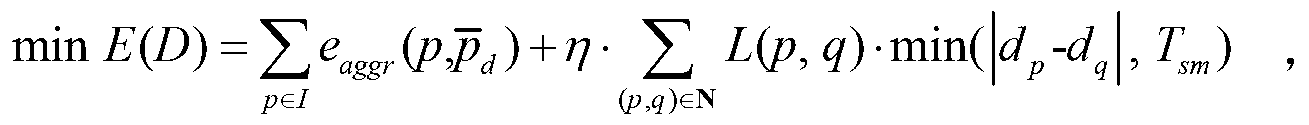

[0037] The idea of the present invention is to solve for the video disparity map sequence by combining epipolar line correction (rectification) with stereo matching. This avoids the computationally expensive operations, namely structure from motion (SFM), belief propagation (BP), image segmentation, and bundle adjustment and optimization, that 3D video reconstruction methods based on multi-view stereo (MVS) require, and so simplifies the solution of the video disparity map sequence. The present invention further adopts a simple, easy-to-operate quasi-Euclidean epipolar correction method. In a preferred embodiment of the method, first, for each frame in the two-dimensional video, another frame a fixed number of frames away from it is extracted, so that the two frames simulate a dual-viewpoint image; then the quasi-Euclidean epipolar line correction method is used to correct the dual-viewpoint image...
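
The first step described above, pairing each frame with another frame a fixed number of frames away, can be illustrated with a minimal Python sketch. The names `pair_frames`, `gap`, and `scanline_disparity` are hypothetical and do not come from the patent text. Two assumptions are made: frames near the end of the video that have no partner `gap` frames ahead are simply skipped (the text does not say how they are handled), and a plain sum-of-absolute-differences (SAD) search along one row stands in for the unspecified stereo matching step. The SAD search relies only on the general property that, once a pair has been rectified, corresponding points lie on the same image row; the search direction used here additionally assumes the second view lies to the right of the first.

```python
from typing import List, Sequence, Tuple


def pair_frames(num_frames: int, gap: int) -> List[Tuple[int, int]]:
    """Pair frame index i with frame i + gap to mimic a two-view image.

    Assumption: frames without a partner `gap` frames ahead are skipped.
    """
    if gap <= 0:
        raise ValueError("gap must be a positive number of frames")
    return [(i, i + gap) for i in range(num_frames - gap)]


def scanline_disparity(
    left_row: Sequence[float],
    right_row: Sequence[float],
    x: int,
    half_window: int,
    max_disparity: int,
) -> int:
    """Find the disparity at column x of one rectified row using SAD.

    Illustrative stand-in for the stereo matching step: the window centred
    at left_row[x] is compared with windows centred at right_row[x - d]
    for d = 0..max_disparity, and the d with the lowest cost is returned.
    """
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("matching window must lie inside the row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # the shifted window would leave the right row
        cost = sum(
            abs(left_row[x + k] - right_row[x - d + k])
            for k in range(-half_window, half_window + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


if __name__ == "__main__":
    # Five frames with a gap of two frames give three simulated view pairs.
    print(pair_frames(5, 2))
    # The right row is the left row shifted two pixels to the left.
    left = [0, 0, 0, 0, 0, 9, 5, 7, 0, 0]
    right = [0, 0, 0, 9, 5, 7, 0, 0, 0, 0]
    print(scanline_disparity(left, right, x=6, half_window=1, max_disparity=4))
```

In a full pipeline, each index pair would be rectified before any row-wise matching is attempted; the quasi-Euclidean correction itself is not sketched here because the text above is truncated before describing it.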
