Video tracking method based on spread fusion

A video tracking technology based on communication fusion, applied in the field of video tracking. It addresses problems such as information not being taken into account, and improves tracking accuracy.


Embodiment Construction

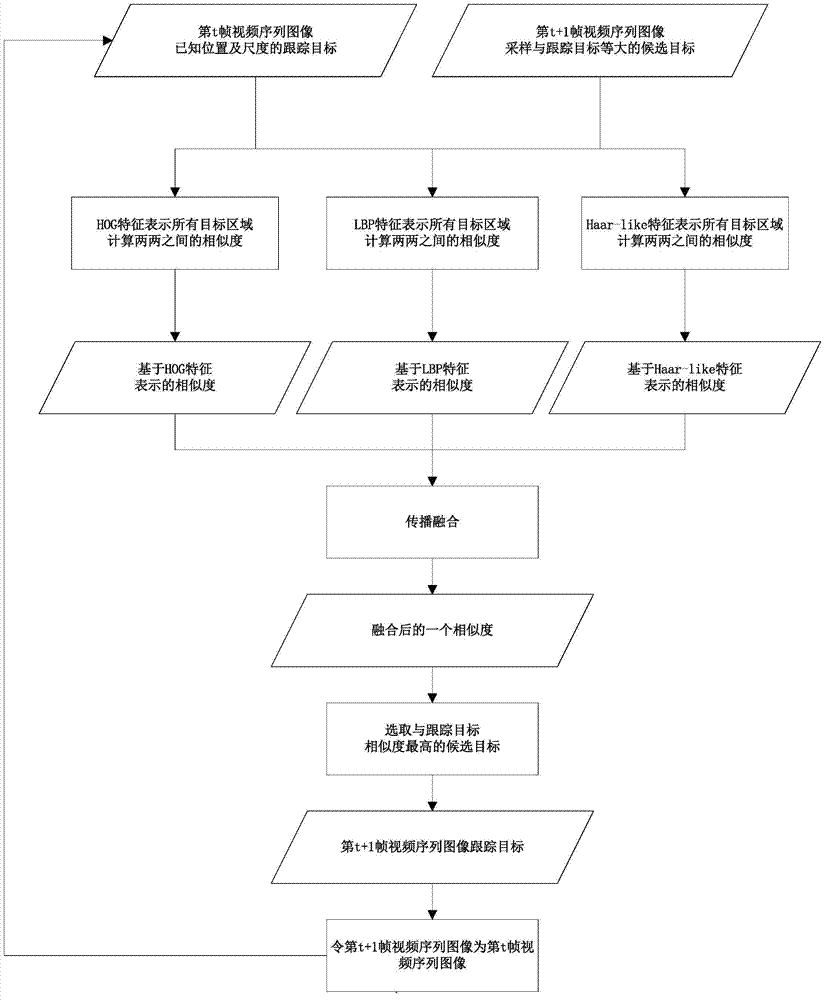

[0017] The invention relates to a matching-based video target tracking method. Consider a simple tracking scheme. The tracking target region, with a known position and scale, is given in frame t of the video sequence. On frame t+1, a number of candidate target regions of the same size as the known target are selected, and each candidate region is matched one by one against the known target region of the current frame. The candidate region with the highest matching degree is taken as the position of the tracking target in frame t+1. Frame t+1, whose target position and scale are now known, then serves as the new frame t. Repeating the previous step tracks the target in frame t+2, and so on, so that the target can be tracked through all subsequent video frames.
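The frame-to-frame matching loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: the paragraph does not specify the matching measure or how candidates are chosen, so this sketch assumes sum of squared differences (SSD, lower is better) as the matching degree and takes every same-size window within a hypothetical search `radius` of the previous position as a candidate. The function names `ssd`, `match_next_frame`, and `track` are illustrative only, and the target scale is held fixed, since all candidates are the same size as the known target.

```python
import numpy as np


def ssd(a: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared differences (assumed matching measure; lower = better match)."""
    d = a.astype(np.float64) - b.astype(np.float64)
    return float(np.sum(d * d))


def match_next_frame(template, next_frame, prev_xy, radius=8):
    """Compare every same-size candidate window within `radius` (hypothetical
    parameter) of prev_xy in next_frame against the template, one by one, and
    return the top-left (x, y) of the best-matching candidate."""
    h, w = template.shape
    H, W = next_frame.shape
    px, py = prev_xy
    best_xy, best_score = prev_xy, float("inf")
    for y in range(max(0, py - radius), min(H - h, py + radius) + 1):
        for x in range(max(0, px - radius), min(W - w, px + radius) + 1):
            score = ssd(template, next_frame[y:y + h, x:x + w])
            if score < best_score:
                best_xy, best_score = (x, y), score
    return best_xy


def track(frames, init_xy, size):
    """Track a fixed-size target box through a list of grayscale frames."""
    h, w = size
    x, y = init_xy
    positions = [init_xy]
    for t in range(len(frames) - 1):
        template = frames[t][y:y + h, x:x + w]  # known target region in frame t
        x, y = match_next_frame(template, frames[t + 1], (x, y))
        positions.append((x, y))  # frame t+1 now plays the role of frame t
    return positions


# Usage: a bright 8x8 square moving by (+3, +2) pixels per frame over noise.
rng = np.random.default_rng(0)
frames = []
for t in range(4):
    f = rng.integers(0, 20, size=(60, 60)).astype(np.uint8)
    f[10 + 2 * t:18 + 2 * t, 5 + 3 * t:13 + 3 * t] = 255
    frames.append(f)
print(track(frames, (5, 10), (8, 8)))  # [(5, 10), (8, 12), (11, 14), (14, 16)]
```

Because the template is re-cut from each newly tracked frame, this follows the "frame t+1 becomes frame t" update in the text; a practical tracker would also need a stronger matching measure and a way to update scale, neither of which this sketch attempts.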

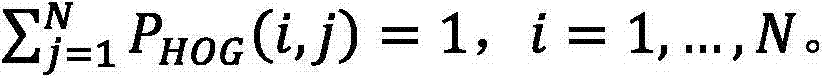

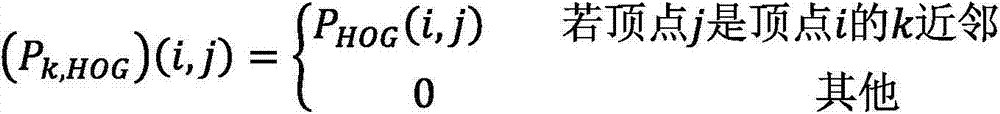

[0018] In the traditional matching-based video tr...

