Multi-camera-array depth perception method

A multi-camera depth-sensing technology, applied in the field of multi-camera-array depth perception. It addresses the problems that the resolution, accuracy, range, and real-time performance of depth maps struggle to meet application requirements, and that existing approaches rely on a single receiving camera.

Embodiment Construction

[0023] The present invention will be further described in detail below in conjunction with specific examples.

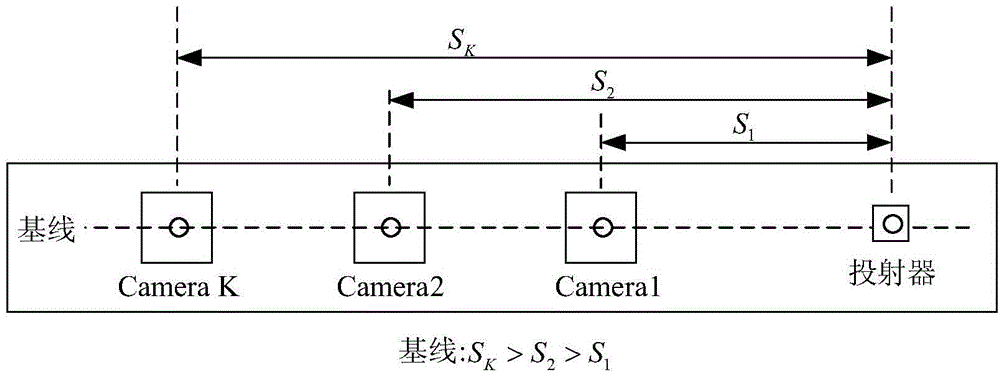

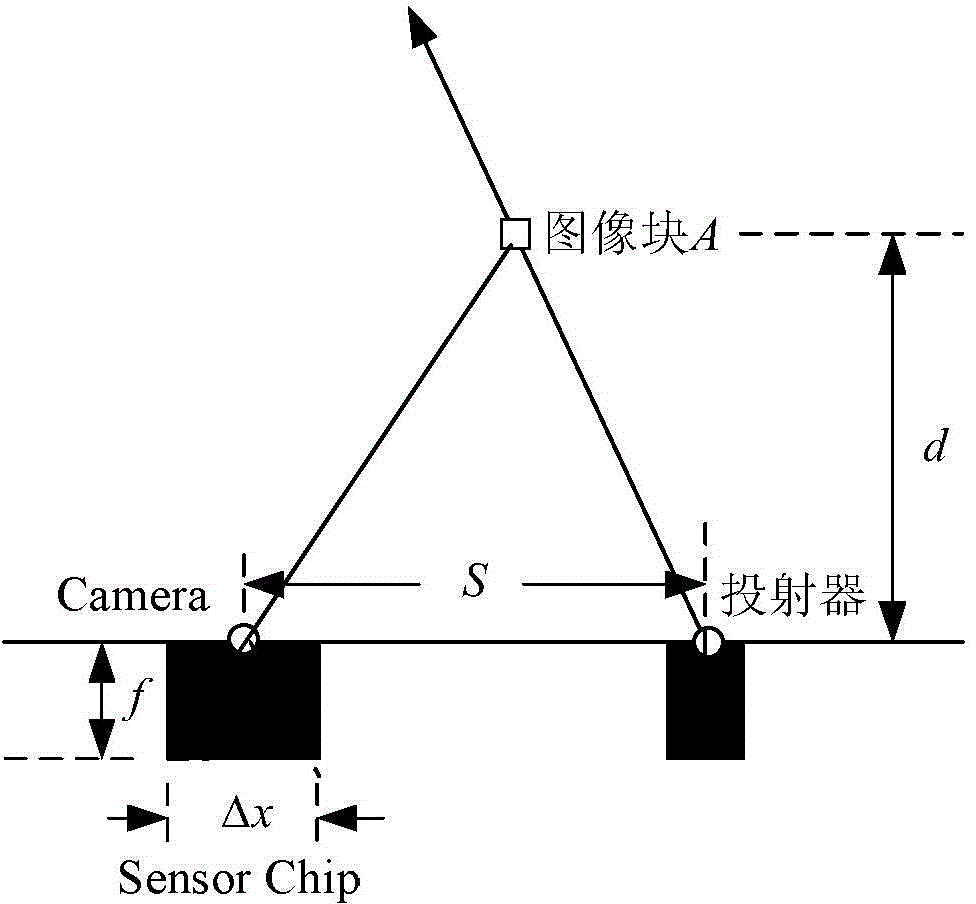

[0024] In general, the multi-camera-array depth perception method of this embodiment uses a laser speckle projector or other projection device to project a fixed pattern, encoding the space with structured light, and then captures the projected pattern with multiple cameras on the same baseline. A depth map is calculated for each camera and the depth maps are then fused, generating high-resolution, high-precision depth information (distance) for target recognition or motion capture in three-dimensional images.
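As a rough illustration of the idea in [0024], the sketch below computes a depth value from each camera's disparity and then fuses the per-camera values. It is a minimal sketch under stated assumptions, not the patented procedure: the excerpt does not give the depth formula or the fusion rule, so the standard triangulation relation Z = f * B / d and a median fusion are assumed here, and the names `depth_from_disparity`, `fuse_depths`, and all numeric values are hypothetical.

```python
from statistics import median


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Standard triangulation Z = f * B / d (assumed, not taken from the patent).

    Returns None when the disparity is not positive, i.e. no valid match.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def fuse_depths(estimates):
    """Fuse per-camera depth estimates for one pixel.

    A median over the valid estimates is an assumed, illustrative rule;
    the patent's actual fusion method is not shown in this excerpt.
    """
    valid = [z for z in estimates if z is not None]
    return median(valid) if valid else None


# Hypothetical setup: one focal length, three cameras at different
# baselines from the projector, and one measured disparity per camera.
focal_px = 600.0
baselines_m = [0.05, 0.10, 0.15]
disparities_px = [15.0, 30.0, 0.0]  # third camera found no match

per_camera = [
    depth_from_disparity(d, focal_px, b)
    for d, b in zip(disparities_px, baselines_m)
]
fused = fuse_depths(per_camera)
print(per_camera, fused)
```

A longer baseline gives a larger disparity for the same distance, which is one reason combining several cameras can improve depth precision over a single receiving camera.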

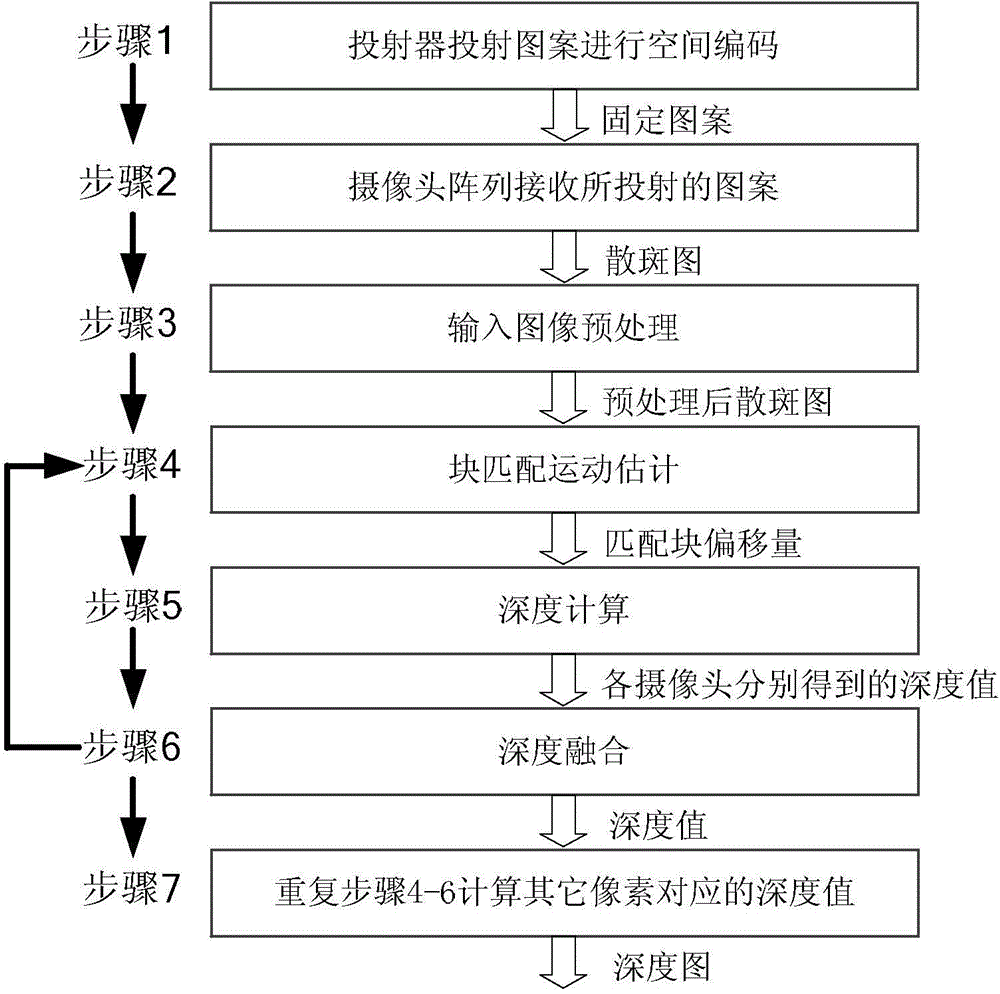

[0025] Figure 1 schematically illustrates the overall flow of the multi-camera-array depth perception method according to the embodiment of the present invention. For clarity, the method is described below with reference to Figures 2 through 8.

[0026] Step 1. Ado...
