Unified framework for precise vision-aided navigation

A unified framework for vision-aided navigation, applied in the field of visual odometry. It addresses the problems that most available navigation systems do not function efficiently, give users insufficient assistance, and cannot work reliably with GPS. The stated aims are to reduce or eliminate the accumulation of navigation drift and to improve visual odometry performance.

Embodiment Construction

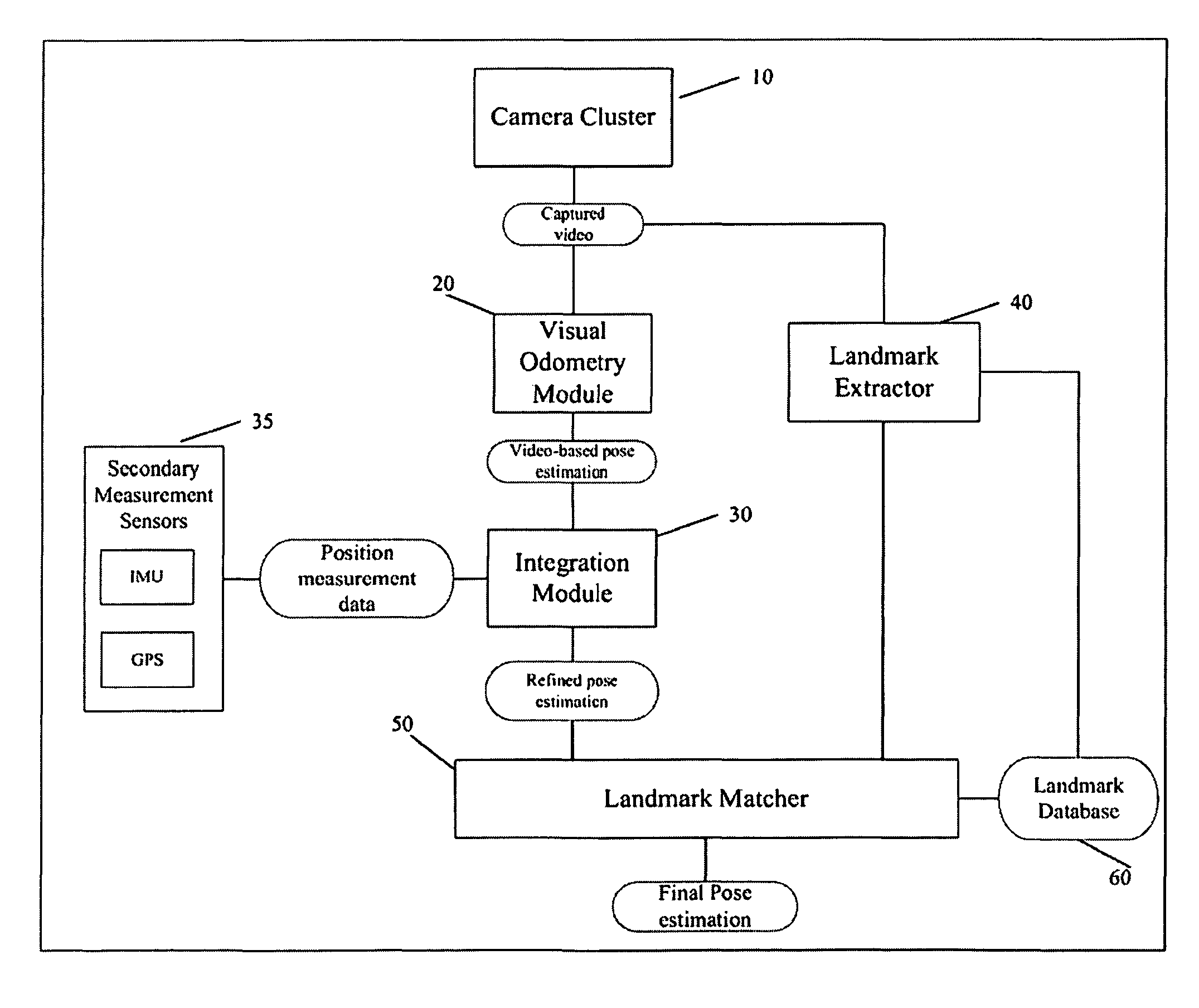

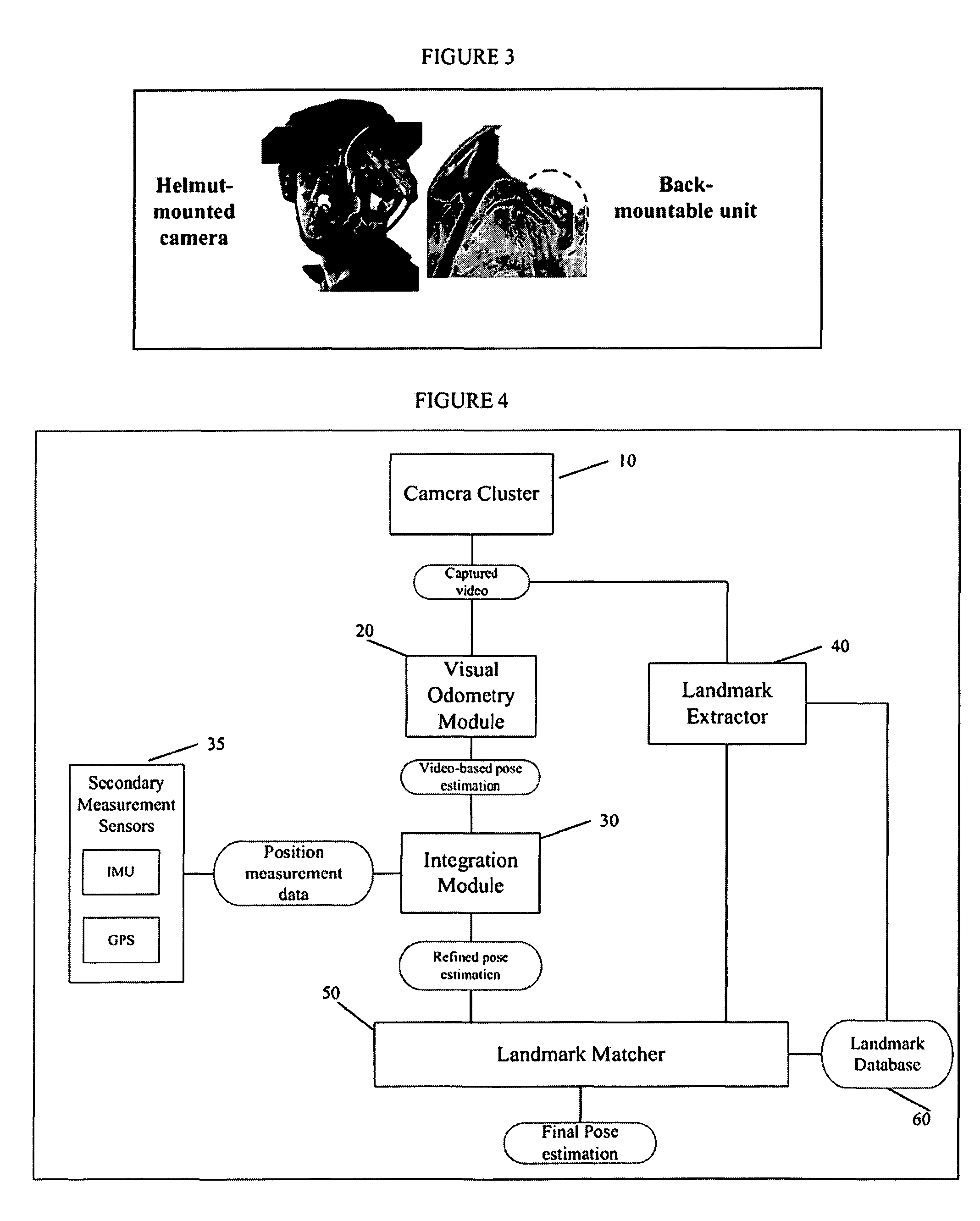

[0034] The present invention relates to vision-based navigation systems and methods for determining location and navigational information related to a user and/or other object of interest. An overall system and functional flow diagram according to an embodiment of the present invention is shown in FIG. 4. The systems and methods of the present invention provide for the real-time capture of visual data using a multi-camera framework and multi-camera visual odometry (described below in detail with reference to FIGS. 3, 5, and 6); integration of visual odometry with secondary measurement sensors (e.g., an inertial measurement unit (IMU) and/or a GPS unit) (described in detail with reference to FIG. 3); and global landmark recognition, including landmark extraction, landmark matching, and landmark database management and searching (described in detail with reference to FIGS. 3, 7, 8, and 9).
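
To illustrate why combining visual odometry with an absolute position source can limit drift, the following is a minimal Python sketch. It is not the method of the invention: this excerpt does not specify the fusion filter, so the sketch assumes a simple variance-weighted (scalar Kalman-style) update on a 2D position. The names `PositionEstimate`, `predict_with_odometry`, and `correct_with_fix`, and all noise values, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PositionEstimate:
    x: float          # metres
    y: float          # metres
    variance: float   # assumed isotropic position variance, m^2


def predict_with_odometry(est, dx, dy, odom_variance):
    """Dead-reckon with one visual-odometry increment.

    The position moves by (dx, dy) and the uncertainty grows, which is
    how drift accumulates when odometry is used alone.
    """
    return PositionEstimate(est.x + dx, est.y + dy, est.variance + odom_variance)


def correct_with_fix(est, fix_x, fix_y, fix_variance):
    """Blend in an absolute fix (e.g. a GPS reading or a matched landmark).

    The gain weights the fix by how uncertain the current estimate is
    relative to the fix; the variance shrinks after the update.
    """
    total = est.variance + fix_variance
    if total == 0.0:
        # Both sources claim zero uncertainty; keep the current estimate.
        return est
    gain = est.variance / total
    return PositionEstimate(
        est.x + gain * (fix_x - est.x),
        est.y + gain * (fix_y - est.y),
        (1.0 - gain) * est.variance,
    )


# Ten odometry steps of 1 m each along x, with assumed per-step variance.
estimate = PositionEstimate(0.0, 0.0, 0.0)
for _ in range(10):
    estimate = predict_with_odometry(estimate, 1.0, 0.0, 0.04)

# One absolute fix with an assumed variance equal to the accumulated drift.
corrected = correct_with_fix(estimate, 9.0, 0.0, 0.4)
```

In this sketch the variance grows with every odometry step and drops once the absolute fix is applied, which is the qualitative behaviour the secondary sensors and landmark recognition are meant to provide. A real system would track full pose and covariance rather than a single scalar variance.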

[0035] As shown in FIG. 4, the vision-based navigation system 1 (herein referred to as the “Navigation S...
