Distributed KNN (K-Nearest Neighbor) classification algorithm and system for massive short texts based on information entropy feature weight quantification

The invention relates to classification algorithms and information entropy technology, applied to distributed KNN classification of massive short texts and a corresponding system. It addresses the problem that existing approaches have not yet been effectively extended.

Embodiment

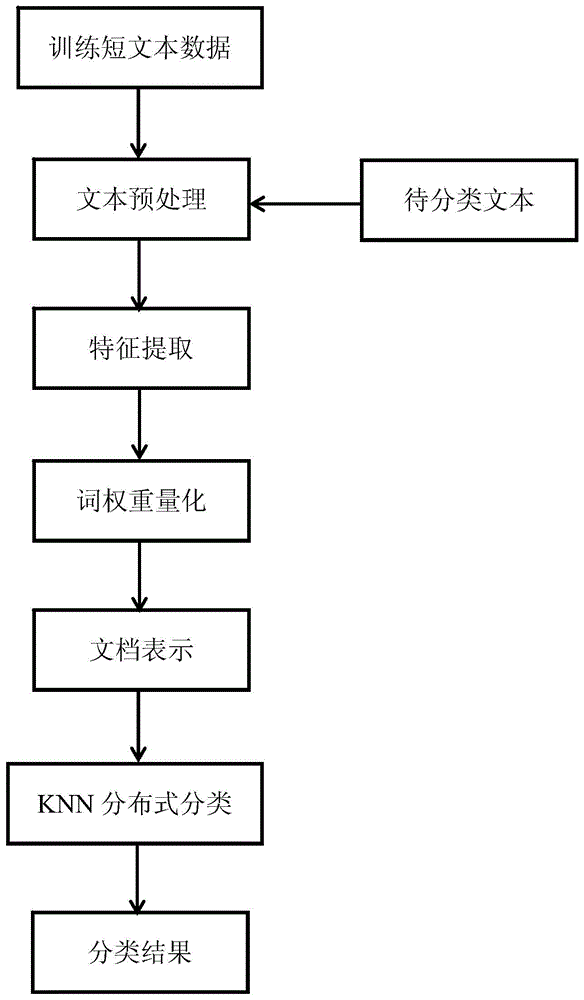

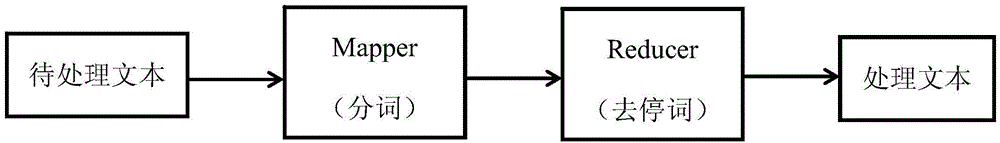

[0059] As shown in Figure 1, the massive short text distributed KNN classification algorithm of the present invention, based on information entropy feature weight quantization, comprises the following steps:

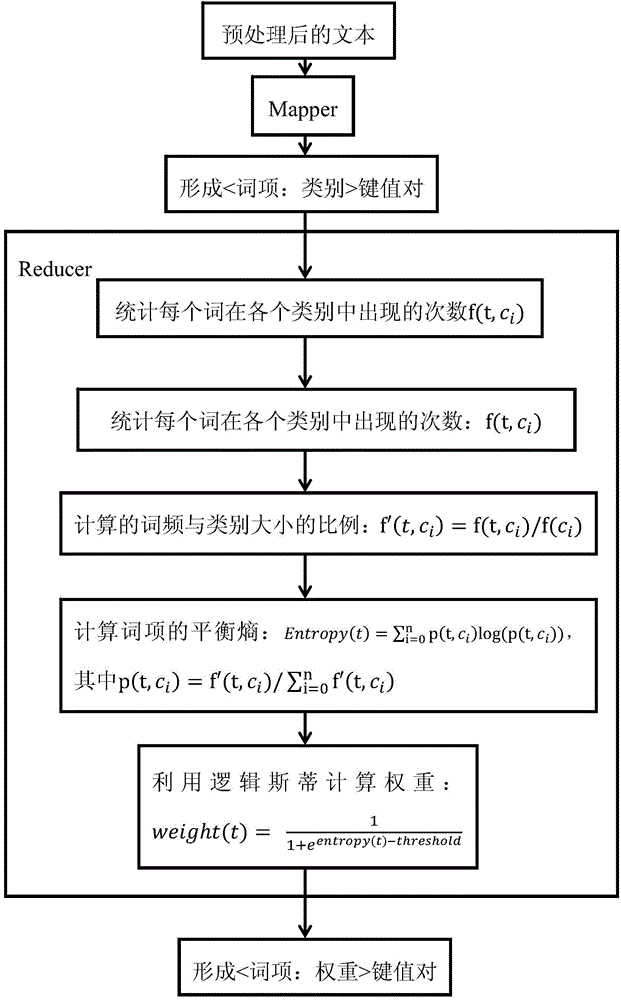

[0060] S1. An information entropy index is used to measure the certainty of each feature's distribution in the data set. Features with high certainty are assigned high weights and features with low certainty are assigned low weights, yielding a weight quantization method that reflects the class distribution;
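The weighting idea in S1 can be sketched as follows. This is a minimal illustration, not the formula from the patent, which this excerpt does not give. It assumes that "certainty" is measured by the entropy of the class labels among documents containing a feature, and that the weight is one minus that entropy normalized by its maximum. The function name `entropy_weights` and the toy data are hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy_weights(docs, labels):
    """Return {term: weight in [0, 1]}.

    Assumed formula (not taken from the patent text):
        weight(t) = 1 - H(class | t) / log(number of classes)
    A term concentrated in one class has low entropy and a high weight.
    """
    classes = set(labels)
    # Maximum possible entropy; guard against a single-class data set.
    max_entropy = math.log(len(classes)) if len(classes) > 1 else 1.0

    # term -> Counter of class labels over documents containing the term
    class_counts = defaultdict(Counter)
    for tokens, label in zip(docs, labels):
        for term in set(tokens):
            class_counts[term][label] += 1

    weights = {}
    for term, counts in class_counts.items():
        total = sum(counts.values())
        h = -sum((n / total) * math.log(n / total) for n in counts.values())
        weights[term] = 1.0 - h / max_entropy
    return weights


docs = [["goal", "match"], ["goal", "team"], ["stock", "match"], ["stock", "bank"]]
labels = ["sport", "sport", "finance", "finance"]
weights = entropy_weights(docs, labels)
```

In this toy data, "goal" appears only in one class and receives the highest weight, while "match" is split evenly across both classes and receives the lowest.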

[0061] S2. The classification is implemented on the Hadoop distributed computing platform using the MapReduce computing framework, and is divided into a combination of two rounds of MapReduce operations;

[0062] In the first-round Map operation, the training set is evenly divided into multiple sub-training sets, which are distributed to the nodes that perform the similarity calculation. In the first-round Reduce operation, the similarities calculated by Map are sorted in eac...
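The two-round structure can be illustrated with a small in-process simulation in plain Python (no Hadoop API is used). The source text is cut off partway through the first-round Reduce step, so everything after the per-node sort is an assumption: here each node keeps its local top k, and a second round merges the local lists and takes a majority vote. Cosine similarity, the sparse-dictionary vectors, and all function names are likewise hypothetical choices for illustration.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as {term: weight}."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def split_evenly(items, n_parts):
    """Divide the training set into n_parts sub-training sets of near-equal size."""
    return [items[i::n_parts] for i in range(n_parts)]


def round1_map(query, sub_training_set):
    """Per node: similarity between the query and each local training sample."""
    return [(cosine(query, vec), label) for vec, label in sub_training_set]


def round1_reduce(scored, k):
    """Per node: sort by similarity; keeping the local top k is an assumption."""
    return sorted(scored, key=lambda p: p[0], reverse=True)[:k]


def round2_vote(local_lists, k):
    """Assumed second round: merge local lists, keep global top k, majority vote."""
    merged = sorted(
        (p for lst in local_lists for p in lst), key=lambda p: p[0], reverse=True
    )[:k]
    votes = Counter(label for _, label in merged)
    return votes.most_common(1)[0][0]


def classify(query, training_set, k=3, n_nodes=2):
    parts = split_evenly(training_set, n_nodes)
    local = [round1_reduce(round1_map(query, part), k) for part in parts]
    return round2_vote(local, k)


training = [
    ({"goal": 1.0, "team": 1.0}, "sport"),
    ({"goal": 1.0, "match": 0.5}, "sport"),
    ({"stock": 1.0, "bank": 1.0}, "finance"),
    ({"stock": 1.0, "market": 1.0}, "finance"),
]
predicted = classify({"goal": 1.0}, training, k=3, n_nodes=2)
```

Keeping only k candidates per node bounds the data passed to the second round at k times the number of nodes, which is why a local top-k cut is a common design for distributed KNN. The vector weights here could come from an entropy-based weighting such as the one described in S1.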
