Online video summary generation method based on deep learning

The invention relates to video summarization and deep learning and is applied to the online generation of video summaries. It addresses the problem that existing video summarization methods cannot meet the online-processing requirements of streaming video applications.

Embodiment Construction

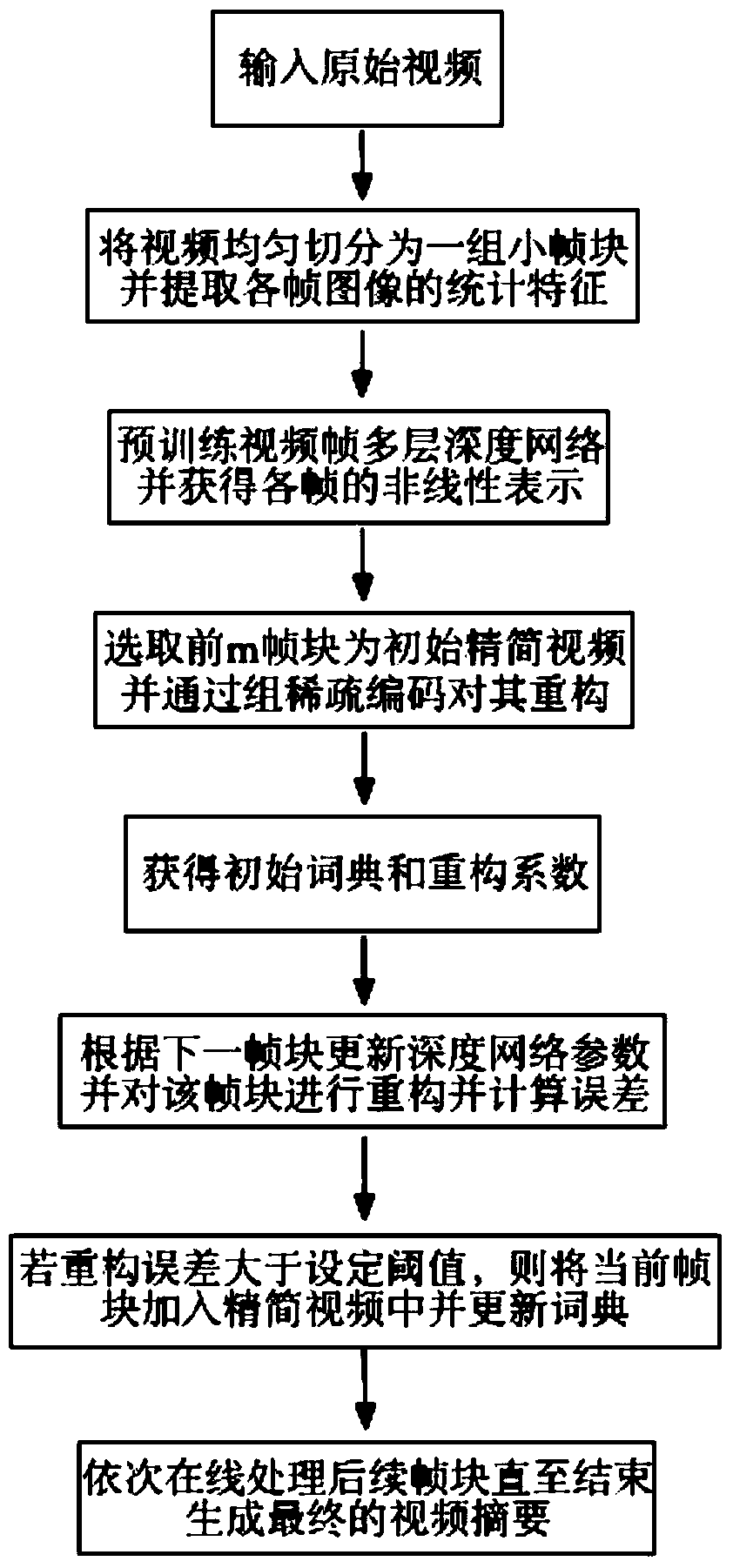

[0042] The present invention is further described below with reference to Figure 1:

[0043] 1. After the original video data is obtained, the following operations are performed:

[0044] 1) The video is evenly divided into a set of small frame blocks, each containing multiple frames; statistical features are extracted from each frame image to form its vectorized representation;

[0045] 2) A multi-layer deep network is pre-trained on the video frames to obtain a nonlinear representation of each frame;

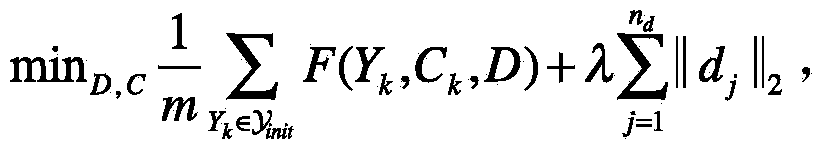

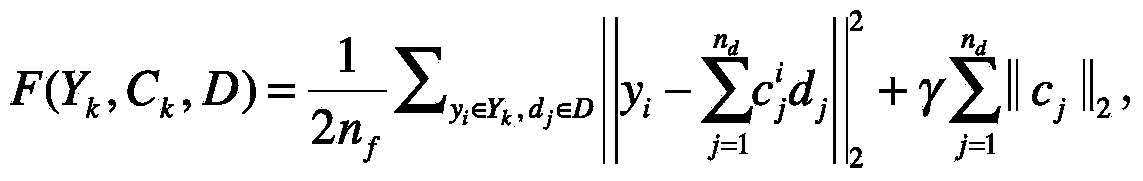

[0046] 3) The first m frame blocks are selected as the initial simplified video and reconstructed with a group sparse coding algorithm to obtain the initial dictionary and reconstruction coefficients;

[0047] 4) The deep network parameters are updated according to the next frame block; at the same time, the frame block is reconstructed and its reconstruction error is calculated. If the error is greater than the set threshold, the frame block is added to the simplified video a...
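Steps 1), 3) and 4) above can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not the patented implementation. The per-frame features (mean and standard deviation of pixel intensities) are a hypothetical choice. The deep network of step 2) is omitted, so raw features stand in for the nonlinear representation. The dictionary is simply the feature vectors of the selected blocks rather than a learned one. The group sparse objective is a generic row-group lasso solved by proximal gradient. Because the source text is cut off after the threshold test, growing the dictionary when a block is added is also an assumption. All function names are illustrative.

```python
import math

def split_into_blocks(frames, block_size):
    """Evenly divide a frame sequence into blocks (a trailing partial block is dropped)."""
    n_blocks = len(frames) // block_size
    return [frames[i * block_size:(i + 1) * block_size] for i in range(n_blocks)]

def frame_features(frame):
    """Hypothetical statistical features: mean and std of a flat list of pixel intensities."""
    mean = sum(frame) / len(frame)
    var = sum((p - mean) ** 2 for p in frame) / len(frame)
    return [mean, math.sqrt(var)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def residuals(X, D, A):
    """Per-frame residual (reconstruction minus input) for coefficients A over atoms D."""
    out = []
    for i, x in enumerate(X):
        recon = [sum(A[j][i] * D[j][t] for j in range(len(D))) for t in range(len(x))]
        out.append([r - v for r, v in zip(recon, x)])
    return out

def group_sparse_code(X, D, lam=0.1, n_iter=200):
    """Proximal gradient for
    min_A 0.5 * sum_i ||x_i - sum_j A[j][i] * d_j||^2 + lam * sum_j ||A[j]||_2,
    where row A[j] groups the coefficients of atom j across all frames of the block."""
    k, n = len(D), len(X)
    # 1 / ||D||_F^2 is a conservative step size (Frobenius norm bounds the spectral norm).
    step = 1.0 / max(sum(dot(d, d) for d in D), 1e-12)
    A = [[0.0] * n for _ in range(k)]
    for _ in range(n_iter):
        R = residuals(X, D, A)  # computed once, so every row uses the same gradient point
        for j in range(k):
            row = [A[j][i] - step * dot(D[j], R[i]) for i in range(n)]
            norm = math.sqrt(dot(row, row))
            # Group soft-thresholding: shrink the whole row toward zero.
            shrink = max(0.0, 1.0 - step * lam / norm) if norm > 0 else 0.0
            A[j] = [shrink * a for a in row]
    return A

def reconstruction_error(X, D, lam=0.1):
    """Mean squared reconstruction error of a block's frames over the dictionary."""
    R = residuals(X, D, group_sparse_code(X, D, lam))
    return sum(dot(r, r) for r in R) / len(X)

def summarize_online(blocks, m, threshold, lam=0.1):
    """Seed the summary with the first m blocks, then keep each later block
    whose reconstruction error exceeds the threshold."""
    summary = list(range(m))
    D = [frame_features(f) for b in blocks[:m] for f in b]
    for idx in range(m, len(blocks)):
        X = [frame_features(f) for f in blocks[idx]]
        if reconstruction_error(X, D, lam) > threshold:
            summary.append(idx)
            D.extend(X)  # assumption: the new block's features join the dictionary
    return summary

# Toy stream: flat "dark" frames and high-contrast "textured" frames, two frames per block.
dark = [0.1, 0.1, 0.1, 0.1]
textured = [0.0, 1.0, 0.0, 1.0]
frames = [dark, dark, dark, dark, textured, textured, dark, dark, textured, textured]
blocks = split_into_blocks(frames, block_size=2)
selected = summarize_online(blocks, m=1, threshold=0.05)
print(selected)
```

With this toy input, the first textured block is poorly reconstructed from the dark-only dictionary and is kept. The later textured block is reconstructed well from the enlarged dictionary and is skipped. In a real system the threshold and `lam` would need tuning to the feature scale.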
