A method, device and system for cross-page prefetching

A cross-page prefetching technology, applied in the computer field, that addresses the low prefetch hit rate and low memory-access efficiency of prefetching devices.


Examples

Embodiment 1

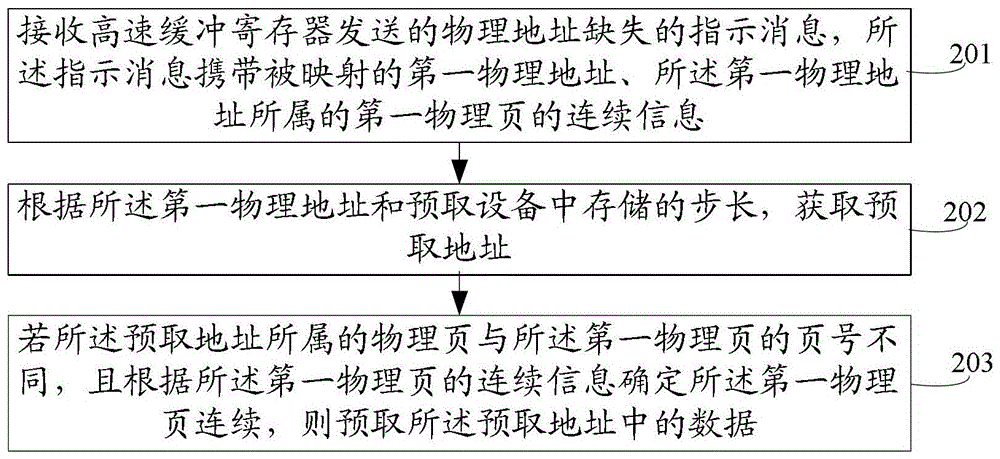

[0095] An embodiment of the present invention provides a cross-page prefetching method. As shown in Figure 2, the method includes the following steps:

[0096] 201. Receive a physical-address miss indication message sent by the cache register. The indication message carries the mapped first physical address and continuity information for the first physical page, which is the physical page to which the first physical address belongs. The continuity information of the first physical page indicates whether the physical page mapped by the first virtual page and the physical page mapped by the next virtual page (the virtual page contiguous with the first virtual page) are contiguous. The first virtual page contains the first virtual address that maps to the first physical address.

[0097] It should be noted that, typically, when the processor misses an instruction or data in the cache register, the cache register, in order to speed up reading of the instruction or data, usually reads i...
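To make step 201 more concrete, the following Python sketch models the miss indication message as a small record that carries the first physical address together with the continuity flag of its physical page, and shows one way a prefetcher could use that flag to accept or reject a candidate address that spills into the next physical page. This is an illustrative sketch under stated assumptions, not the patented implementation: the 4 KiB page size, the class `MissIndication`, its field names, and the helper `may_cross_page` are all hypothetical.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumption: 4 KiB pages; the source text does not fix a page size


@dataclass(frozen=True)
class MissIndication:
    """Hypothetical model of the physical-address miss indication message."""
    first_physical_address: int
    # True if the physical page mapped by the first virtual page and the
    # physical page mapped by the next virtual page are physically contiguous.
    next_page_contiguous: bool


def page_number(address: int) -> int:
    """Return the number of the physical page that contains `address`."""
    return address // PAGE_SIZE


def may_cross_page(msg: MissIndication, candidate: int) -> bool:
    """Hypothetical check: may `candidate` be prefetched for this miss?

    Addresses in the same physical page are always allowed. An address in the
    immediately following physical page is allowed only when the continuity
    information says that page is the one the next virtual page maps to.
    """
    current = page_number(msg.first_physical_address)
    target = page_number(candidate)
    if target == current:
        return True
    if target == current + 1:
        return msg.next_page_contiguous
    return False  # pages further away are outside the scope of this sketch
```

For example, a miss at `0x1FC0` with `next_page_contiguous=False` allows a prefetch of `0x1FF0` (same page) but rejects `0x2000`, because that address lies in a physical page that the next virtual page is not known to map to.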

Embodiment 2

[0166] An embodiment of the present invention provides a cross-page prefetching method, which is described below with reference to the cross-page prefetch system shown in Figure 7. The cross-page prefetch system includes a processor, a memory, a cache register, a translation lookaside buffer (TLB), and a prefetch device.

[0167] To enable cross-page prefetching, when an application program is loaded, the processor first allocates storage space in the memory for the application program's instructions and data. As shown in Figure 8, this includes the following steps:

[0168] 801. The processor receives a first indication message requesting physical memory space, where the first indication message carries capacity information for the memory space.

[0169] 802. The processor allocates physical memory space and virtual memory space according to the capacity information of the memory space.

[0170] Specifically, when the application program is loaded, the processor first rece...
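Steps 801 and 802 can be illustrated with a toy allocator. The sketch below is a hypothetical model, not the processor's actual allocation algorithm: it rounds the requested capacity up to whole pages, maps consecutive virtual pages onto the free physical frames in the order given, and records for each virtual page whether the frame of the next virtual page is physically adjacent. That per-page flag plays the role of the continuity information used by the prefetching steps. The function `allocate`, the free-frame list, and the 4 KiB page size are assumptions introduced for illustration.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


def allocate(capacity: int, free_frames: list, first_vpn: int = 0):
    """Hypothetical allocator covering `capacity` bytes with virtual pages.

    Returns (page_table, continuity): page_table maps virtual page number to
    physical frame number, and continuity[vpn] is True when the frame of
    vpn + 1 immediately follows the frame of vpn.
    """
    pages_needed = -(-capacity // PAGE_SIZE)  # ceiling division
    if pages_needed > len(free_frames):
        raise MemoryError("not enough free physical frames")
    frames = free_frames[:pages_needed]  # take frames in the order supplied
    page_table = {first_vpn + i: frame for i, frame in enumerate(frames)}
    continuity = {}
    for i in range(pages_needed):
        vpn = first_vpn + i
        next_frame = page_table.get(vpn + 1)
        # The last page has no successor in this allocation, so it is
        # conservatively marked as non-contiguous.
        continuity[vpn] = next_frame is not None and next_frame == page_table[vpn] + 1
    return page_table, continuity
```

For instance, a 10,000-byte request served from free frames `[7, 8, 20, 21]` needs three pages, maps them to frames 7, 8 and 20, and marks only virtual page 0 as contiguous with its successor. A real allocator would more likely try to reserve physically adjacent frames on purpose, which raises the share of pages whose continuity flag is set.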

Embodiment 3

[0210] An embodiment of the present invention provides a prefetching device 1200. As shown in Figure 12, the prefetching device 1200 includes a receiving unit 1201, an obtaining unit 1202, and a prefetching unit 1203.

[0211] The receiving unit 1201 is configured to receive a physical-address miss indication message sent by the cache register. The indication message carries the mapped first physical address and continuity information for the first physical page, which is the physical page to which the first physical address belongs. The continuity information of the first physical page indicates whether the physical page mapped by the first virtual page and the physical page mapped by the next virtual page (the virtual page contiguous with the first virtual page) are contiguous. The first virtual page contains the first virtual address that maps to the first physical address.

[0212] The obtaining unit 1202 is configured to obtain a prefetch address according to the first phy...
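The division of prefetching device 1200 into three units can be pictured as a small pipeline. The Python sketch below is a hypothetical model: the class `PrefetchingDevice`, its methods, the 64-byte cache line, and the 4 KiB page size are assumptions. Because the source paragraph is truncated before it says how the prefetch address is derived, the obtaining unit here simply assumes a next-line policy (the start of the cache line after the missed one) purely for illustration. Its one cross-page rule is that an address falling in the next physical page is used only when the continuity information marks that page as contiguous.

```python
from typing import List, Optional, Tuple

PAGE_SIZE = 4096  # assumption: 4 KiB pages
LINE_SIZE = 64    # assumption: 64-byte cache lines


class PrefetchingDevice:
    """Hypothetical model of prefetching device 1200 and its three units."""

    def __init__(self) -> None:
        self.issued: List[int] = []  # prefetch addresses sent to memory
        self._last: Optional[Tuple[int, bool]] = None

    # Receiving unit 1201: store the miss indication from the cache register.
    def receive(self, first_physical_address: int, next_page_contiguous: bool) -> None:
        self._last = (first_physical_address, next_page_contiguous)

    # Obtaining unit 1202: derive a prefetch address (next-line policy is an
    # assumption, since the source text is truncated at this point).
    def obtain(self) -> Optional[int]:
        if self._last is None:
            return None
        address, contiguous = self._last
        candidate = (address // LINE_SIZE + 1) * LINE_SIZE
        same_page = candidate // PAGE_SIZE == address // PAGE_SIZE
        if same_page or contiguous:
            return candidate
        return None  # would cross into a physical page not known to be contiguous

    # Prefetching unit 1203: issue the prefetch if an address was obtained.
    def prefetch(self) -> Optional[int]:
        target = self.obtain()
        if target is not None:
            self.issued.append(target)
        return target
```

With this model, a miss at `0xFC8` yields no prefetch when the next physical page is not contiguous, and a prefetch of `0x1000` when it is. A prefetcher that ignored the continuity information would either have to stop at every page boundary or risk fetching from an unrelated physical page.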
