Method and device for adaptively determining the data block read length of a cache memory

A technology for caching and reading data, applied in the field of electronics. It addresses the problems of not making full use of the spatial locality of data, an increased number of CPU stalls, and poor overall computer performance. Its effects are improved overall performance, fewer data reads, and fewer CPU stalls.


Examples

Embodiment 1

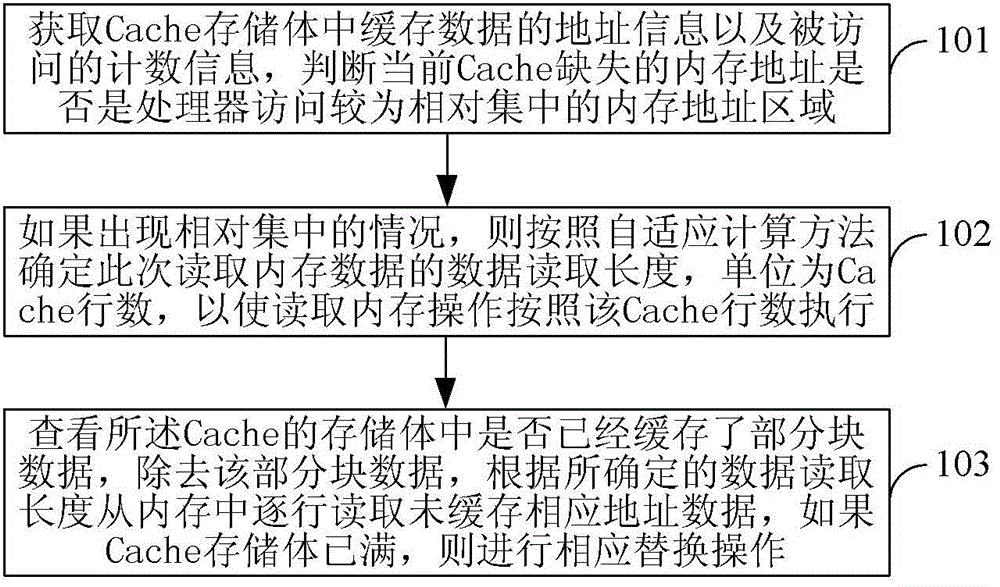

[0039] Embodiment 1: a data block length adaptive reading method for a Cache, comprising:

[0040] When the last-level Cache of the processor misses, obtaining the cached data information of the Cache;

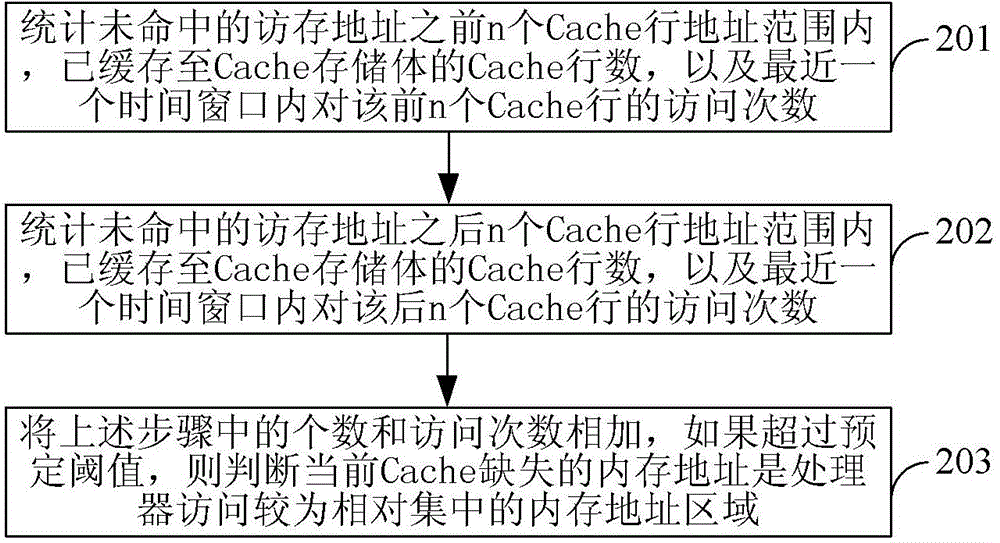

[0041] Judging, according to the cached data information, whether the memory access address of the miss is concentrated with the addresses of the cached data in the Cache;

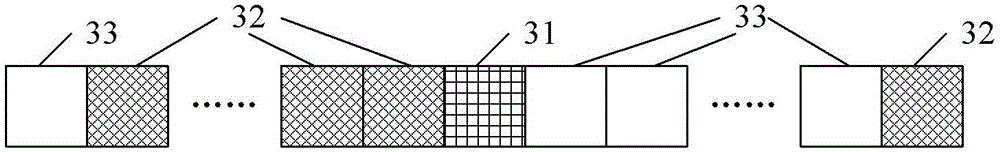

[0042] If they are concentrated, determining a data read length suited to the degree of concentration of the data distribution, in units of Cache lines;

[0043] The processor reading data from the memory into the Cache according to the determined data read length, centered on the memory access address of the miss.
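The four steps above can be illustrated with a minimal sketch. It is not the patented implementation: the 64-byte line size, the window of 8 lines, the threshold of 4 nearby lines, the cap of 8 lines, the rule that the read length equals the number of nearby cached lines, and the fallback to a single line when the addresses are not concentrated are all assumptions made for illustration, as are the function names.

```python
from typing import Iterable, Tuple

LINE_SIZE = 64  # bytes per Cache line (assumed; not stated in the source)


def line_index(addr: int) -> int:
    """Map a byte address to the index of the Cache line that holds it."""
    return addr // LINE_SIZE


def count_nearby_lines(miss_addr: int, cached_addrs: Iterable[int],
                       window_lines: int) -> int:
    """Count distinct cached lines within window_lines of the missed line."""
    miss_line = line_index(miss_addr)
    nearby = {line_index(a) for a in cached_addrs
              if abs(line_index(a) - miss_line) <= window_lines}
    return len(nearby)


def choose_read_length(miss_addr: int, cached_addrs: Iterable[int],
                       window_lines: int = 8, threshold: int = 4,
                       max_lines: int = 8) -> int:
    """Return a read length in Cache lines.

    Hypothetical policy: the addresses count as concentrated when at least
    `threshold` cached lines lie near the miss; the read length then grows
    with the number of nearby lines, capped at `max_lines`.
    """
    nearby = count_nearby_lines(miss_addr, cached_addrs, window_lines)
    if nearby < threshold:
        return 1  # assumed fallback: fetch only the missed line
    return min(max_lines, nearby)


def read_window(miss_addr: int, length_lines: int) -> Tuple[int, int]:
    """Return (first_line, end_line_exclusive), centered on the missed line."""
    miss_line = line_index(miss_addr)
    start = max(0, miss_line - length_lines // 2)
    return start, start + length_lines
```

For example, with cached data in lines 98, 99, 101, 102 and 103 and a miss in line 100, `choose_read_length` returns 5 and `read_window` selects lines 98 to 102. With cached data scattered far from the miss, the sketch falls back to reading one line.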

[0044] In this embodiment, if the memory access address of the miss is relatively concentrated with the addresses of the cached data in the Cache, the memory address that currently misses in the Cache lies in a memory hotspot address region accessed by the processor; if this happens, then...

Embodiment 2

[0076] Embodiment 2: a data block length adaptive reading device for a Cache, comprising:

[0077] A cached data information obtaining unit, configured to obtain the cached data information of the Cache when the last-level Cache of the processor misses;

[0078] A judging unit, configured to judge, according to the cached data information, whether the memory access address of the miss is concentrated with the addresses of the cached data in the Cache;

[0079] A length determination unit, configured to determine, when the addresses are concentrated, a data read length suited to the degree of concentration of the data distribution, in units of Cache lines;

[0080] A reading unit, configured to read data from the memory into the Cache according to the determined data read length, centered on the memory access address of the miss.

[0081] In one implementation of this embodiment, the cached data information may include the address information of the cached data and a count of the number of accesses. ...
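The device structure described above can be modeled in software as four cooperating units operating on records that carry an address and an access count. The following is only a sketch under stated assumptions: all class and method names are hypothetical, and the use of summed access counts as the concentration measure, the threshold of 6, the `weight // 4` length rule, the cap of 8 lines, and the single-line fallback are illustrative choices not taken from the source.

```python
from dataclasses import dataclass
from typing import Dict, List

LINE_SIZE = 64  # bytes per Cache line (assumed)


@dataclass(frozen=True)
class CachedEntry:
    address: int       # address information of the cached data
    access_count: int  # count of accesses to that data


class InfoObtainingUnit:
    """Returns the cached data information on a last-level miss."""
    def __init__(self, entries: List[CachedEntry]):
        self._entries = list(entries)

    def obtain(self) -> List[CachedEntry]:
        return list(self._entries)


class JudgingUnit:
    """Judges concentration from access counts near the miss (assumed rule)."""
    def __init__(self, window_lines: int = 8, min_weight: int = 6):
        self.window_lines = window_lines
        self.min_weight = min_weight

    def weight(self, miss_addr: int, entries: List[CachedEntry]) -> int:
        miss_line = miss_addr // LINE_SIZE
        return sum(e.access_count for e in entries
                   if abs(e.address // LINE_SIZE - miss_line) <= self.window_lines)

    def is_concentrated(self, miss_addr: int, entries: List[CachedEntry]) -> bool:
        return self.weight(miss_addr, entries) >= self.min_weight


class LengthDeterminationUnit:
    """Maps the concentration weight to a length in Cache lines (assumed rule)."""
    def __init__(self, max_lines: int = 8):
        self.max_lines = max_lines

    def determine(self, weight: int) -> int:
        return min(self.max_lines, max(2, weight // 4))


class ReadingUnit:
    """Copies lines from a modeled memory into a modeled Cache."""
    def __init__(self, memory: List[str]):
        self.memory = memory  # memory[i] holds the contents of line i

    def read(self, miss_addr: int, length_lines: int, cache: Dict[int, str]) -> None:
        miss_line = miss_addr // LINE_SIZE
        start = max(0, miss_line - length_lines // 2)  # center on the miss
        for line in range(start, min(start + length_lines, len(self.memory))):
            cache[line] = self.memory[line]


class AdaptiveReadDevice:
    def __init__(self, obtaining, judging, length_unit, reading):
        self.obtaining, self.judging = obtaining, judging
        self.length_unit, self.reading = length_unit, reading

    def on_last_level_miss(self, miss_addr: int, cache: Dict[int, str]) -> int:
        entries = self.obtaining.obtain()
        if self.judging.is_concentrated(miss_addr, entries):
            length = self.length_unit.determine(self.judging.weight(miss_addr, entries))
        else:
            length = 1  # assumed fallback when addresses are not concentrated
        self.reading.read(miss_addr, length, cache)
        return length
```

Keeping each unit in its own class mirrors the decomposition in the embodiment, so that a different concentration measure or length rule can be swapped in without touching the reading logic.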
