1278 results about how to "Improve reading speed" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

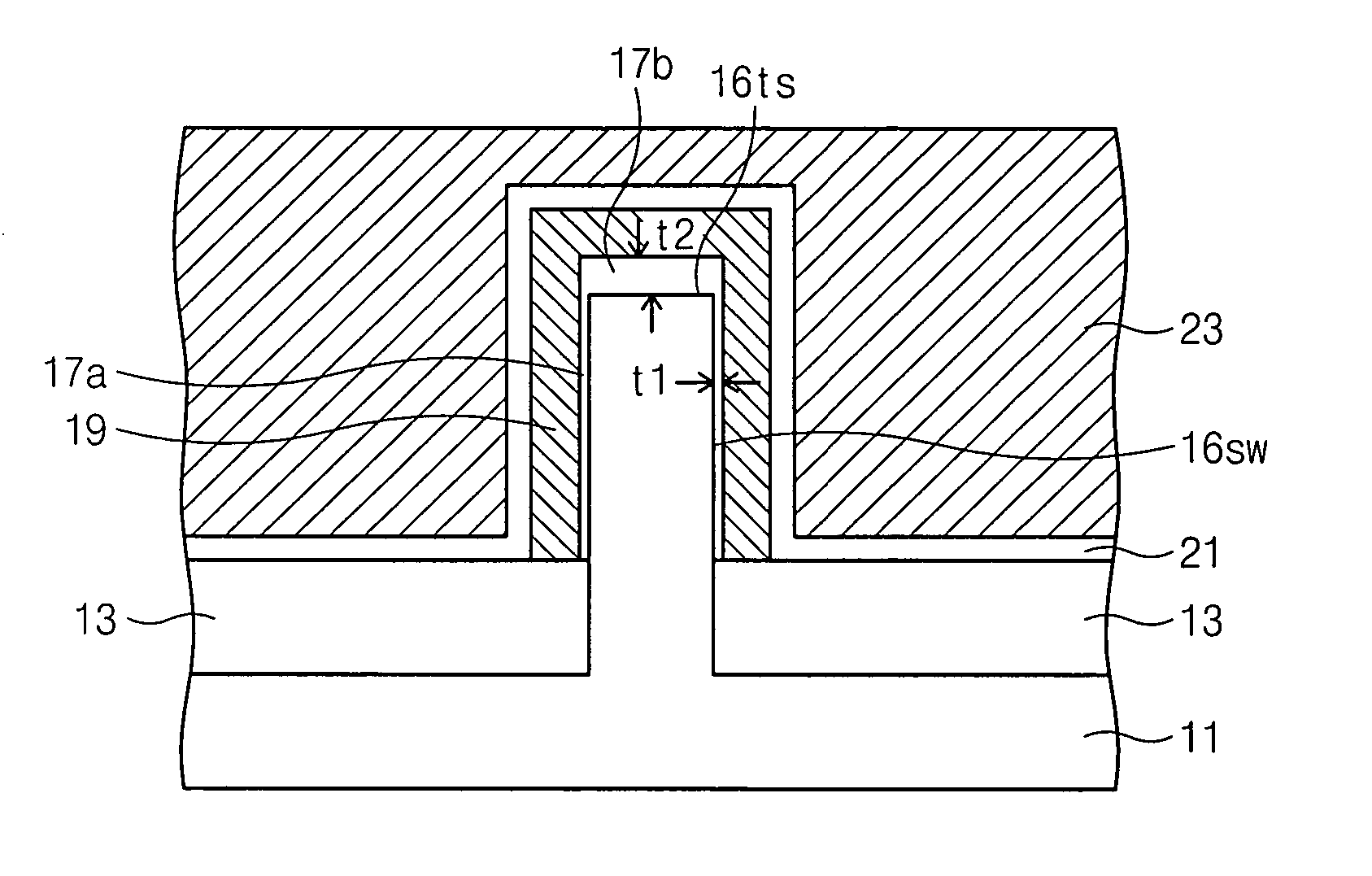

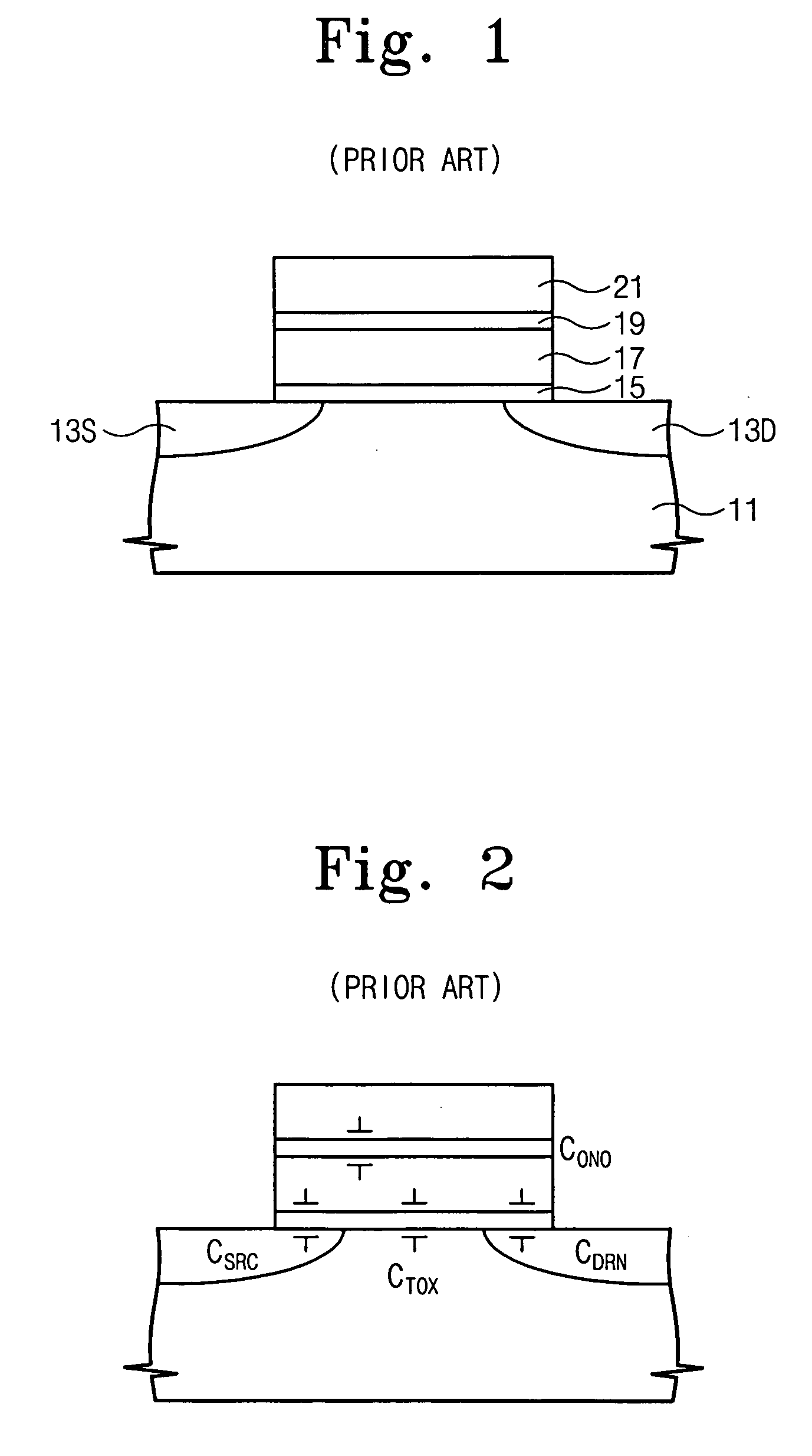

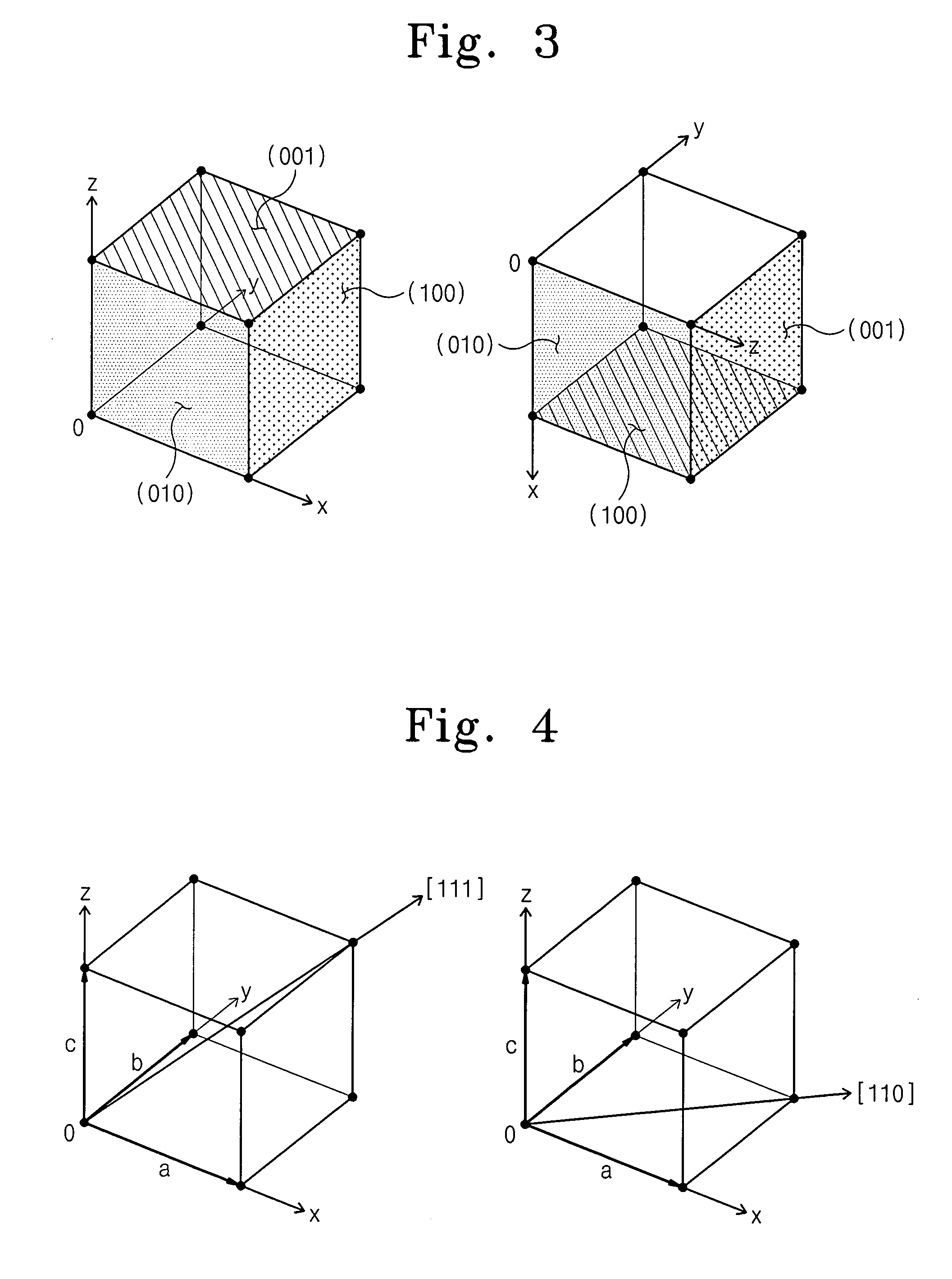

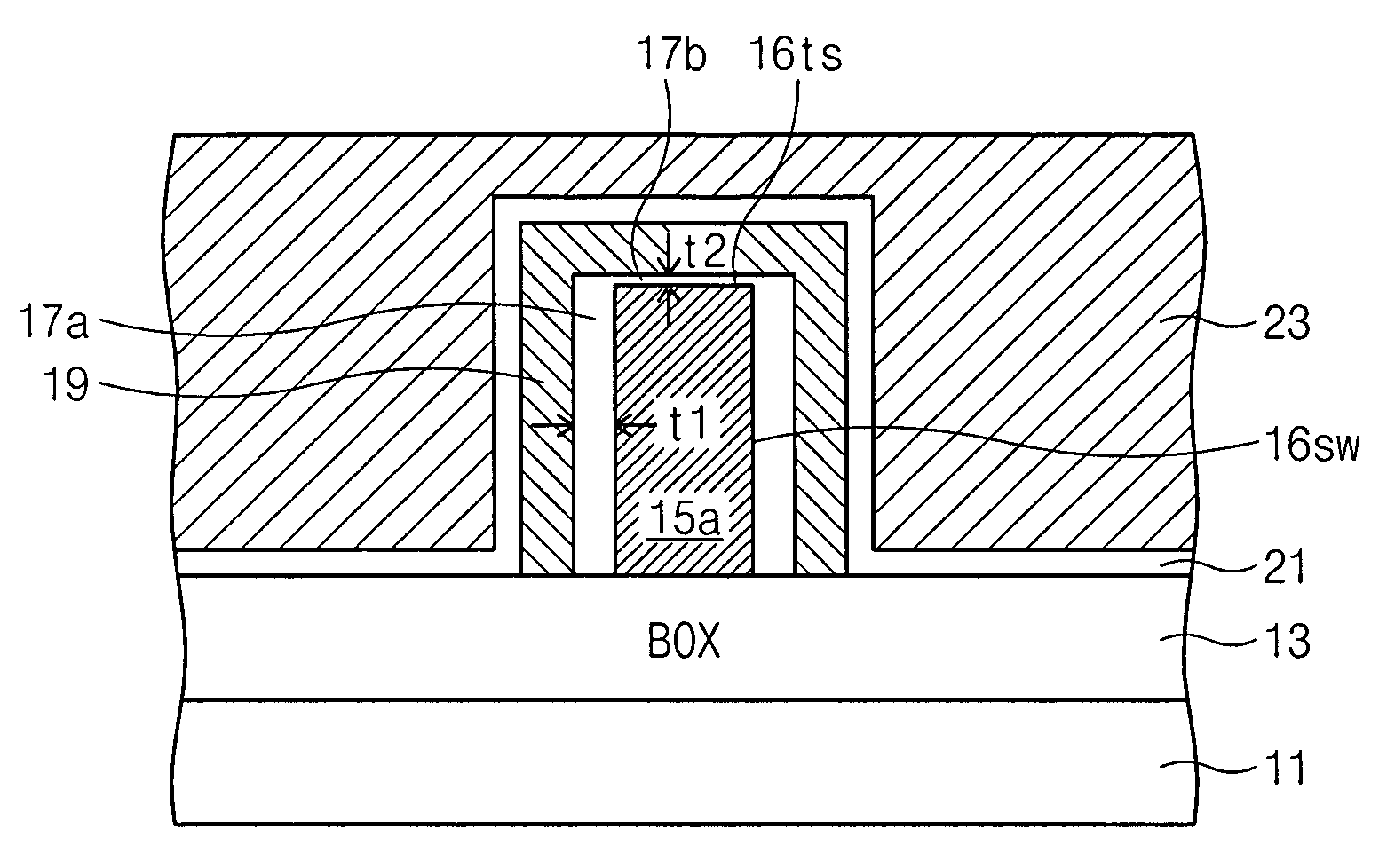

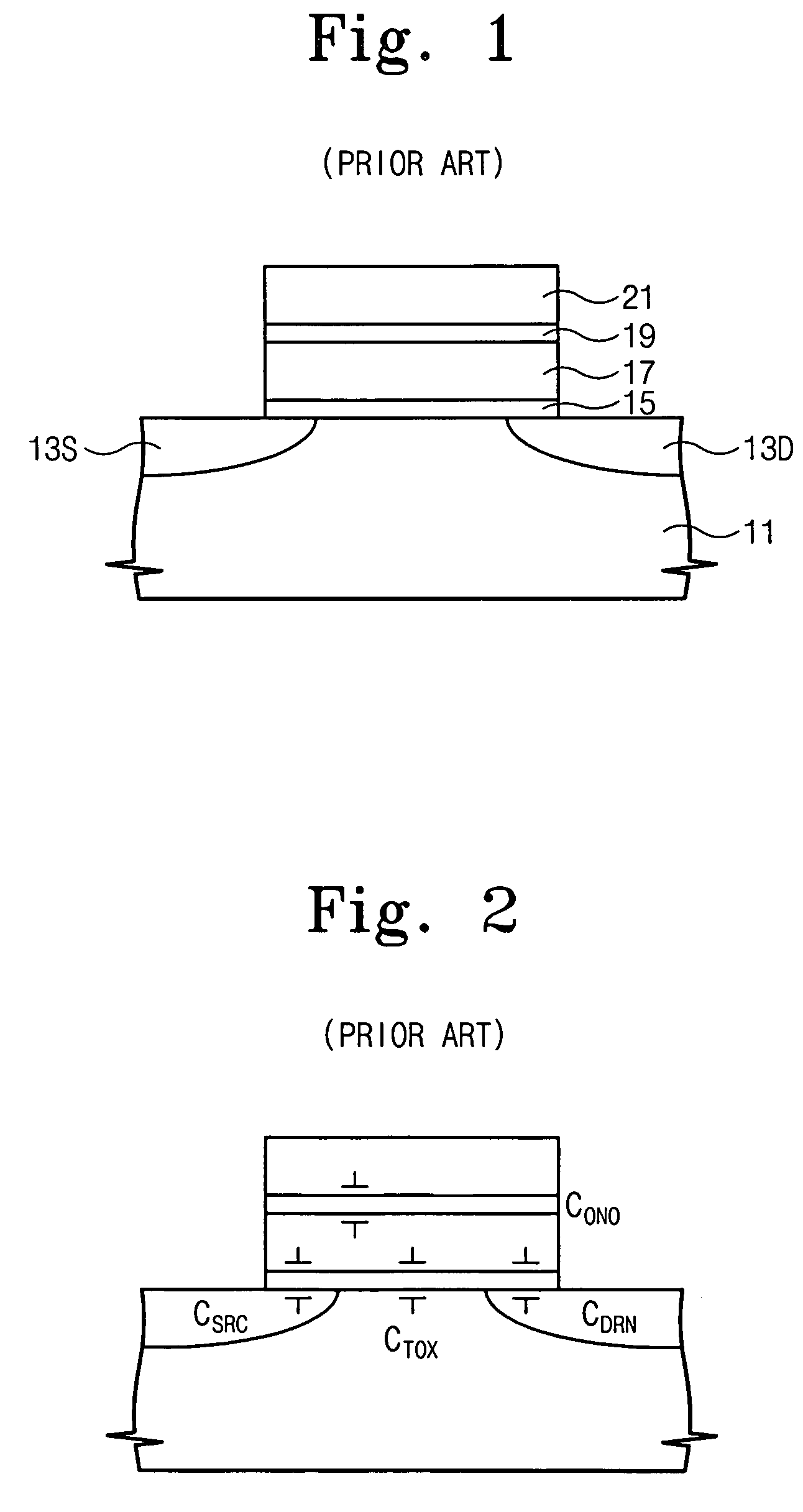

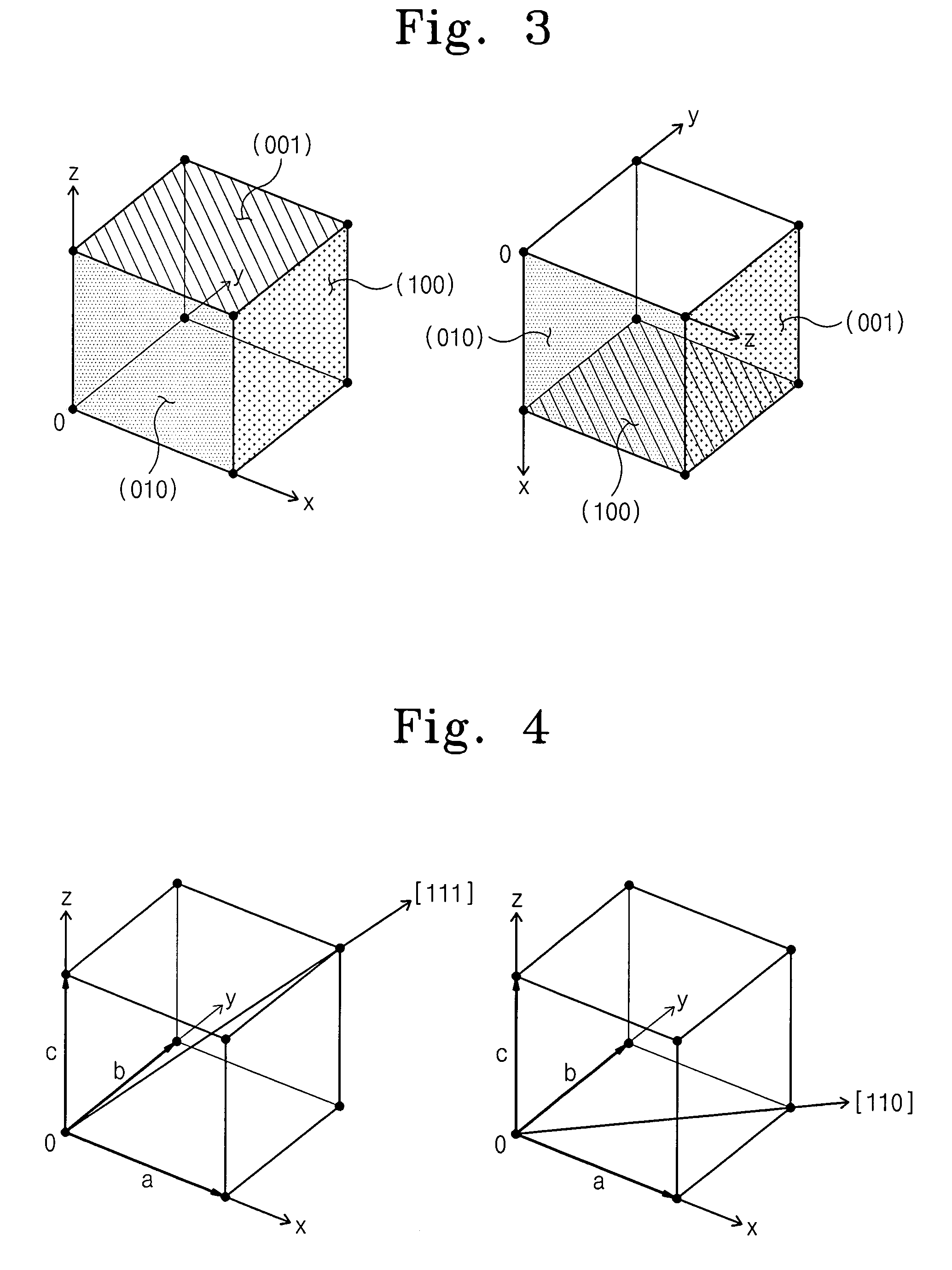

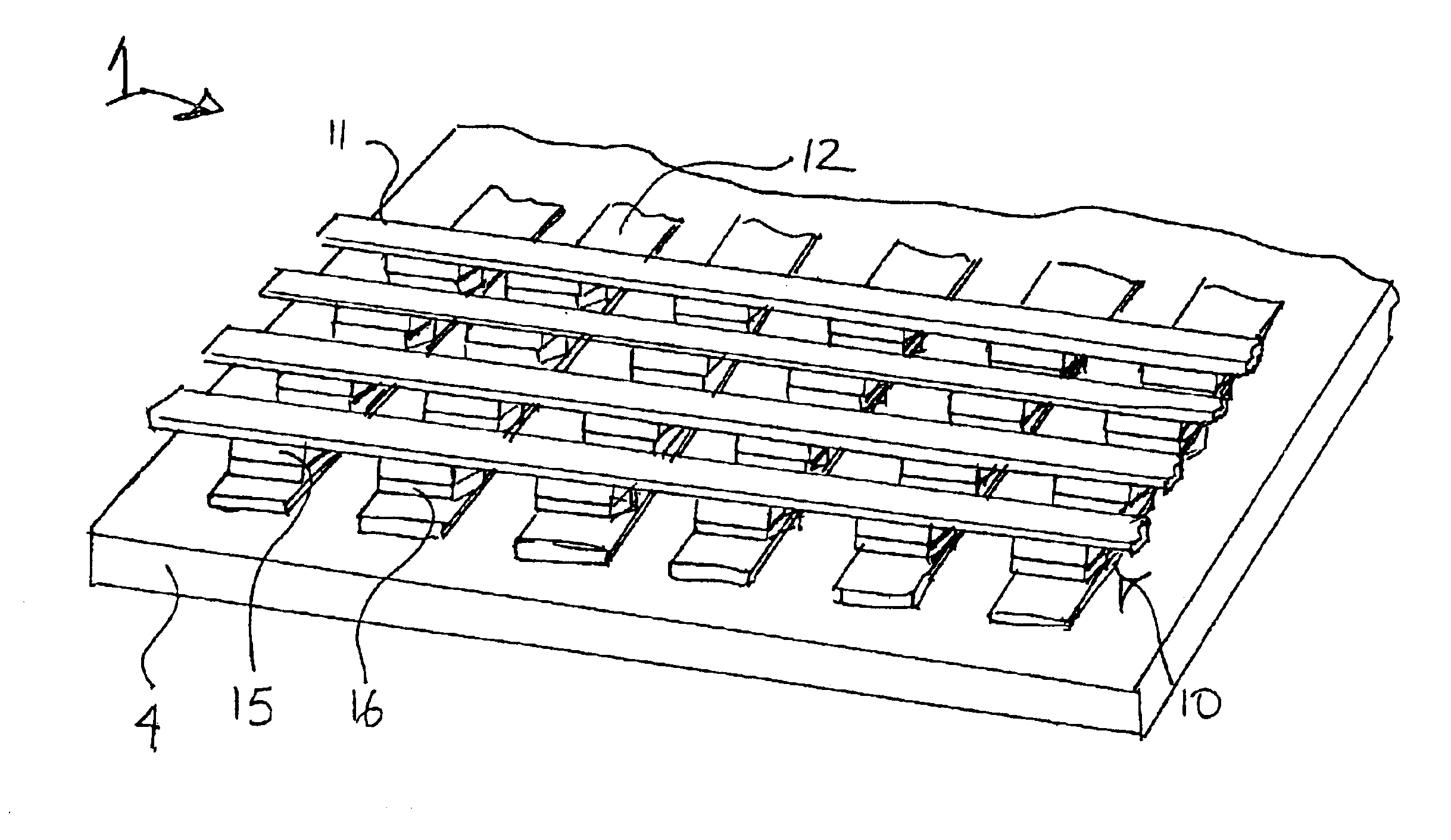

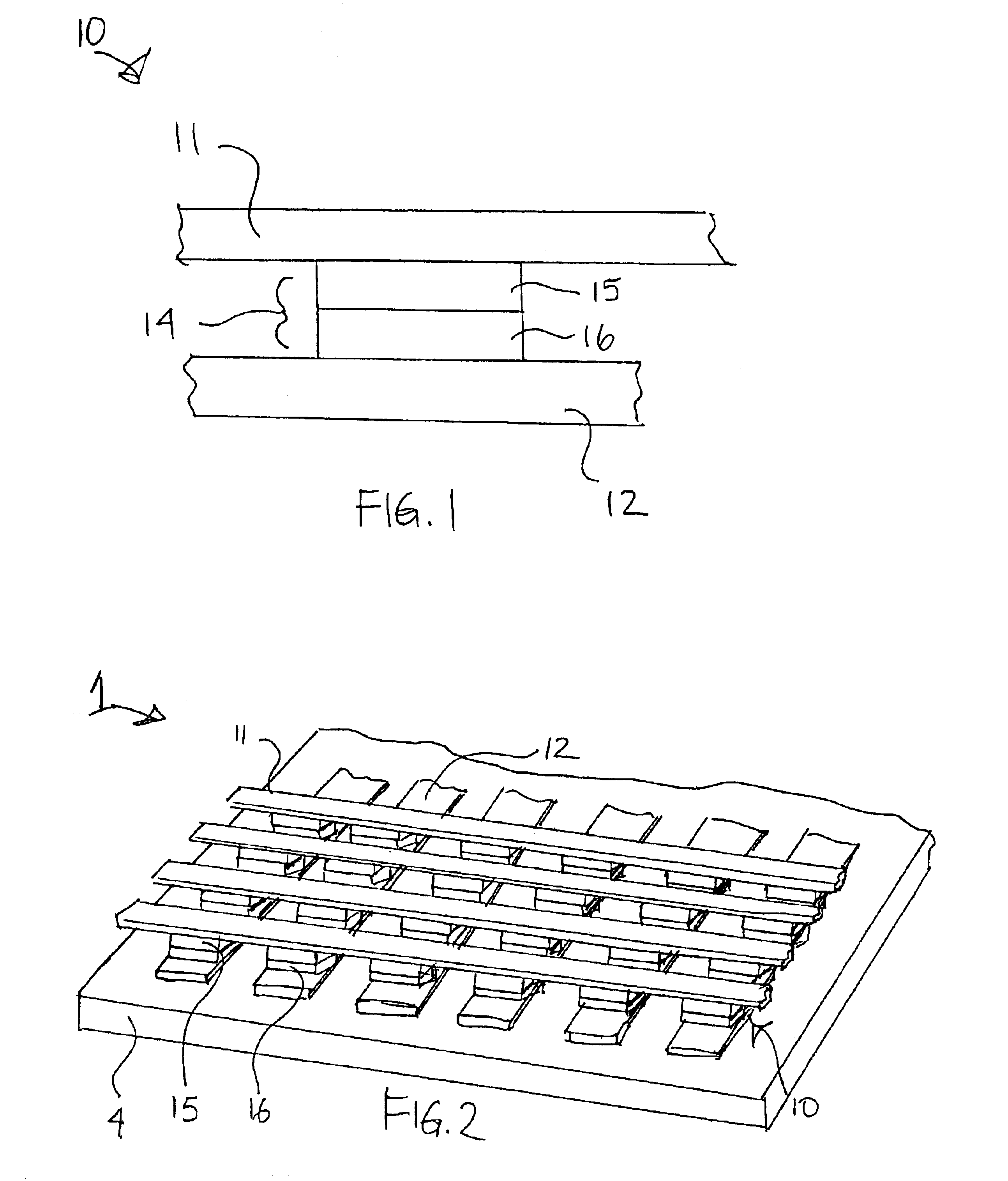

Flash memory device using semiconductor fin and method thereof

Status: Active | Publication: US20060044915A1 | Tags: Improve scalability, Programming and erasing efficiency, Solid-state devices, Semiconductor/solid-state device manufacturing, Coupling ratio, Engineering

A flash memory device according to the present invention includes a semiconductor fin whose top surface and side surface lie on different crystal planes. Insulating layers of different thicknesses are formed on the side surface and the top surface of the fin, and a storage electrode, a gate insulating layer and a control gate electrode are formed sequentially on these insulating layers. The thin insulating layer allows charge to be injected or emitted through it, while the thick insulating layer increases the coupling ratio. This increases the efficiency of program and erase operations in the flash memory device.

Owner:SAMSUNG ELECTRONICS CO LTD
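For orientation, a common first-order model of a floating-gate cell (a standard textbook relation, not taken from this patent) expresses the gate coupling ratio as the share of the control-gate voltage that reaches the storage electrode, neglecting stored charge and parasitic capacitances. The symbols below are labels chosen here for illustration:

$$
\alpha_{G} = \frac{C_{G}}{C_{G} + C_{T}}, \qquad V_{SE} \approx \alpha_{G}\, V_{CG}, \qquad C = \frac{\varepsilon A}{t}
$$

Here $C_{G}$ is the capacitance between the control gate electrode and the storage electrode, $C_{T}$ is the total capacitance between the storage electrode and the fin through the insulating layers on the top and side surfaces, and $t$ is an insulator thickness in the parallel-plate estimate. Under this model, thickening the insulating layer on one fin surface lowers its contribution to $C_{T}$ and so raises $\alpha_{G}$, which is consistent with the abstract's statement that the thick insulating layer increases the coupling ratio.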

Flash memory device using semiconductor fin and method thereof

Status: Active | Publication: US7285820B2 | Tags: Improve scalability, Programming and erasing efficiency, Transistor, Solid-state devices, Coupling ratio, Crystal plane

A flash memory device according to the present invention includes a semiconductor fin whose top surface and side surface lie on different crystal planes. Insulating layers of different thicknesses are formed on the side surface and the top surface of the fin, and a storage electrode, a gate insulating layer and a control gate electrode are formed sequentially on these insulating layers. The thin insulating layer allows charge to be injected or emitted through it, while the thick insulating layer increases the coupling ratio. This increases the efficiency of program and erase operations in the flash memory device.

Owner:SAMSUNG ELECTRONICS CO LTD

Memory device with active and passive layers

Status: Inactive | Publication: US6858481B2 | Tags: Improve reading speed, Increase write speed, Nanoinformatics, Solid-state devices, Amount of substance, Electron mobility

A memory including memory cells having active and passive layers may store multiple information bits. The active layer may include an organic polymer that has a variable resistance based on the movement of charged species (ions or ions and electrons) between the passive layer and the active layer. The passive layer may be a super-ionic material that has high ion and electron mobility. The active layer may be self-assembled from a monomer in a liquid or gas.

Owner:SPANSION LLC

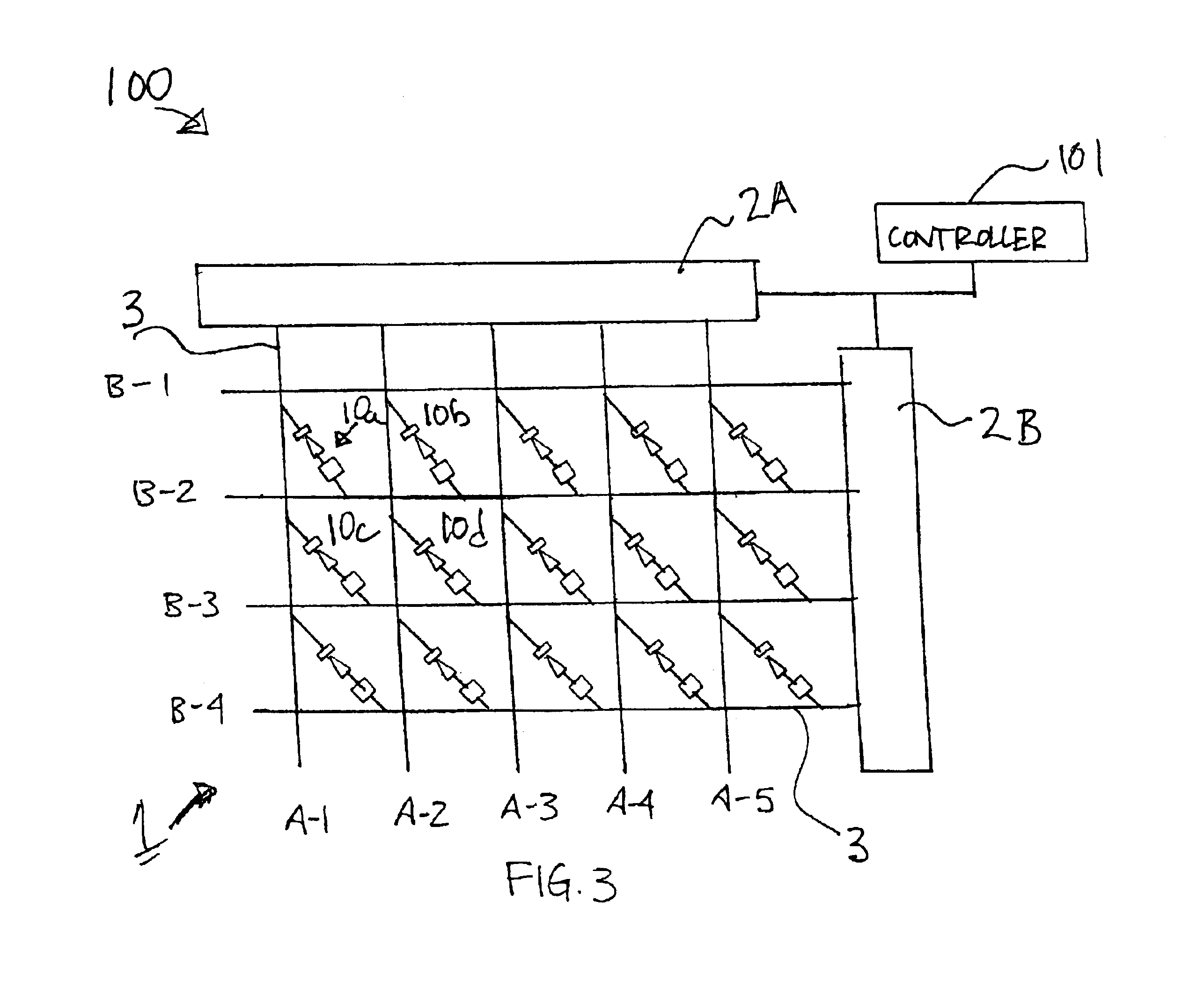

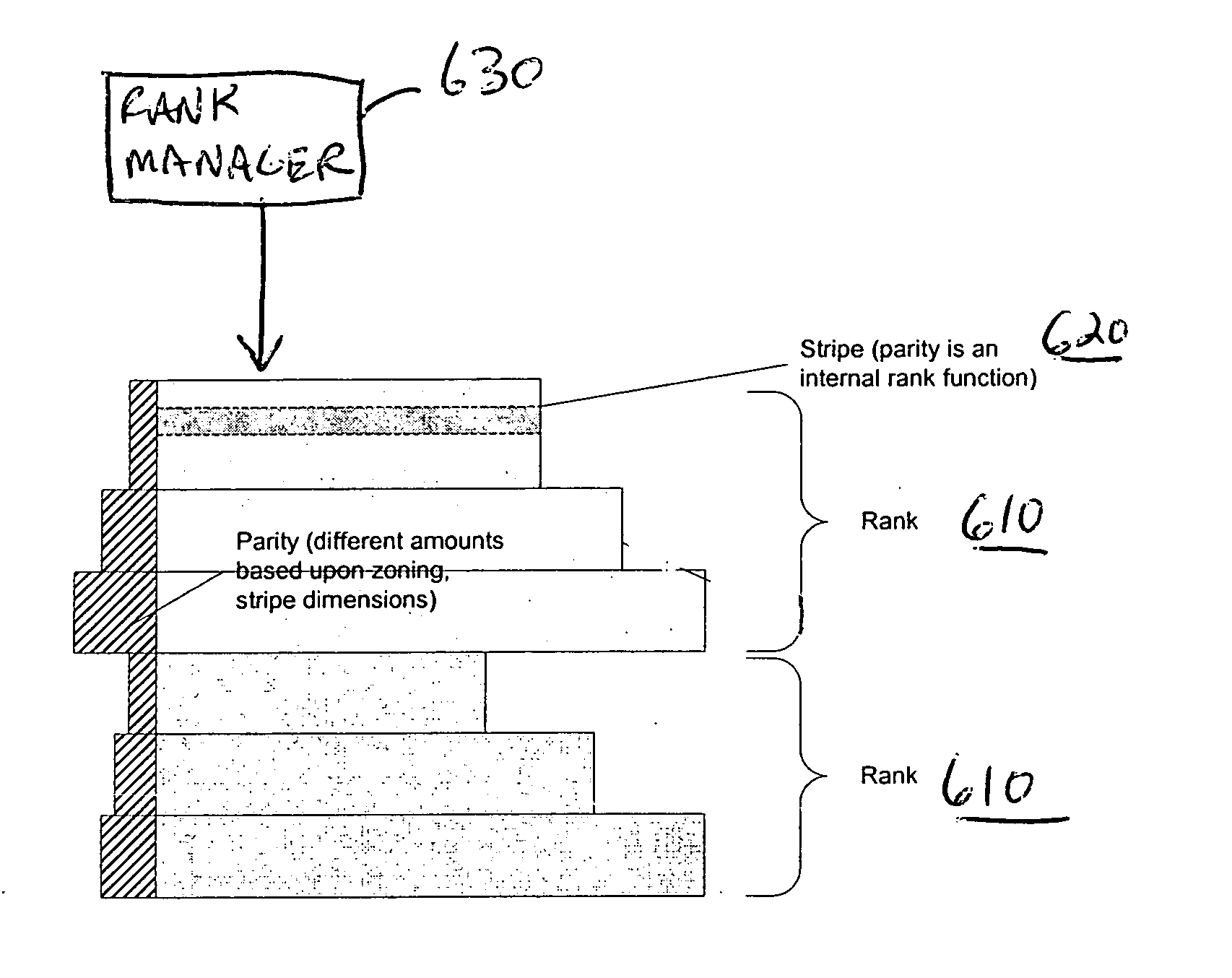

Transaction-based storage system and method that uses variable sized objects to store data

Status: Active | Publication: US20050257083A1 | Tags: Increase write speed, Improve reading speed, Memory loss protection, Error detection/correction, Extendible hashing, Binary tree

The present invention provides a storage system that stores data as variable-sized objects. The storage system is preferably transaction-based and is preferably implemented using arrays of disks arranged in ranks. Each rank includes multiple stripes, and each stripe can be read and written as a single unit for maximum performance. A rank manager can dynamically reconfigure the ranks to accommodate failed and added disks by selectively shortening and lengthening the stripes. The storage system may include a stripe space table whose entries describe the amount of space used in each stripe. An object map provides an entry for each object in the storage system describing its location (e.g., rank, stripe and offset values), its length and its version. A volume index translates regions of logical storage into object identifiers. The storage system may implement various formats, such as I-node, binary tree and extendible hashing formats.

Owner:COUSINS ROBERT E
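The stripe space table and object map described in this abstract can be illustrated with a minimal in-memory sketch. Everything here is an assumption for illustration: the class and field names are hypothetical, stripes are filled first-fit, a rewritten object is appended to new space with an incremented version, and the space of superseded versions is not reclaimed (no disks, no transactions, no garbage collection).

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class ObjectLocation:
    """Hypothetical object-map entry: where an object lives and which version it is."""
    rank: int
    stripe: int
    offset: int
    length: int
    version: int


class ObjectStore:
    """Illustrative sketch of a stripe space table plus an object map."""

    def __init__(self, ranks: int, stripes_per_rank: int, stripe_size: int) -> None:
        self.stripe_size = stripe_size
        # Stripe space table: bytes already used in each (rank, stripe).
        self.space_used: Dict[Tuple[int, int], int] = {
            (r, s): 0 for r in range(ranks) for s in range(stripes_per_rank)
        }
        # Object map: object id -> location, length and version.
        self.object_map: Dict[int, ObjectLocation] = {}

    def write(self, obj_id: int, length: int) -> ObjectLocation:
        if length > self.stripe_size:
            raise ValueError("object larger than a stripe")
        # First-fit search over stripes in (rank, stripe) order.
        for (rank, stripe), used in self.space_used.items():
            if used + length <= self.stripe_size:
                previous = self.object_map.get(obj_id)
                version = previous.version + 1 if previous else 1
                location = ObjectLocation(rank, stripe, used, length, version)
                self.space_used[(rank, stripe)] = used + length
                self.object_map[obj_id] = location  # old space is not reclaimed here
                return location
        raise RuntimeError("no stripe has enough free space")
```

With two ranks of two 100-byte stripes, writing object 1 (60 bytes), then object 2 (50 bytes), then object 1 again (40 bytes) places the second write in rank 0, stripe 1, and the rewrite of object 1 at offset 60 of rank 0, stripe 0 as version 2.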

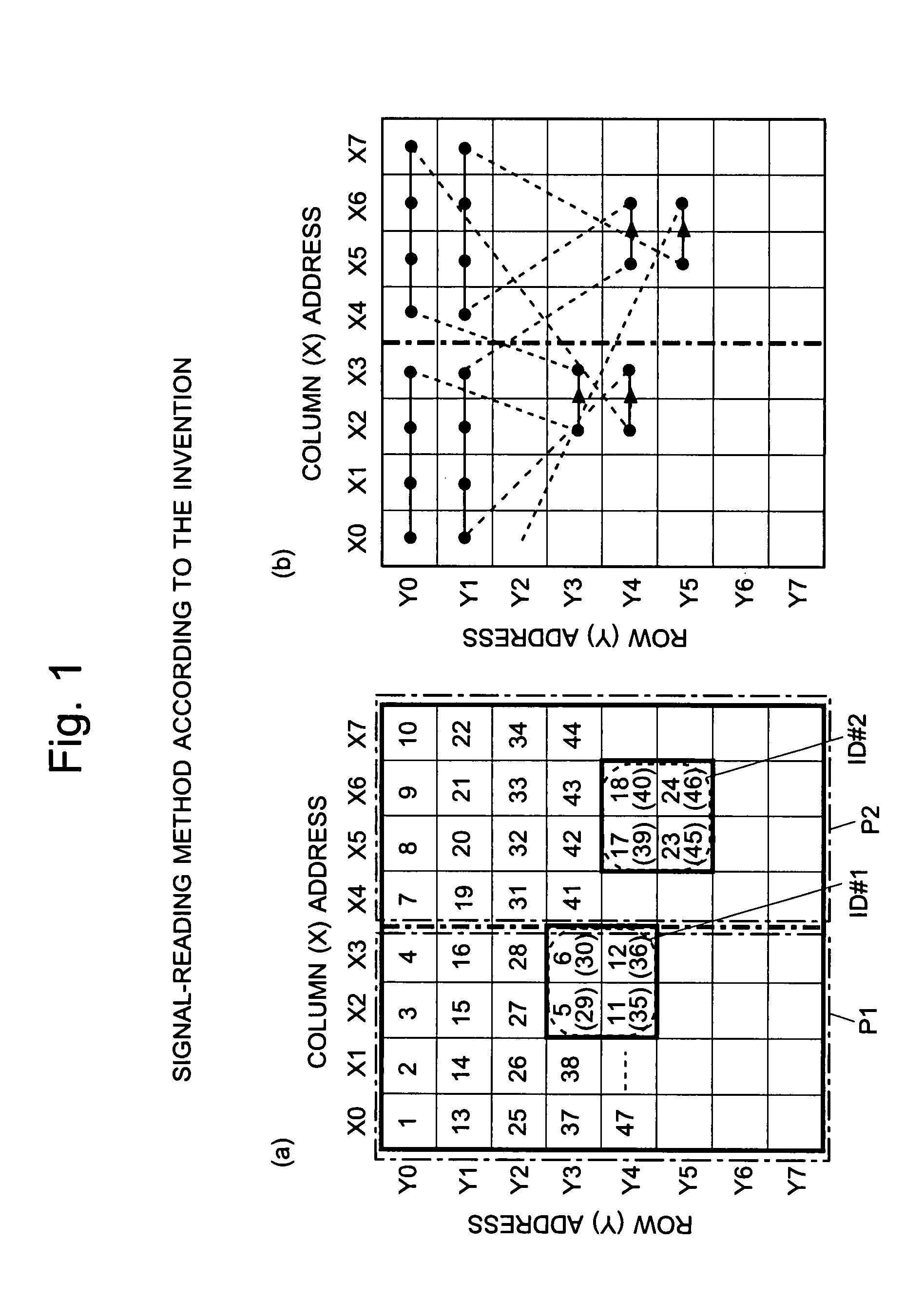

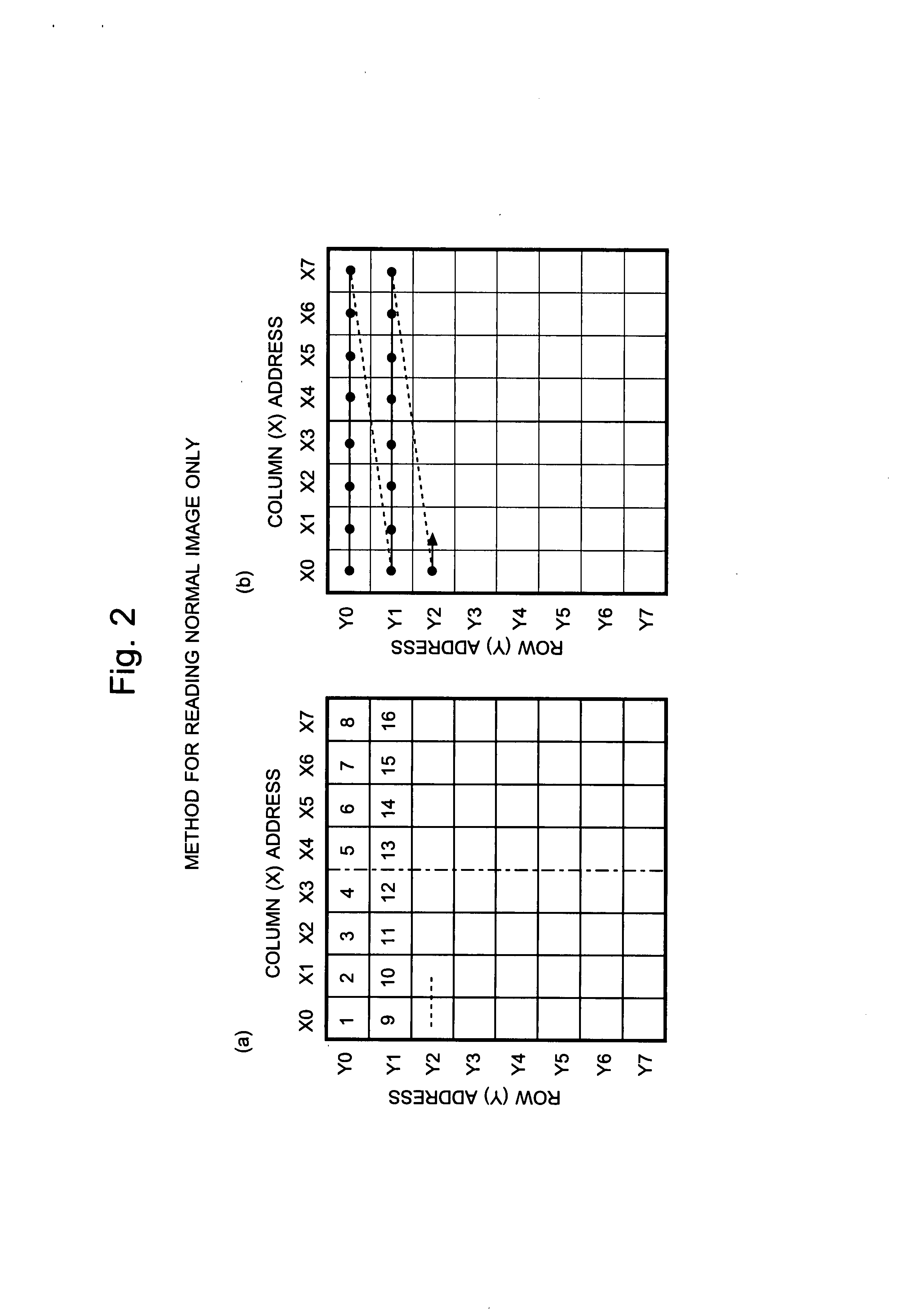

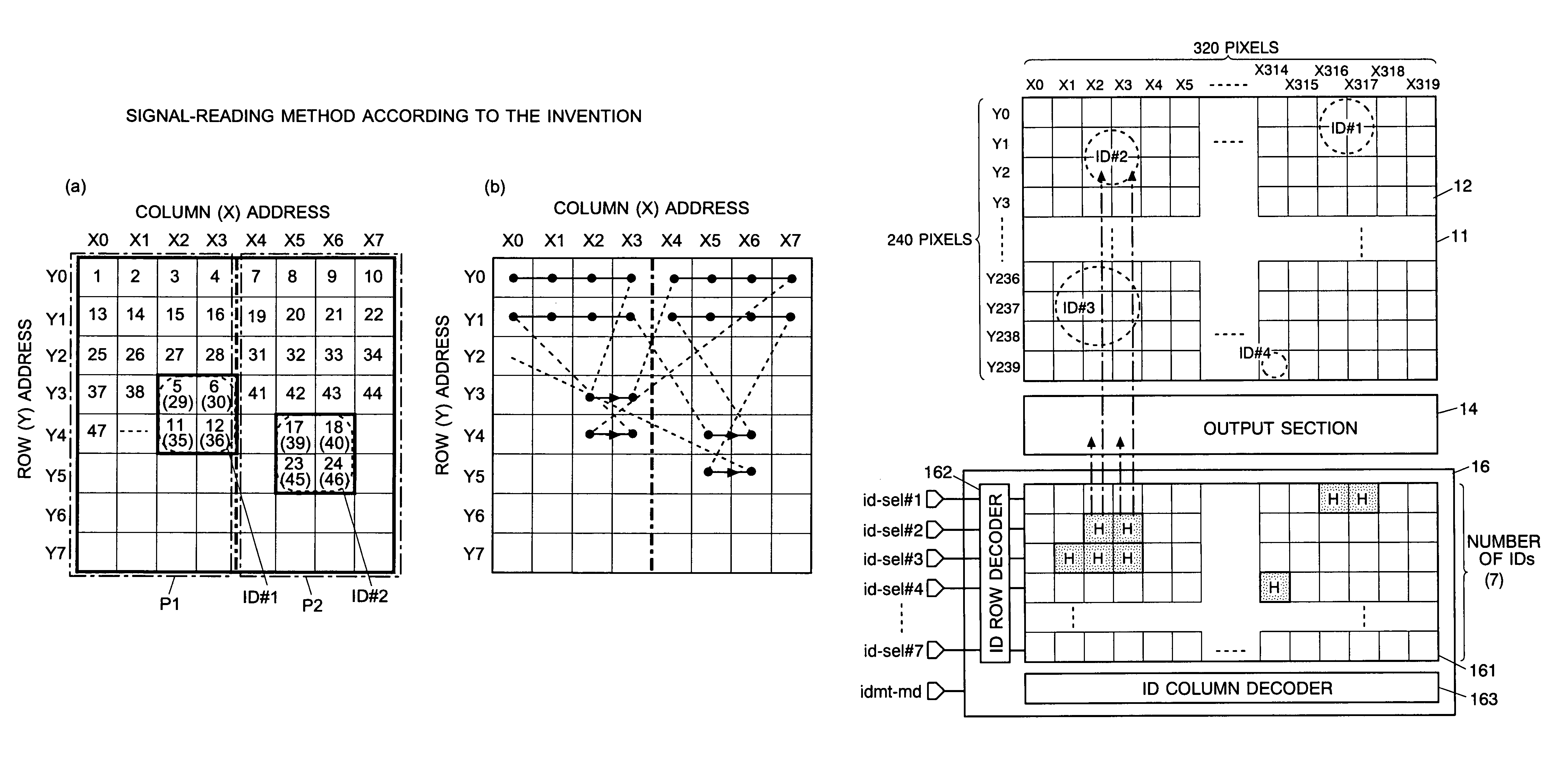

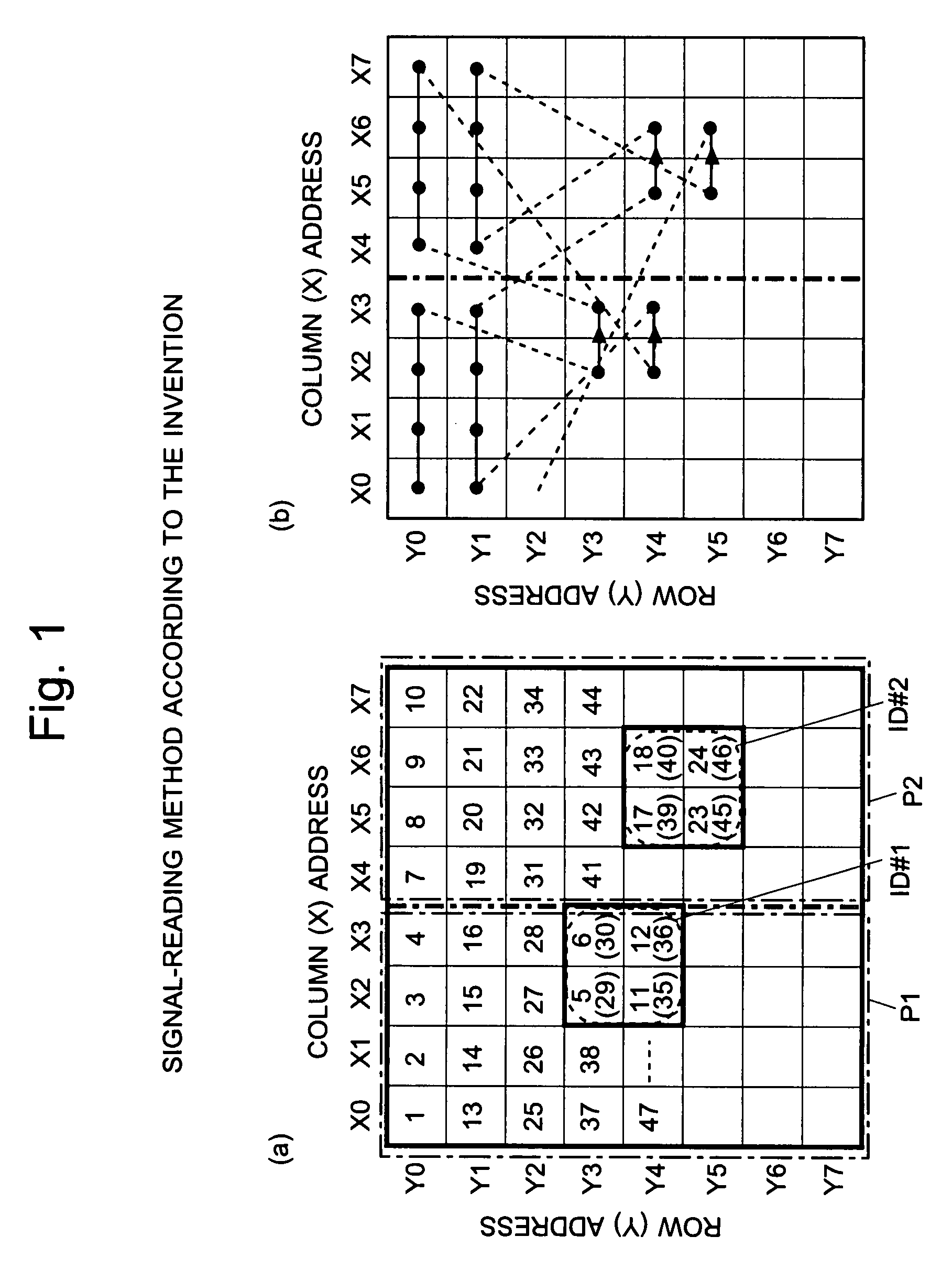

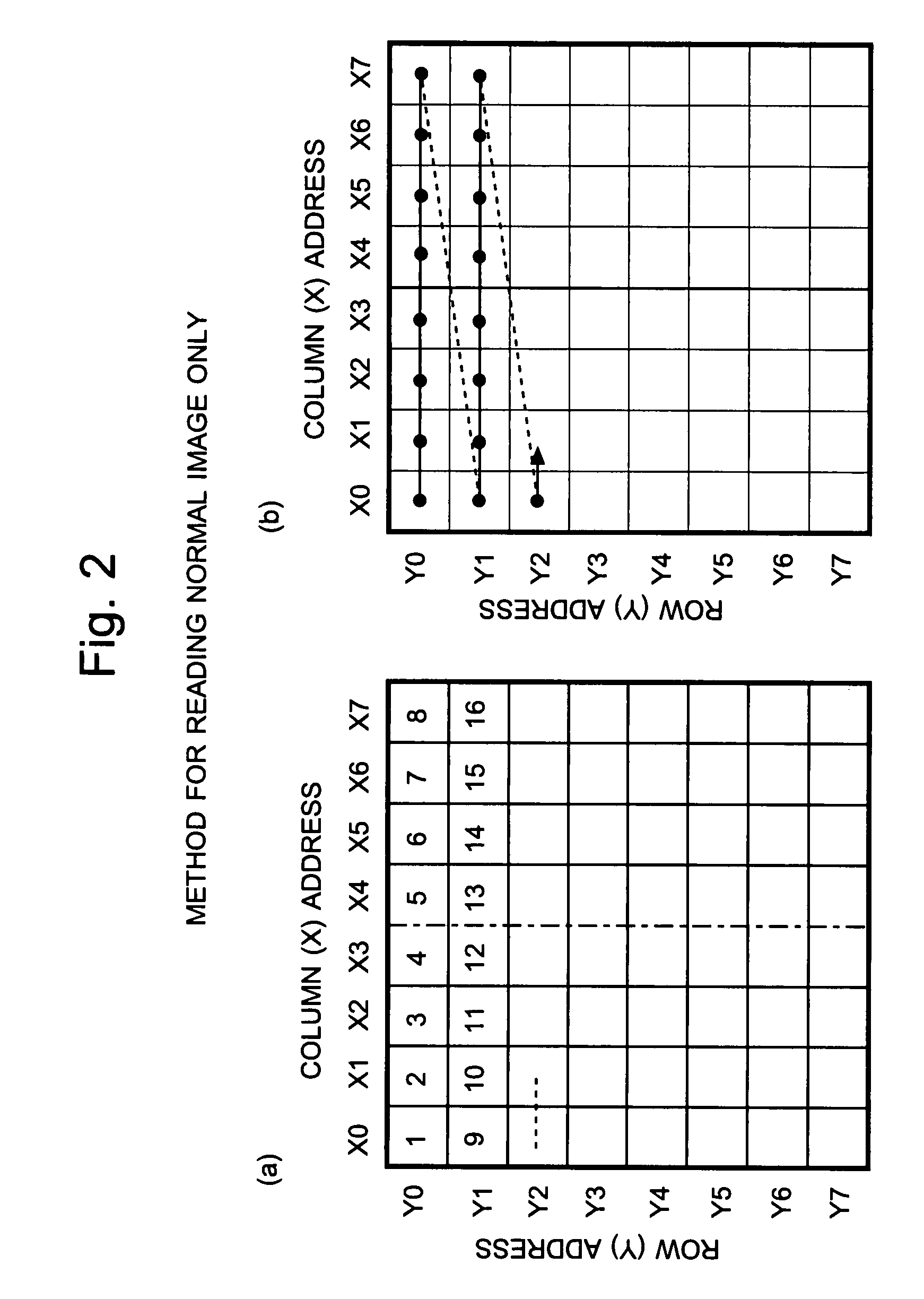

Imaging Device and Method for Reading Signals From Such Device

Status: Inactive | Publication: US20080297615A1 | Tags: Small area, Increase reading speed, Television system details, Television system scanning details, Image sensor, Audio power amplifier

Each pixel cell (12) of an image sensor (10) has a 4-transistor (4-Tr) structure, in which a single transistor for column (X) reset is added to an ordinary 3-Tr APS so that any pixel can be reset selectively while keeping the pixel size small. When pixel signals are read, the period in which the pixel signals of one ordinary frame are read is finely subdivided, so that the signals of the pixels receiving ID light during that period are read out bit by bit, repeatedly. During this readout, current is supplied only for the column being read, to a read amplifier in the pixel cell (12) or to a variable-gain amplifier in an output unit (14), which suppresses power consumption. As a result, lower power consumption and a higher pixel count can be achieved in an image pickup device that both captures an image and acquires the ID information of light beacons within its image pickup range.

Owner:JAPAN SCI & TECH CORP +1

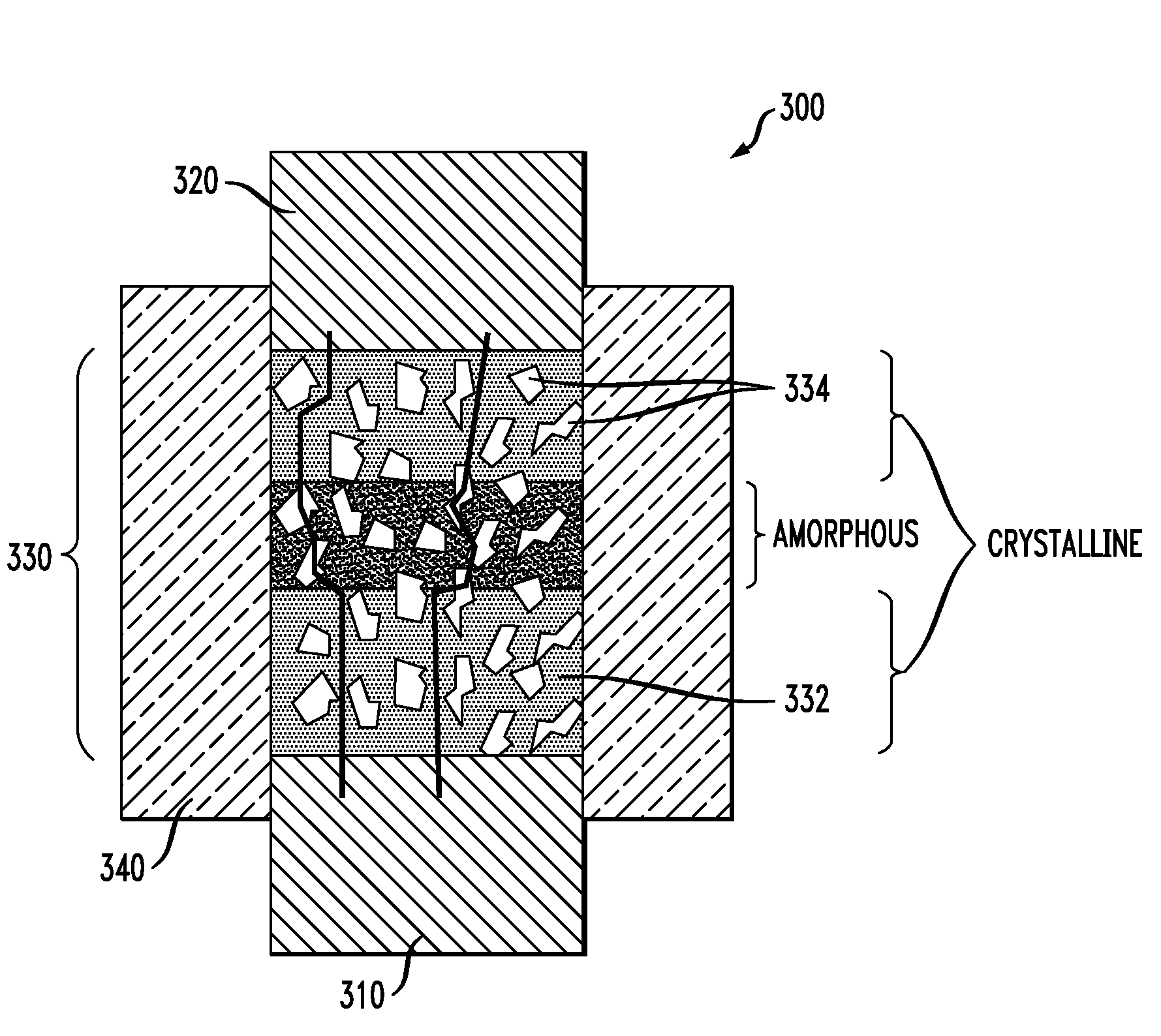

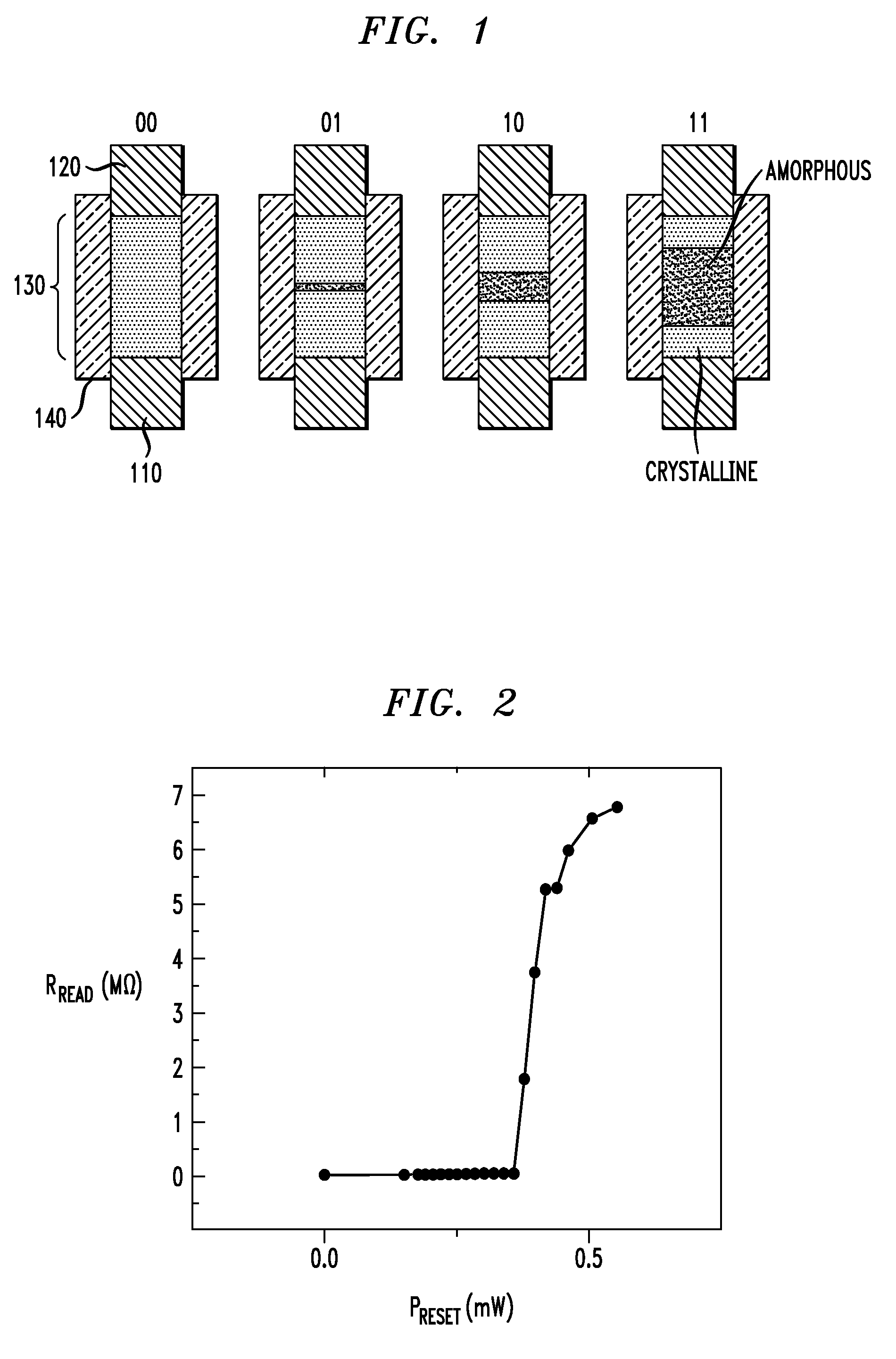

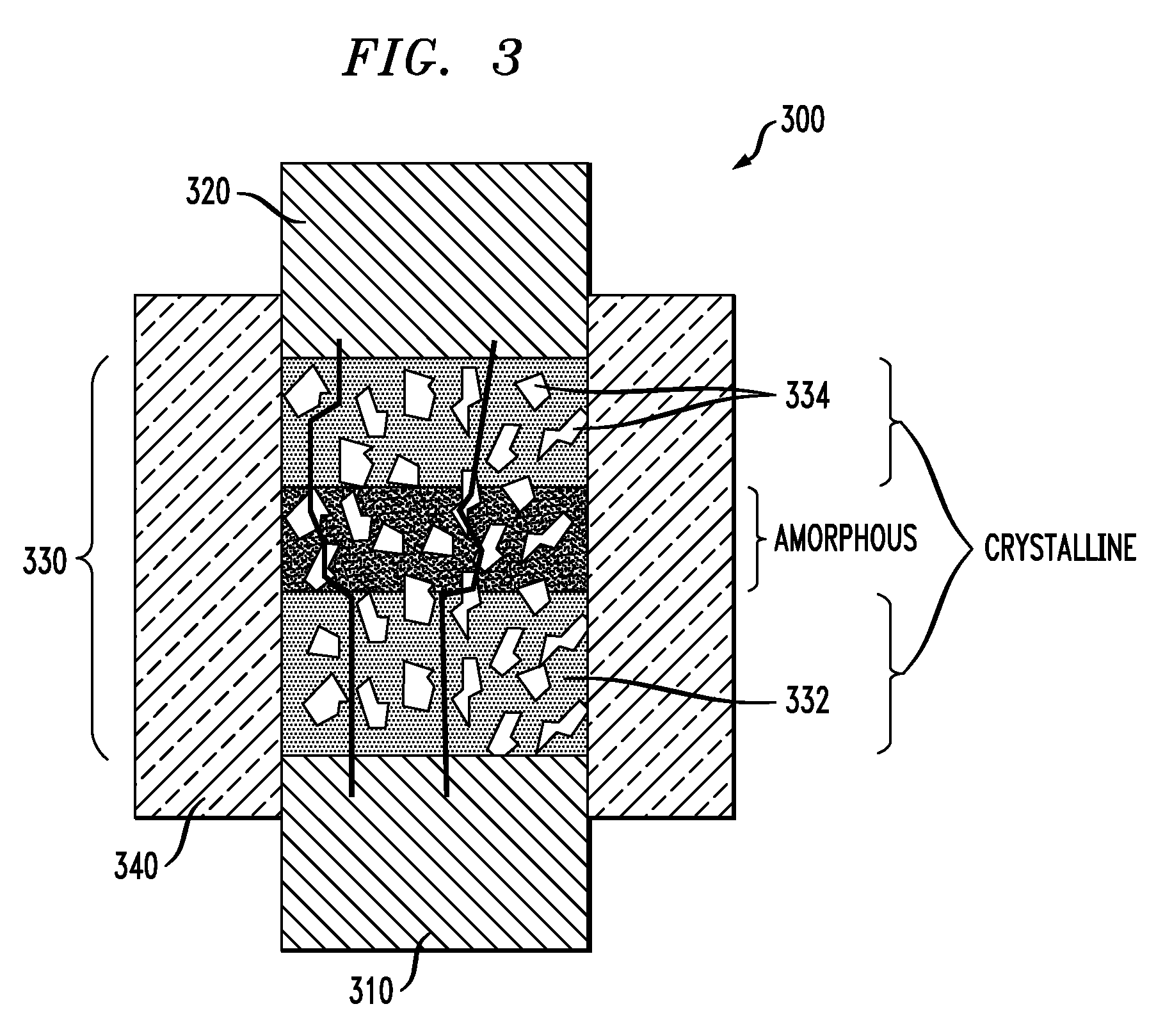

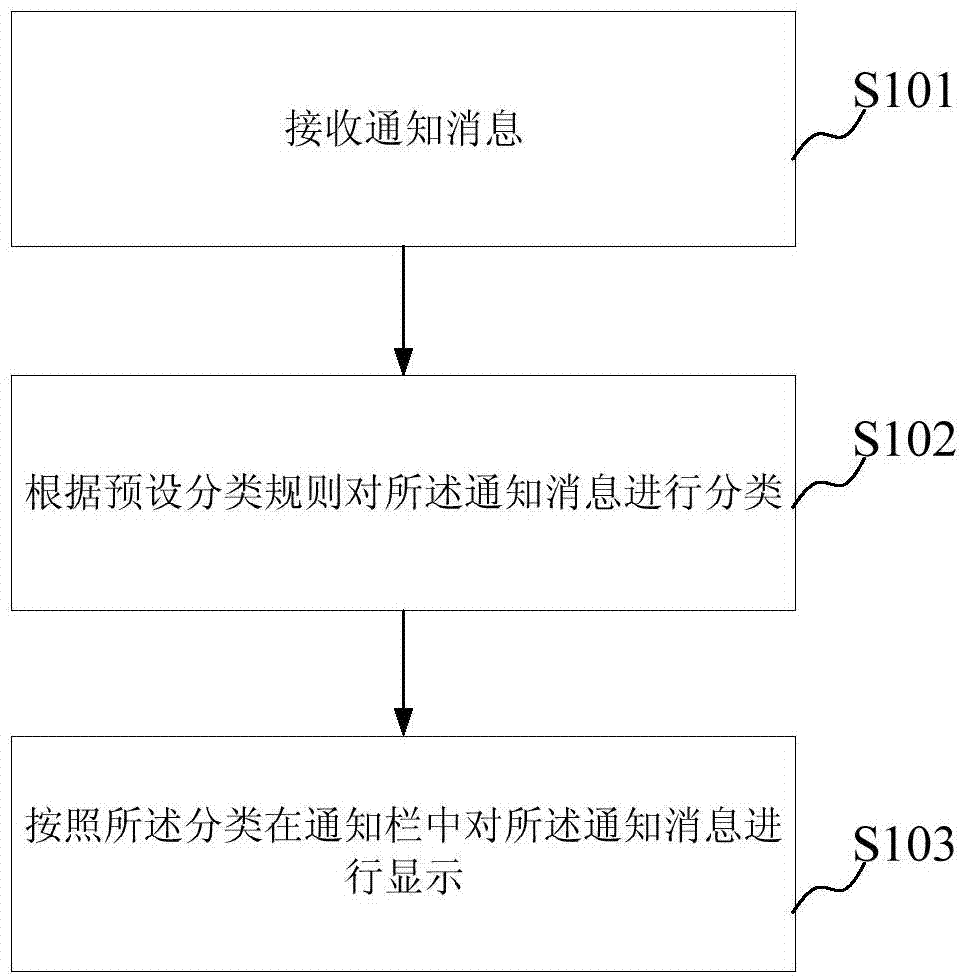

Resistance Limited Phase Change Memory Material

Status: Inactive | Publication: US20080165569A1 | Tags: High resistance value, Slow reading speed, Semiconductor/solid-state device manufacturing, Digital storage, Phase-change material, Phase-change memory

A memory cell comprises a first electrode, a second electrode and a composite material. The composite material electrically couples the first electrode to the second electrode. Moreover, the composite material comprises a phase change material and a resistor material. At least a portion of the phase change material is operative to switch between a substantially crystalline phase and a substantially amorphous phase in response to an application of a switching signal to at least one of the first and second electrodes. In addition, the resistor material has a resistivity lower than that of the phase change material when the phase change material is in the substantially amorphous phase.

Owner:IBM CORP +2

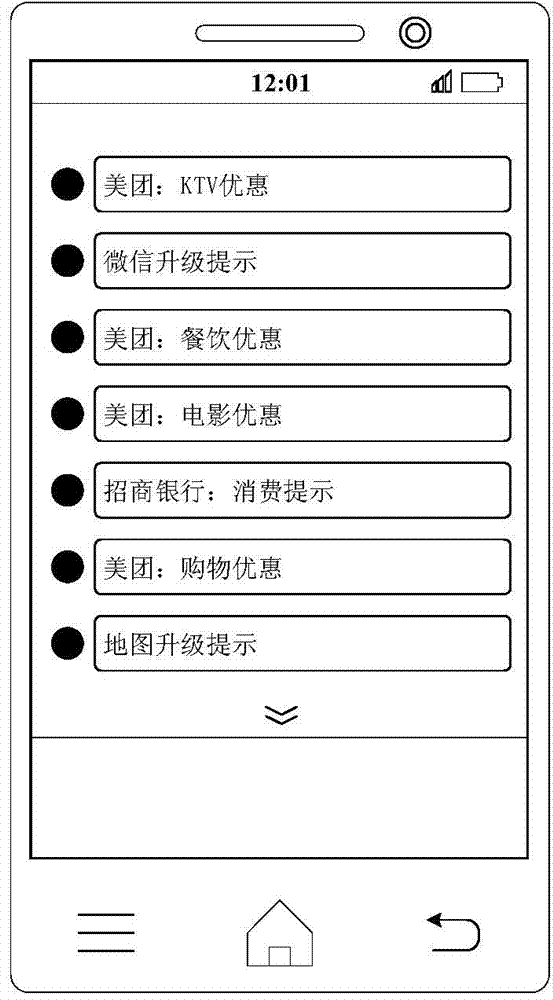

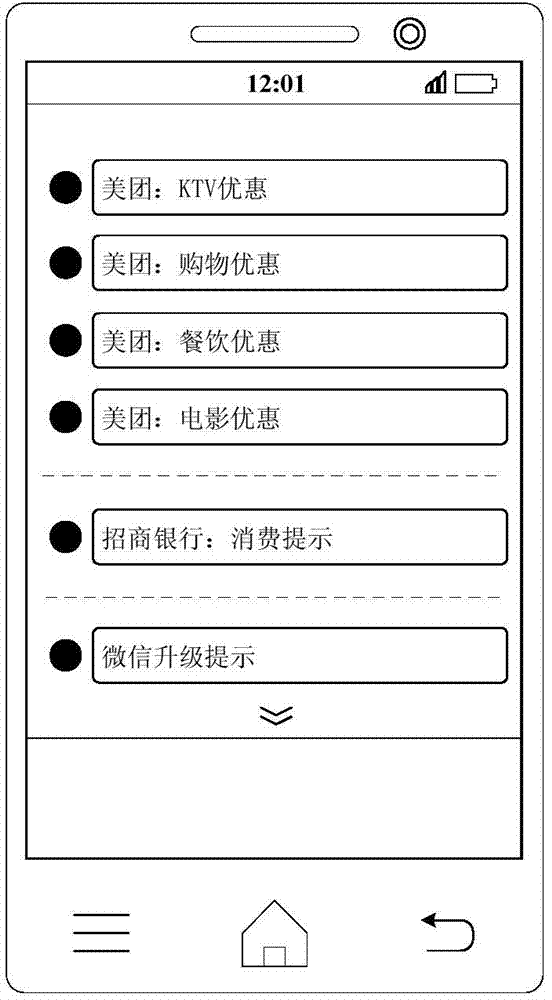

Method and device for displaying notification messages

Status: Active | Publication: CN104506715A | Tags: Improve operational efficiency, Improve reading speed, Substation equipment, Terminal equipment, Computer terminal

The disclosed embodiments provide a method and a device for displaying notification messages on terminal equipment. The method comprises the following steps: receiving notification messages; classifying them according to a preset classification rule; and displaying them in notification bars according to the classification. With this method, terminal equipment such as a mobile phone classifies the notification messages it receives from the system or from each app according to the preset rule (for example, messages from the same source are placed in the same class) and then displays them in notification bars by class. Each notification bar therefore stays concise and orderly, the user can quickly find the messages of interest, reading speed improves, the terminal is operated more efficiently, and the user experience is improved.

Owner:XIAOMI INC
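The classify-then-display flow in this abstract can be sketched as follows, assuming the preset rule is "same source, same class" (the example rule the abstract gives). The `Notification` type, the function names and the one-line-per-class rendering are hypothetical choices for illustration, not the patented implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Notification:
    source: str  # e.g. the app that posted the message
    text: str


def by_source(n: Notification) -> str:
    """Example preset rule: messages from the same source share a class."""
    return n.source


def classify(notifications: List[Notification],
             rule: Callable[[Notification], str] = by_source) -> Dict[str, List[Notification]]:
    """Group notifications by the class the rule assigns; dicts keep first-seen order."""
    groups: Dict[str, List[Notification]] = {}
    for n in notifications:
        groups.setdefault(rule(n), []).append(n)
    return groups


def render(groups: Dict[str, List[Notification]]) -> List[str]:
    """One notification-bar line per class: class name, count and latest message."""
    return [f"{key} ({len(items)}): {items[-1].text}" for key, items in groups.items()]
```

Passing a different `rule` callable (for example, one keyed on message priority) changes the grouping without touching the display code.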

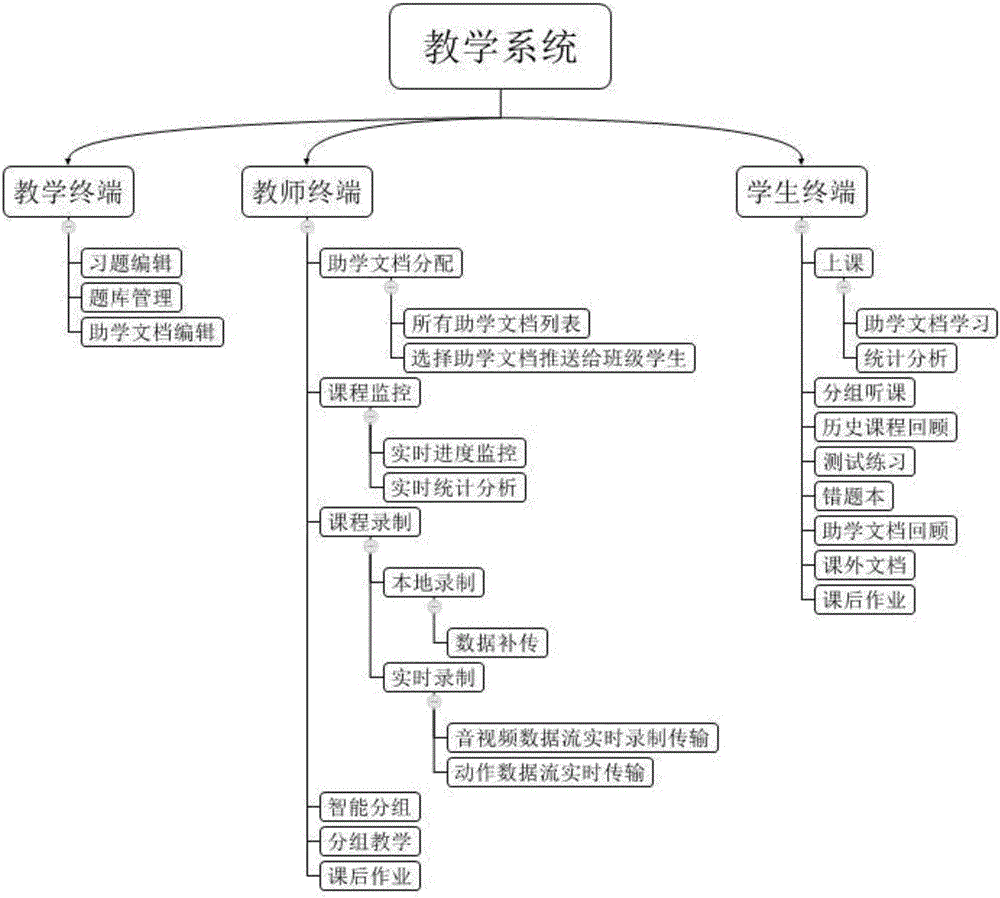

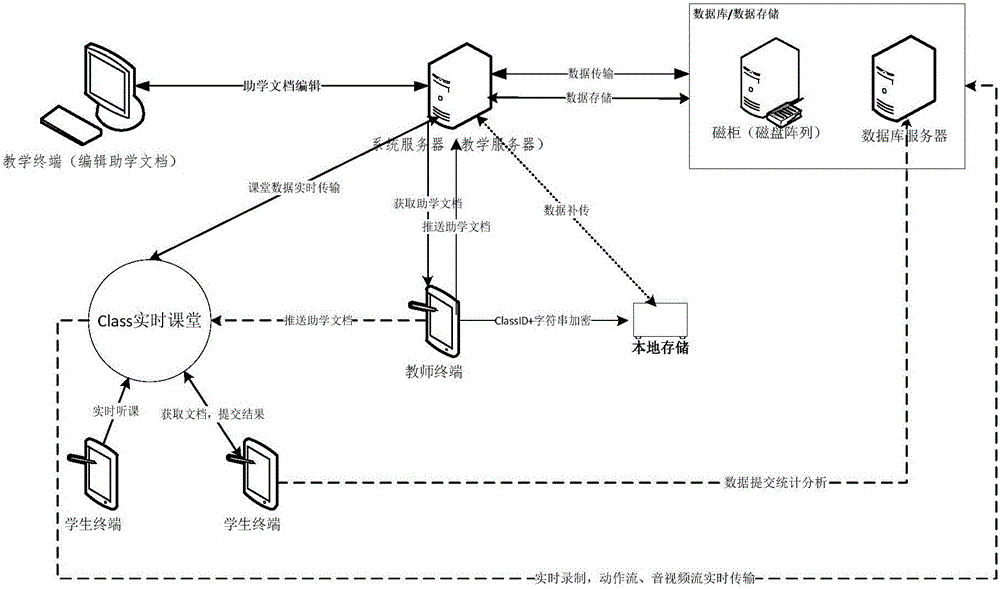

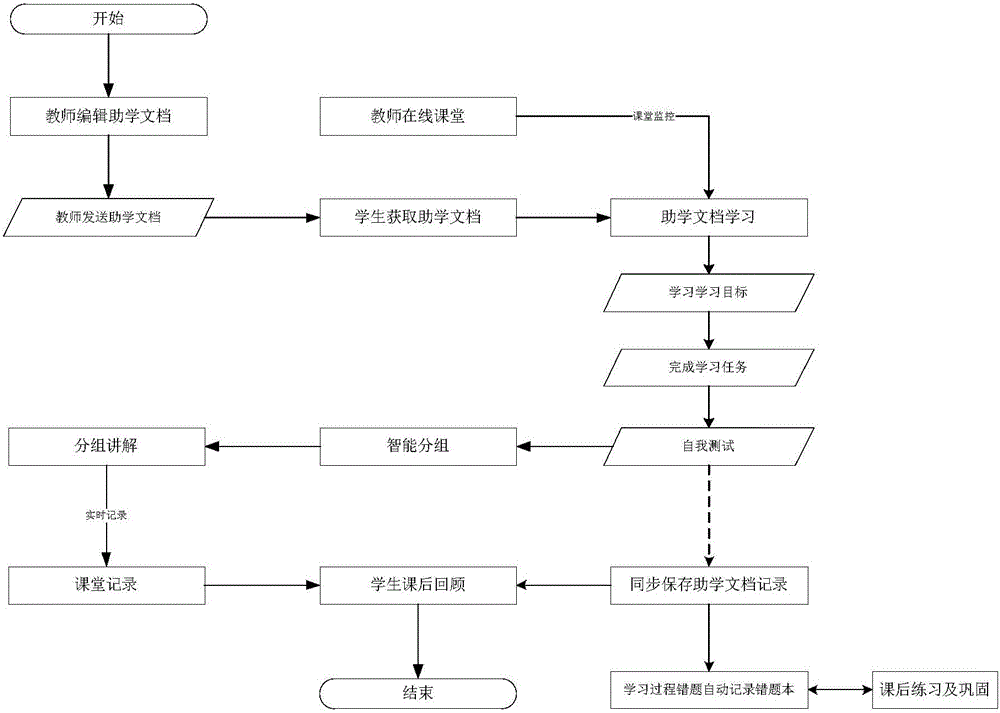

Internet teaching method and system

Status: Active | Publication: CN105869091A | Tags: Improve learning efficiency, Improve learning effect, Data processing applications, Electrical appliances, Timestamp, Source Data Verification

The invention provides an Internet teaching method and system for conducting teaching activities over the Internet, including wireless communication networks, and in particular a method and system that record the Internet teaching process in real time and store it accurately and efficiently in the cloud. The method includes evaluating students' results in learning educational documents in order to distinguish the learning situations of students at different levels, enabling targeted, differentiated teaching, tutoring, homework, exercises and the like. This improves learning efficiency and learning outcomes, and in particular supports continuous improvement for students at different stages of progress. In addition, through a data return gateway, a data verification mechanism, a timestamp verification mechanism, an audio and video stream verification mechanism, a supplementary data transmission mechanism, local fragmentation with encrypted storage and upload, and other means, the recorded data is stored in the cloud efficiently and accurately.

Owner:Yingshuo (Shaoguan) Information Industry Group Co., Ltd. (鹰硕(韶关)信息产业集团有限公司)

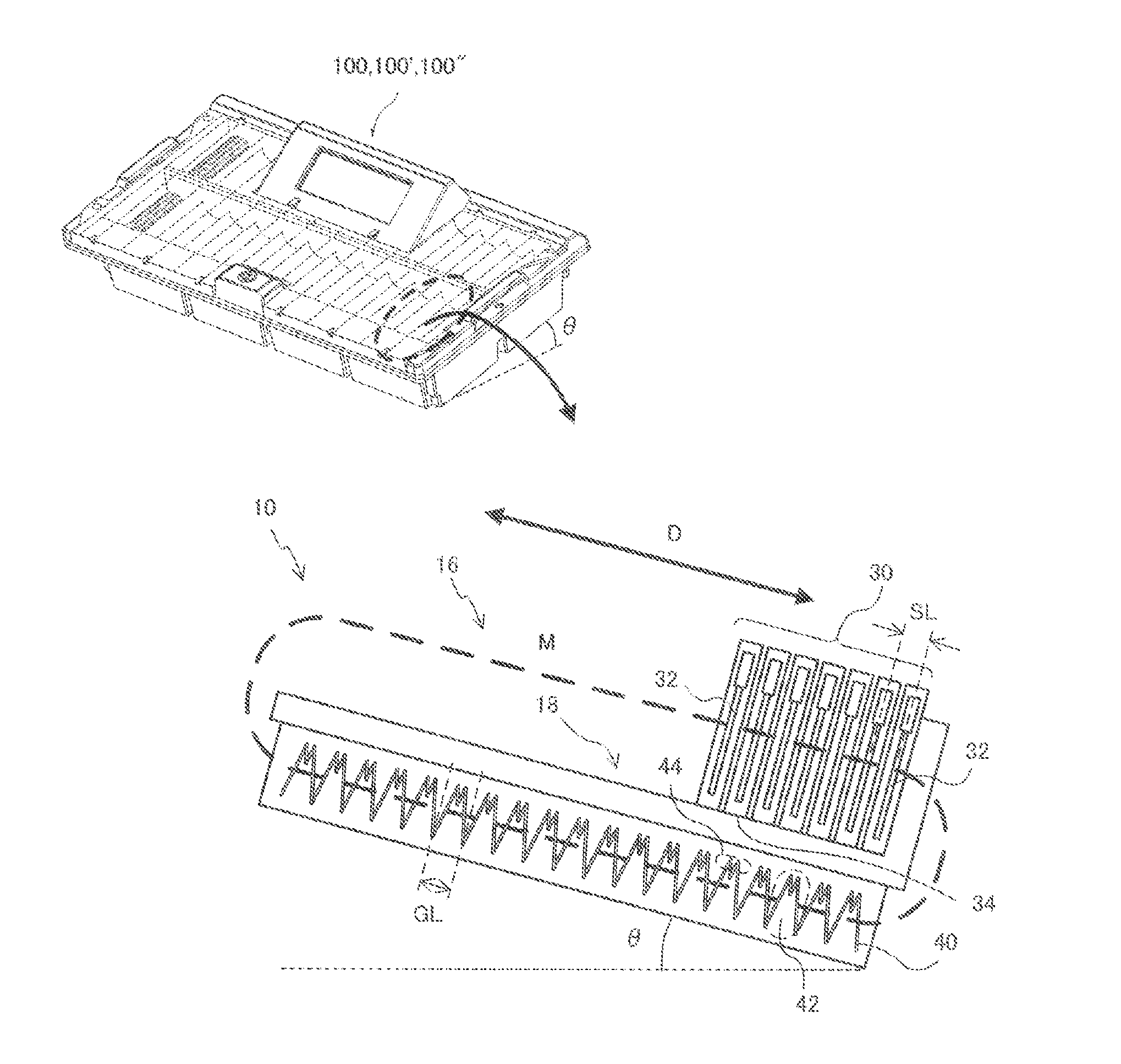

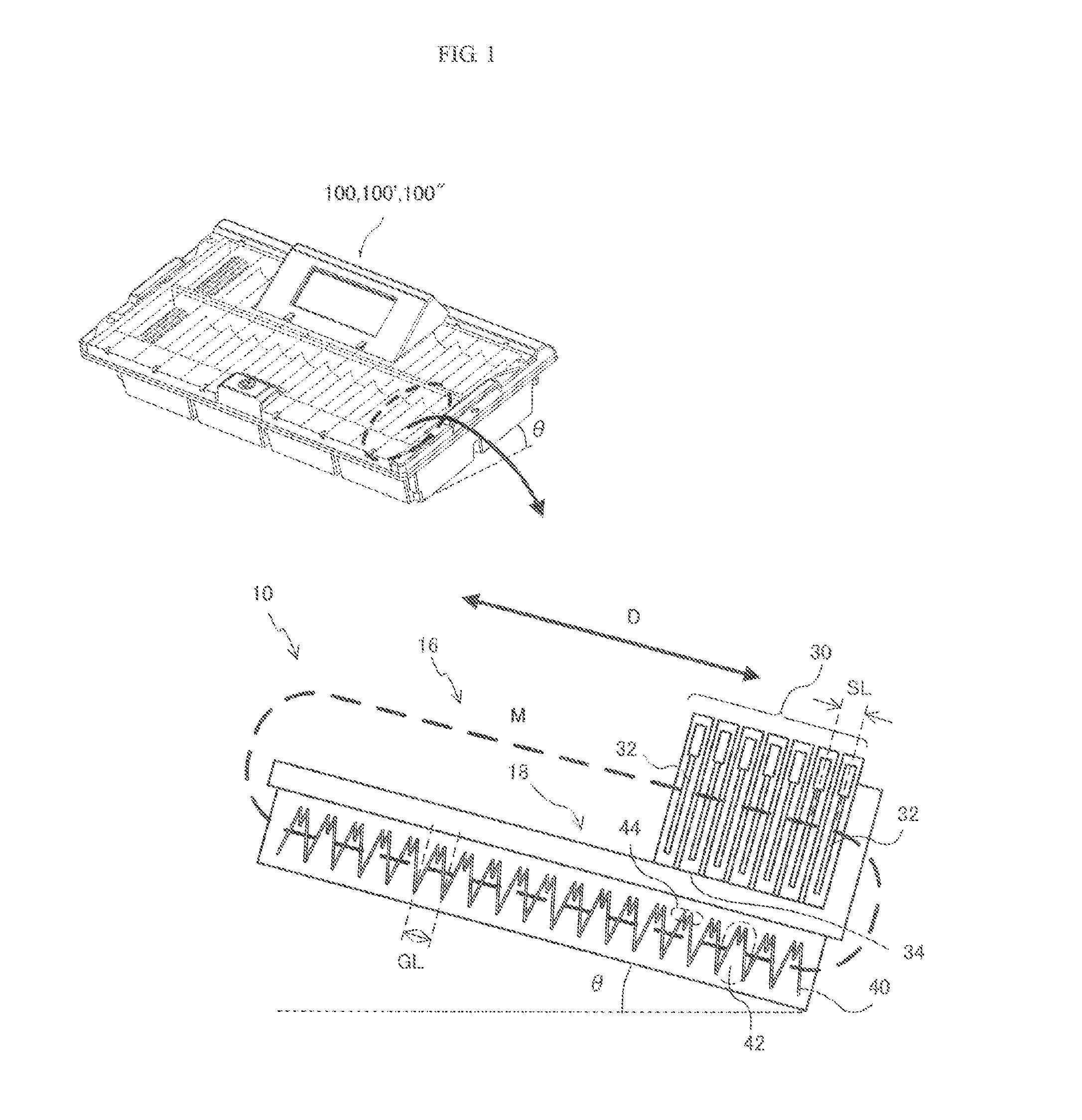

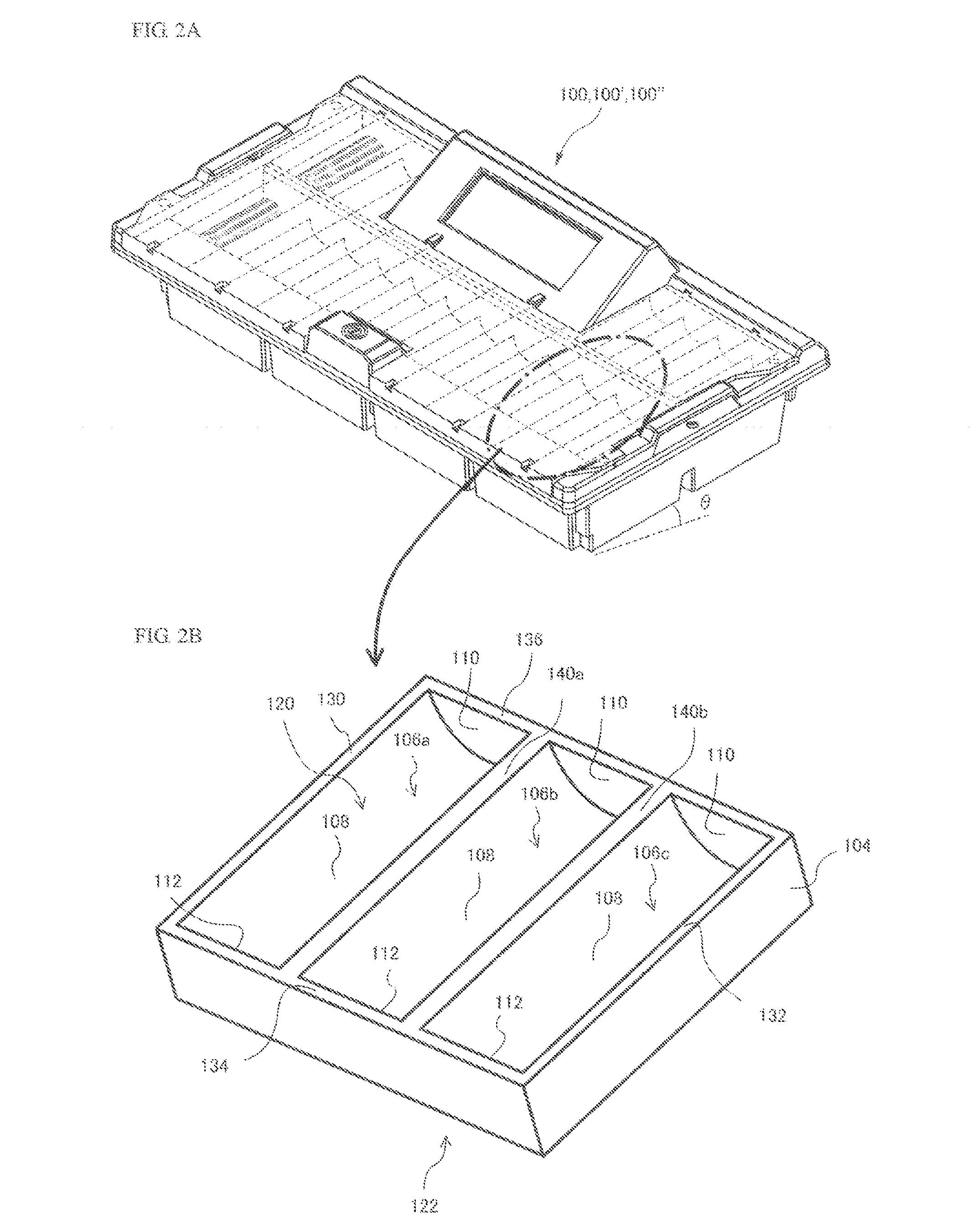

Token storage device

Status: Active | Publication: US20140291399A1 | Tags: Accurate reading, Increases magnitude, Antenna supports/mountings, Sensing record carriers, Magnetic field, Electrical and Electronics engineering

Provided is a chip tray that can accurately read the RFID IC tags embedded in tokens without increasing the magnitude of the magnetic field generated by its antenna. A magnetic-field-generating antenna is disposed along the direction in which the tokens, each embedding the feeder antenna of an RFID IC tag, are stacked.

Owner:UNIVERSAL ENTERTAINMENT CORP

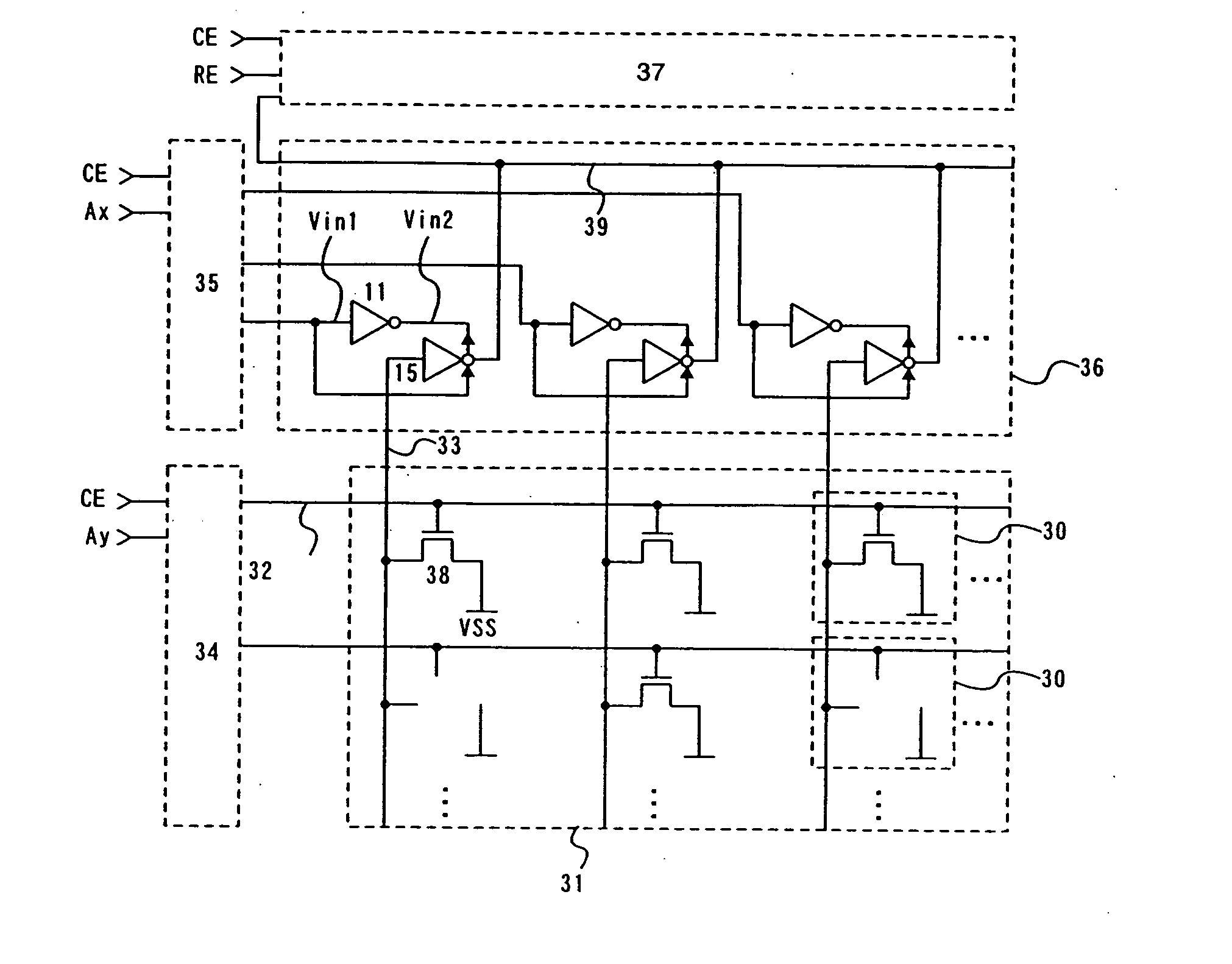

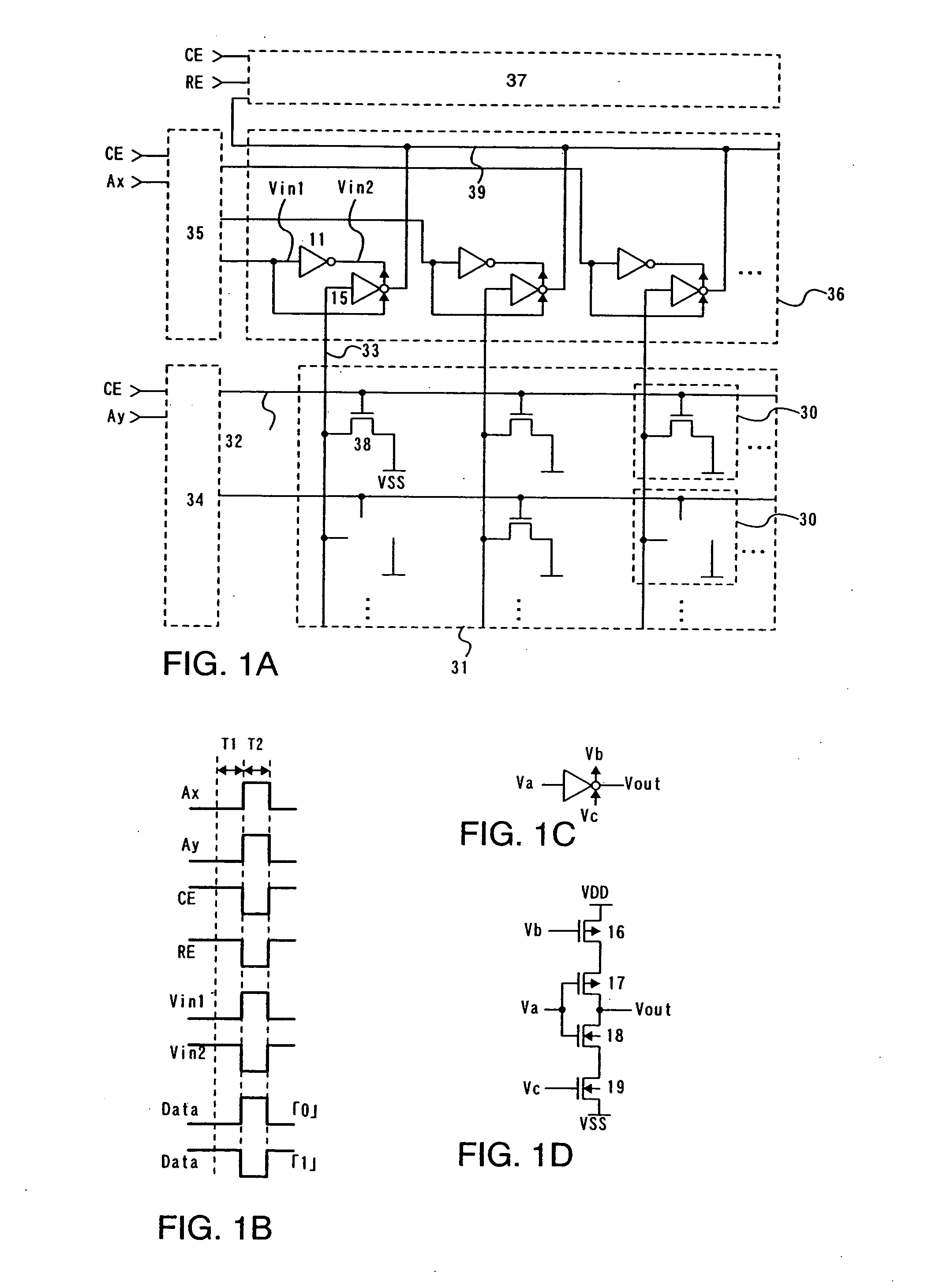

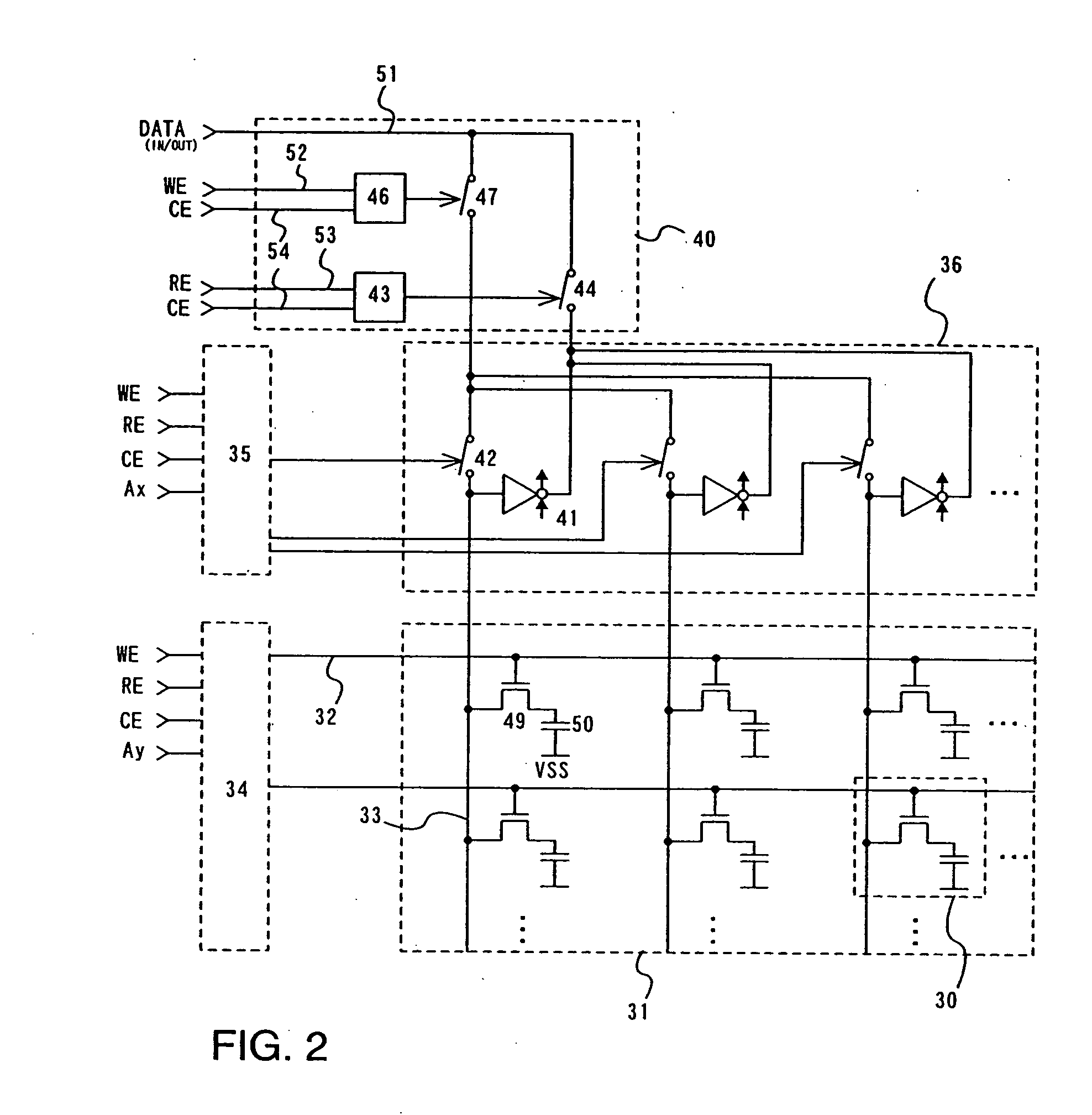

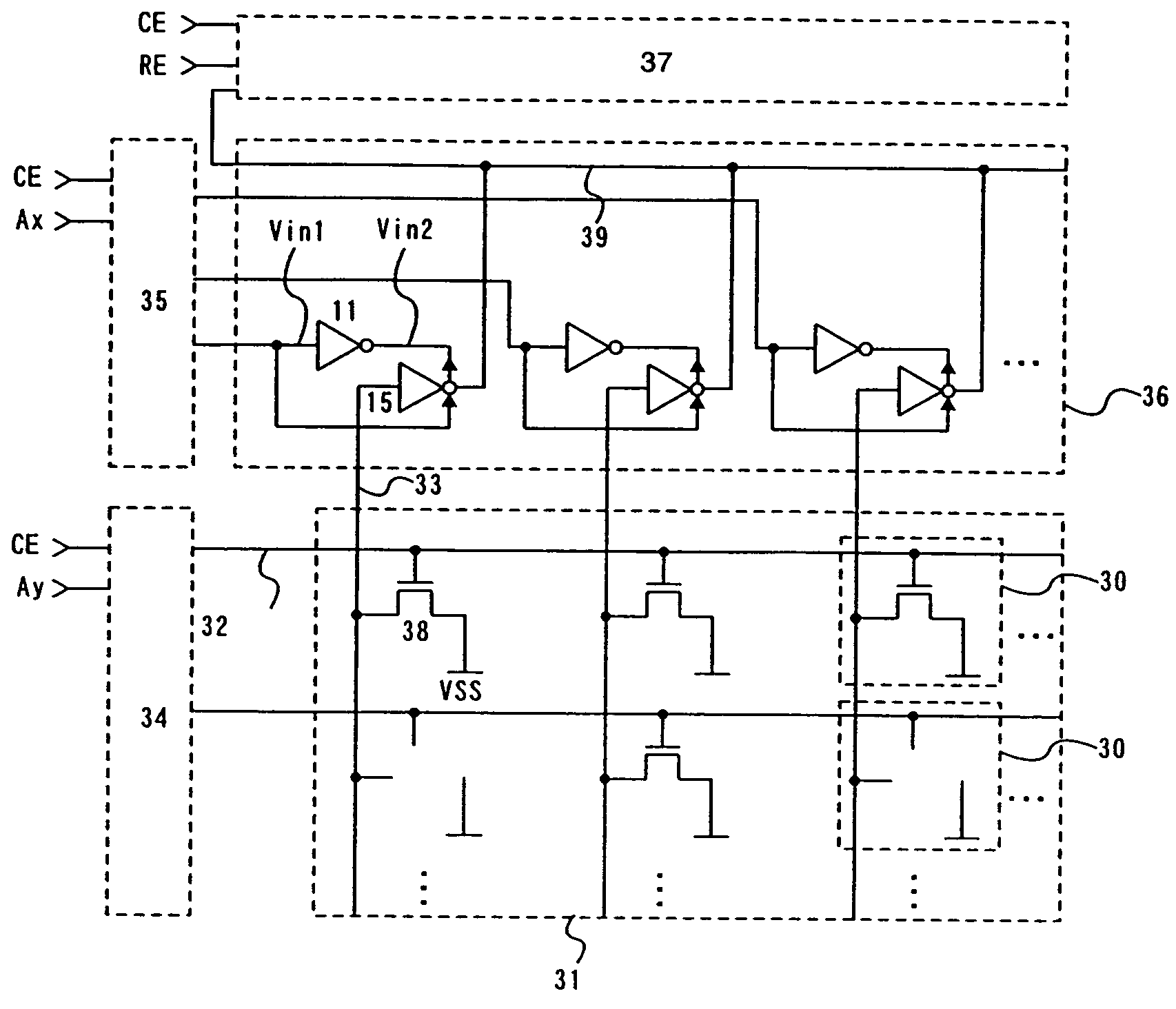

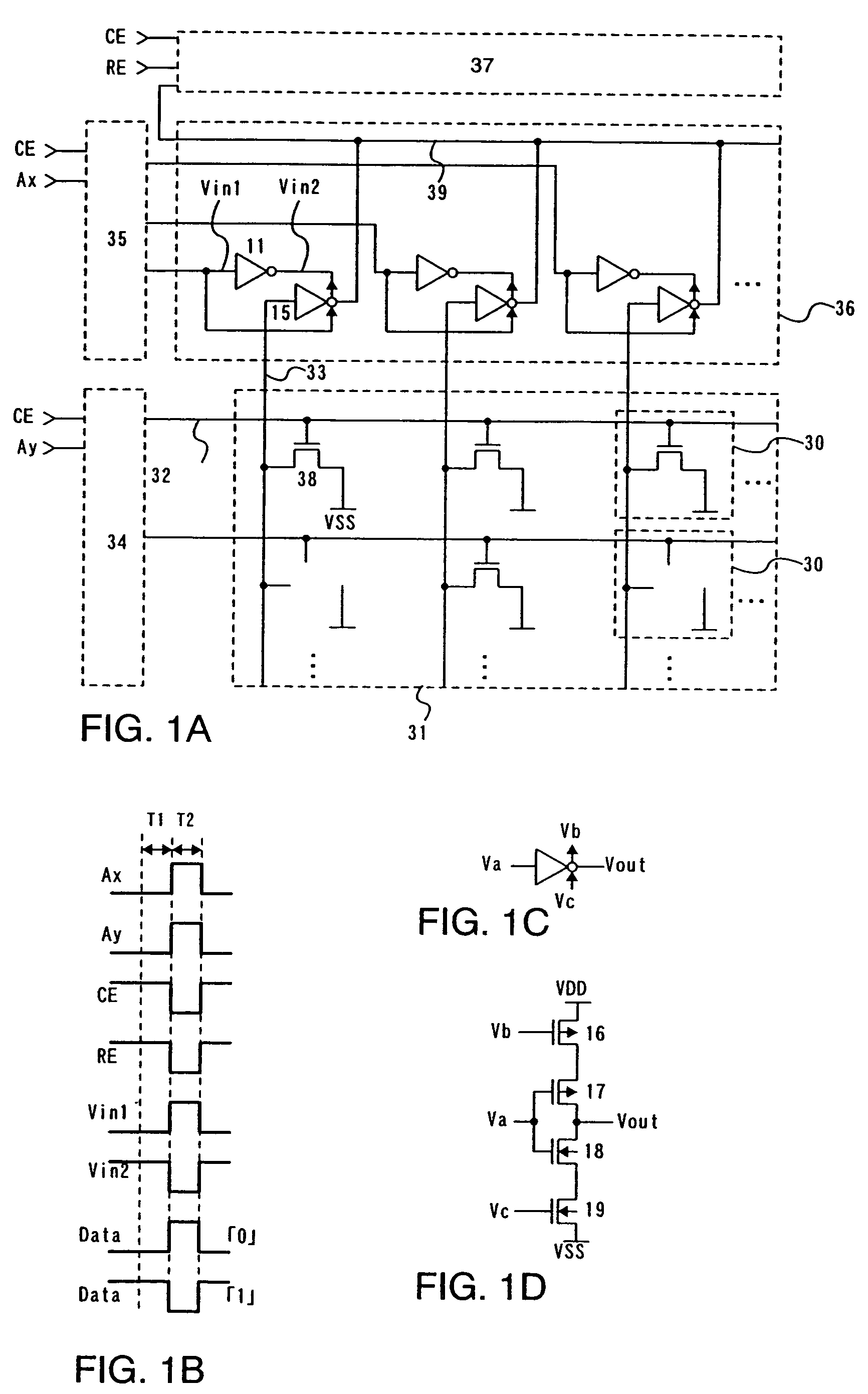

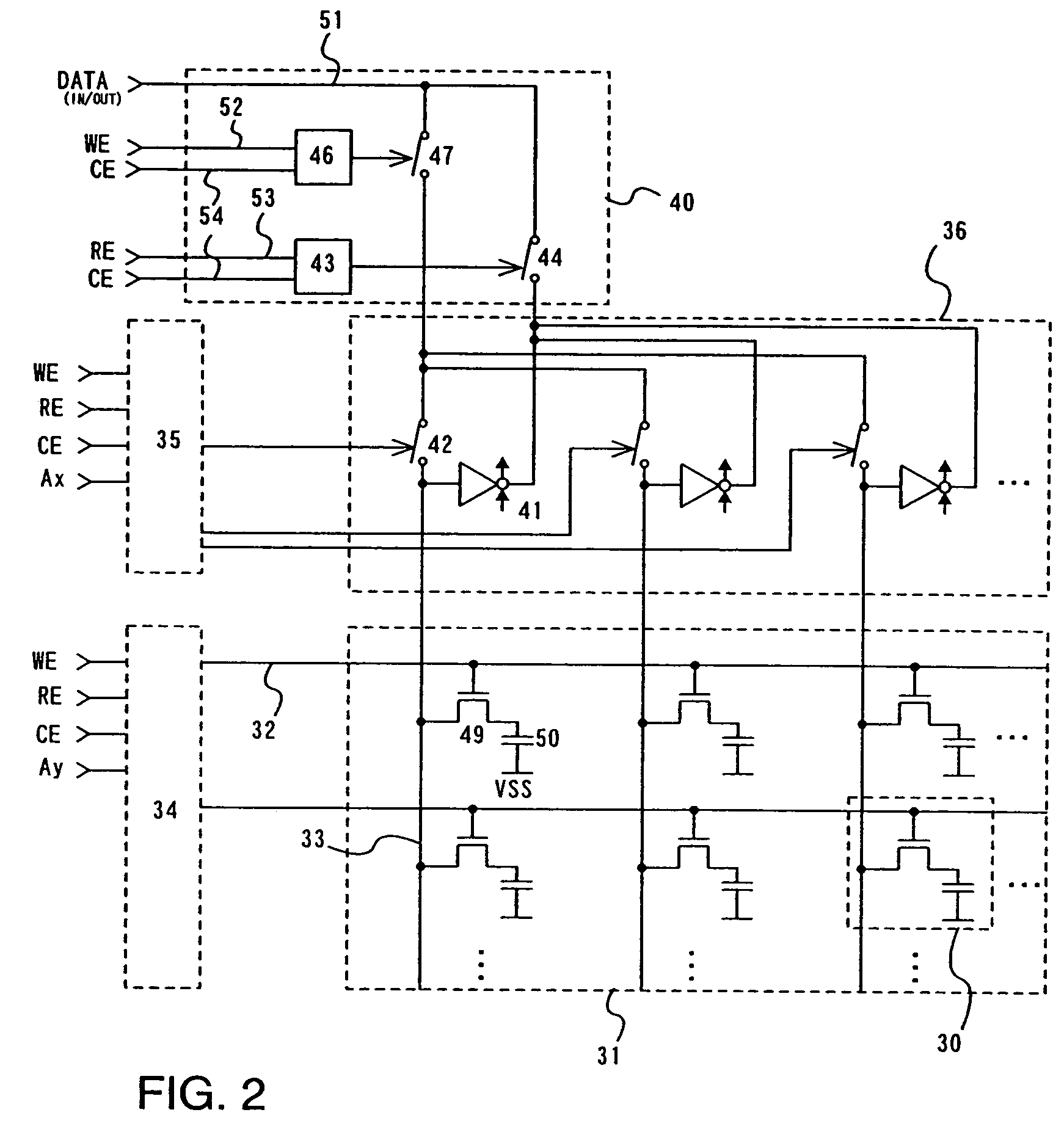

Memory and driving method of the same

Status: Active | Publication: US20050047266A1 | Tags: Increase production, Suppression of increase in mounting area, Read-only memories, Electronic switching, Bit line, Computer science

According to the invention, mounting area is decreased and yield is improved by reducing the number of elements, and a memory that places less burden on peripheral circuitry, together with a driving method thereof, is provided. The memory comprises a memory cell including a memory element in a region where a bit line and a word line cross with an insulator interposed between them, a column decoder, and a selector including a clocked inverter. An input node of the clocked inverter is connected to the bit line, and an output node is connected to a data line. Among the series-connected transistors that form the clocked inverter, the gate of the P-type transistor whose source or drain is connected to the high-potential power supply VDD and the gate of the N-type transistor whose source or drain is connected to the low-potential power supply VSS are connected to the column decoder.

Owner:SEMICON ENERGY LAB CO LTD

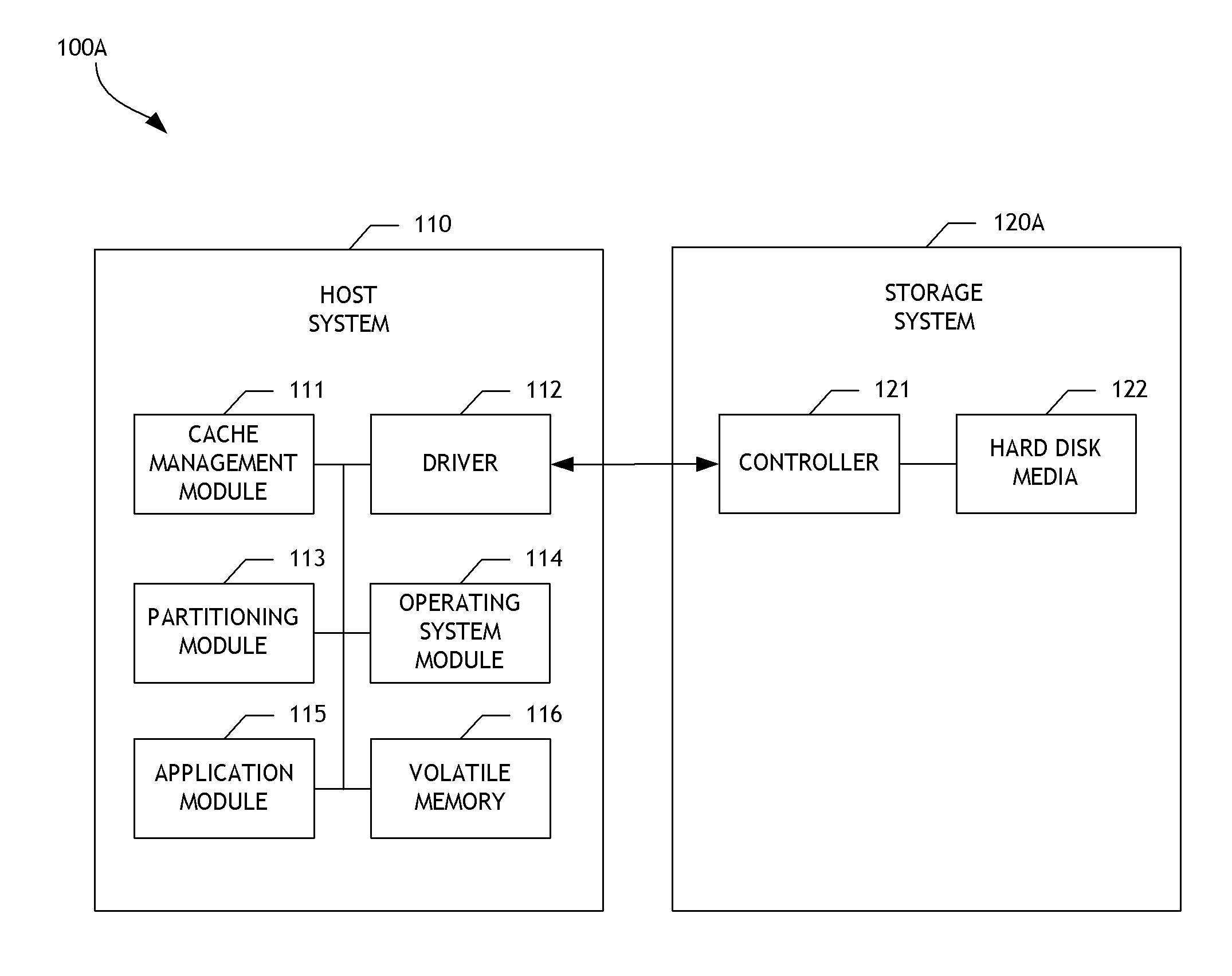

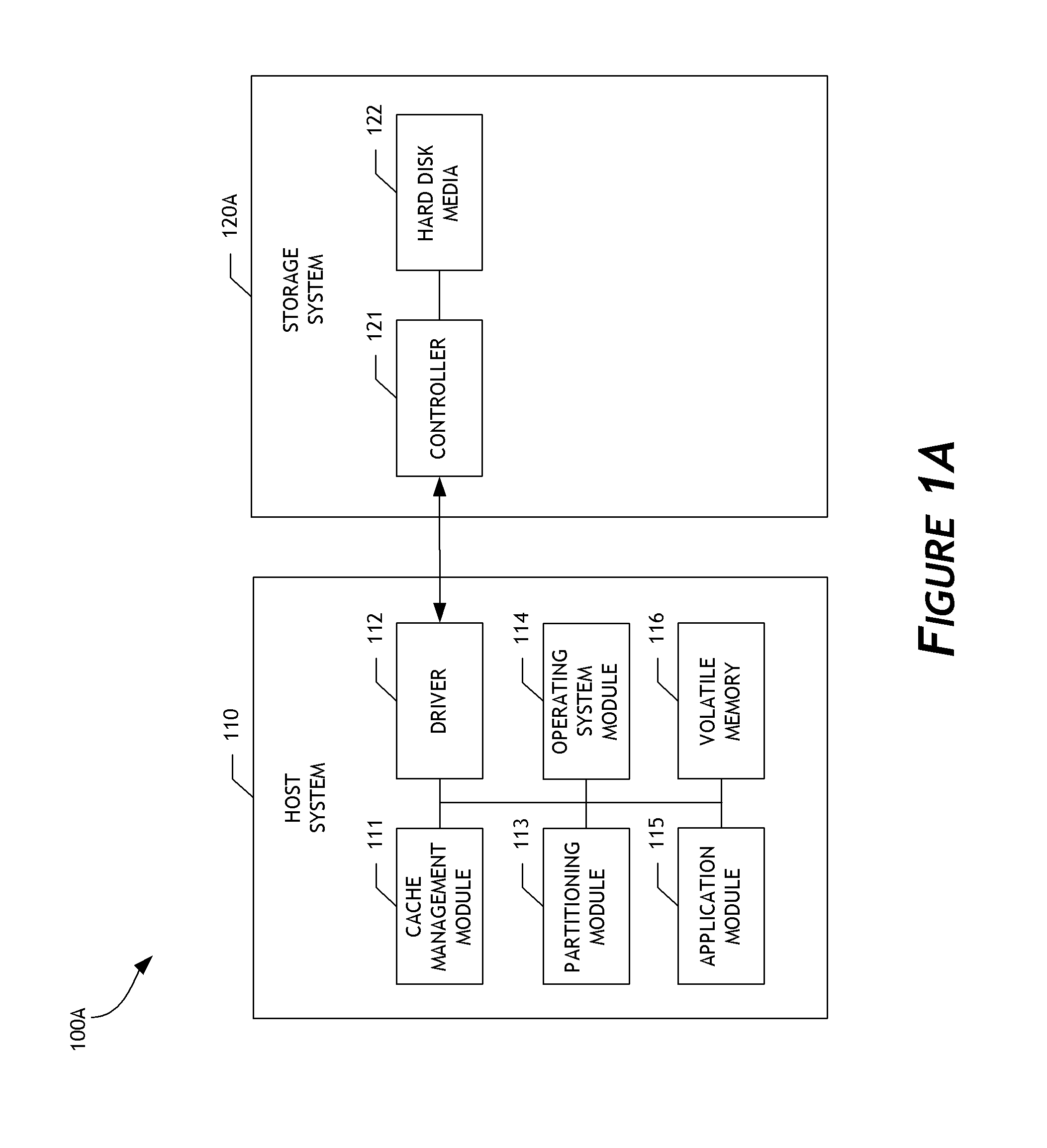

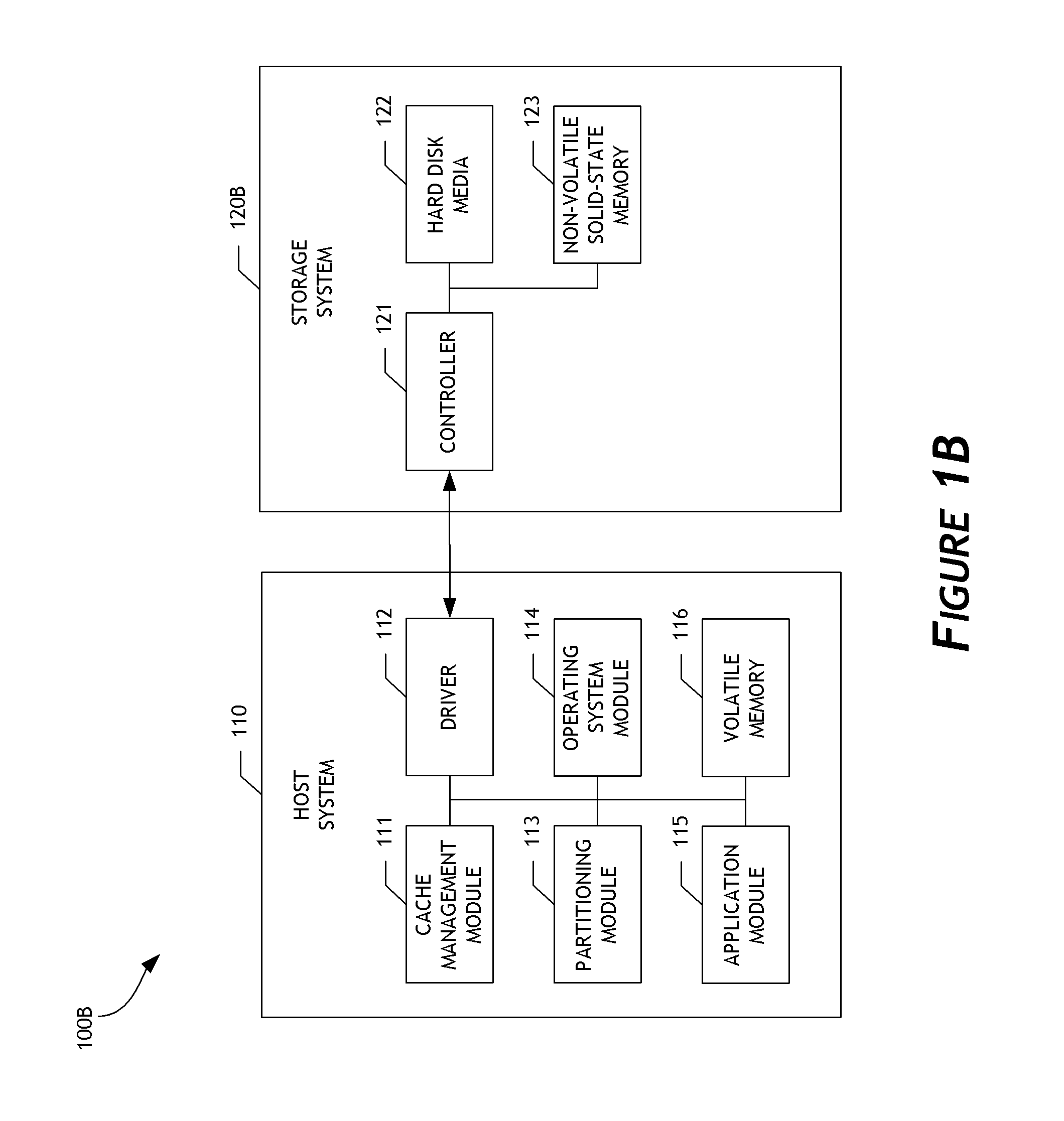

Caching data in a high performance zone of a data storage system

Status: Active | Publication: US9280472B1 | Tags: Short response time, Faster access time, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Cache management, Data store

A combination of a host system and a storage system is disclosed that improves the responsiveness of the host system to user requests. In one embodiment, the host system includes a cache management module, a partitioning module, and a driver. The cache management module determines which write data to cache in a dedicated zone of the hard disk media of the storage system. The partitioning module partitions the hard disk media into one partition that includes the dedicated zone and another partition that does not. The driver transmits storage access commands to the storage system to cache data in, and retrieve cached data from, the first partition. As a result, the host system can access the write data from the hard disk media more quickly than if the write data were cached in and accessed from another zone of the hard disk media.

Owner:WESTERN DIGITAL TECH INC

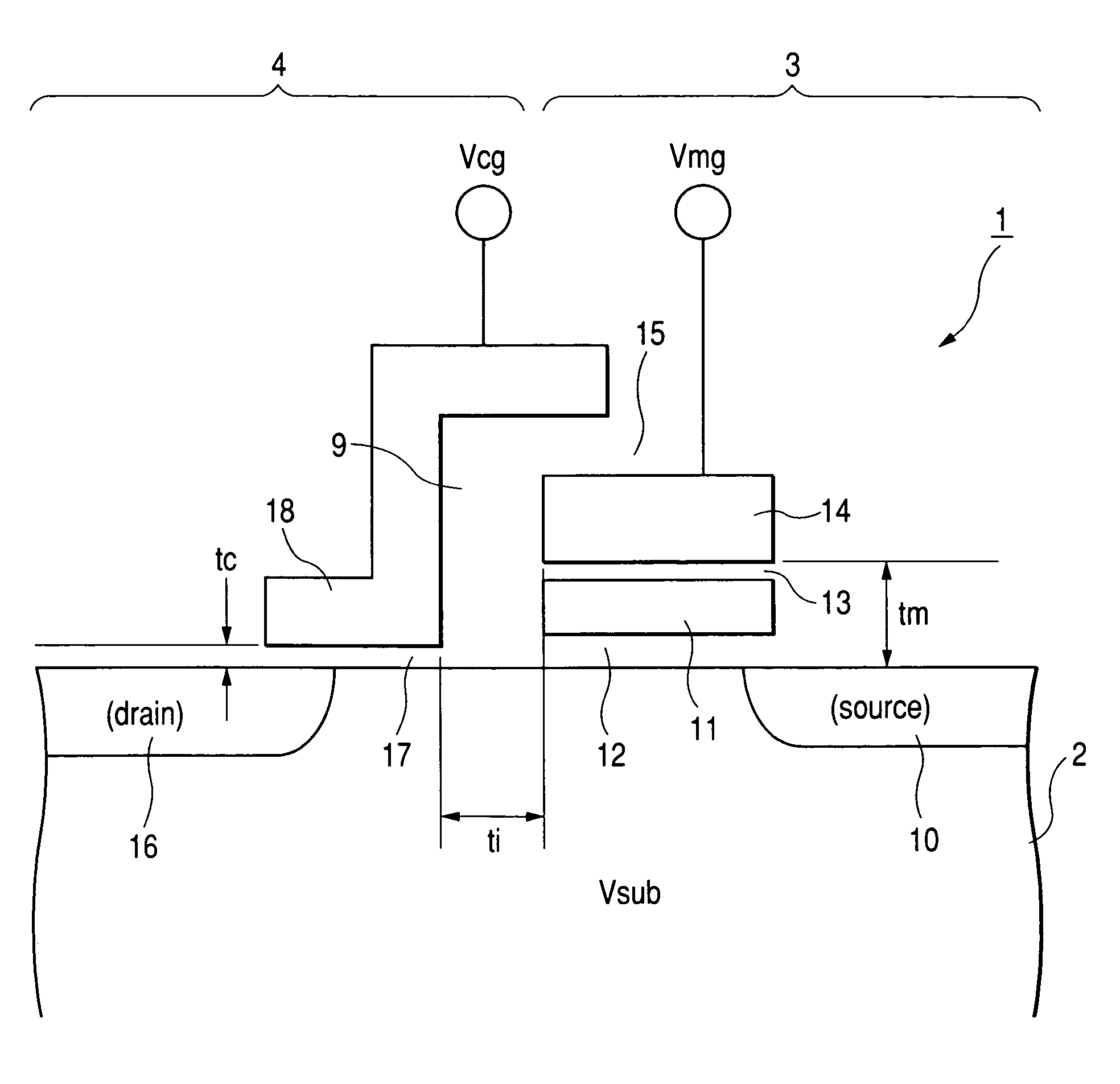

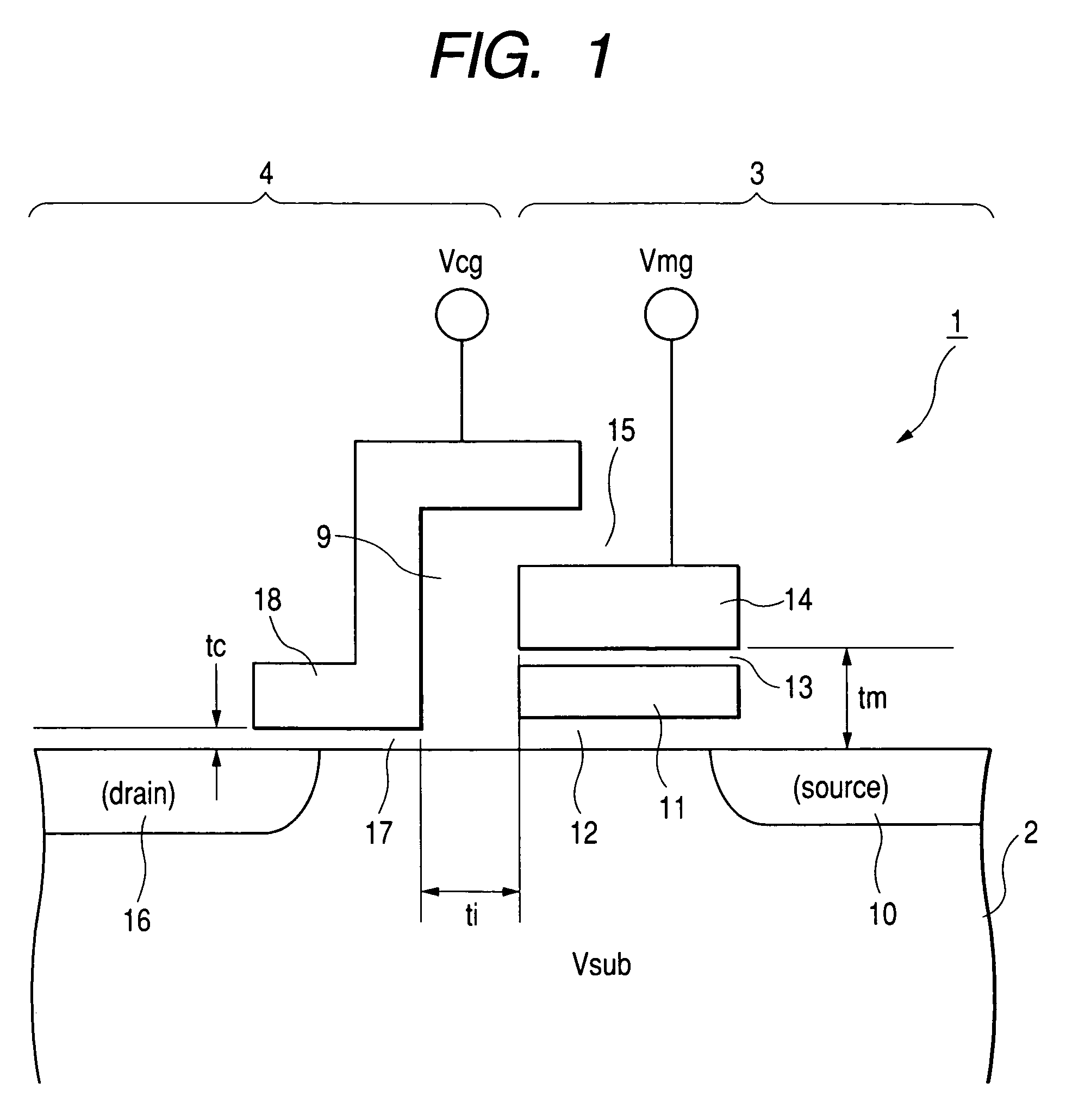

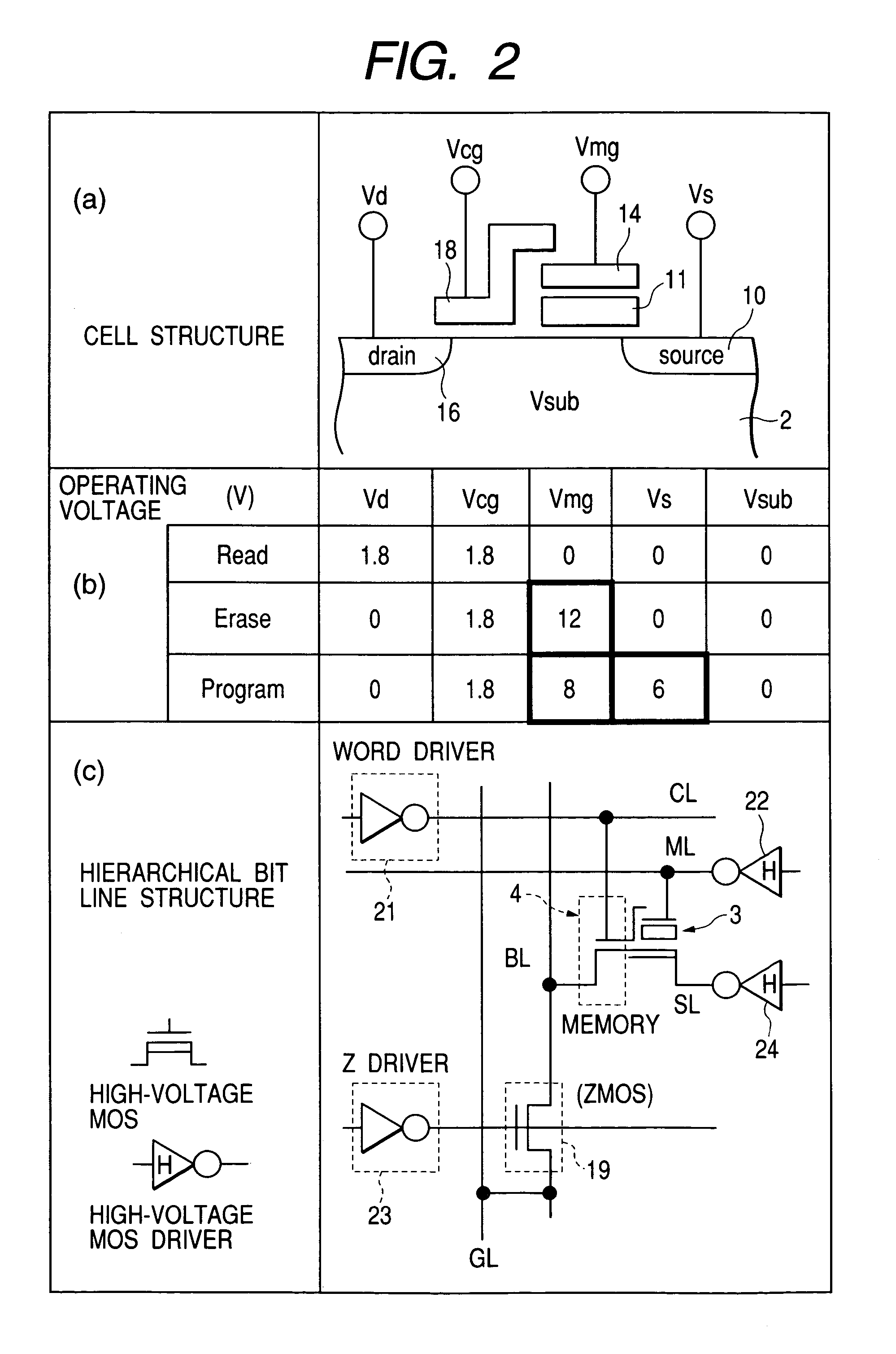

Nonvolatile semiconductor memory device employing transistors having different gate withstand voltages for enhanced reading speed

A semiconductor device includes a plurality of nonvolatile memory cells (1). Each nonvolatile memory cell comprises a MOS-type first transistor section (3) used for information storage and a MOS-type second transistor section (4) that selects the first transistor section. The second transistor section has a bit line electrode (16) connected to a bit line and a control gate electrode (18) connected to a control gate control line. The first transistor section has a source line electrode (10) connected to a source line, a memory gate electrode (14) connected to a memory gate control line, and a charge storage region (11) disposed directly below the memory gate electrode. The gate withstand voltage of the second transistor section is lower than that of the first transistor section: if tc denotes the gate insulating film thickness of the second transistor section and tm that of the first transistor section, then tc < tm.

Owner:RENESAS ELECTRONICS CORP

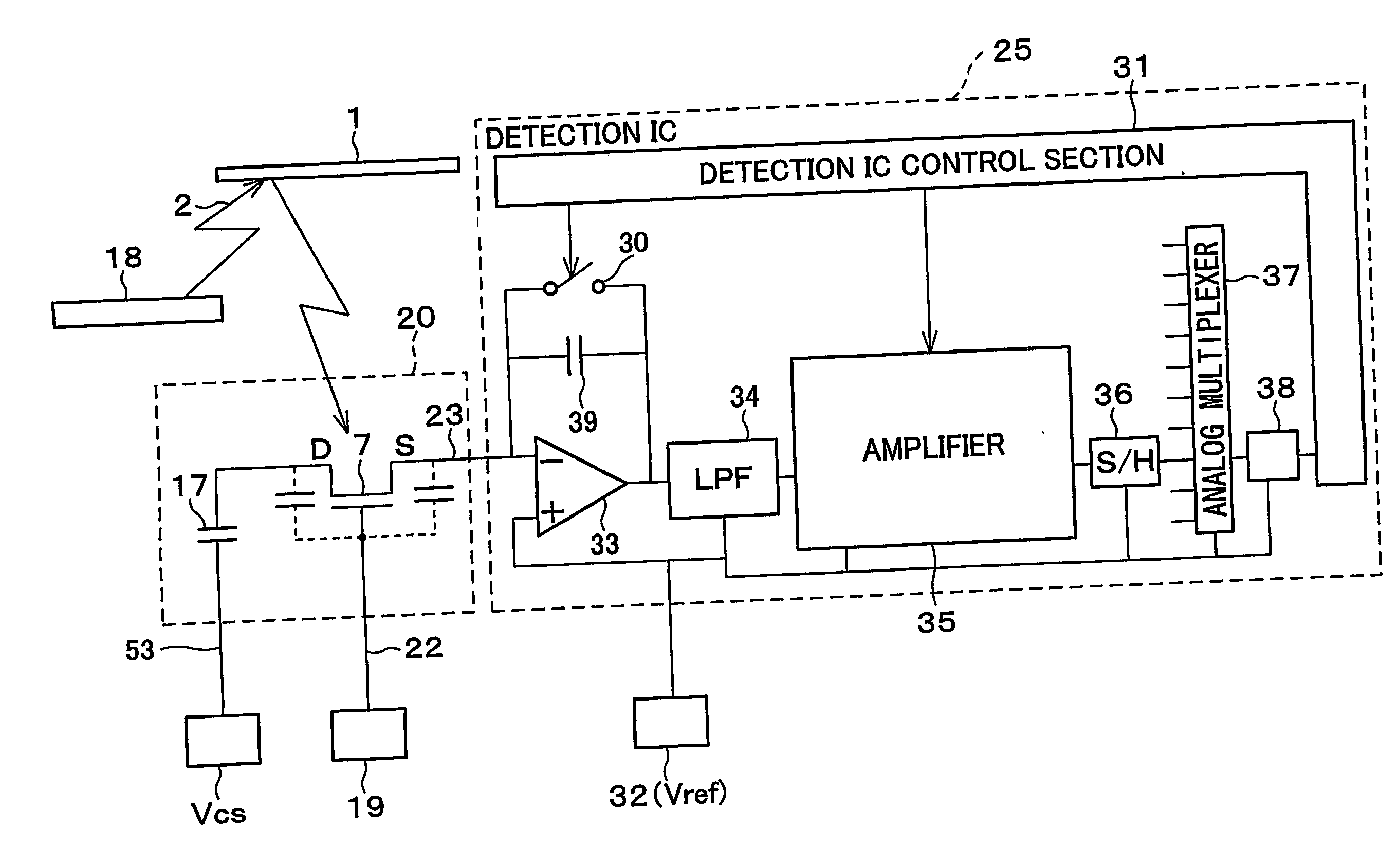

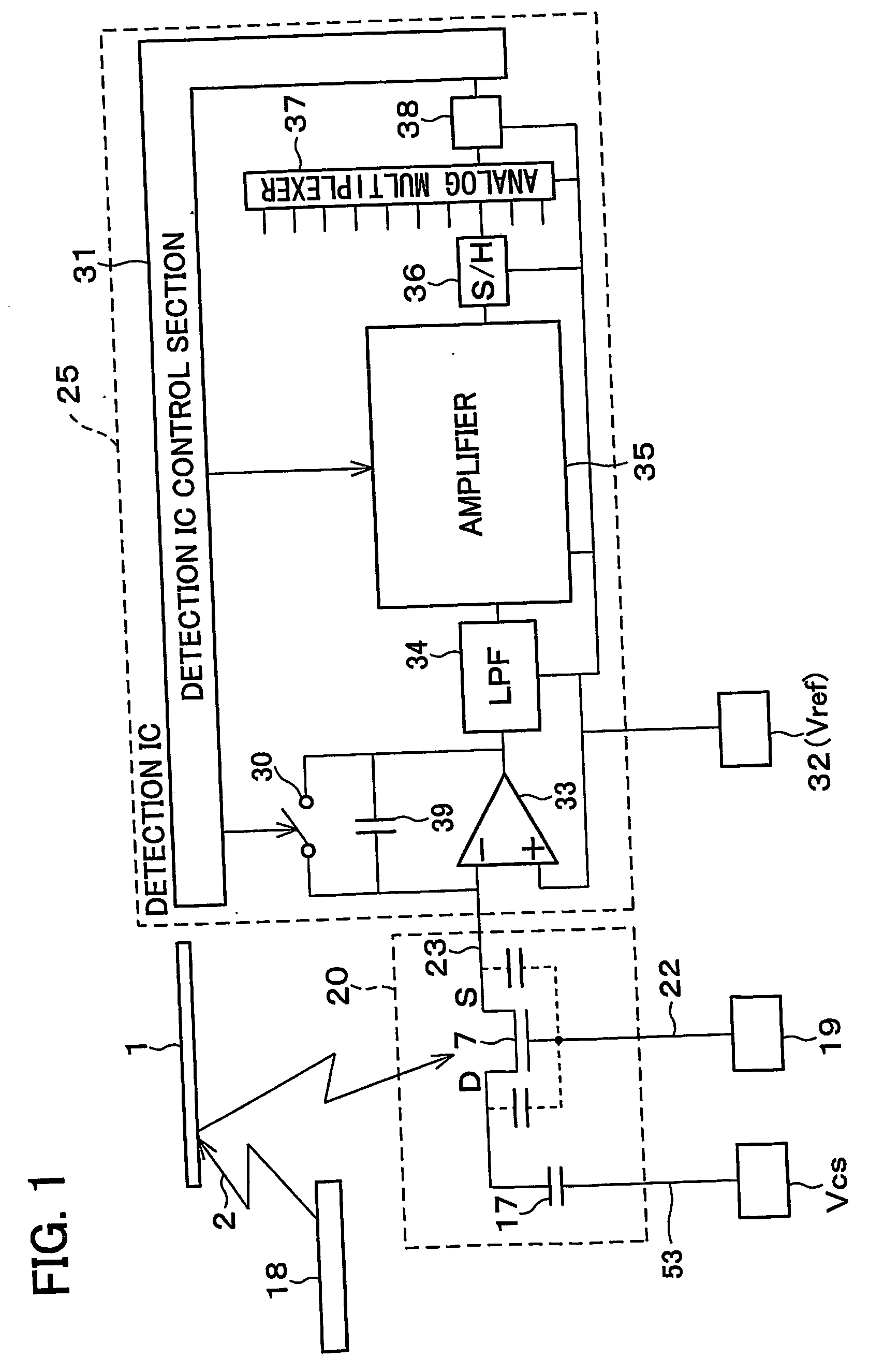

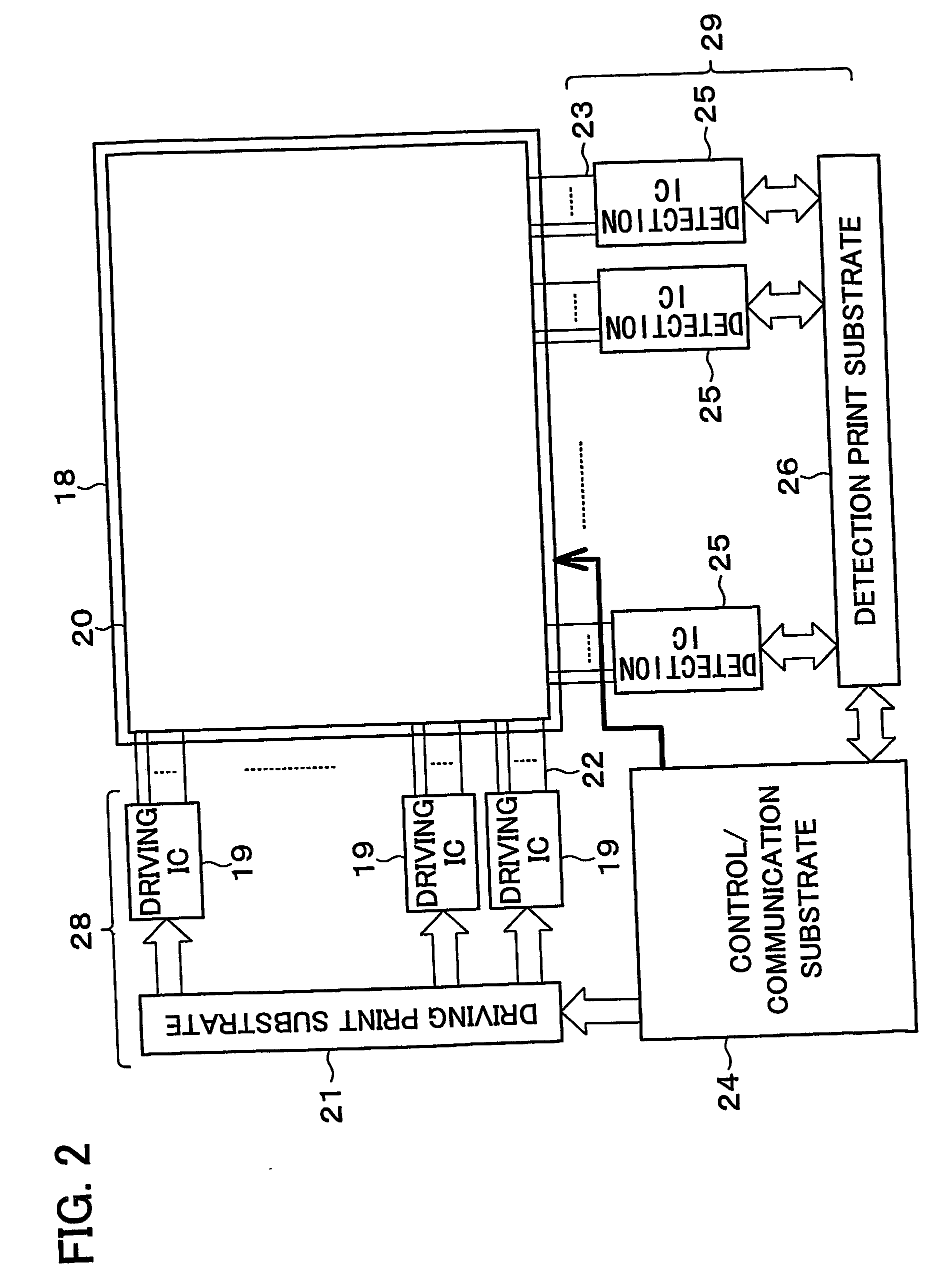

Imaging device and method for reading signals from such device

Status: Inactive | Publication: US7728893B2 | Tags: Reduce the number, Improve reading speed, Television system details, Television system scanning details, Audio power amplifier, Variable-gain amplifier

Each pixel cell (12) of an image sensor (10) has a 4-transistor (4-Tr) structure, in which a single transistor for column (X) reset is added to an ordinary 3-Tr APS so that any pixel can be reset selectively while keeping the pixel size small. When pixel signals are read, the period in which the pixel signals of one ordinary frame are read is finely subdivided, so that the signals of the pixels receiving ID light during that period are read out bit by bit, repeatedly. During this readout, current is supplied only for the column being read, to a read amplifier in the pixel cell (12) or to a variable-gain amplifier in an output unit (14), which suppresses power consumption. As a result, lower power consumption and a higher pixel count can be achieved in an image pickup device that both captures an image and acquires the ID information of light beacons within its image pickup range.

Owner:JAPAN SCI & TECH CORP +1

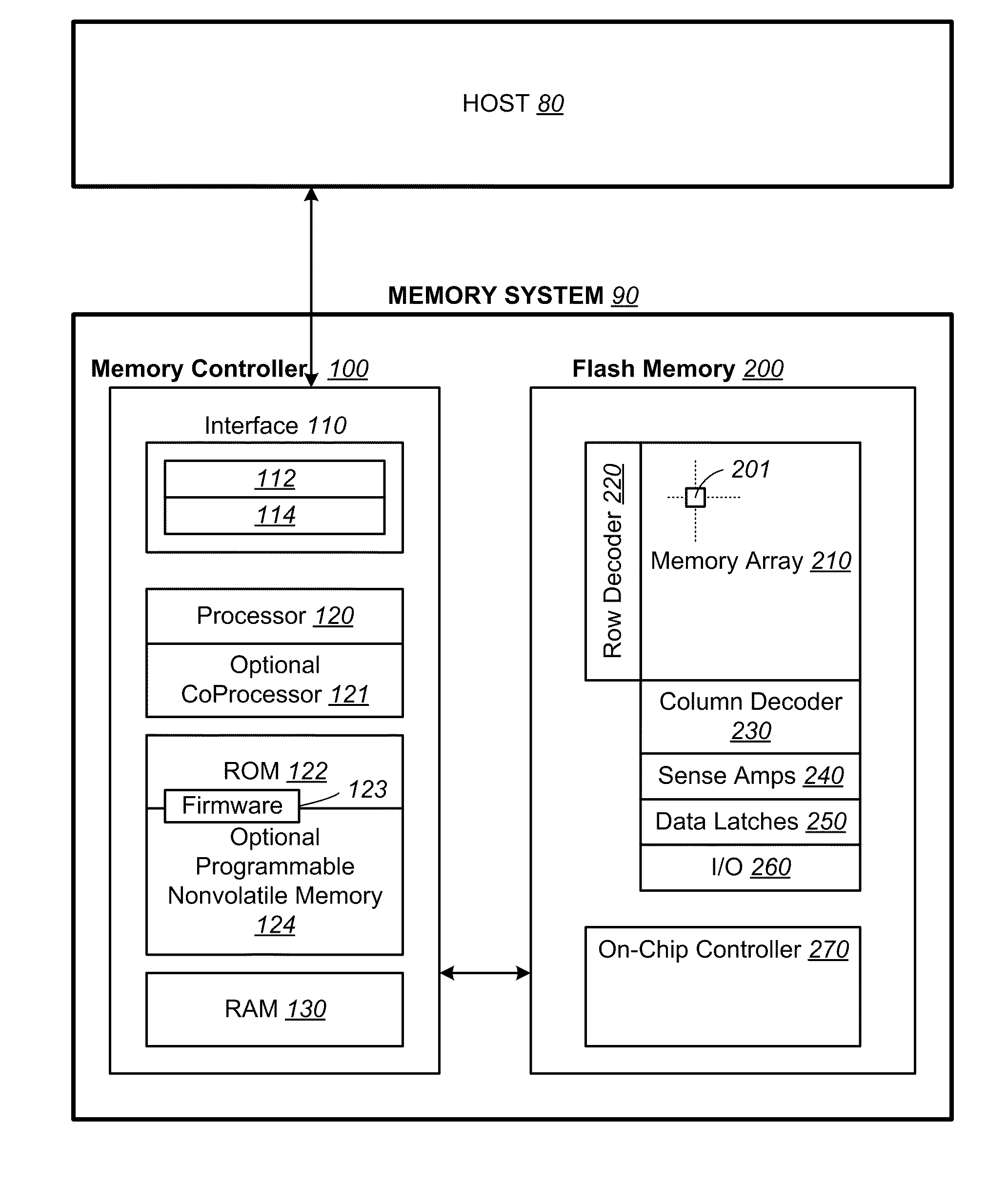

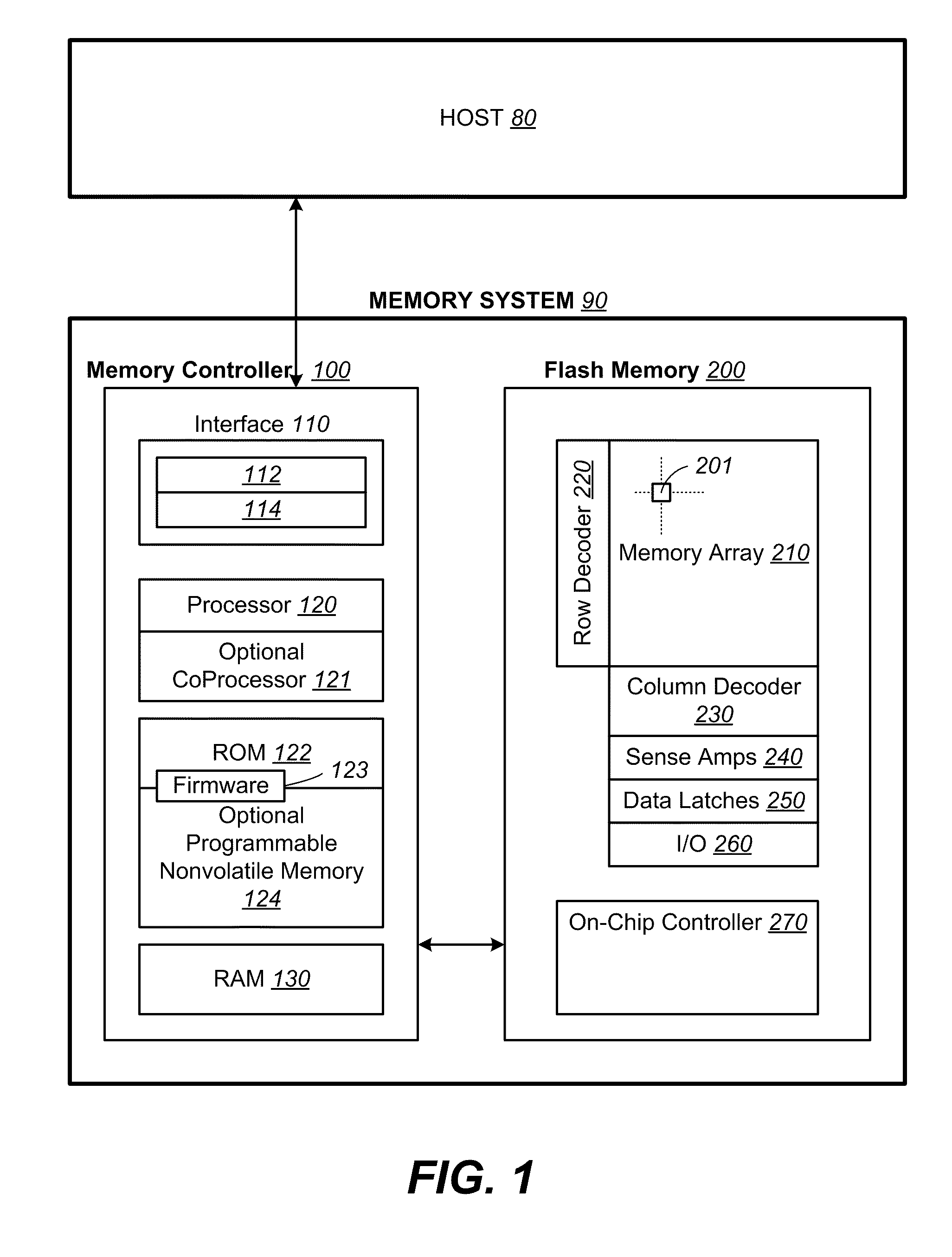

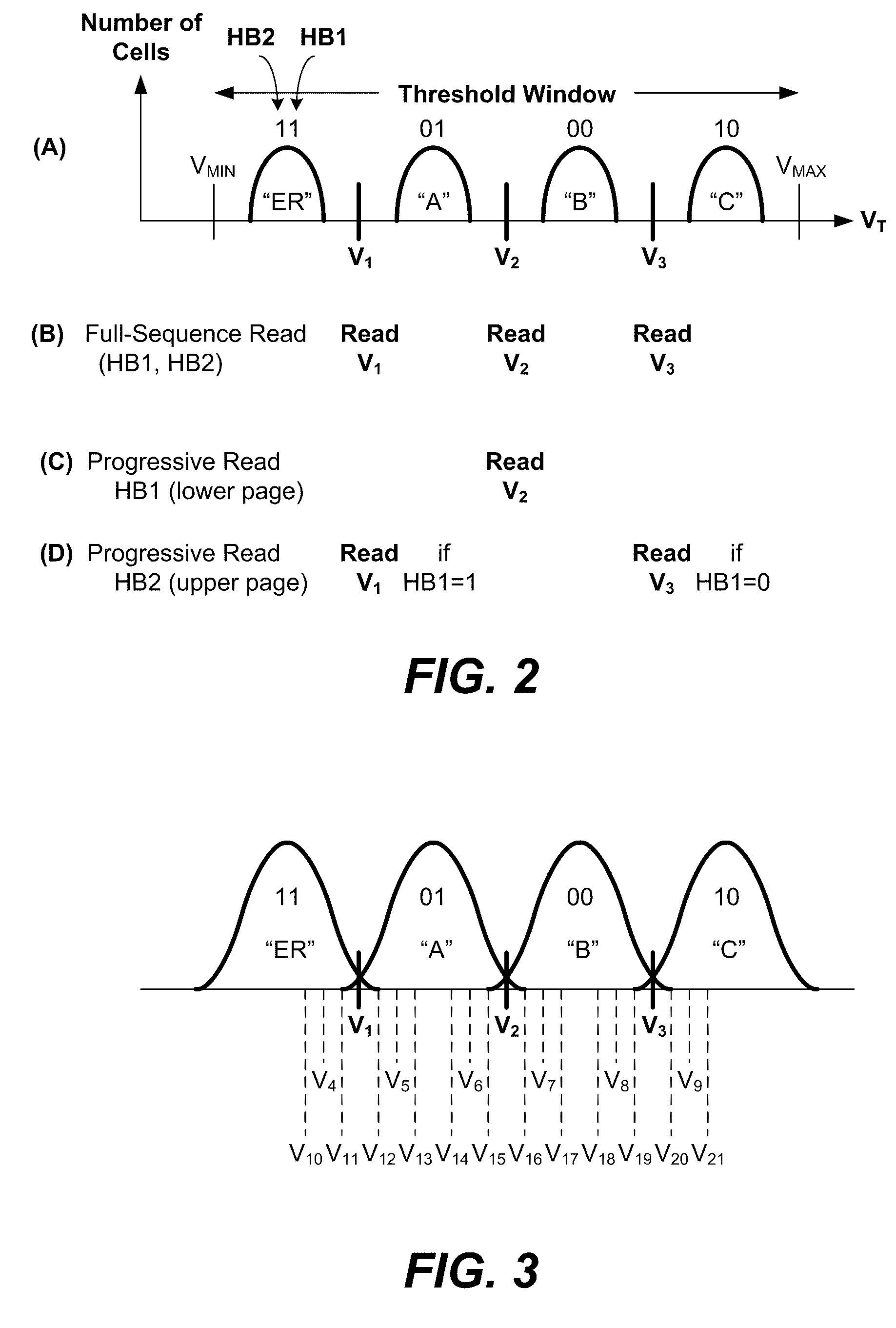

Non-volatile memory and methods with reading soft bits in non uniform schemes

Status: Active | Publication: US8099652B1 | Tags: Increasing digital circuit required, Improve reading speed, Error detection/correction, Read-only memories, Image resolution, Computer science

A non-volatile memory has its cells' threshold voltages programmed within one of a first set of voltage bands, which are partitioned across a threshold window by a first set of reference thresholds. The cells are also read at a higher resolution, relative to a second set of reference thresholds, to provide additional soft bits for error correction. The reference thresholds of the second set are distributed non-uniformly across the threshold window so as to provide higher resolution in designated regions. At the same time, they are arranged so that they can be read in groups, allowing soft bits to be read bit by bit systematically with a simple algorithm and read circuit and a minimum of data latches. This is accomplished by relaxing the requirements that the first set of reference thresholds be a subset of the second set and that the resulting soft bits be distributed symmetrically about the hard bits.

Owner:SANDISK TECH LLC
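The idea of non-uniform soft thresholds can be illustrated with a small numerical sketch. All threshold values are hypothetical volts chosen for illustration, and the soft-bit convention (1 means the reading fell inside a low-confidence window near a hard threshold) is an assumption, not the patent's read scheme. The hard band index is returned as a plain integer, not mapped to a Gray-coded bit pattern, and a real device senses each threshold with sense amplifiers rather than comparing floating-point values.

```python
import bisect
from typing import Tuple

# Hypothetical hard-read thresholds (volts) splitting the window into 4 bands.
HARD_THRESHOLDS = [1.0, 2.0, 3.0]

# Hypothetical soft-read thresholds: pairs of edges bounding low-confidence
# windows around each hard threshold. The windows have different widths (finer
# resolution where errors are expected), the window around 2.0 V is asymmetric,
# and the hard thresholds are NOT a subset of this set.
SOFT_THRESHOLDS = [0.9, 1.1, 1.8, 2.25, 2.95, 3.05]


def read_cell(vt: float) -> Tuple[int, int]:
    """Return (hard band index, soft bit) for a cell's sensed threshold voltage."""
    hard_band = bisect.bisect_right(HARD_THRESHOLDS, vt)
    # An odd number of soft edges at or below vt means vt lies inside a window.
    soft_bit = bisect.bisect_right(SOFT_THRESHOLDS, vt) % 2
    return hard_band, soft_bit
```

For example, a cell sensed at 2.2 V falls in hard band 2 but inside the wider window around 2.0 V, so its soft bit flags it as less reliable for the error-correction decoder.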

Memory and driving method of the same

Status: Active | Publication: US7158439B2 | Tags: More burden is imposed upon peripheral circuitry, Reduce the burden on, Read-only memories, Electronic switching, Bit line, Electricity

A memory is provided having a bit line, a word line crossing the bit line, a memory cell electrically connected to the bit line and the word line, a column decoder, and a selector including a clocked inverter with a plurality of transistors electrically connected in series between a first power source and a second power source. An input node of the clocked inverter is connected to the bit line, and an output node of the clocked inverter is electrically connected to a data line. The transistors include a P-type transistor and an N-type transistor whose gate electrodes are both electrically connected to the column decoder, and no sense amplifier is interposed between the bit line and the input node of the clocked inverter.

Owner:SEMICON ENERGY LAB CO LTD

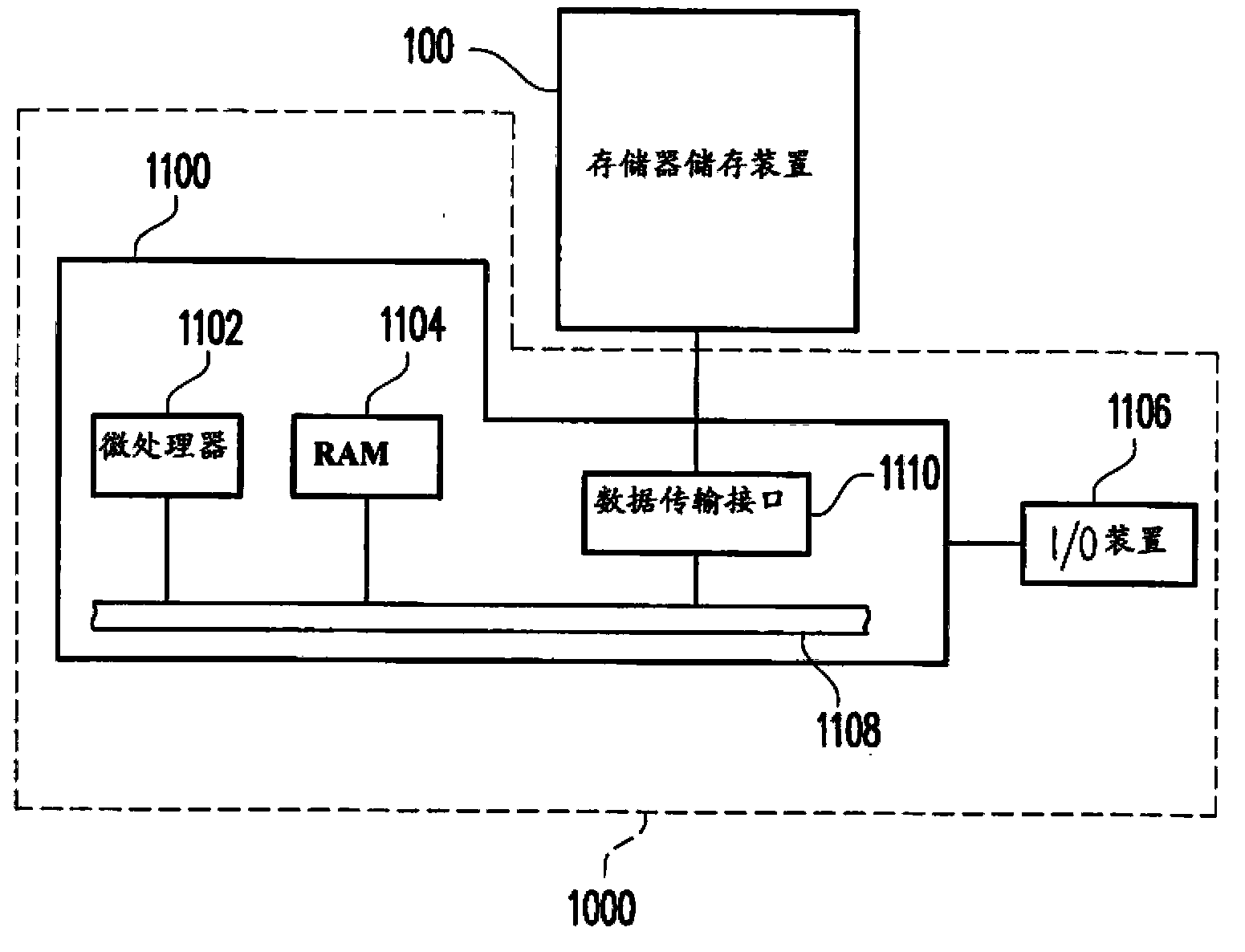

Mapping information recording method, memory controller and memory storage device

Status: Active | Publication: CN104102585A | Tags: Improve reading speed, Input/output to record carriers, Memory addressing/allocation/relocation, Memory controller, Address mapping

The invention provides a mapping information recording method, a memory controller and a memory storage device for a rewritable non-volatile memory module. The method comprises: configuring multiple logical addresses; establishing at least one logical address mapping table and storing it in the rewritable non-volatile memory module; receiving, from a host system, data to be stored at multiple consecutive logical addresses; writing the data to multiple physical programming units; updating, in the corresponding logical address mapping table loaded into a buffer memory, the mapping relations between the consecutive logical addresses and the physical programming units; and storing a continuous mapping table in the buffer memory and recording in it continuous mapping records corresponding to the consecutive logical addresses.

Owner:PHISON ELECTRONICS
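A minimal sketch of why a continuous mapping table can speed up lookups: one compact record stands for an entire run of consecutive logical addresses. The class, the naive in-order allocator and the lookup order (newest run first, then the per-address table) are assumptions for illustration; only sequential writes are modeled, so stale-run invalidation after random overwrites is not handled.

```python
from typing import Dict, List, Optional, Tuple


class MappingTables:
    """Hypothetical sketch: per-address table plus a continuous mapping table."""

    def __init__(self) -> None:
        # Logical address -> physical programming unit (the per-address table in buffer memory).
        self.logical_to_physical: Dict[int, int] = {}
        # Continuous mapping records: (first logical address, first physical unit, run length).
        self.continuous_records: List[Tuple[int, int, int]] = []
        self.next_free_unit = 0  # naive allocator: units are handed out in order

    def write_sequential(self, start_lba: int, count: int) -> None:
        """Write `count` consecutive logical addresses to consecutive physical units."""
        start_unit = self.next_free_unit
        for i in range(count):
            self.logical_to_physical[start_lba + i] = start_unit + i
        self.next_free_unit += count
        # One compact record describes the whole run.
        self.continuous_records.append((start_lba, start_unit, count))

    def lookup(self, lba: int) -> Optional[int]:
        """Check the newest continuous records first, then fall back to the per-address table."""
        for start_lba, start_unit, count in reversed(self.continuous_records):
            if start_lba <= lba < start_lba + count:
                return start_unit + (lba - start_lba)
        return self.logical_to_physical.get(lba)
```

Writing addresses 100 to 103, then 500 to 501, then rewriting 102 to 104 leaves address 101 in unit 1 and moves address 102 to unit 6, because the newest run is consulted first.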

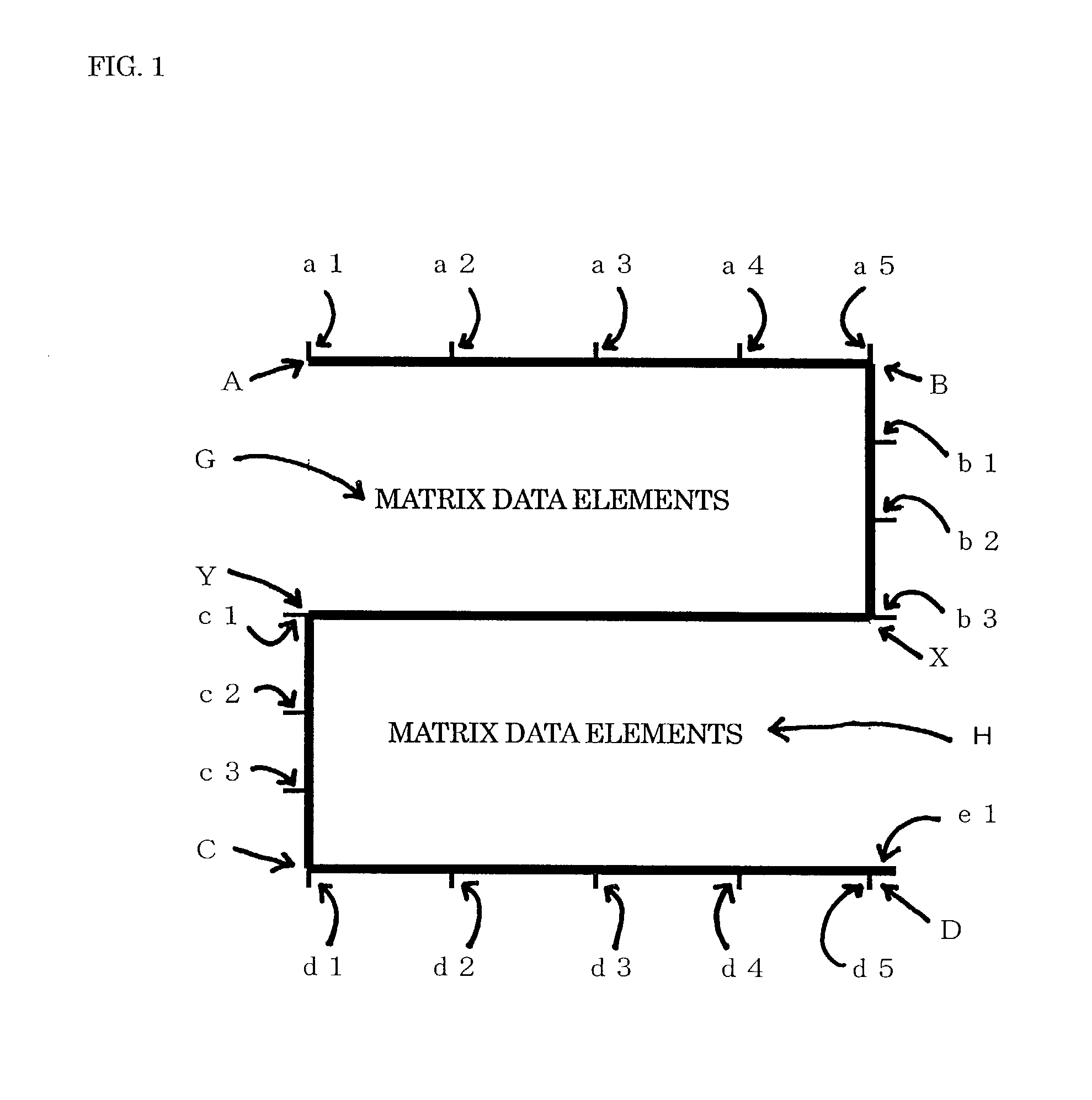

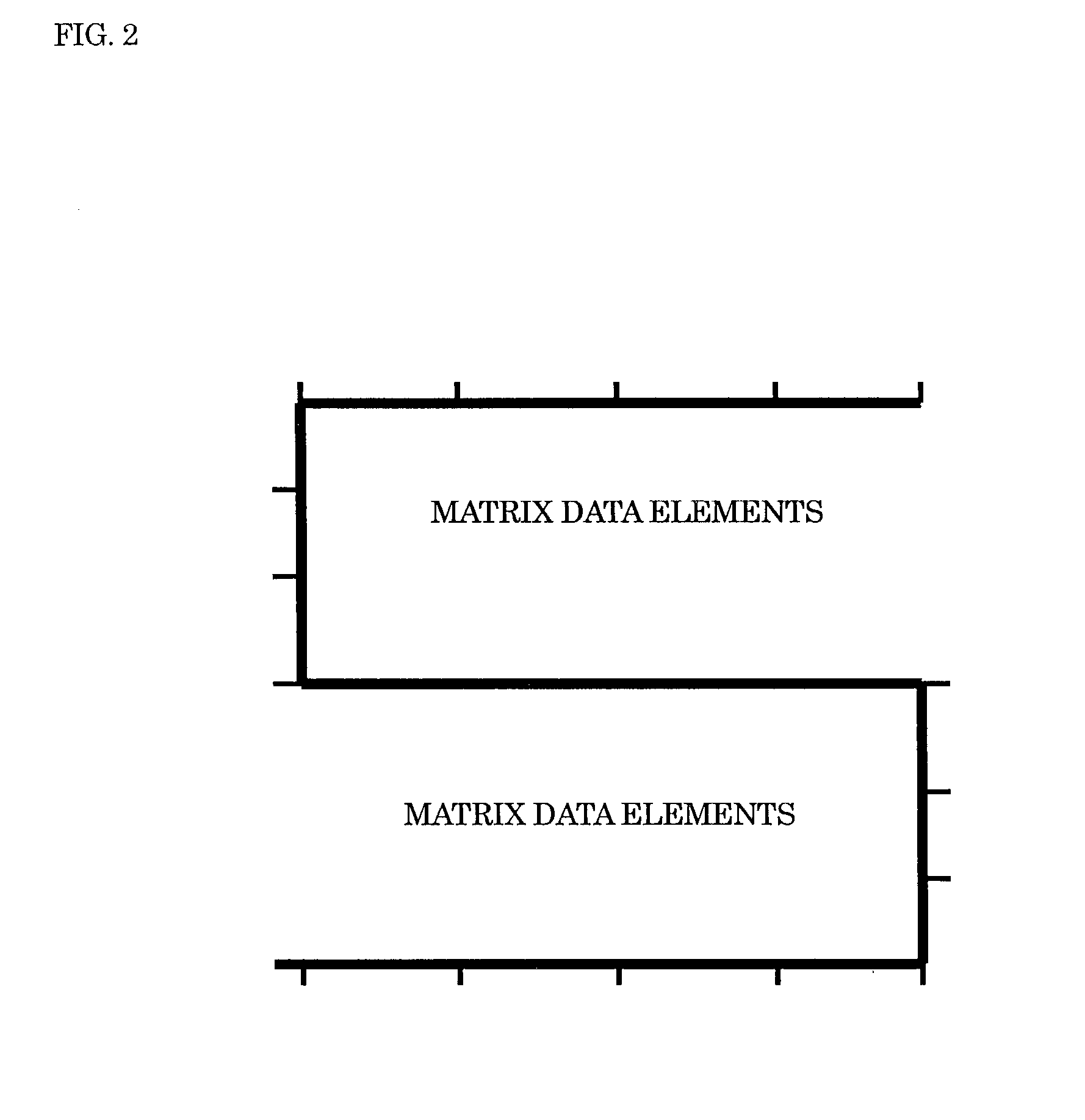

Two-dimensional code

Status: Inactive | Publication: US20030029923A1 | Tags: Improve reading speed, High density, Character and pattern recognition, Record carriers used with machines, Programming language, High density

A two-dimensional code for recognizing data at high speed and recording a large amount of data in a limited space. In this code, one solid line covering the four corners of the matrix data area is placed so that the physical size of the code can be obtained easily, and capture lines are arranged on the solid line so that the data density can be determined when the code is captured by a high-resolution CCD (charge-coupled device) image scanner. As a result, a large amount of data can be stored in a limited area and high-density codes can be generated.

Owner:MIZOGUCHI SATOSHI

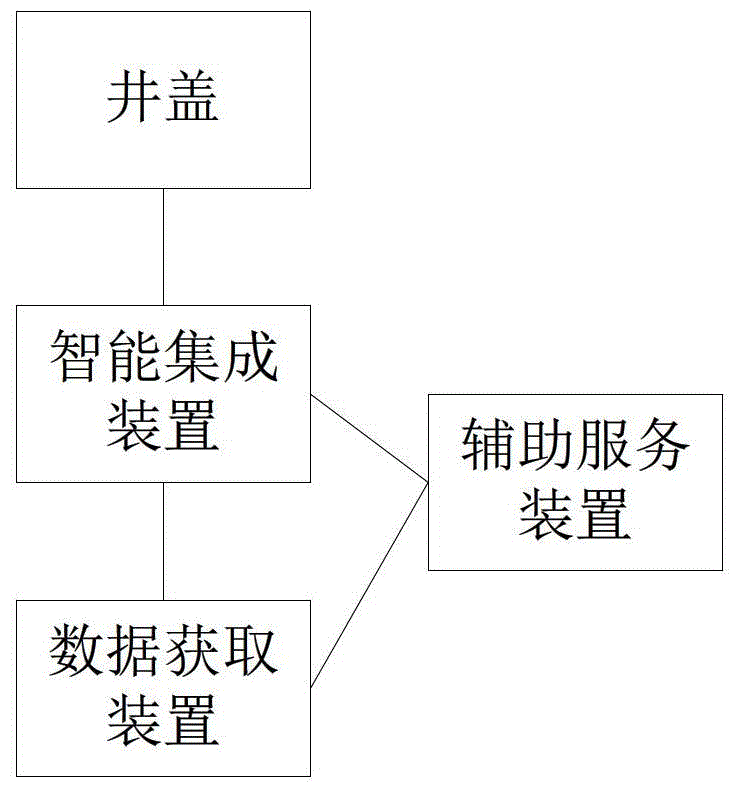

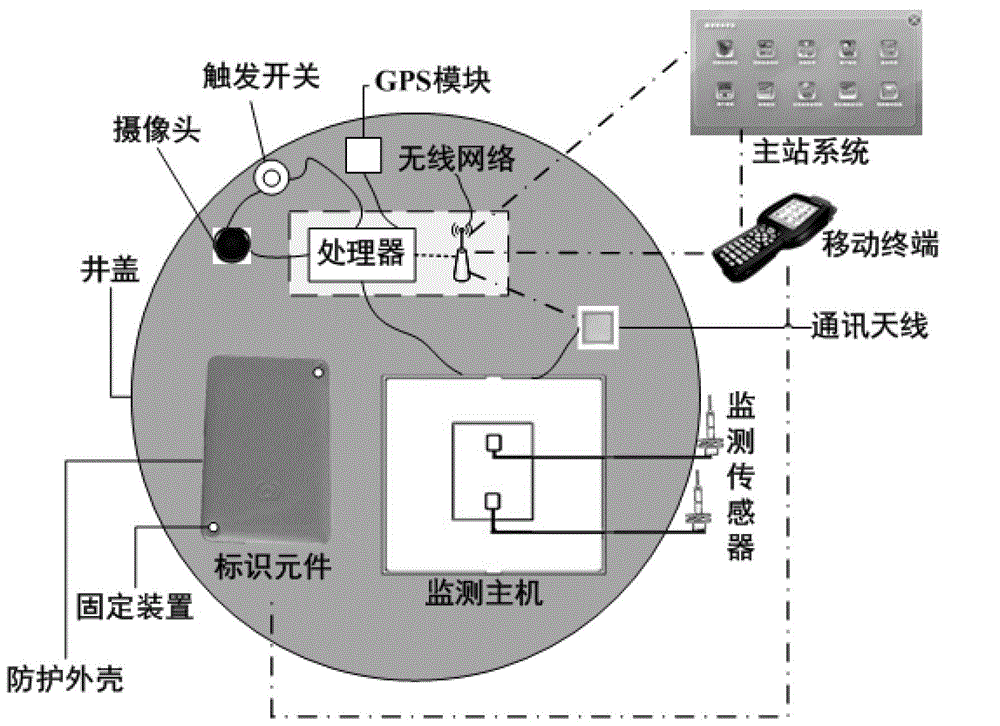

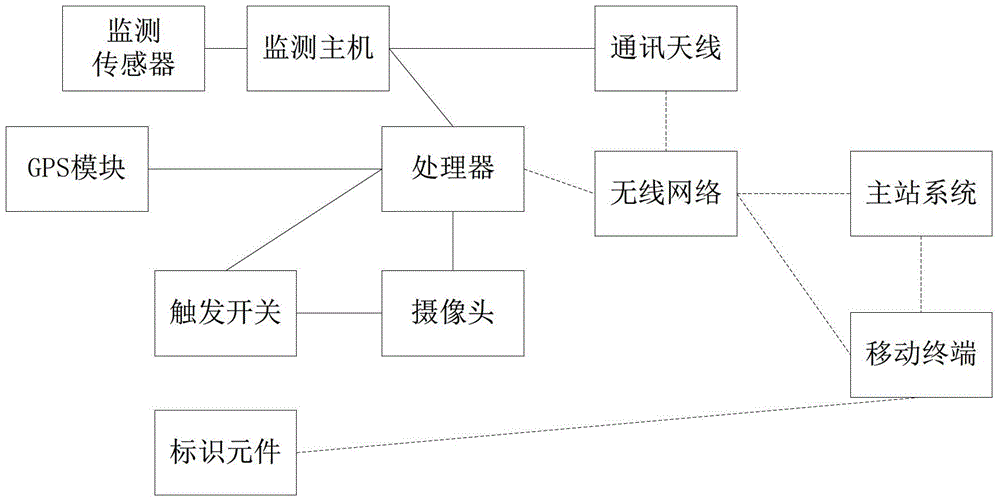

Multi-functional intelligent well cover system

Status: Inactive | Publication: CN104420482A | Tags: The collection is less affected by the environment, Improve reading speed, Artificial islands, Underwater structures, Relevant information, Covering system

The invention discloses a multi-functional intelligent well cover system. The multi-functional intelligent well cover system is characterized by comprising a well cover, an intelligent integration device for performing intelligent processing on the well cover, an information acquisition device for obtaining relevant information of the well cover, and an auxiliary service device for providing application service to the intelligent integration device and the information acquisition device. The multi-functional intelligent well cover system can perform positioning, unique identification, alarm and monitoring on the well cover.

Owner:Beijing Qingkong Human Settlements Environment Research Institute Co., Ltd. (北京清控人居环境研究院有限公司)

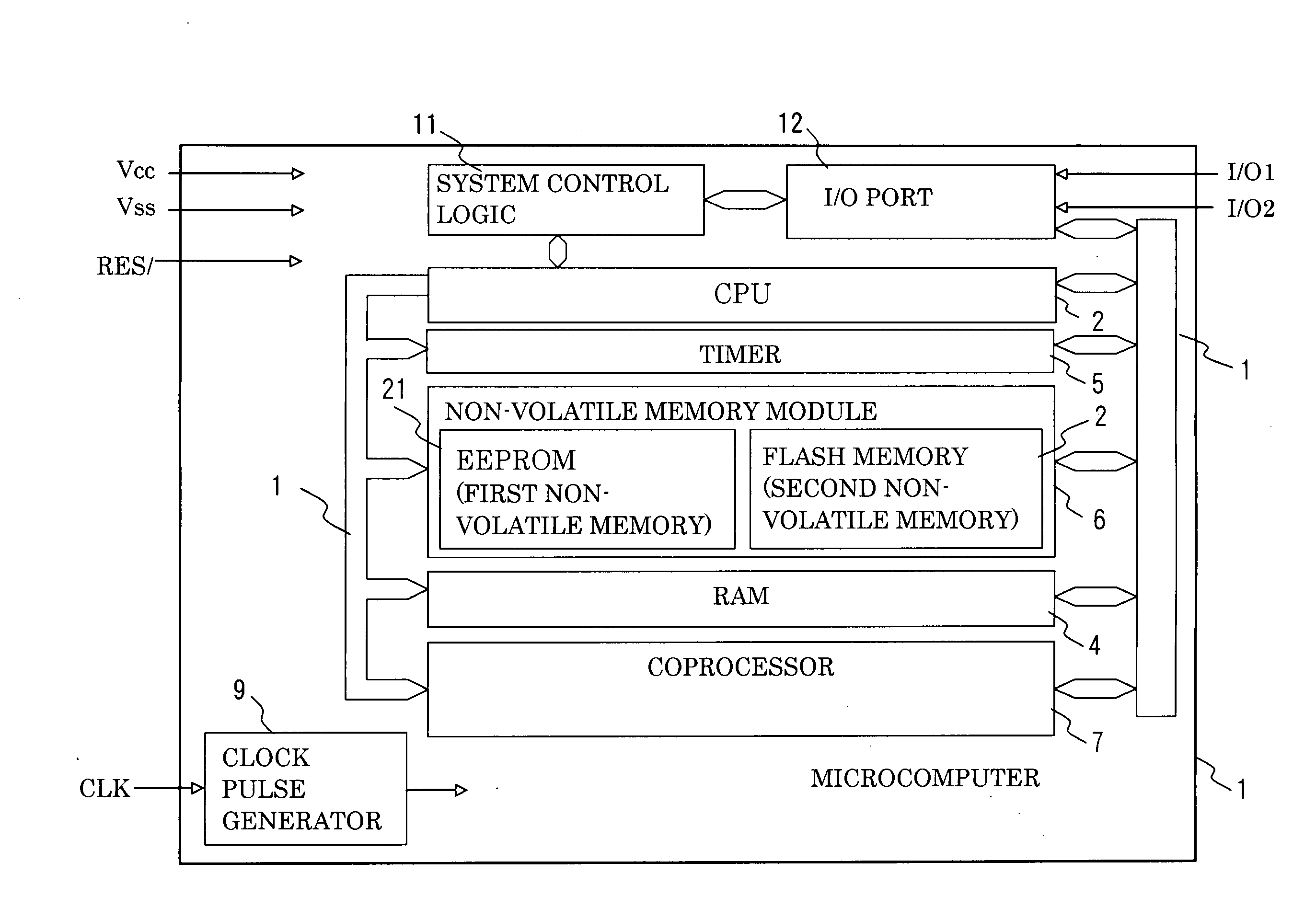

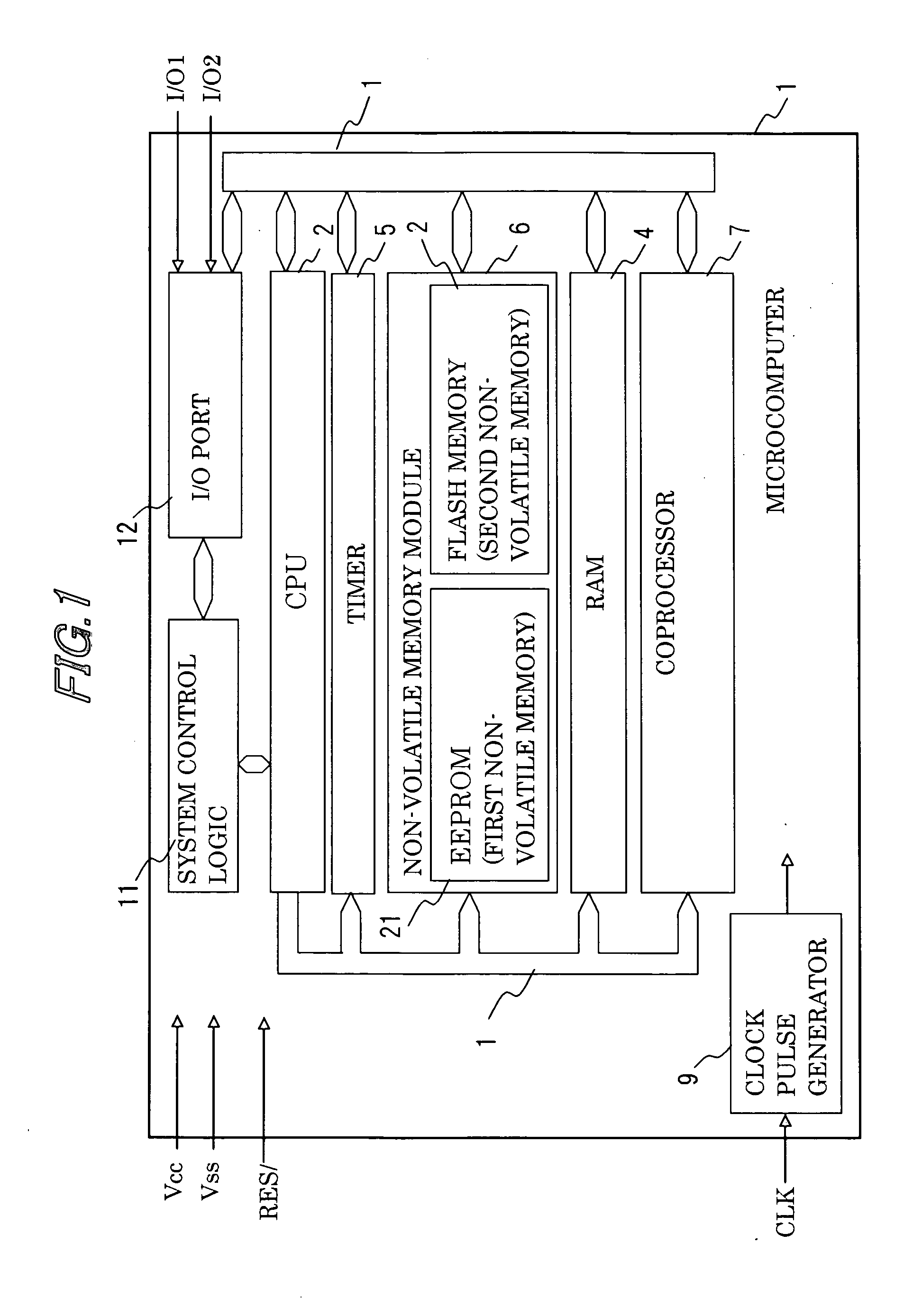

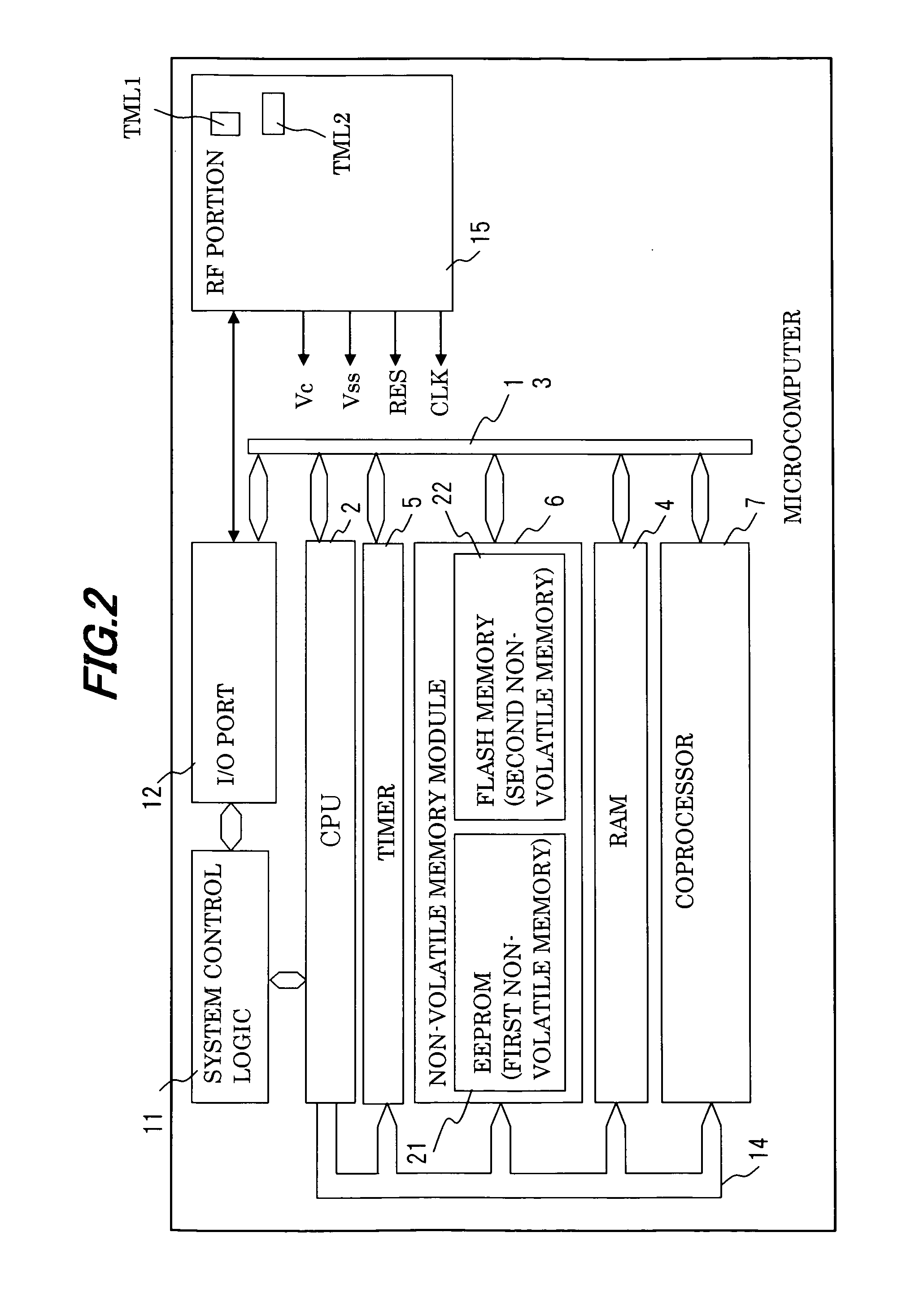

Semiconductor processing device and IC card

Status: Inactive | Publication: US20090213649A1 | Tags: Improve reliability, Small size, Read-only memories, Unauthorized memory use protection, Semiconductor, Encryption

A semiconductor processing device according to the invention includes a first non-volatile memory (21) that erases stored information in units of a first data length, a second non-volatile memory (22) that erases stored information in units of a second data length, and a central processing unit (2), and can input and output encrypted data to and from the outside. The first non-volatile memory stores an encryption key used to encrypt the data. The second non-volatile memory stores a program to be executed by the central processing unit. Because the memories storing the program and the encryption key are separate, and the data lengths of their erase units are defined separately, stored information can be erased efficiently before a program is written, and, when the encryption key used in the CPU's calculations is written, stored information can be erased in units matching the data length actually needed.

Owner:RENESAS ELECTRONICS CORP

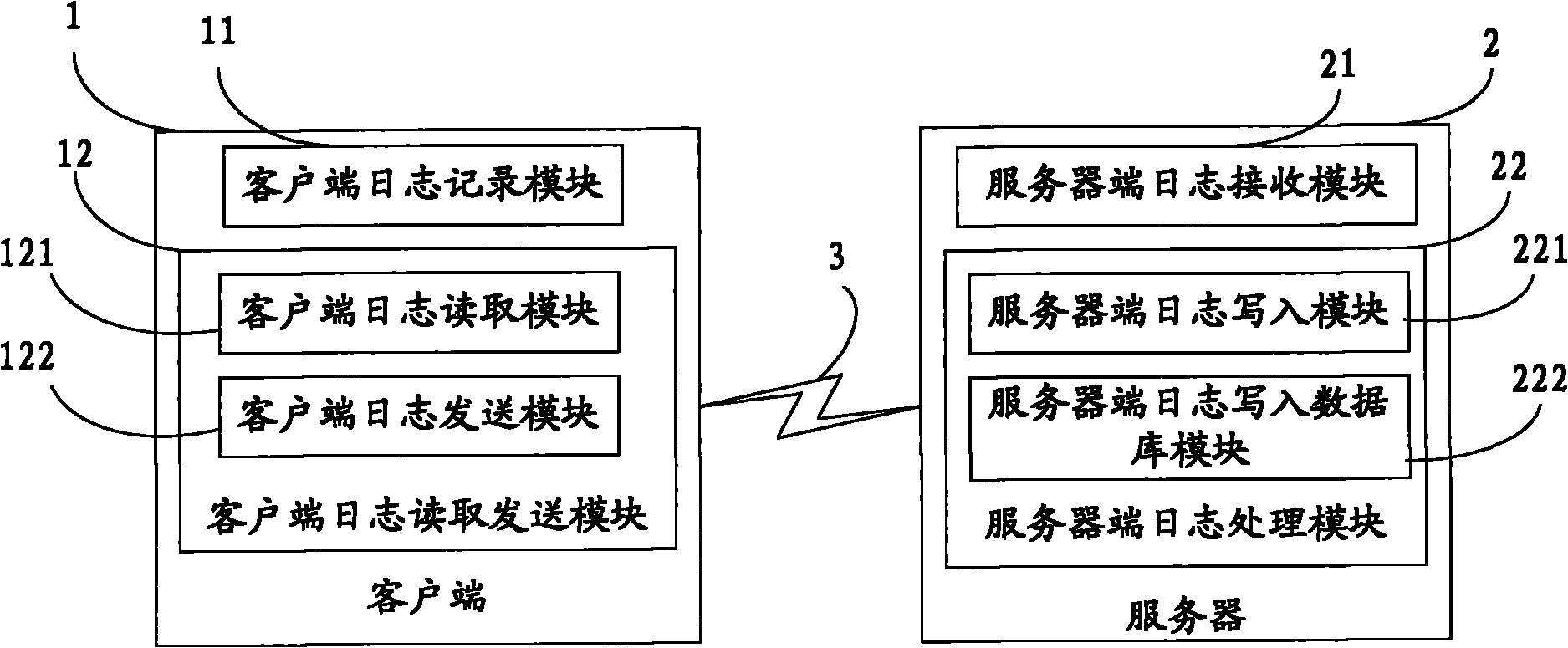

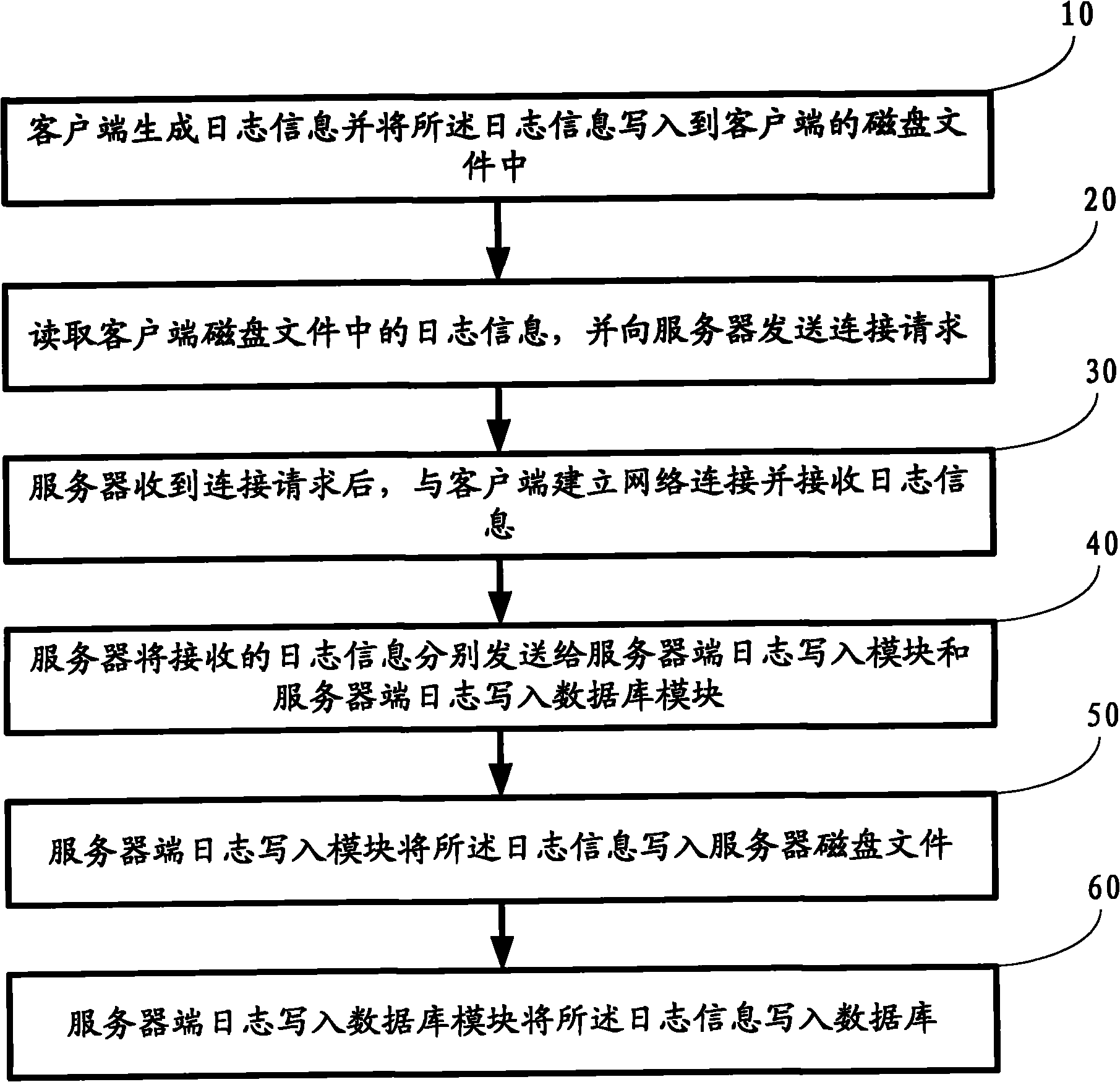

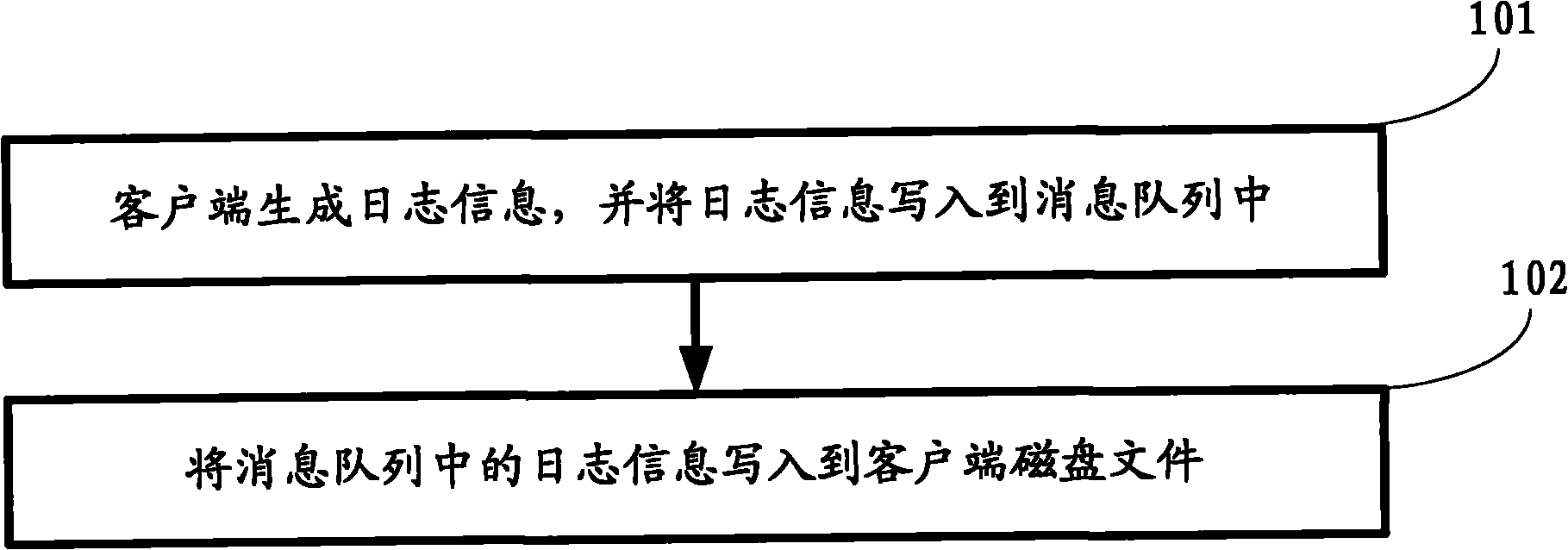

Log acquisition device and log acquisition method

Status: Inactive | Publication: CN101969386A | Tags: Real-time delivery, Realize distributed collection, Data switching networks, Server log, Network connection

The invention relates to a log acquisition device and a log acquisition method. The device comprises at least one client that collects logs and at least one server that records them, with log information transmitted between the client and the server over a network in real time. In the method, the client generates log information, writes it to a disk file on the client, reads it back from that file, and sends a connection request to the server; after receiving the request, the server establishes a network connection with the client and receives the log information; the server passes the received log information to a server log writing module and a server database writing module; the server log writing module writes the log information to a disk file on the server; and the server database writing module writes it to the database. With this device and method, data analysis becomes closer to real time and distributed log collection is achieved.

Owner:Daoyoudao Technology Group Co., Ltd. (道有道科技集团股份公司) +1
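The client/server log flow in this abstract can be sketched in a single process. Assumptions for illustration: the network hop is replaced by a direct method call, an in-memory SQLite database stands in for the server database, and the class names and the "send only lines not yet delivered" bookkeeping are hypothetical.

```python
import sqlite3
import tempfile
from pathlib import Path
from typing import List, Tuple


class LogServer:
    """Receives log lines and writes them both to a server-side file and to a database."""

    def __init__(self, log_path: Path) -> None:
        self.log_path = log_path
        self.db = sqlite3.connect(":memory:")  # stand-in for the log database
        self.db.execute("CREATE TABLE logs (client TEXT, line TEXT)")

    def receive(self, client_id: str, lines: List[str]) -> None:
        # File-writing step: append to the server's disk file.
        with self.log_path.open("a", encoding="utf-8") as f:
            for line in lines:
                f.write(f"{client_id} {line}\n")
        # Database-writing step: insert the same lines into the database.
        self.db.executemany("INSERT INTO logs VALUES (?, ?)",
                            [(client_id, line) for line in lines])
        self.db.commit()


class LogClient:
    """Writes logs to a local file, then reads new lines back and sends them."""

    def __init__(self, client_id: str, log_path: Path, server: LogServer) -> None:
        self.client_id = client_id
        self.log_path = log_path
        self.server = server
        self.sent = 0  # number of lines already delivered

    def log(self, message: str) -> None:
        with self.log_path.open("a", encoding="utf-8") as f:
            f.write(message + "\n")

    def flush_to_server(self) -> None:
        lines = self.log_path.read_text(encoding="utf-8").splitlines()
        new_lines = lines[self.sent:]
        if new_lines:
            self.server.receive(self.client_id, new_lines)  # a network send in the real system
            self.sent = len(lines)


def demo() -> Tuple[int, int]:
    """Run one client against one server in a temp directory; return (file lines, db rows)."""
    with tempfile.TemporaryDirectory() as tmp:
        server = LogServer(Path(tmp) / "server.log")
        client = LogClient("client-1", Path(tmp) / "client.log", server)
        client.log("start")
        client.log("step 1")
        client.flush_to_server()
        client.log("done")
        client.flush_to_server()
        file_lines = len((Path(tmp) / "server.log").read_text(encoding="utf-8").splitlines())
        db_rows = server.db.execute("SELECT COUNT(*) FROM logs").fetchone()[0]
        server.db.close()
        return file_lines, db_rows
```

Writing every received line to both the file and the database mirrors the two server-side sinks the abstract describes; in a real deployment each client would connect over a socket instead of calling `receive` directly.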

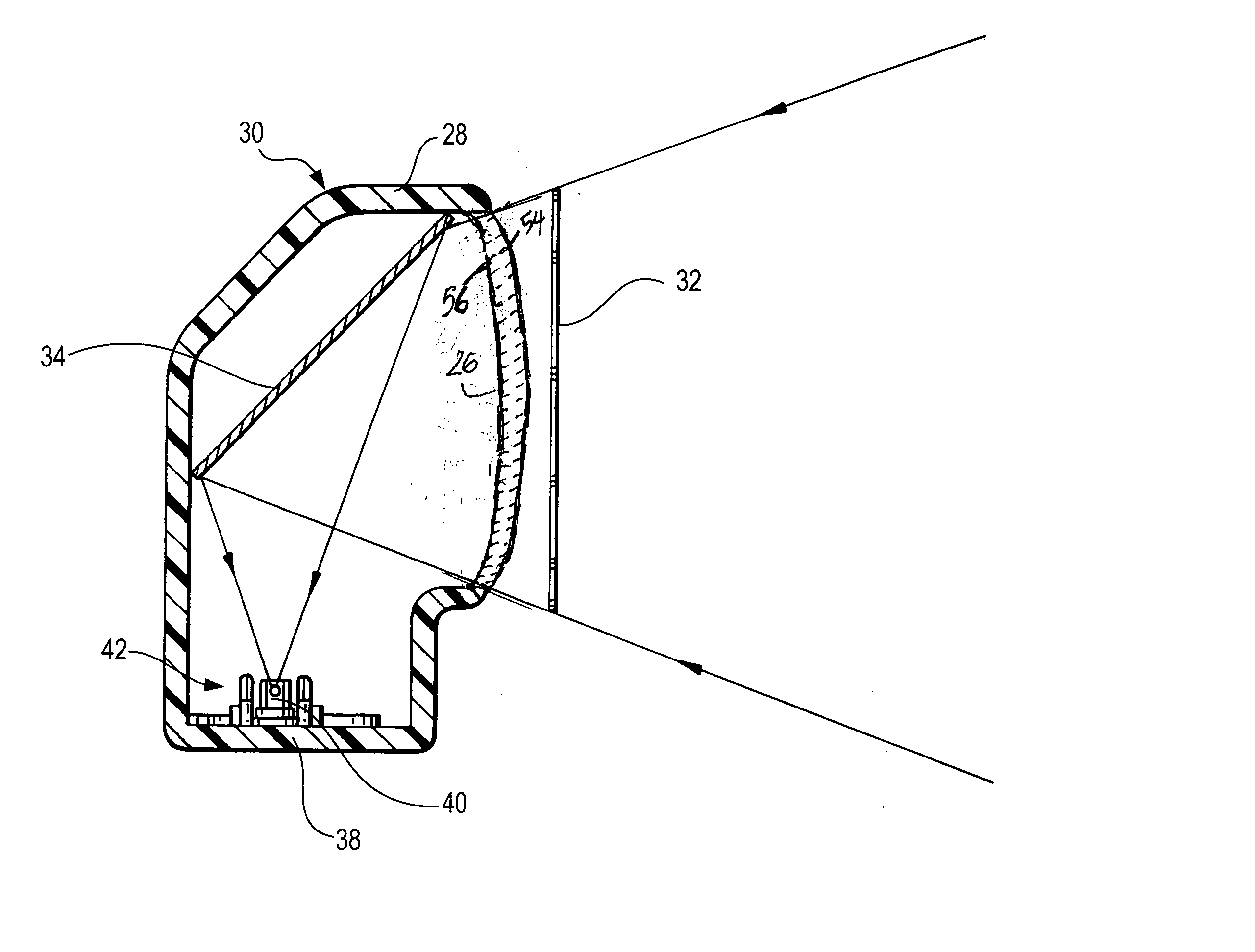

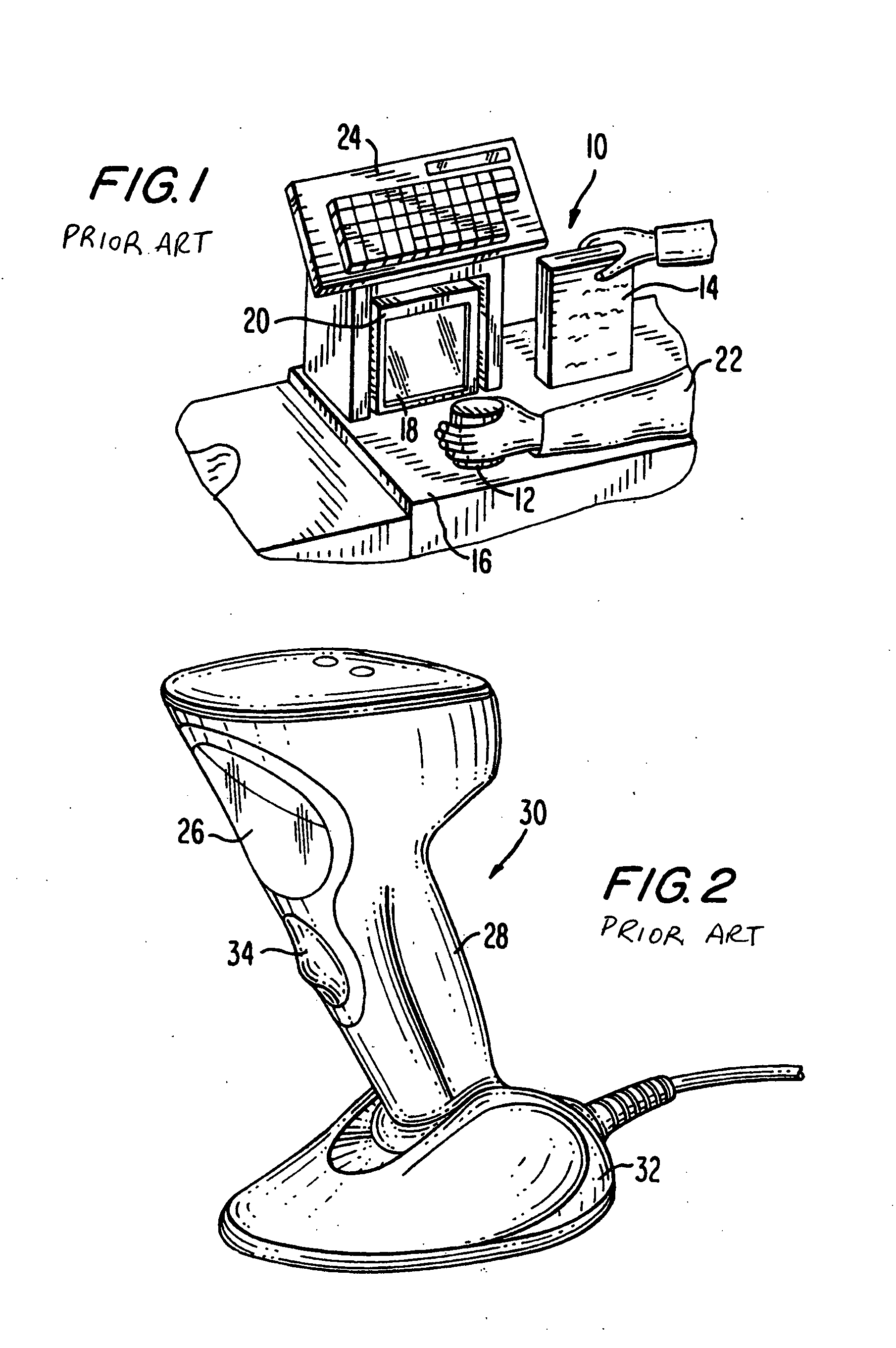

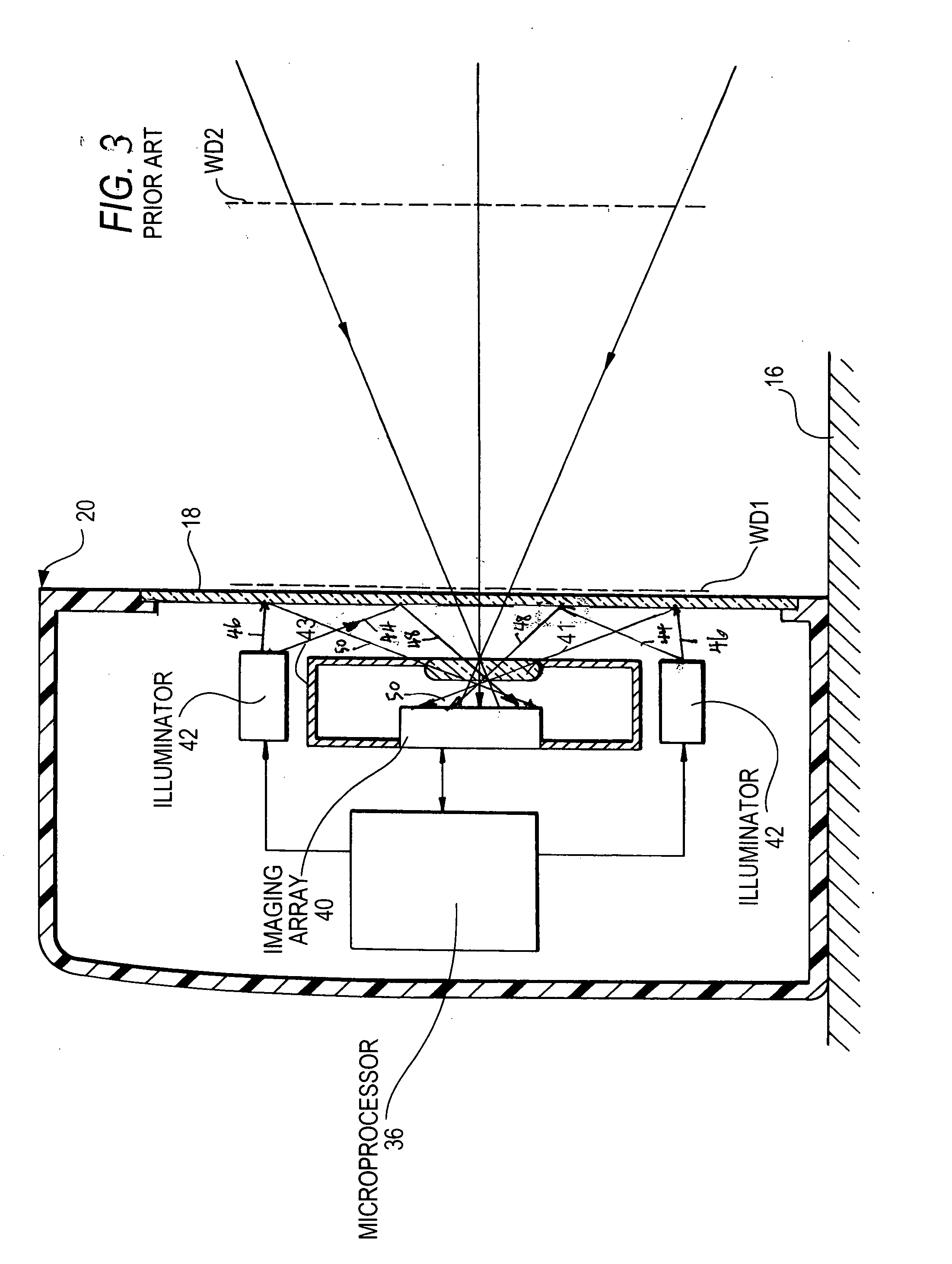

Imaging reader and method with internal window reflections directed away from imager

Status: Active | Publication: US20070194119A1 | Tags: Reliably capturing image, Enhance reader performance, Character and pattern recognition, Sensing by electromagnetic radiation, Image capture, Optics

A target is illuminated with illumination light for image capture by a solid-state imager of an imaging reader. Internal reflections of the illumination light at a window are minimized, if not eliminated, by configuring the window as a non-planar optical element operative for redirecting the internal reflections away from the imager to enhance reader performance.

Owner:SYMBOL TECH LLC

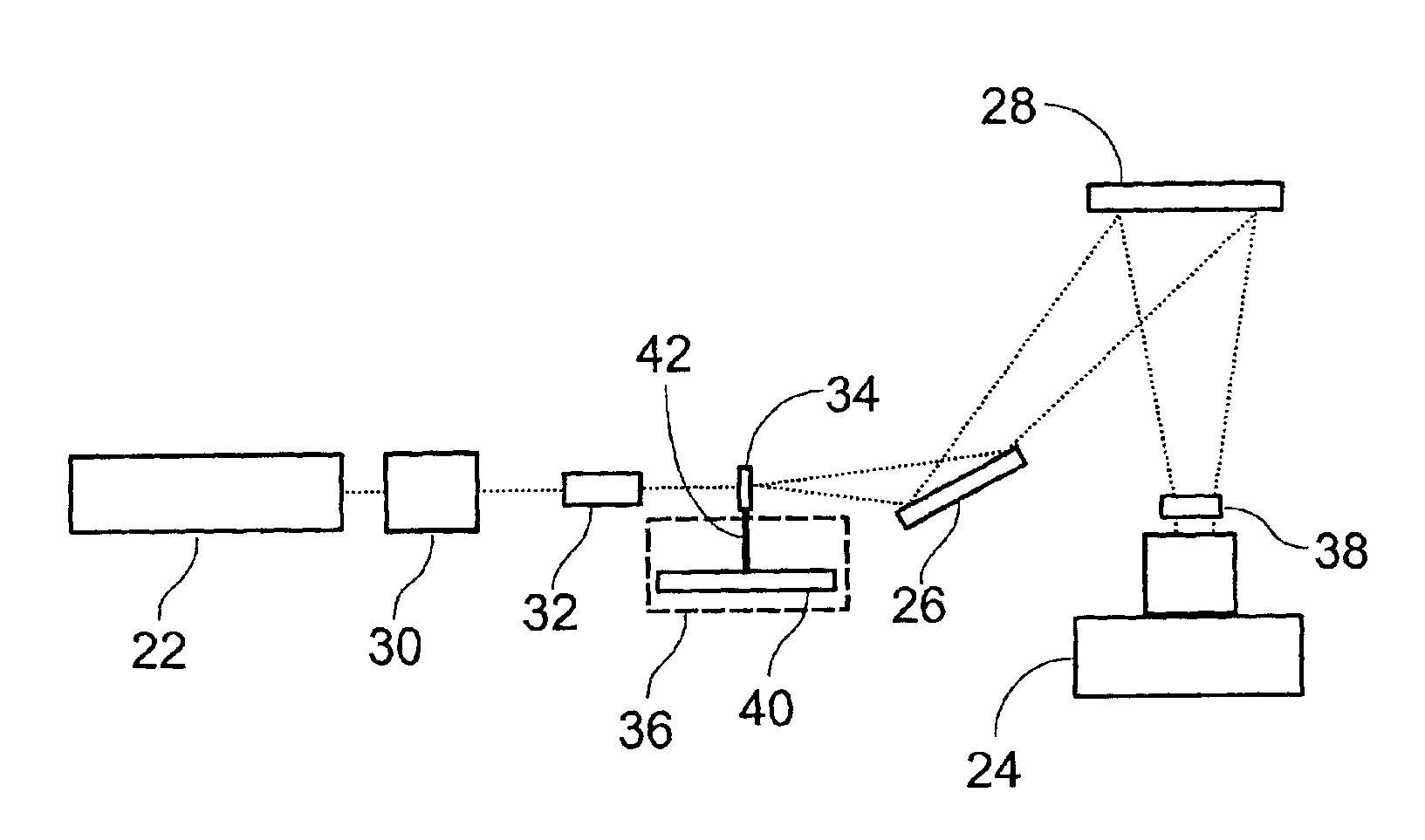

Light detection device

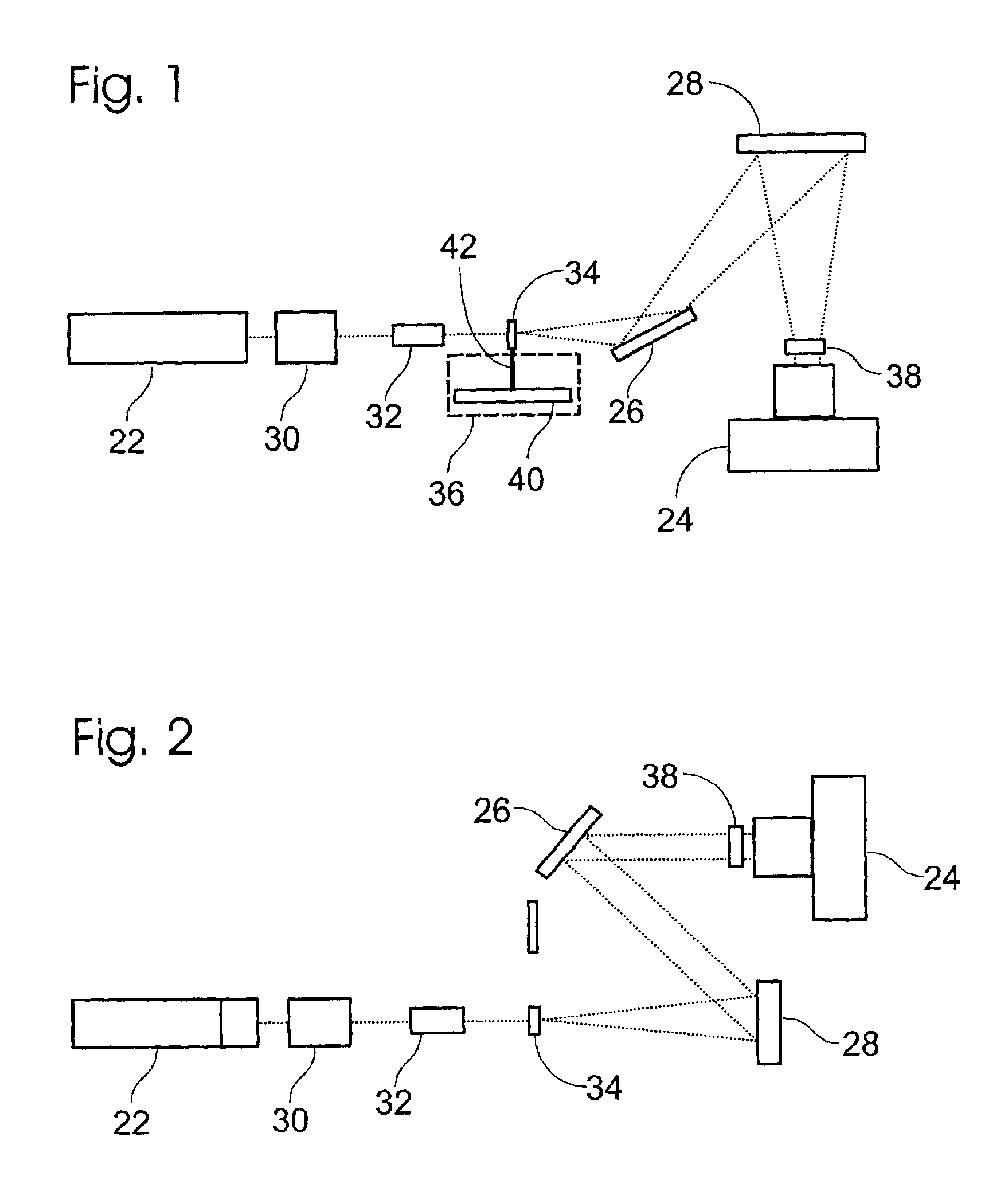

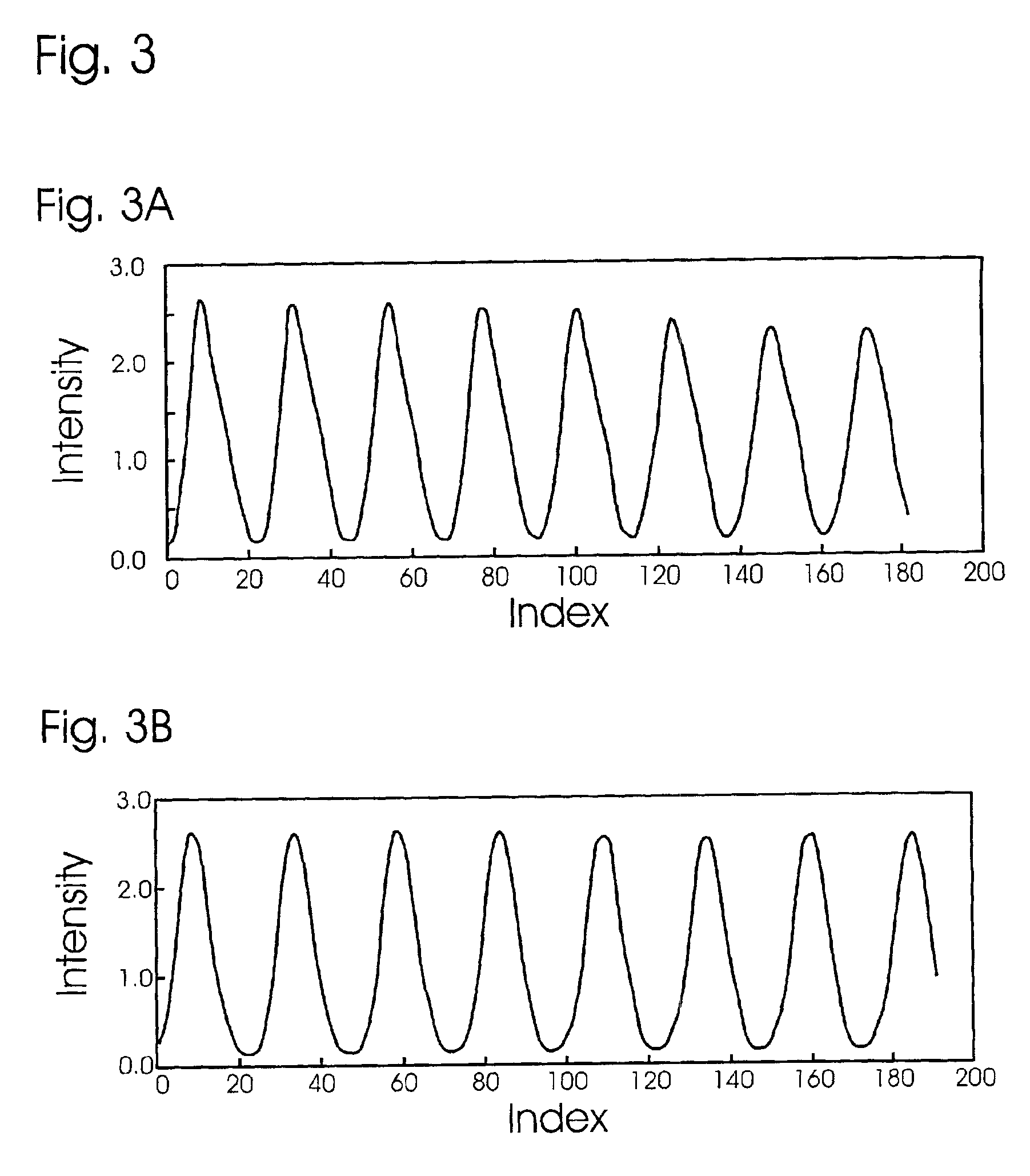

Status: Inactive | Publication: US6930314B2 | Tags: Increase flexibility, Improve reading speed, Spectrum investigation, Scattering properties measurements, Genomics, Microscope slide

Apparatus and methods for optical illumination and/or detection with improved flexibility and/or read speed. The apparatus and methods may include mechanisms for selecting and switching between multiple excitation wavelengths and/or simultaneously reading from a plurality of sample sites. The apparatus and methods may be used with microplates, PCR plates, cell culture plates, biochips, chromatography plates, microscope slides, and other substrates for high-throughput screening, genomics, SNP analysis, pharmaceutical research and development, life sciences research, and other applications.

Owner:MOLECULAR DEVICES

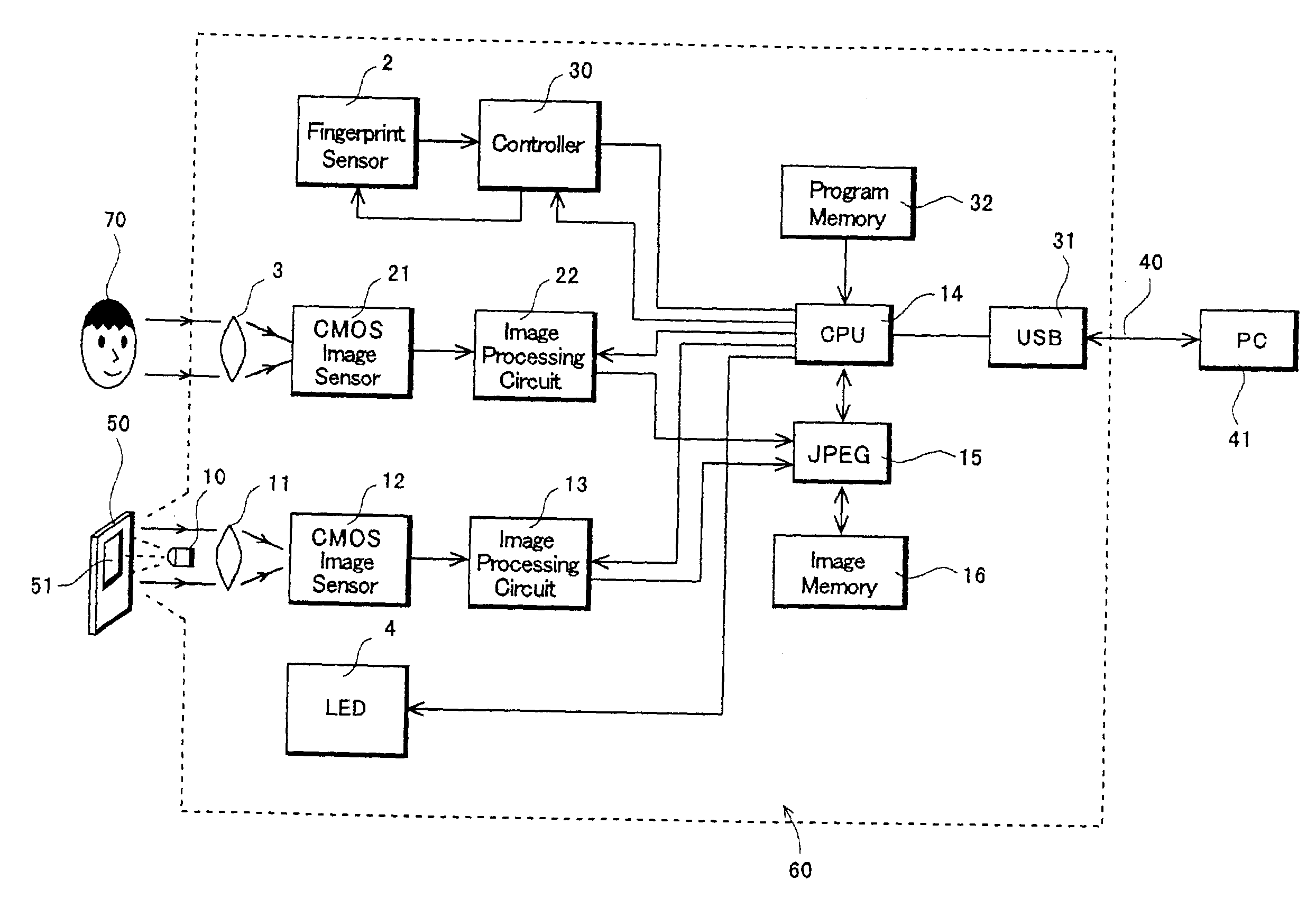

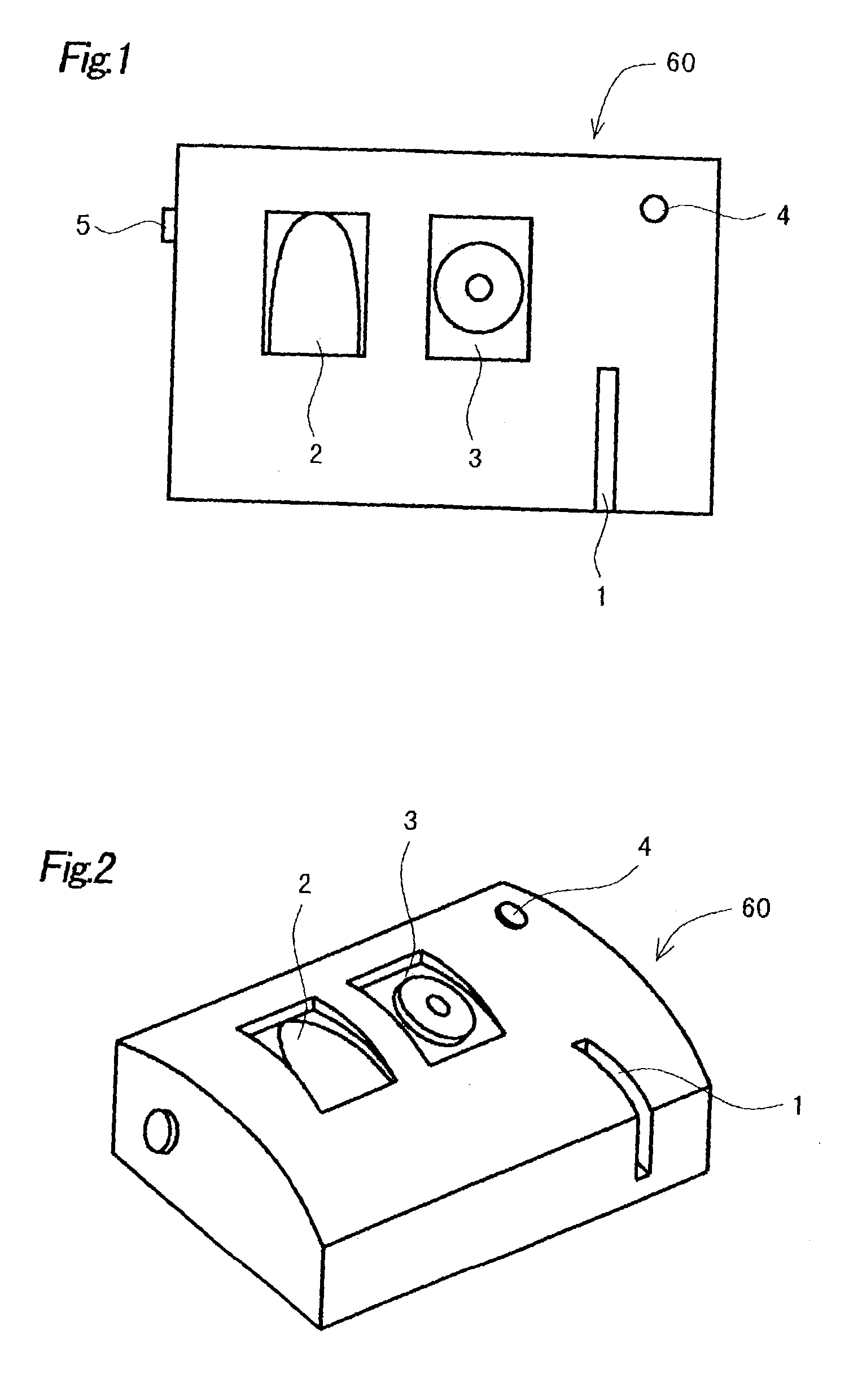

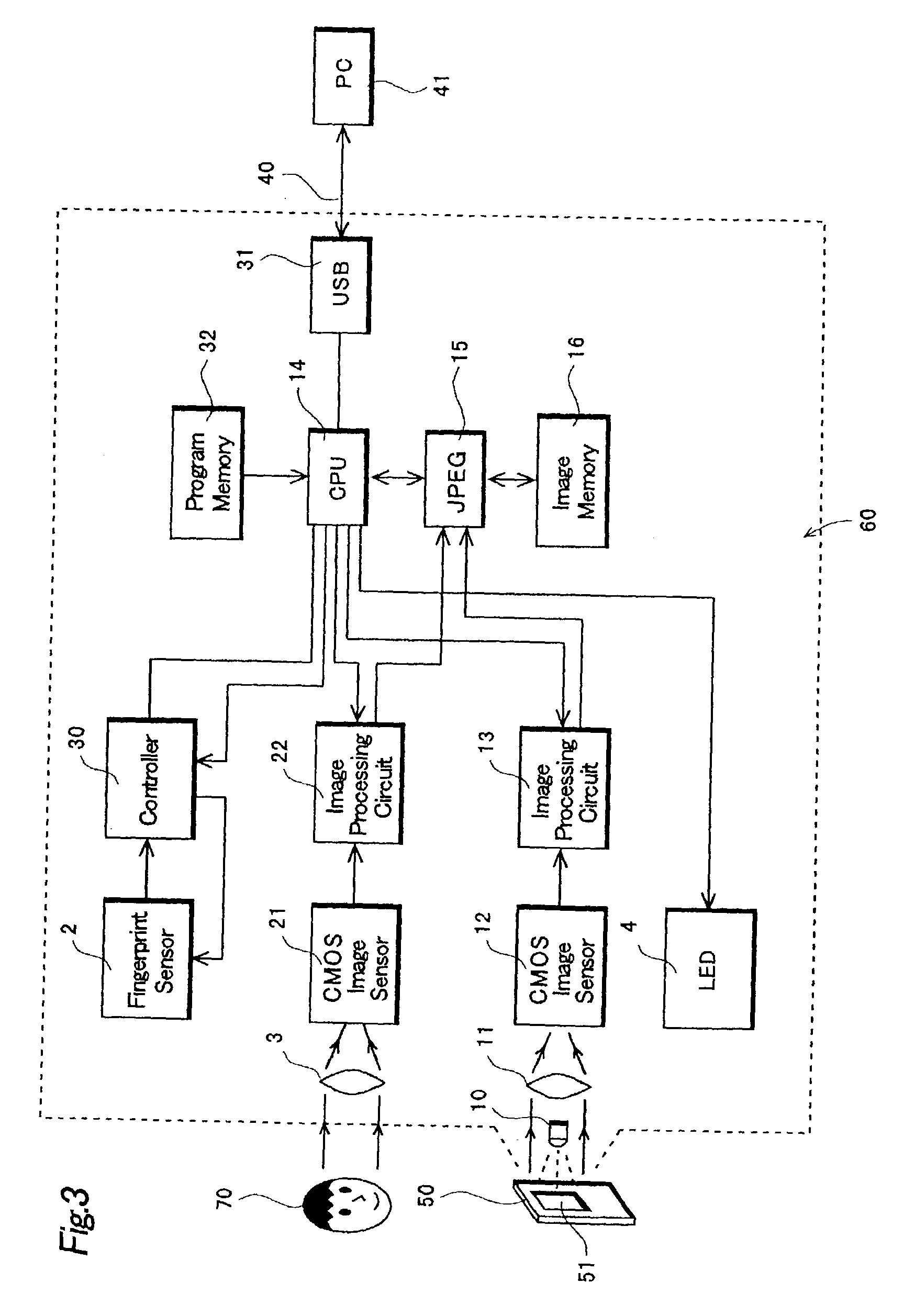

Personal authentication system and method thereof

Status: Active | Publication: US20030123710A1 | Tags: Improve reading speed, Reduce distance, Co-operative working arrangements, Character and pattern recognition, CMOS, Barcode

A personal authentication system of this invention has a card reader in which a barcode reading device for reading a two-dimensional barcode containing personal data, a CMOS image sensor for producing face data by photographing the person's face, and a fingerprint reading device for producing fingerprint data by reading the person's fingerprint are assembled as one unit. A personal computer performs a projection transform and a brightness correction on the barcode read by the card reader to acquire accurate data. The personal data, the face data and the fingerprint data are then compared with a database to authenticate the person. This invention therefore achieves more accurate personal authentication and improved security.

Owner:SEMICON COMPONENTS IND LLC

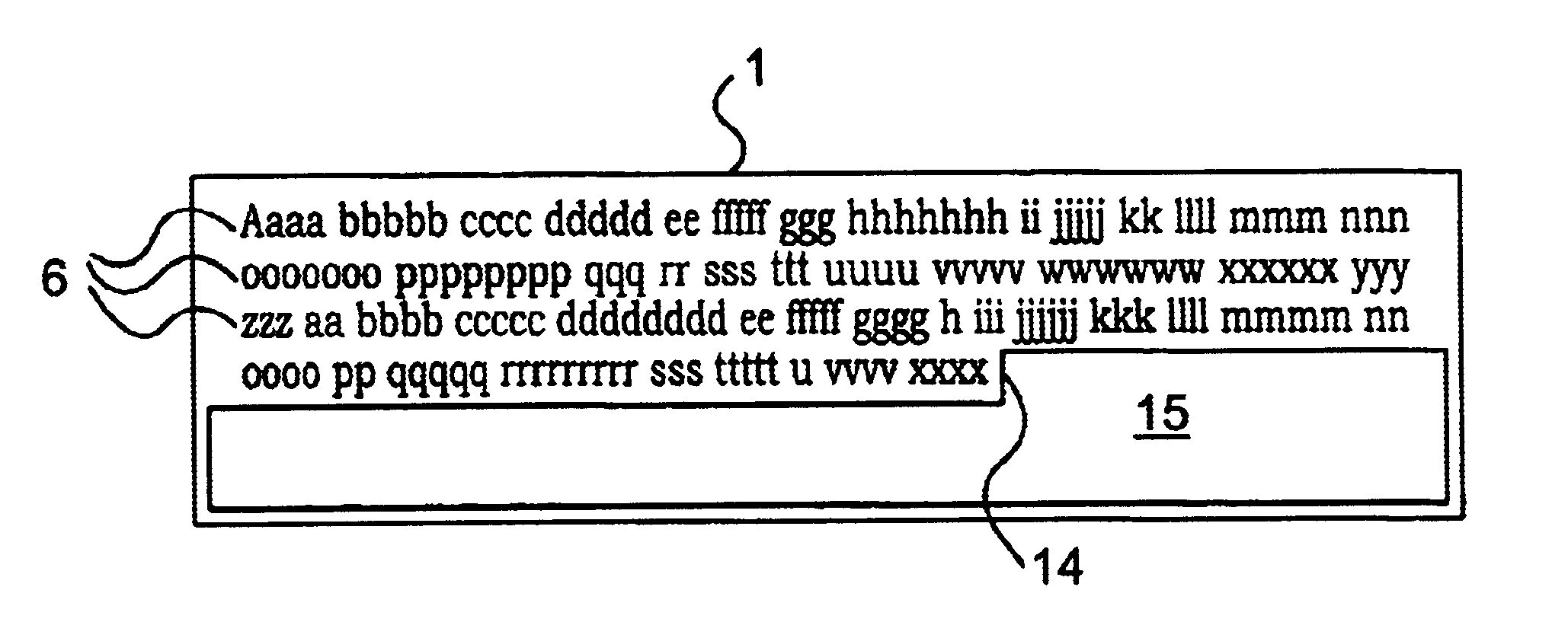

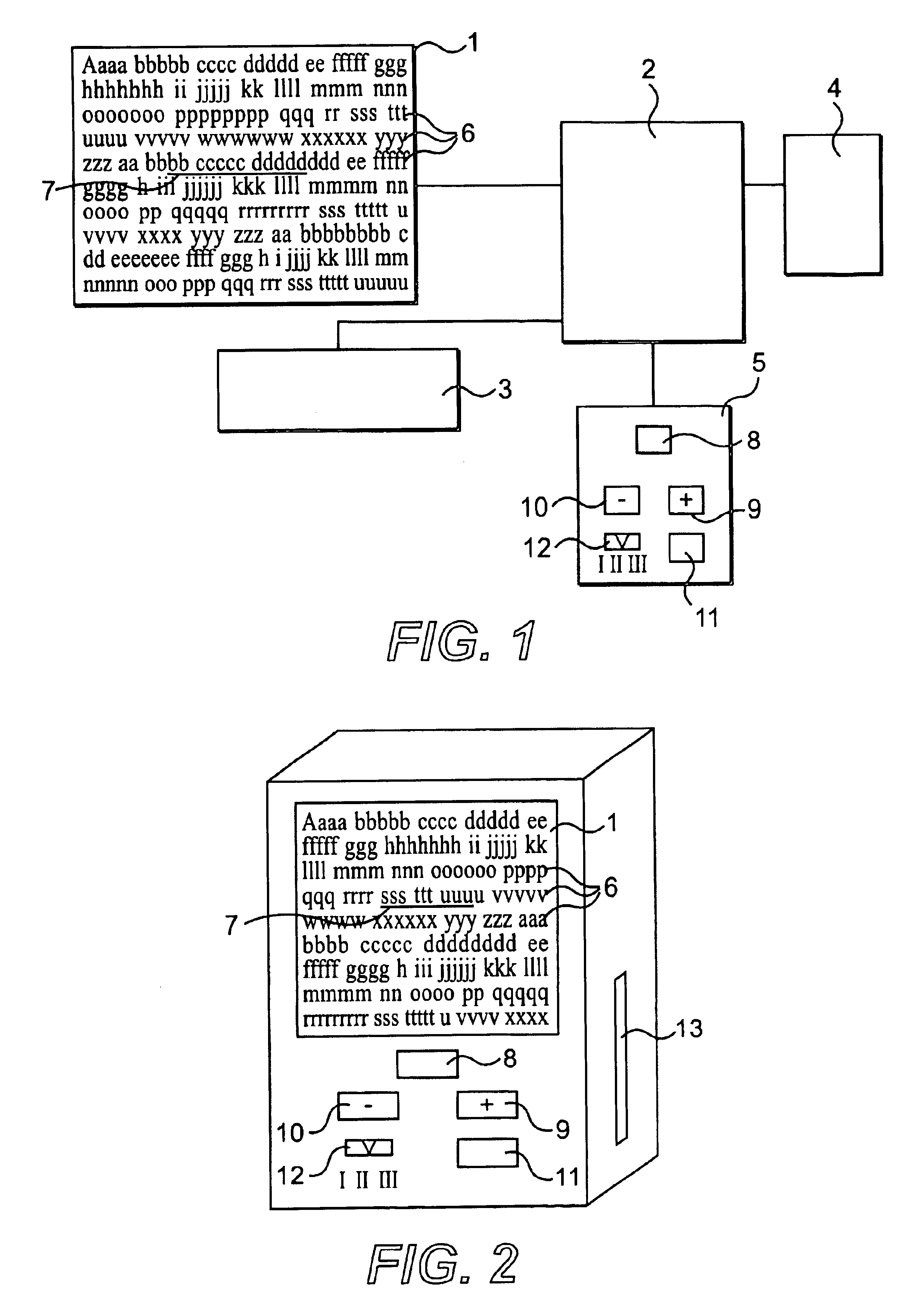

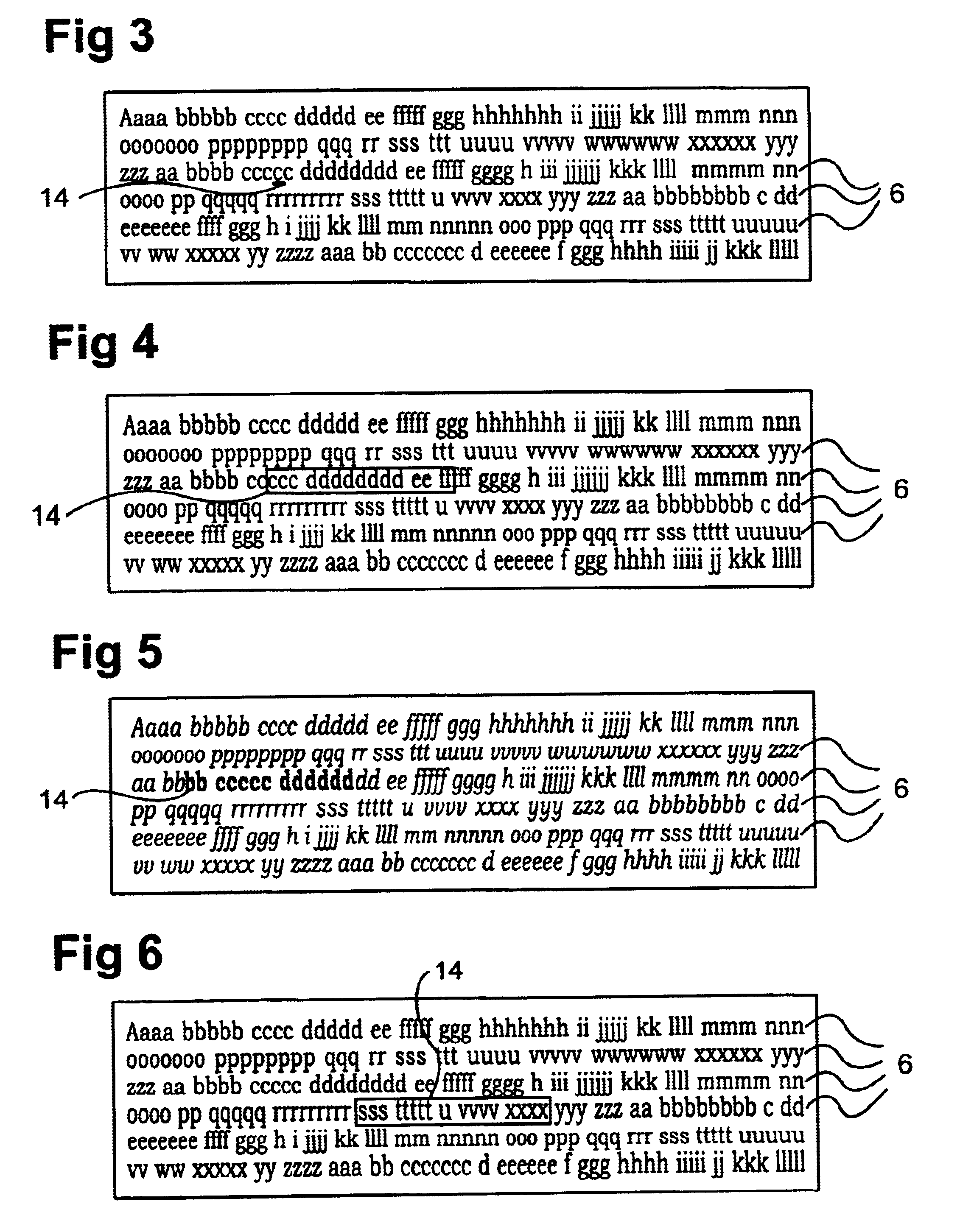

Device for supporting reading of a text from a display member

Status: Inactive | Publication: US6726487B1 | Tags: Improve reading speed, Easily follow text, Reading, Cathode-ray tube indicators, Computer science

Owner:DALSTROM TOMAS

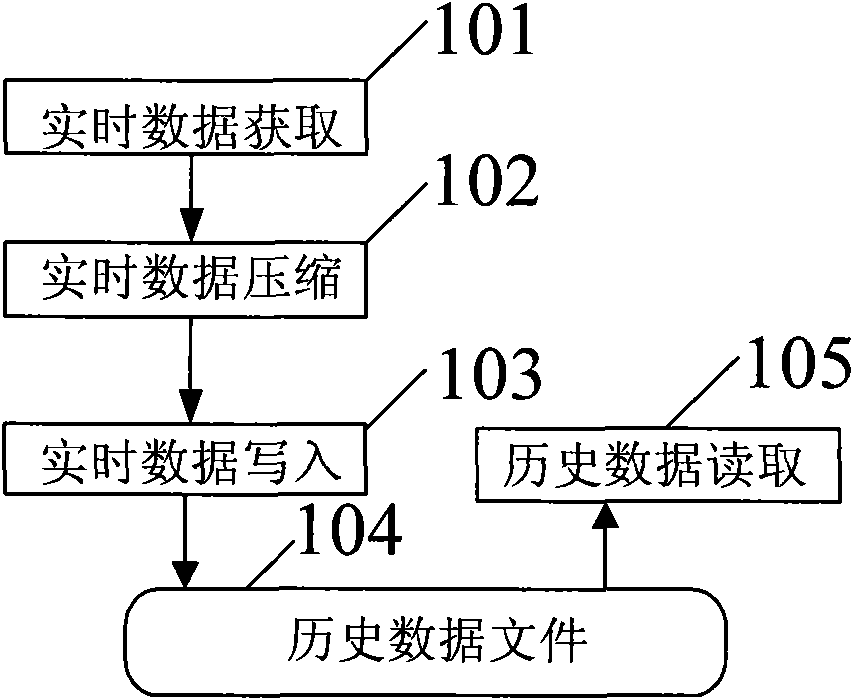

Establishing method for process control historical data file structure and data read-write method

Status: Active | Publication: CN102043795A | Tags: Reduce disk space usage, Save disk space, Special data processing applications, Data file, Data compression

The invention discloses a method for establishing a process control historical data file structure, comprising the following steps: S1, establishing a file header and writing file identification information into it; S2, establishing an index area divided into a measuring point description area and a data address area, where each data address in the data address area is the starting address, within the historical data file, of the historical data written in each fixed-duration write; S3, establishing a data area for storing the written historical data of the measuring points, where all data of each measuring point is stored in at least three contiguous storage areas. The invention further discloses a storage method and a query method for process control historical data. By combining several data compression modes, the invention greatly saves disk space; block writing and memory mapping increase data storage speed; and contiguous storage, fast search and block reading of single-point data greatly improve data reading speed.

Owner:SHANGHAI XINHUA CONTROL TECH GROUP
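The header / index area / data area layout in this abstract can be sketched with Python's `struct` module. This is a simplified, hypothetical format: the magic bytes, field widths and little-endian float64 samples are assumptions; the point description and data address are merged into one index entry; names longer than 16 ASCII bytes would be truncated by `16s`; and compression and fixed-duration block writes are not modeled.

```python
import struct
from typing import Dict, List

MAGIC = b"HIST"                        # hypothetical file identification
HEADER = struct.Struct("<4sHH")        # magic, format version, number of measuring points
INDEX_ENTRY = struct.Struct("<16sII")  # point name, data start address, sample count


def build_file(points: Dict[str, List[float]]) -> bytes:
    """Lay out header, index area and data area; each point's samples are contiguous."""
    data_start = HEADER.size + INDEX_ENTRY.size * len(points)
    index = b""
    data = b""
    for name, values in points.items():
        address = data_start + len(data)  # absolute start address of this point's block
        index += INDEX_ENTRY.pack(name.encode("ascii"), address, len(values))
        data += struct.pack(f"<{len(values)}d", *values)
    return HEADER.pack(MAGIC, 1, len(points)) + index + data


def read_point(blob: bytes, name: str) -> List[float]:
    """Find a point in the index area, then read its whole block in one slice."""
    magic, _version, count = HEADER.unpack_from(blob, 0)
    if magic != MAGIC:
        raise ValueError("not a historical data file")
    for i in range(count):
        raw_name, address, n = INDEX_ENTRY.unpack_from(blob, HEADER.size + i * INDEX_ENTRY.size)
        if raw_name.rstrip(b"\0").decode("ascii") == name:
            return list(struct.unpack_from(f"<{n}d", blob, address))
    raise KeyError(name)
```

Because each point's samples sit in one contiguous block, a query for a single point costs one index scan plus one block read, which is the access pattern the abstract credits for faster reading.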

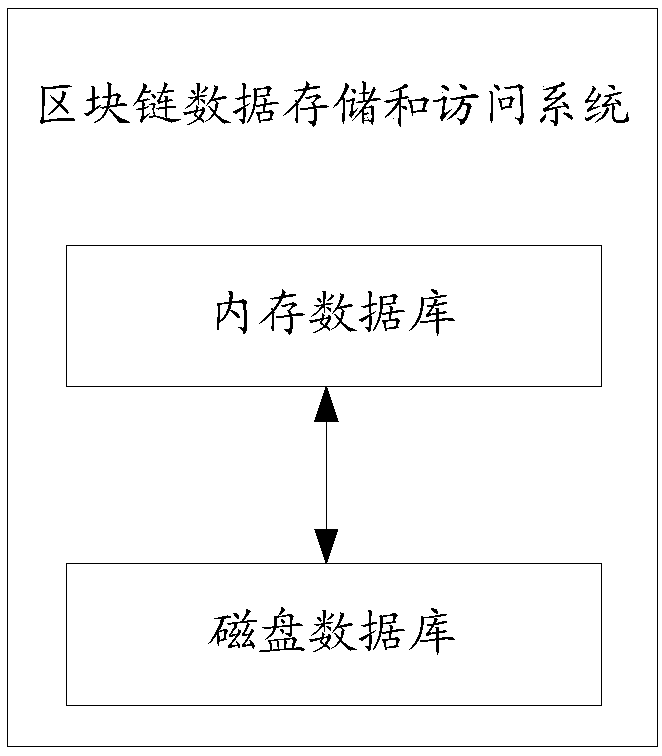

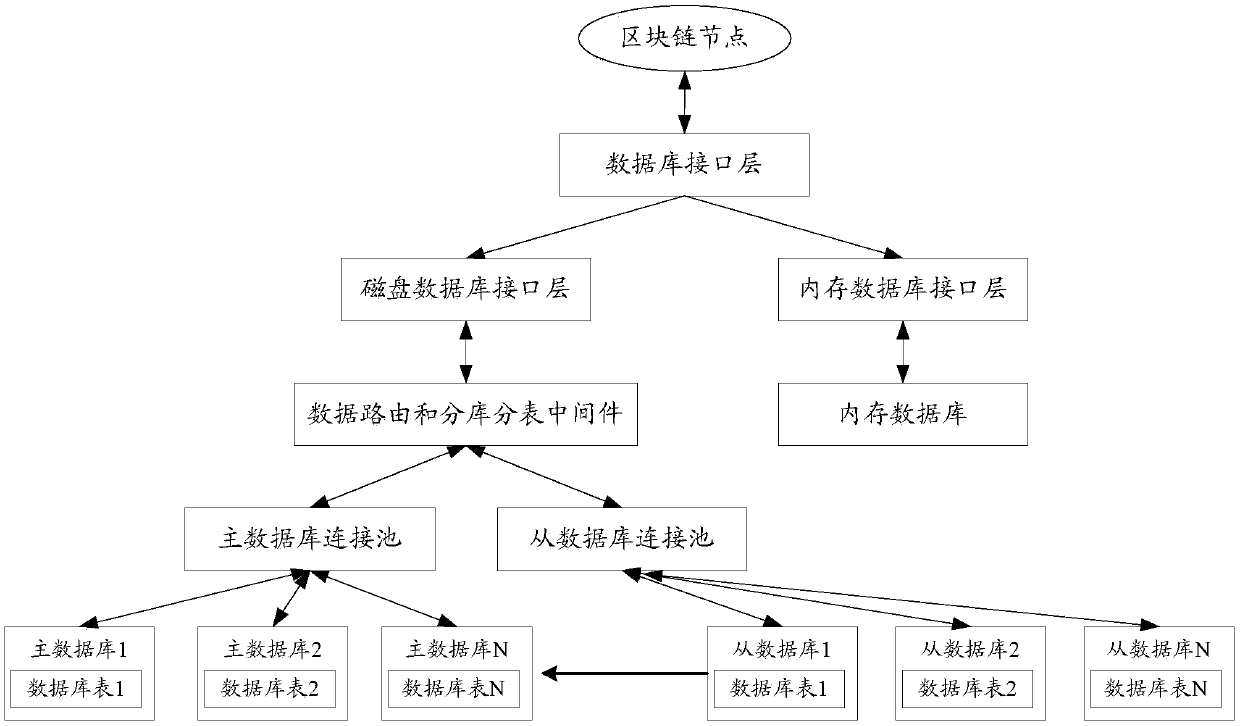

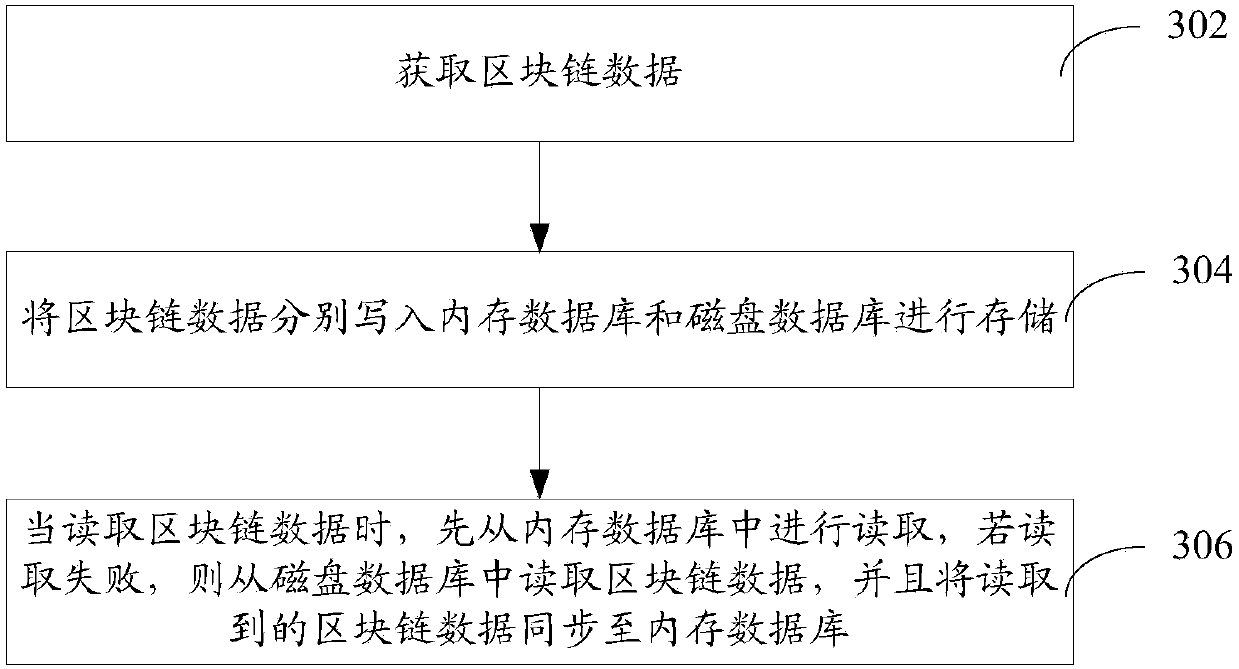

Block-chain data storage system and method

Status: Active | Publication: CN107704196A | Tags: Increase storage, Improve reading speed, Input/output to record carriers, Payment architecture, Data storage system, Blockchain

The invention relates to a blockchain data storage system and method. The system includes a memory database and a disk database, each of which receives and stores the blockchain data written by a blockchain node. When the node reads blockchain data, it first reads from the memory database; if that read fails, it reads the data from the disk database and synchronizes the data it read to the memory database. The system can effectively improve the read and write efficiency of blockchain data.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN
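The read path in this abstract (memory first, disk on a miss, then synchronize back to memory) is a read-through cache. A minimal sketch, assuming plain dictionaries as stand-ins for the two databases; the class and method names are hypothetical.

```python
from typing import Dict, Optional


class BlockchainStore:
    """Hypothetical two-tier store: a fast memory tier in front of a durable disk tier."""

    def __init__(self) -> None:
        self.memory_db: Dict[str, bytes] = {}  # stand-in for the in-memory database
        self.disk_db: Dict[str, bytes] = {}    # stand-in for the disk database

    def write(self, key: str, value: bytes) -> None:
        # Both databases receive every write from the node.
        self.memory_db[key] = value
        self.disk_db[key] = value

    def read(self, key: str) -> Optional[bytes]:
        value = self.memory_db.get(key)
        if value is not None:
            return value
        # Memory miss: fall back to disk and synchronize the result into memory.
        value = self.disk_db.get(key)
        if value is not None:
            self.memory_db[key] = value
        return value
```

If the memory tier is cleared (for example, after a restart), the first read of a block is served from disk and repopulates memory, so later reads of the same block take the fast path.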

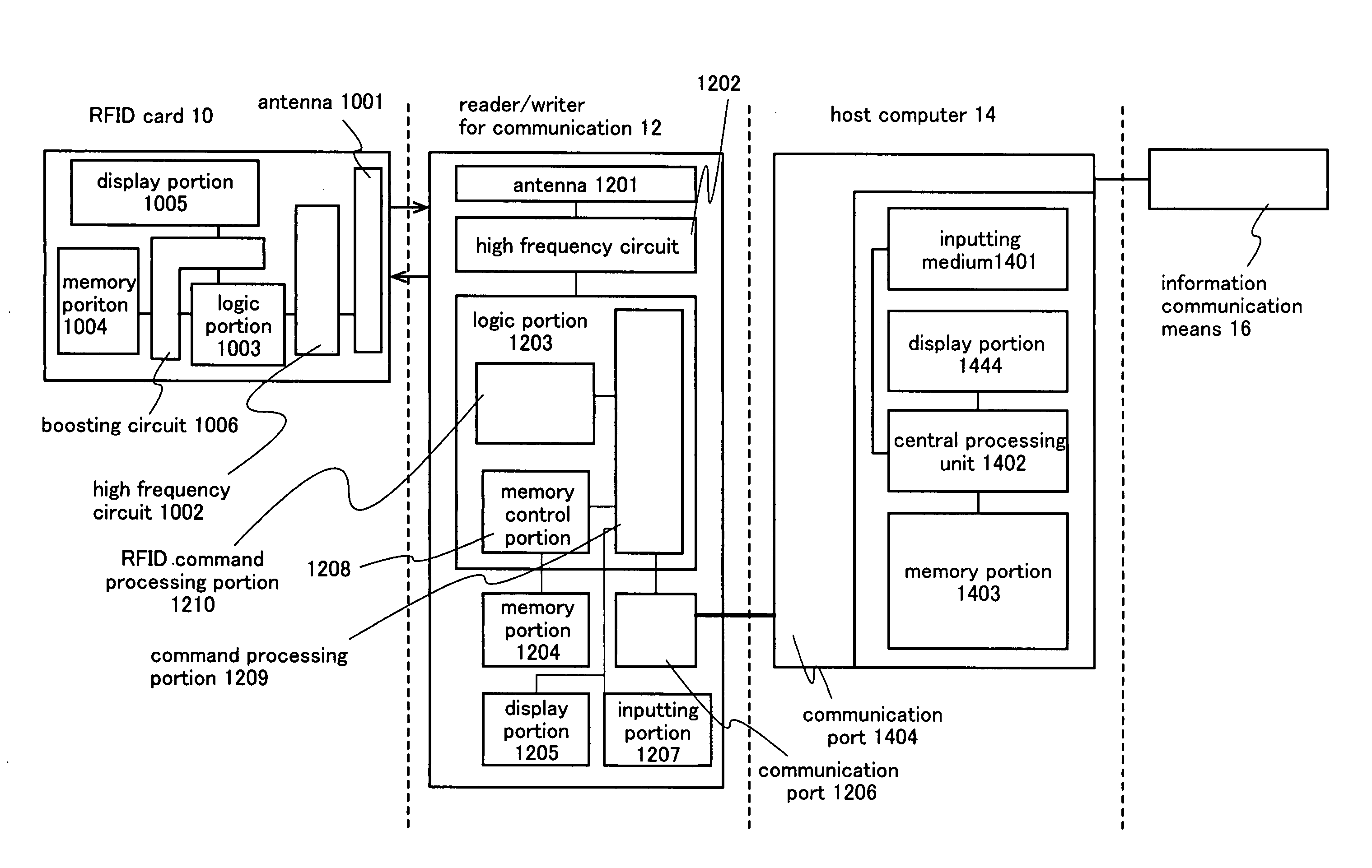

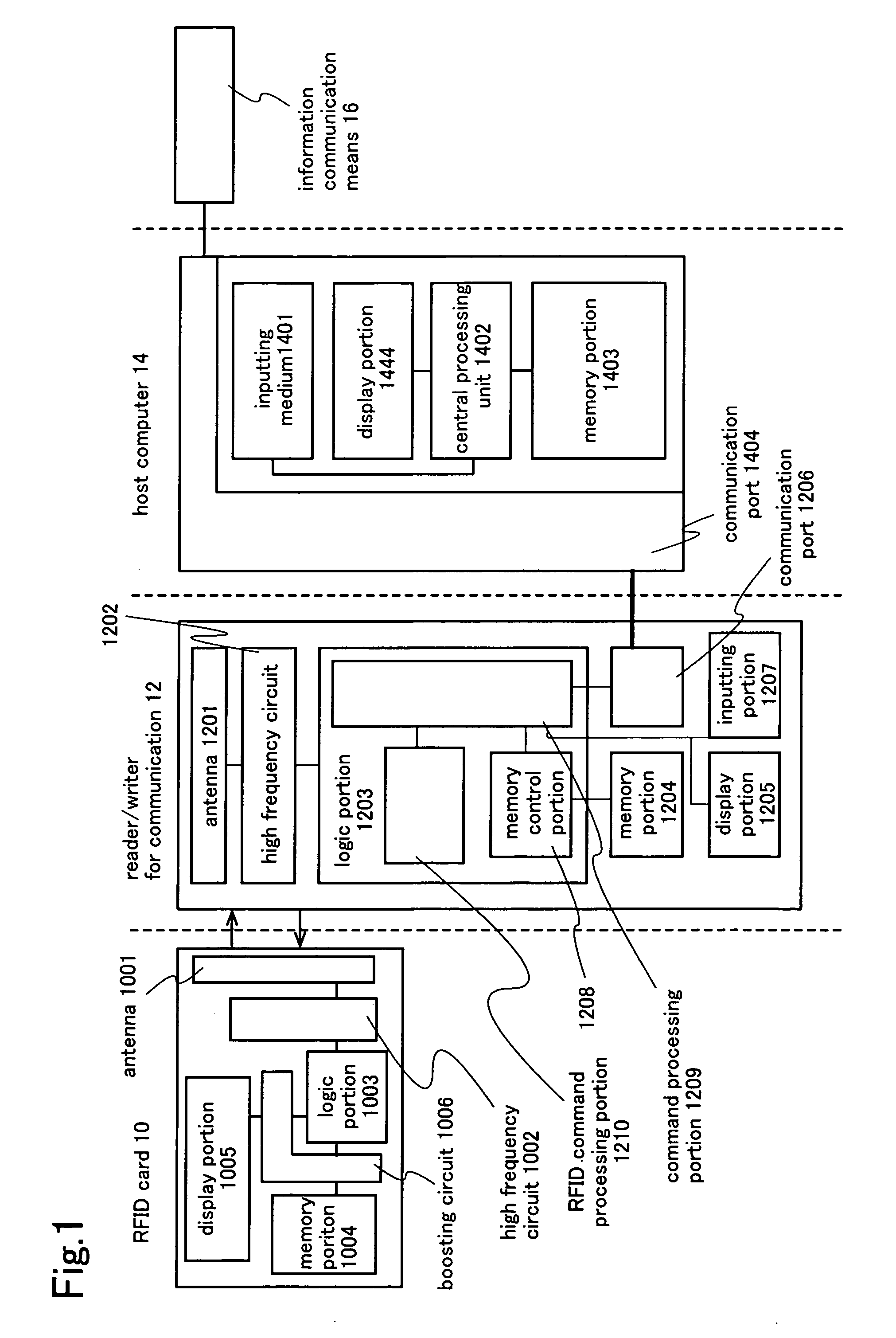

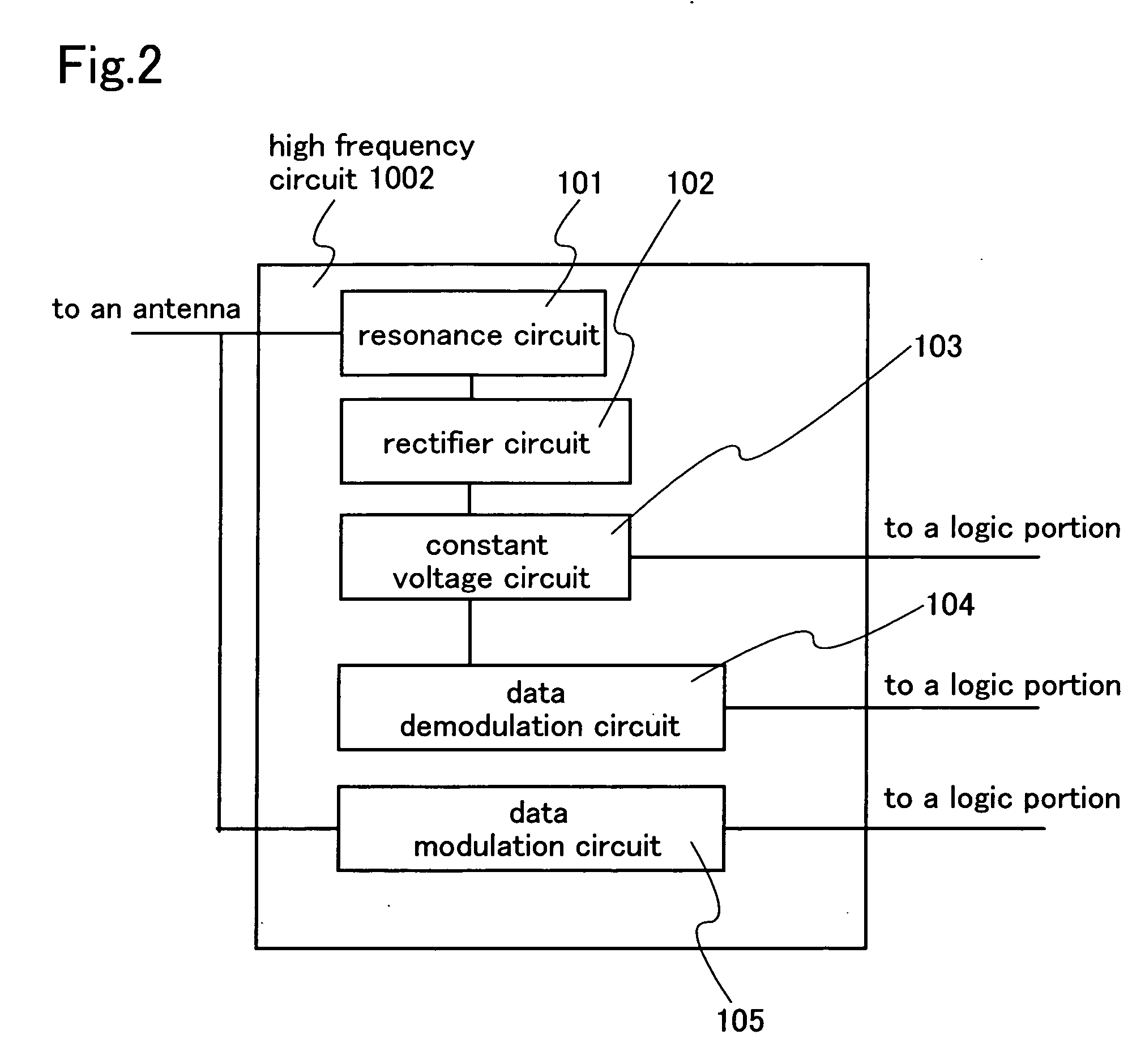

Terminal device and communication system

Status: Inactive | Publication: US20060267769A1 | Tags: Reduce power consumption, Small size, Burglar alarm by hand-portable articles removal, Audible signalling systems, Computer hardware, Communications system

One object of the present invention is to provide a communication system in which a user in a specific area can obtain information immediately without operating a device. The communication system has an RFID card, a reader/writer for communication, a host computer, and an information communication means. The RFID card transmits and receives information wirelessly to and from the reader/writer, so the user can carry the RFID card freely. The memory portion of the RFID card has a rewritable memory portion and a non-writable memory portion. The power required to operate the RFID card is supplied wirelessly, which helps reduce the card's size and weight. Because the RFID card has a display portion, information transmitted wirelessly can be shown on it and the user can recognize the information immediately.

Owner:SEMICON ENERGY LAB CO LTD

Systems and methods for a learner interaction process

Status: Inactive | Publication: US20120329027A1 | Tags: Easy to learn, Improve learning efficiency, Data processing applications, Electrical appliances, Process systems, Computer science

A system and method for a learner interaction process, comprising: receiving information about one or more learners; receiving information about the educational material the learners would like to learn; presenting more than one question about the educational material before presenting the material to the learners; receiving the learners' answers to those questions; presenting the educational material to the learners; identifying the amount of time the learners spent reviewing the material; after the material has been presented, presenting more than one question about the reviewed material and receiving the answers; and determining, based on the learners' answers, whether the learners learned the material presented.

Owner:LEWOLT BRUCE
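The pre-question, review, post-question flow can be sketched as a scoring function. The abstract does not say how "learned" is decided, so the rule below (the post-test score must reach a pass mark and exceed the pre-test score) is a hypothetical assumption, as are the type and function names; review time is recorded but not used by this rule.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LearnerSession:
    learner: str
    pre_answers: List[str]    # answers given before the material is presented
    post_answers: List[str]   # answers given after reviewing the material
    review_seconds: float     # time spent reviewing (recorded, not used by this rule)


def score(answers: List[str], key: List[str]) -> float:
    """Fraction of questions answered correctly."""
    return sum(a == k for a, k in zip(answers, key)) / len(key)


def learned(session: LearnerSession, pre_key: List[str], post_key: List[str],
            pass_mark: float = 0.7) -> bool:
    """Hypothetical rule: post-test score must reach pass_mark and beat the pre-test score."""
    pre = score(session.pre_answers, pre_key)
    post = score(session.post_answers, post_key)
    return post >= pass_mark and post > pre
```

Comparing against the pre-test score separates learners who already knew the material from those who learned it during the session.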

Image reading device and image reading method

Status: Inactive | Publication: US20050270590A1 | Tags: Shorten the overall cycle, Improve reading speed, Television system details, Solid-state devices, Engineering, Capacitor

The image reading device of the present invention includes (i) a sensor substrate that functions as a photoelectric conversion element having a photodetecting TFT and a pixel capacitor, and (ii) a driving IC that applies a voltage to the gate electrode of the photodetecting TFT to switch it ON or OFF. In an arbitrary period, the driving IC applies to the gate electrode a voltage whose polarity is opposite to the average polarity of the voltage that keeps the photodetecting TFT in the OFF state. This provides an image reading device that can suppress the short-term variation observed in the photodetecting TFT's characteristics (resistance value).

Owner:SHARP KK

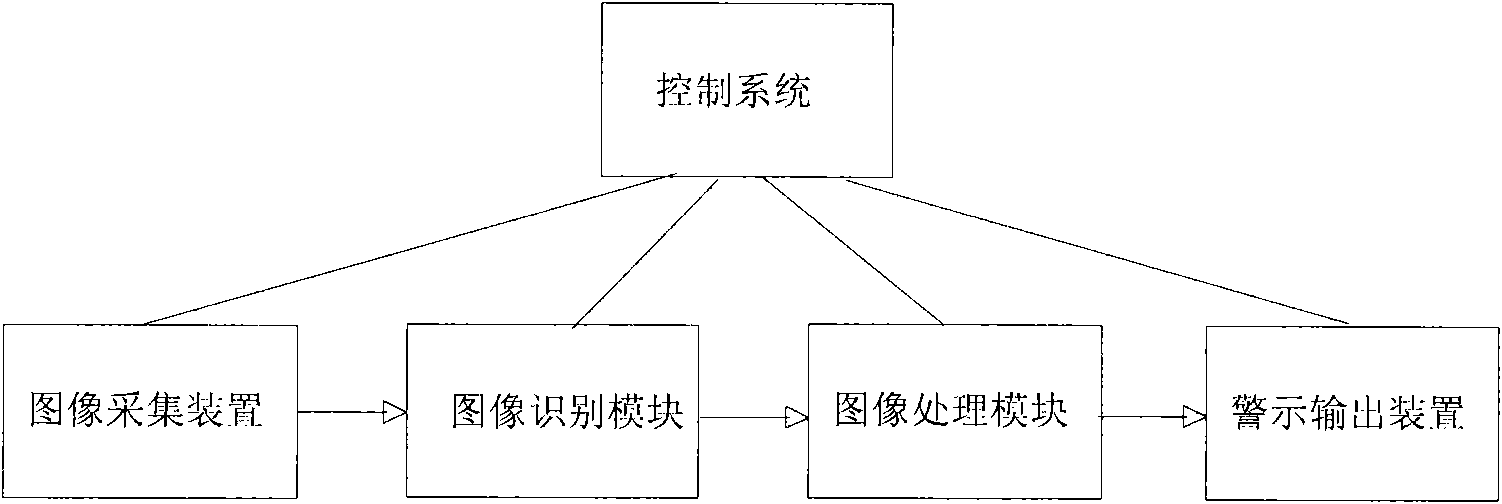

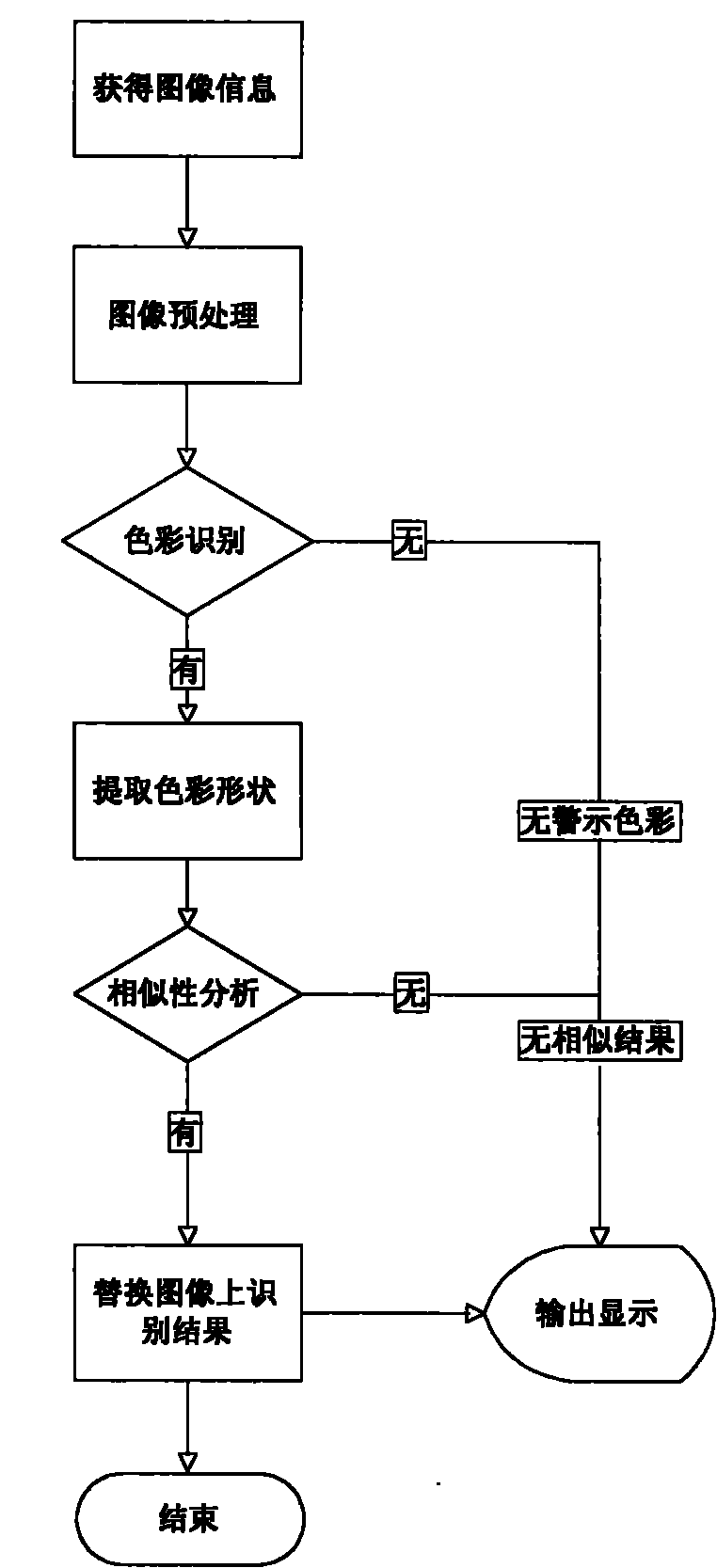

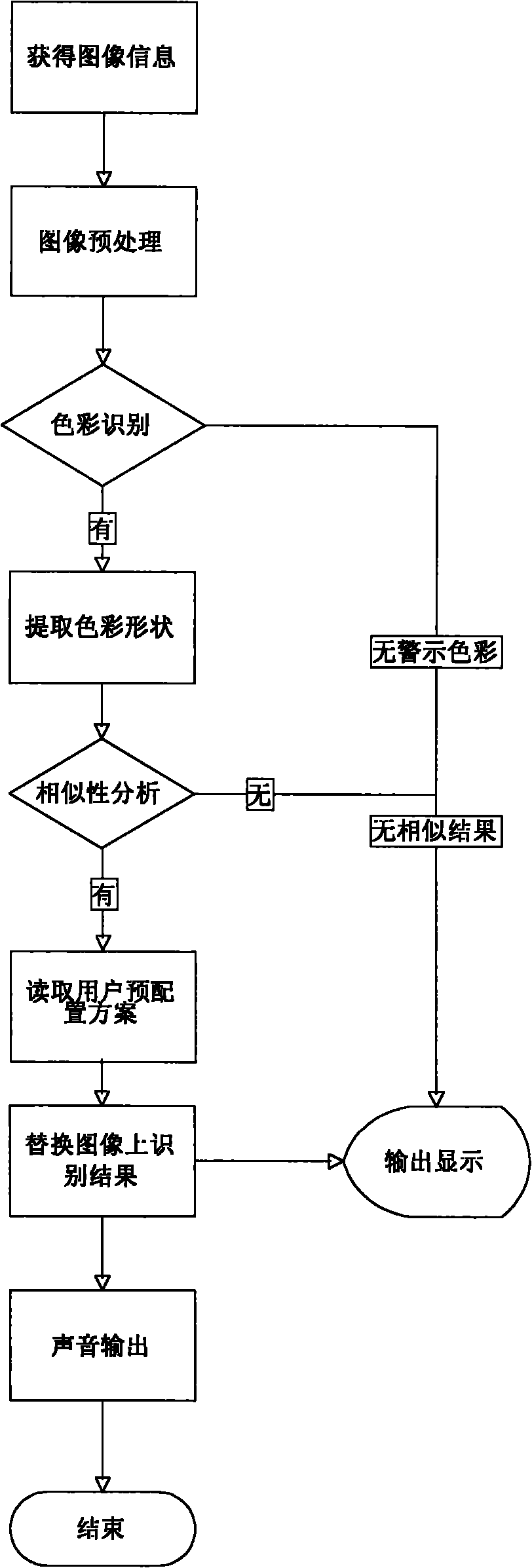

Auxiliary driving system for achromate or tritanope based on image identification technology

Status: Inactive | Publication: CN101783964A | Tags: Improve reading speed, Low cost, Color signal processing circuits, Character and pattern recognition, Traffic signal, Imaging processing

The invention claims an auxiliary driving system for achromate or tritanope drivers (people with achromatopsia or tritanopia) based on image identification technology. The system comprises a control system, an image acquisition device, an image identification module, an image processing module and a warning output device, and works in the following steps: step 1, image acquisition: acquiring an image of the scene in front of the vehicle and transmitting it to the image identification module; step 2, image identification: analyzing the image, identifying any traffic light signals and/or warning signs from the colors, shapes and similarities of different regions, and marking the identified signals and signs; step 3, image processing: processing the marked signs in the image and replacing their colors with colors that an achromate or tritanope can distinguish; step 4, warning: presenting the warning to the color-blind or color-weak driver through an image display device or a voice output device. With this invention, traffic signal lights and warning signs are displayed effectively to color-blind or color-weak drivers.

Owner:Shanghai Leyi Information Technology Co., Ltd. (上海乐毅信息科技有限公司)

© 2025 PatSnap. All rights reserved.