Panoramic video stitching method based on multi-depth image transformation matrix

The method relates to transformation matrices and panoramic video technology and is applied in the field of video stitching. It addresses the heavy computational load of existing approaches, the artifacts they produce, and their interference with seam-line adjustment, and it achieves fast running speed with a small amount of computation.

Detailed Description of the Embodiments

[0036] Preferred embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings.

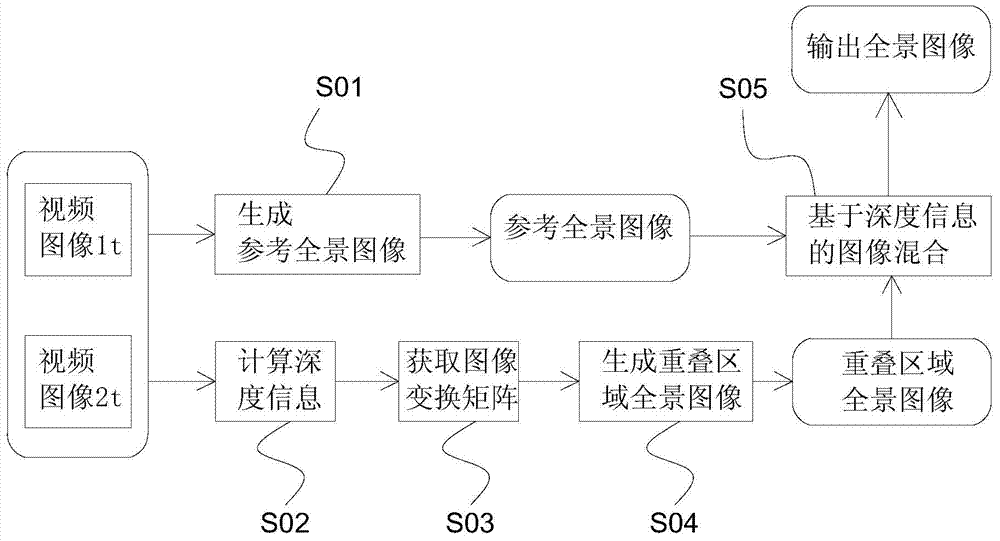

[0037] Referring to Figures 1 to 3, this embodiment describes the panoramic video stitching method based on the multi-depth image transformation matrix of the present invention in detail, taking as an example two videos that are captured by two cameras, contain an overlapping area, and are synthesized into one panoramic video.
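The page does not show how the transformation matrix is applied. In homography-based stitching generally, a 3×3 matrix H maps a pixel of the second camera into the first camera's frame through homogeneous coordinates, and warping the second image's corners gives the size of the panorama canvas. The minimal NumPy sketch below assumes H maps image 2 into image 1's frame; the function names `apply_homography` and `panorama_bounds` are hypothetical.

```python
import numpy as np


def apply_homography(H, points):
    """Map N x 2 pixel coordinates through a 3x3 homography H (hypothetical helper).

    Points are lifted to homogeneous coordinates (x, y, 1), multiplied by H,
    and divided by the third component to return to pixel coordinates.
    """
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])  # N x 3
    mapped = homog @ H.T                                   # N x 3
    return mapped[:, :2] / mapped[:, 2:3]


def panorama_bounds(H, width2, height2, width1, height1):
    """Return (xmin, ymin, xmax, ymax) of a canvas that holds image 1 in place
    and image 2 warped into image 1's frame by H (assumed convention)."""
    corners2 = np.array(
        [[0, 0], [width2, 0], [width2, height2], [0, height2]], dtype=float
    )
    warped = apply_homography(H, corners2)
    # Include image 1's own extent, which sits at the origin of the canvas.
    xs = np.concatenate([warped[:, 0], [0, width1]])
    ys = np.concatenate([warped[:, 1], [0, height1]])
    return xs.min(), ys.min(), xs.max(), ys.max()


if __name__ == "__main__":
    # Pure horizontal shift of 600 px between two 800x600 frames.
    H = np.array([[1, 0, 600], [0, 1, 0], [0, 0, 1]], dtype=float)
    print(apply_homography(H, [[10, 20]]))
    print(panorama_bounds(H, 800, 600, 800, 600))
```

With a pure horizontal shift of 600 pixels between two 800×600 frames, the canvas is 1400 pixels wide and the two images overlap in a 200-pixel band; a real camera pair would also produce rotation and perspective terms in H.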

[0038] Background calibration process

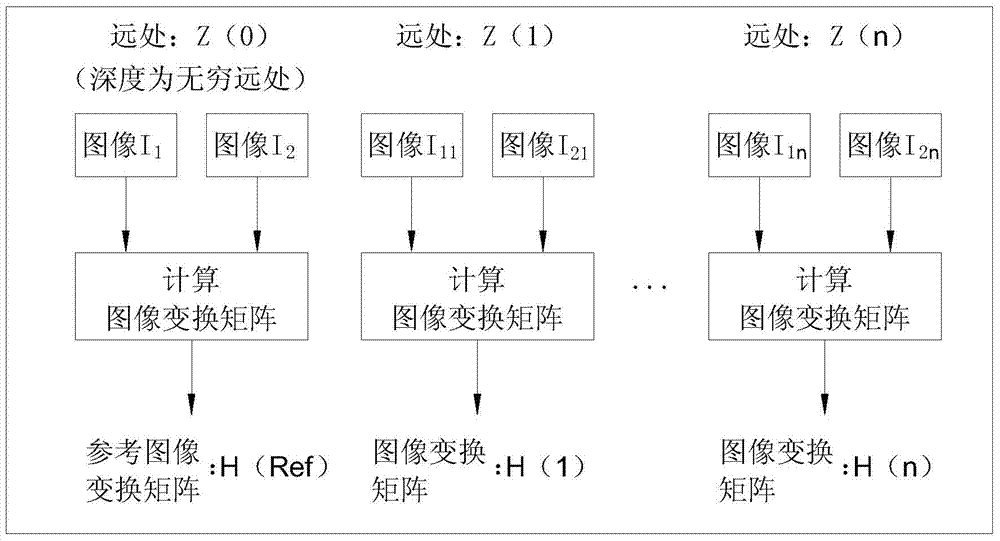

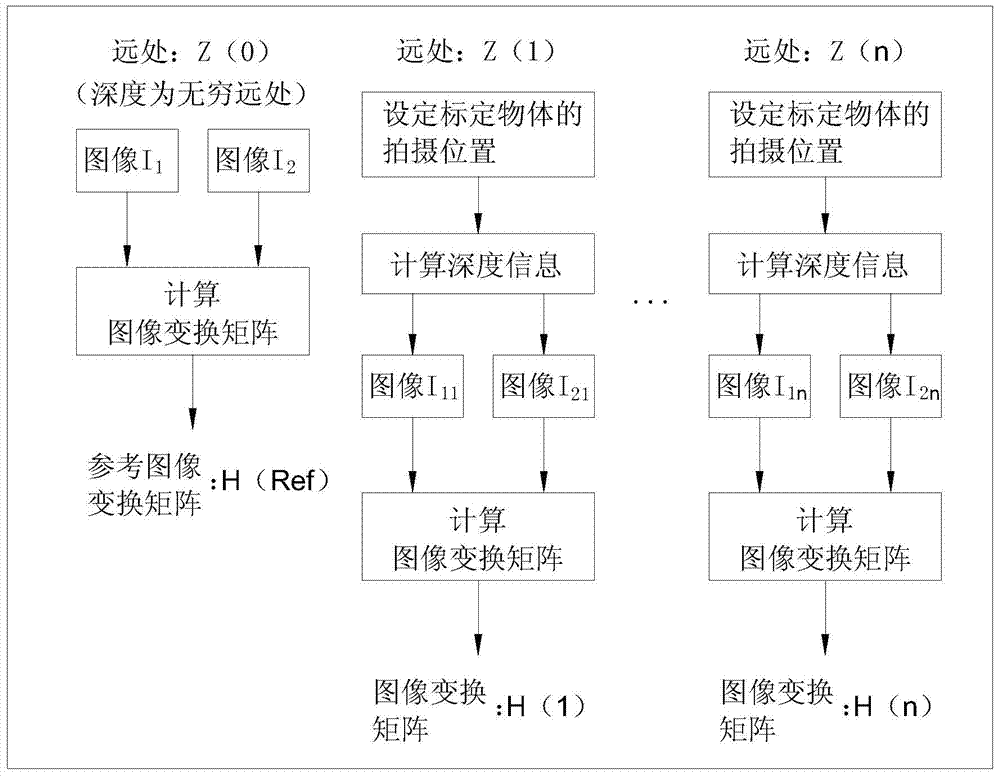

[0039] As shown in Figure 1, the depth level at infinity is marked Z(0). An image $I_1$ with depth at infinity is obtained from one video, and an image $I_2$ with depth at infinity is obtained from the other video; the image transformation matrix H between them is calculated according to formula (1) and taken as the reference image transformation matrix H(Ref). Then, in order of depth from far to near, a depth value in the distance is first marked Z(1), and the two images I obt...
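Formula (1) is not reproduced on this page, so the exact way H is computed is unknown. A common way to obtain a 3×3 image transformation matrix between two overlapping views is the direct linear transform (DLT) over four or more matched point pairs, and the sketch below assumes that technique. The names `estimate_homography_dlt` and `select_depth_matrix`, and the rule of picking the calibrated depth level closest in inverse depth (so that Z(0) at infinity maps to 0), are hypothetical illustrations of how a table of per-depth matrices starting from H(Ref) might be used; they are not the patent's stated procedure.

```python
import math

import numpy as np


def estimate_homography_dlt(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from >= 4 point pairs via plain DLT.

    Assumed technique; formula (1) of the patent is not shown on this page.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear constraints on the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the scale so that H[2, 2] == 1


def select_depth_matrix(depth_table, depth):
    """Hypothetical lookup: return the matrix of the calibrated depth level
    nearest to `depth` in inverse depth. depth_table holds (depth, H) pairs;
    use math.inf for the reference level Z(0). depth must be positive."""
    target = 1.0 / depth  # 1 / math.inf == 0.0
    _, H = min(depth_table, key=lambda entry: abs(1.0 / entry[0] - target))
    return H


if __name__ == "__main__":
    H_true = np.array([[1.2, 0.1, 30.0], [0.05, 0.9, -10.0], [1e-4, 2e-4, 1.0]])
    src = np.array([[0, 0], [100, 0], [100, 100], [0, 100], [50, 30]], dtype=float)
    h = np.hstack([src, np.ones((len(src), 1))]) @ H_true.T
    dst = h[:, :2] / h[:, 2:3]
    print(estimate_homography_dlt(src, dst))
    table = [(math.inf, np.eye(3)), (50.0, 2 * np.eye(3)), (10.0, 3 * np.eye(3))]
    print(select_depth_matrix(table, 12.0))
```

On real footage, matched points contain outliers and pixel coordinates are poorly scaled for this system, so a practical version would normalize coordinates and reject mismatches; OpenCV's `cv2.findHomography` with the `cv2.RANSAC` method covers the outlier step.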
