Face detection apparatus and method

A face detection technology, applied in instruments, character and pattern recognition, computer components, etc. It addresses problems such as reduced accuracy of the face model and erroneous convergence of the model's eye positions, with the effects of shortening processing time and suppressing misfitting.
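The summary names two failure modes, loss of face-model accuracy and the model's eye positions converging to the wrong place, but does not say how a misfit is detected. As a minimal sketch under stated assumptions (this is not the patent's method, which this excerpt does not describe), a misfit can be flagged by comparing the fitted model's eye positions against eye positions detected independently in the image. The names `Point` and `is_eye_misfit`, and the default tolerance of 0.25 times the inter-eye distance, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Point:
    """Hypothetical 2-D image coordinate in pixels."""
    x: float
    y: float


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two image points."""
    return math.hypot(a.x - b.x, a.y - b.y)


def is_eye_misfit(model_left: Point, model_right: Point,
                  detected_left: Point, detected_right: Point,
                  threshold_ratio: float = 0.25) -> bool:
    """Return True when either model eye lies too far from its detected eye.

    Assumption: the tolerance is a fraction of the detected inter-eye
    distance, so the check does not depend on the face's size in the frame.
    """
    inter_eye = distance(detected_left, detected_right)
    if inter_eye == 0:
        raise ValueError("detected eye positions must be distinct")
    tolerance = threshold_ratio * inter_eye
    return (distance(model_left, detected_left) > tolerance
            or distance(model_right, detected_right) > tolerance)


if __name__ == "__main__":
    eyes_l, eyes_r = Point(100, 120), Point(160, 120)
    # Model eyes close to the detected eyes: good fit.
    print(is_eye_misfit(Point(102, 121), Point(158, 119), eyes_l, eyes_r))  # False
    # Model eyes converged onto the eyebrows 25 px above: misfit.
    print(is_eye_misfit(Point(100, 95), Point(160, 95), eyes_l, eyes_r))  # True
```

With detected eyes 60 pixels apart the tolerance is 15 pixels, so model eyes that settled 25 pixels too high are flagged while a 2 to 3 pixel deviation is accepted.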

Embodiment Construction

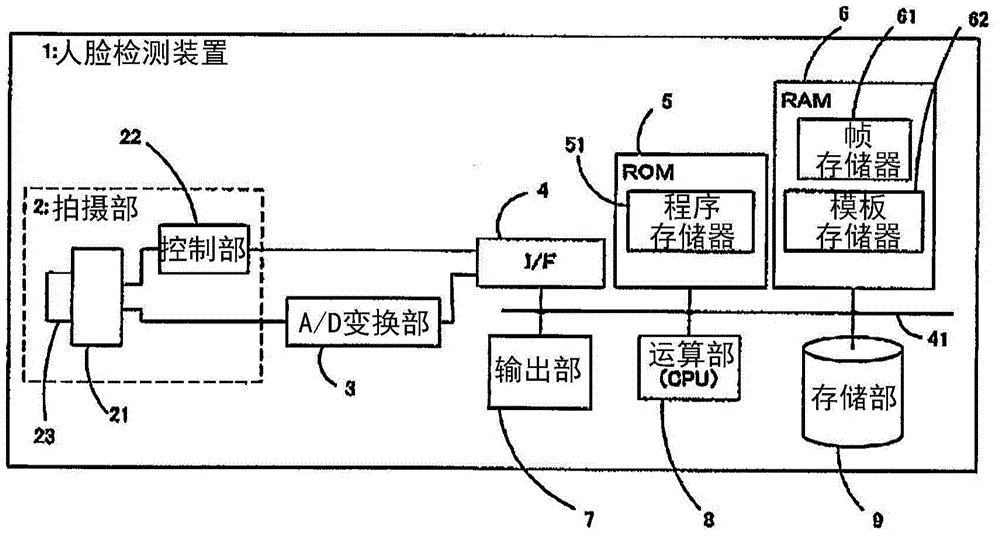

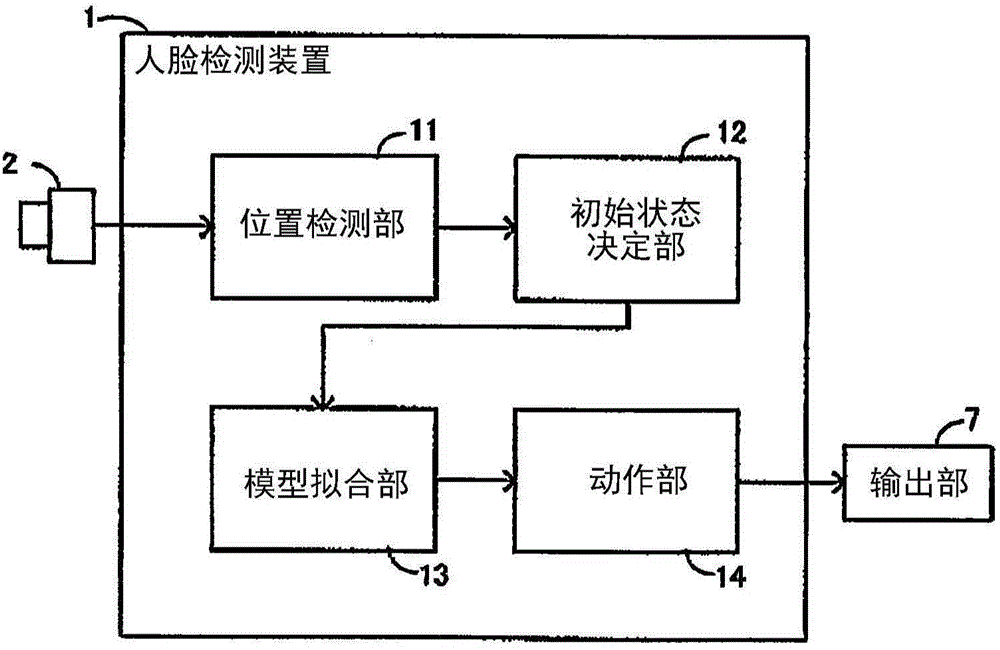

[0040] Hereinafter, embodiments of the present invention will be described with reference to the drawings, but the present invention is not limited to the embodiments. In addition, in the drawings described below, components having the same functions are denoted by the same reference numerals, and overlapping descriptions thereof may be omitted.

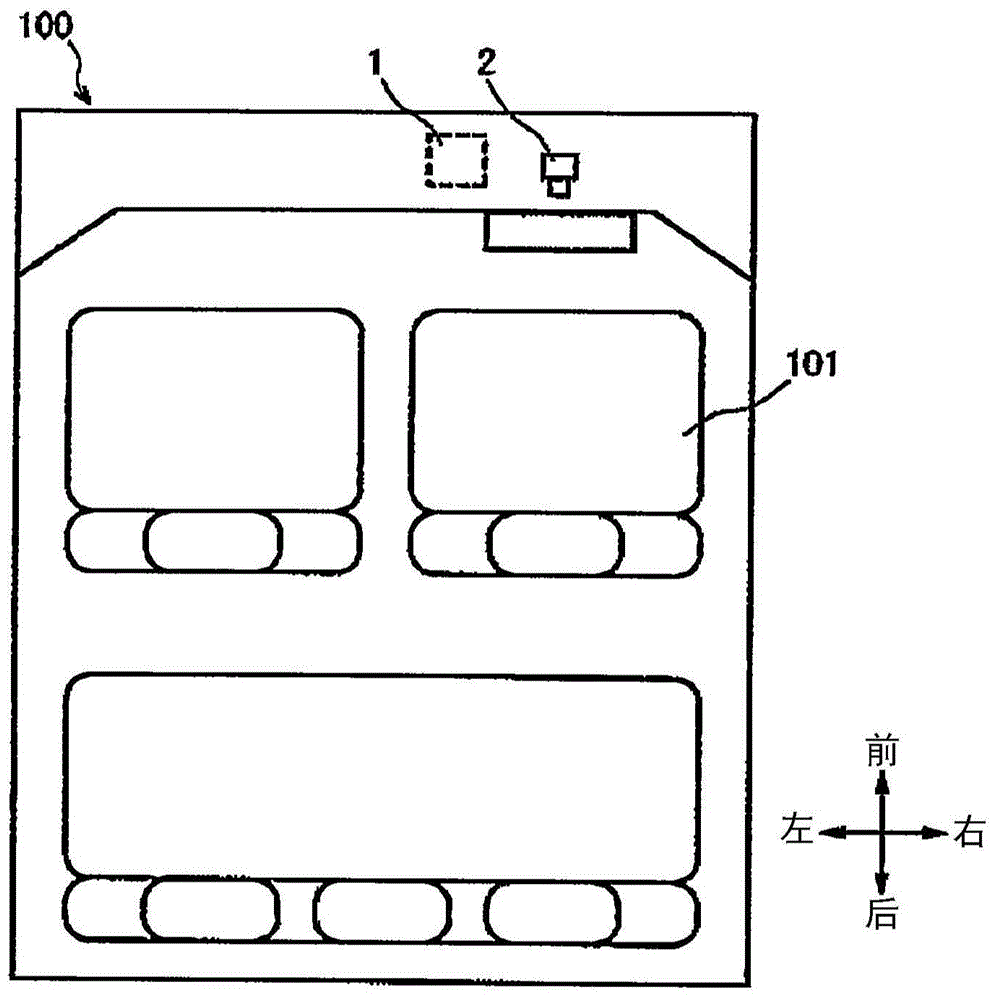

[0041] Figure 1 is a schematic diagram showing the cabin of a vehicle 100 provided with the face detection device 1 according to the present embodiment. The vehicle 100 includes the face detection device 1, which includes an imaging unit 2. The face detection device 1 may be installed at any location in the vehicle 100. The face detection device 1 may be a stand-alone device, or may be incorporated into another system in the vehicle 100 (for example, a car navigation system).
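Paragraph [0041] describes a composition: the face detection device 1 contains the imaging unit 2 and may run either on its own or inside a host system such as a car navigation system. The sketch below models only that structure. The class names, the `capture` hook, and the injected `detector` callable are hypothetical, and the actual detection logic is not given in this excerpt.

```python
from dataclasses import dataclass, field
from typing import Callable, List

Frame = bytes  # hypothetical placeholder for one captured image


@dataclass
class ImagingUnit:
    """Stand-in for imaging unit 2; `capture` is a hypothetical hook."""
    capture: Callable[[], Frame]


@dataclass
class FaceDetectionDevice:
    """Stand-in for face detection device 1, which includes imaging unit 2."""
    imaging_unit: ImagingUnit
    # Hypothetical: the detection algorithm is injected because the
    # excerpt does not specify it.
    detector: Callable[[Frame], bool]

    def detect(self) -> bool:
        """Capture one frame and report whether a face was found."""
        return self.detector(self.imaging_unit.capture())


@dataclass
class HostSystem:
    """Hypothetical host (e.g. a car navigation system) embedding devices."""
    name: str
    devices: List[FaceDetectionDevice] = field(default_factory=list)

    def tick(self) -> List[bool]:
        """Run one detection cycle on every embedded device."""
        return [device.detect() for device in self.devices]


if __name__ == "__main__":
    camera = ImagingUnit(capture=lambda: b"frame")
    device = FaceDetectionDevice(camera, detector=lambda f: len(f) > 0)
    print(device.detect())                                   # stand-alone use
    print(HostSystem("car navigation", [device]).tick())     # embedded use
```

Injecting the detector keeps the same device object usable both stand-alone and when embedded in a host, mirroring the two deployment options the paragraph allows.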

[0042] The imaging unit 2 is provided in front of the driver's seat 101 (that is, on the traveling-direction side of the vehicle 100) in the vehicle compartment. The imaging u...
