Voice control method and device

Voice control and voice engine technology, applied in voice analysis, voice recognition, instruments, etc., that addresses problems such as erroneous operations and a degraded user experience in voice control (for example, of music playback applications), improving user experience and convenience.

Examples

Embodiment 1

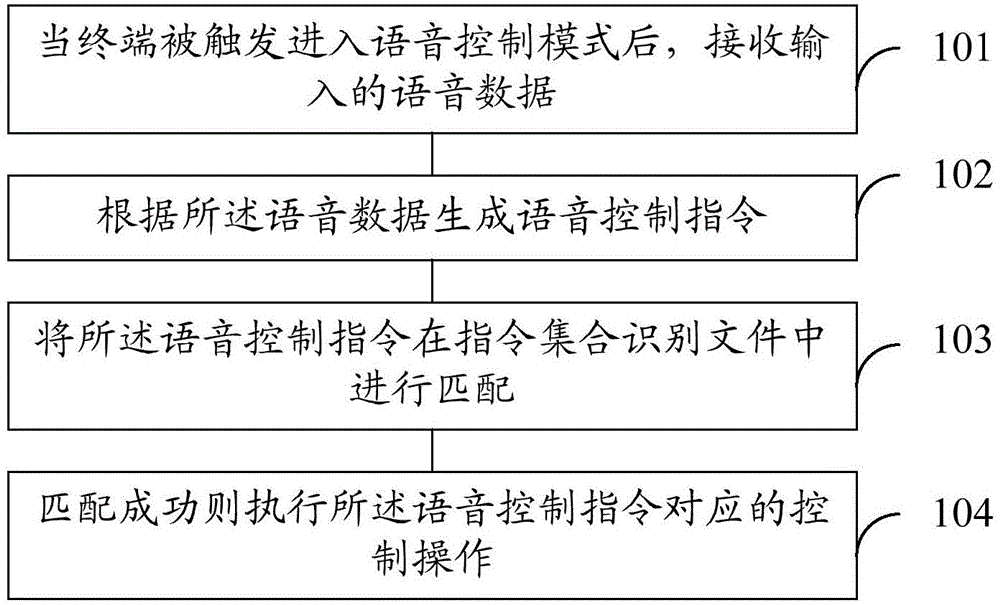

[0063] Refer to Figure 1, which shows a flowchart of the steps of Embodiment 1 of a voice control method of the present application. The method may specifically include the following steps:

[0064] Step 101, when the terminal is triggered to enter the voice control mode, receive input voice data;

[0065] In this embodiment of the application, the user can control the terminal through a remote control device, or control the terminal through voice.

[0066] The terminal is equipped with a recording module. When the terminal enters the voice control mode, the recording module can monitor for voice input in real time and receive any voice data that the user inputs.

[0067] For example, when the user inputs voice data such as "play a song", "what's the weather like today", "channel 15", "death squads", etc., the terminal can receive the above voice data input by the user through the recording module. ...

Embodiment 2

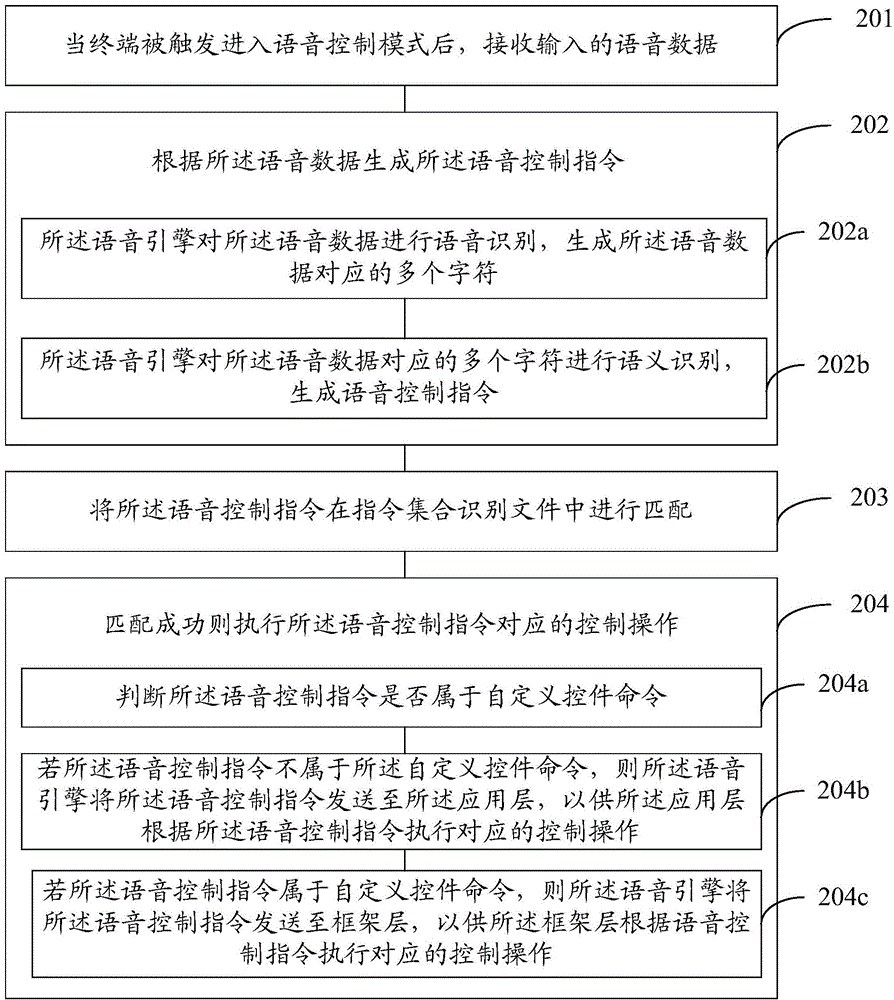

[0092] Refer to Figure 2, which shows a flowchart of the steps of Embodiment 2 of a voice control method of the present application. The method may specifically include the following steps:

[0093] Step 201, when the terminal is triggered to enter the voice control mode, receive input voice data;

[0094] After the terminal enters the voice control mode, the user can input voice data into the terminal to realize voice control of the terminal.

[0095] In a preferred embodiment of the present application, when the terminal receives the voice control mode start command, it can enter the voice control mode according to the voice control mode start command.

[0096] The user can trigger the terminal to enter the voice control mode by pressing a button on the remote control device to issue a voice control mode start command; or send the voice data corresponding to the voice control mode start command to the recording module on the remote control device to trigger the terminal t...
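The mode-entry behavior described in Step 201 can be illustrated with a minimal sketch. It assumes that voice data has already been reduced to text and that the start command arrives as a single identifier; the names `Terminal`, `START_COMMAND`, `handle_command`, and `receive_voice_data` are hypothetical and do not come from the application.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical identifier for the voice control mode start command;
# the application does not name one.
START_COMMAND = "VOICE_CONTROL_MODE_START"


@dataclass
class Terminal:
    """Toy terminal that accepts voice data only in voice control mode."""
    voice_control_mode: bool = False
    received: List[str] = field(default_factory=list)

    def handle_command(self, command: str) -> None:
        # Enter voice control mode when the start command arrives,
        # whether a button press or spoken input produced it.
        if command == START_COMMAND:
            self.voice_control_mode = True

    def receive_voice_data(self, voice_data: str) -> bool:
        # Step 201: input voice data is received only after the
        # terminal has been triggered to enter voice control mode.
        if not self.voice_control_mode:
            return False
        self.received.append(voice_data)
        return True


terminal = Terminal()
ignored = terminal.receive_voice_data("play a song")  # mode not yet active
terminal.handle_command(START_COMMAND)                # e.g. remote button press
accepted = terminal.receive_voice_data("channel 15")  # now received
```

In this sketch, input that arrives before the start command is simply dropped; how a real terminal treats such input is not stated in the excerpt.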

Embodiment 3

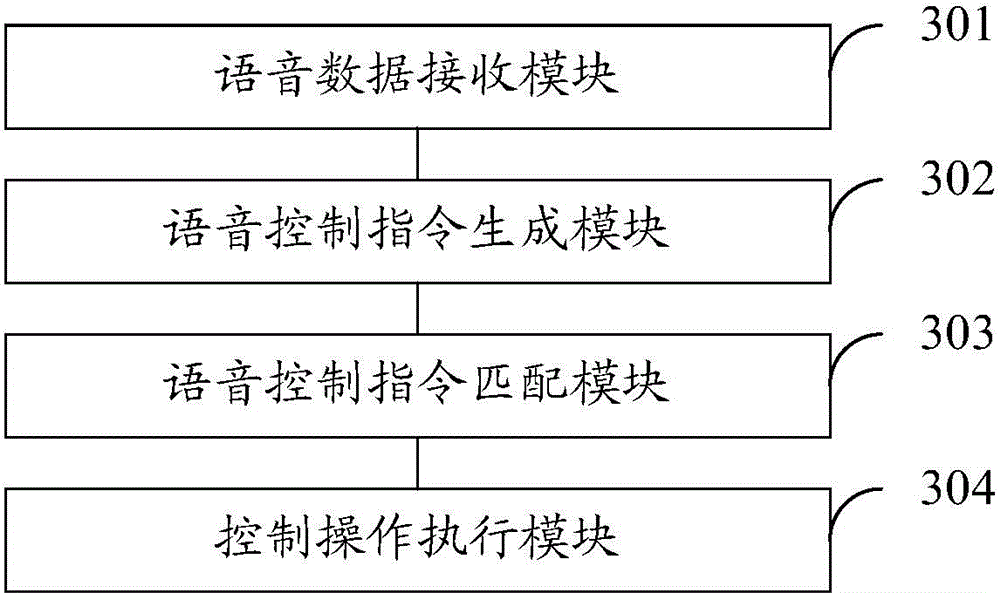

[0163] Refer to Figure 3, which shows a structural block diagram of an embodiment of a voice control device of the present application. The device may specifically include the following modules:

[0164] A voice data receiving module 301, configured to receive input voice data after the terminal is triggered to enter the voice control mode;

[0165] A voice control instruction generating module 302, configured to generate a voice control instruction according to the voice data;

[0166] A voice control instruction matching module 303, configured to match the voice control instruction in the instruction set identification file; the instruction set identification file includes a set of control instructions supported by the current interface;

[0167] A control operation execution module 304, configured to execute the control operation corresponding to the voice control instruction when the matching is successful.
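The flow through modules 301 to 304 can be sketched as follows. This is an illustration under stated assumptions, not the application's implementation: voice data is assumed to be already transcribed text, the instruction set identification file is modeled as an in-memory dictionary, matching is an exact lookup, and all function and variable names are hypothetical.

```python
from typing import Callable, Dict, Optional

# Hypothetical stand-in for the instruction set identification file:
# maps each control instruction supported by the current interface
# to the operation it triggers.
InstructionSet = Dict[str, Callable[[], str]]


def generate_instruction(voice_data: str) -> str:
    """Module 302 (sketch): turn voice data into a voice control instruction.

    Speech recognition is out of scope here; the input is assumed to be
    transcribed text, which is lowercased and whitespace-normalized.
    """
    return " ".join(voice_data.lower().split())


def match_instruction(
    instruction: str, instruction_set: InstructionSet
) -> Optional[Callable[[], str]]:
    """Module 303 (sketch): look the instruction up in the instruction set."""
    return instruction_set.get(instruction)


def handle_voice_data(
    voice_data: str, instruction_set: InstructionSet
) -> Optional[str]:
    """Modules 301 to 304 chained: receive, generate, match, execute."""
    instruction = generate_instruction(voice_data)
    operation = match_instruction(instruction, instruction_set)
    if operation is None:
        return None      # matching failed: no control operation is executed
    return operation()   # Module 304: execute on a successful match


# Hypothetical instruction set for the interface currently displayed.
current_interface: InstructionSet = {
    "play a song": lambda: "playing song",
    "channel 15": lambda: "switched to channel 15",
}

result_ok = handle_voice_data("  Play a  Song ", current_interface)
result_miss = handle_voice_data("what's the weather like today", current_interface)
```

Restricting the lookup to the instructions of the current interface means that an utterance the interface does not support yields no operation rather than a wrong one.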

[0168] The device in this embodiment is configured to ex...
