Method for identifying actions in video based on continuous multi-instance learning

An action recognition technology for video, applied in the field of recognition and detection, that addresses problems such as existing methods being inapplicable to video data.


Embodiment Construction

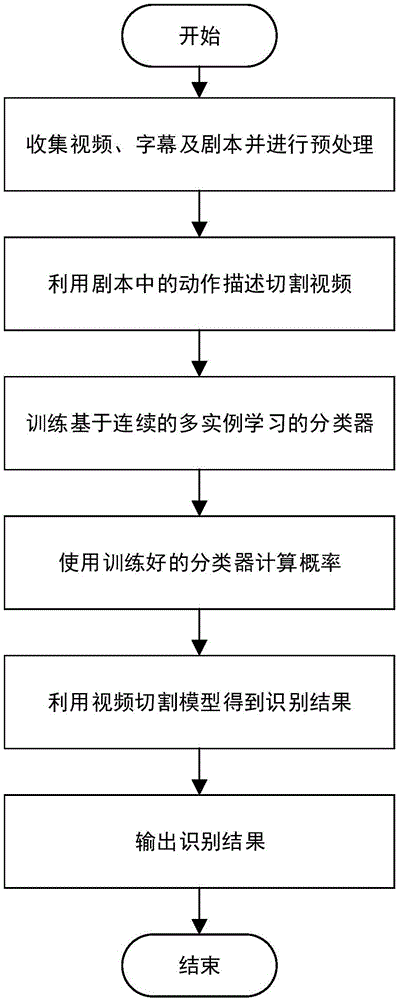

[0034] The present invention is further described below in conjunction with the accompanying drawings.

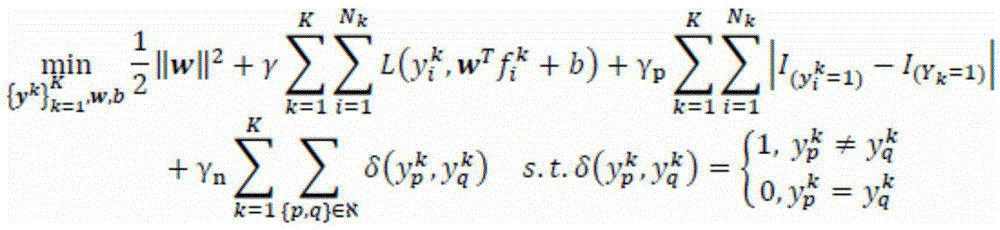

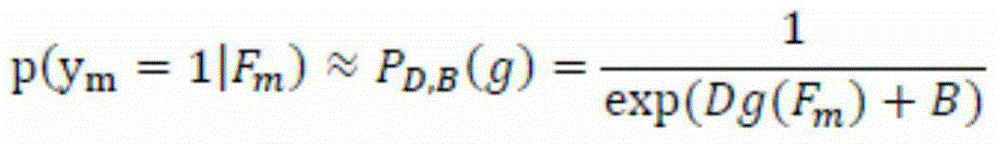

[0035] The invention proposes a method for action recognition in videos based on continuous multi-instance learning. The method first collects movie data from a video website as training data, together with the corresponding subtitles and scripts. It matches the subtitles against the dialogue in the script to synchronize the two, and uses the action descriptions in the script as weak labels for the corresponding video clips. Given these video-level weak labels, each video in the training data is segmented into several video segments. Then, for each label, an action classifier based on continuous multi-instance learning is trained. At test time, the trained action classifier is first used to compute the probability that each frame of the user's input video belongs to the action. Then, the final recognition result of each frame is ...
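The subtitle-to-script synchronization step can be illustrated with a minimal Python sketch. It is not the patent's implementation: the function names (`similarity`, `weak_labels`), the tuple-based data layout, the 0.8 similarity threshold, and the rule that an action description inherits the time gap between its neighbouring matched dialogue lines are all assumptions made for illustration. String matching uses `difflib.SequenceMatcher` from the Python standard library.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio in [0, 1] between two strings."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def weak_labels(subtitles, script, threshold=0.8):
    """Transfer subtitle timestamps to script action descriptions.

    subtitles: list of (start_sec, end_sec, text), in playback order.
    script:    list of (kind, text) in script order, where kind is
               "dialogue" or "action" (hypothetical layout).
    Returns a list of (start_sec, end_sec, action_text) weak labels.
    """
    # Step 1: give each script dialogue line the time span of its
    # best-matching subtitle. Subtitles are consumed in order so the
    # alignment stays monotonic (an assumption of this sketch).
    times = [None] * len(script)
    next_sub = 0
    for i, (kind, text) in enumerate(script):
        if kind != "dialogue":
            continue
        best_j, best_score = None, 0.0
        for j in range(next_sub, len(subtitles)):
            score = similarity(text, subtitles[j][2])
            if score > best_score:
                best_j, best_score = j, score
        if best_j is not None and best_score >= threshold:
            times[i] = (subtitles[best_j][0], subtitles[best_j][1])
            next_sub = best_j + 1

    # Step 2: an action description is assumed to occur between the
    # nearest timed dialogue line before it and the nearest one after it.
    labels = []
    for i, (kind, text) in enumerate(script):
        if kind != "action":
            continue
        prev_end = next(
            (times[k][1] for k in range(i - 1, -1, -1) if times[k]), None
        )
        next_start = next(
            (times[k][0] for k in range(i + 1, len(script)) if times[k]), None
        )
        if prev_end is not None and next_start is not None and next_start >= prev_end:
            labels.append((prev_end, next_start, text))
    return labels


if __name__ == "__main__":
    # Toy data, invented for illustration only.
    subs = [
        (10.0, 12.0, "Where are you going?"),
        (20.0, 22.0, "I'm leaving tonight."),
    ]
    scr = [
        ("dialogue", "Where are you going?"),
        ("action", "He opens the door."),
        ("dialogue", "I am leaving tonight."),
    ]
    for start, end, action in weak_labels(subs, scr):
        print(f"{start:.1f}-{end:.1f}s: {action}")
```

The resulting labels are weak in the sense the paragraph describes: each interval only bounds where the action may occur, which is why a multi-instance formulation over the segments inside the clip is a natural fit for the training step.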
