A real-time image fusion method based on visible light and near-infrared dual-band camera

A real-time image fusion method applied in image enhancement, image data processing, instruments, and related fields. It addresses problems such as insufficient real-time processing and achieves good real-time performance with a simple hardware implementation.

Detailed Description of Embodiments

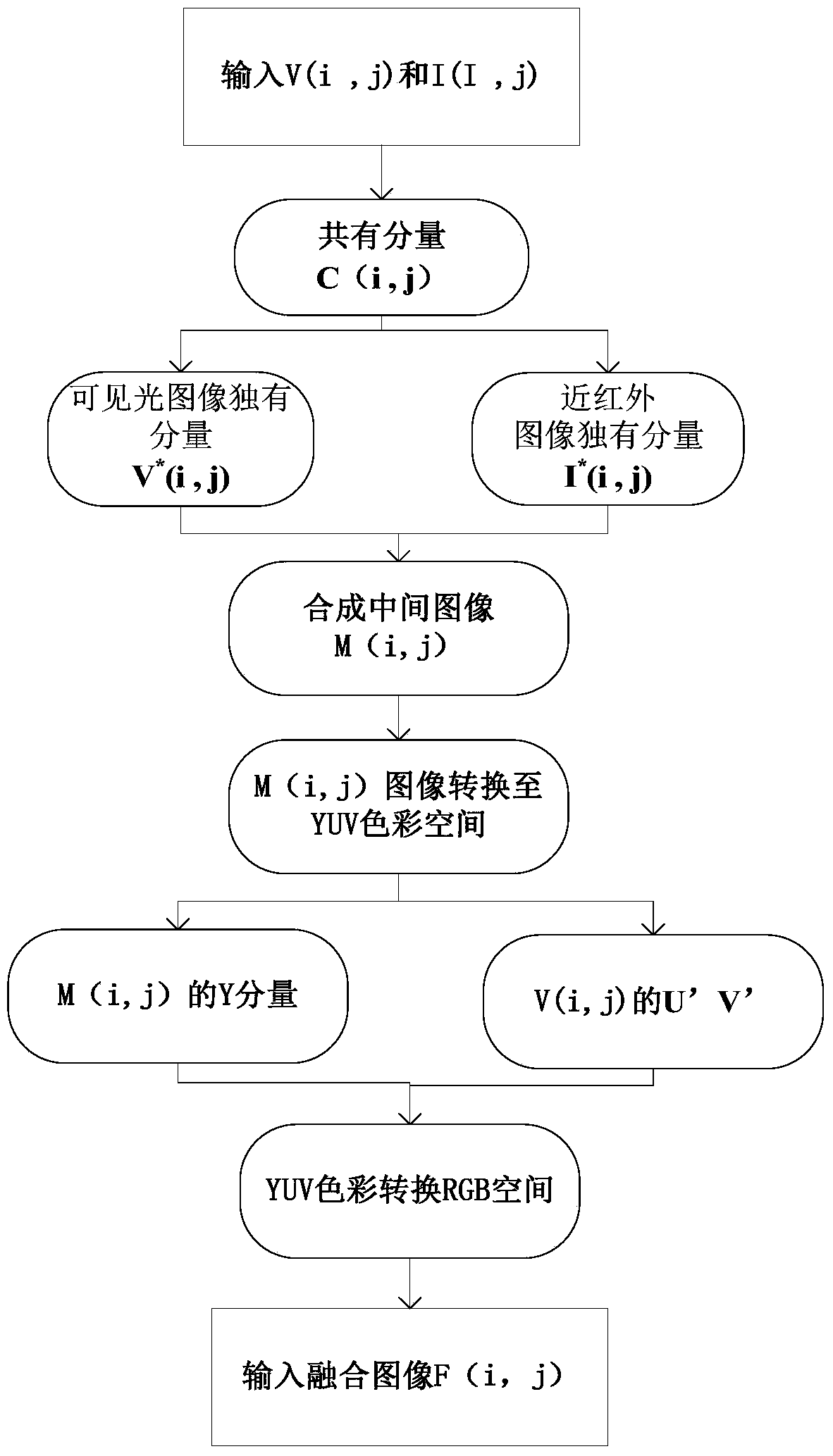

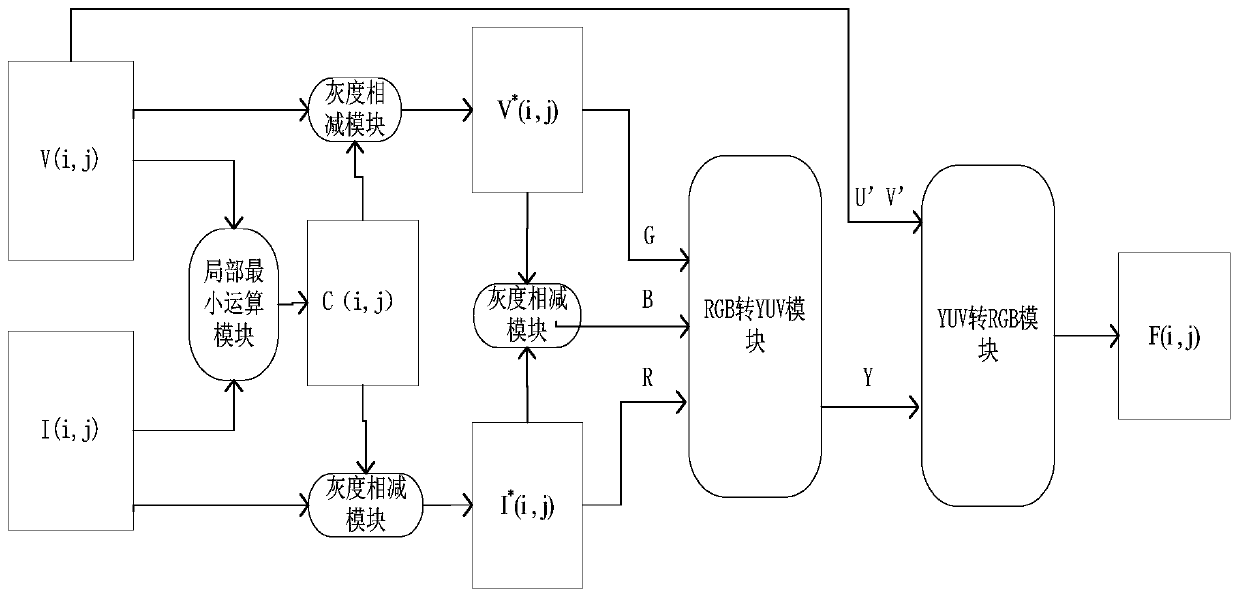

[0029] The processing flow of the real-time image fusion method based on a visible light and near-infrared dual-band camera, as described in this specification, is shown in Figure 1.

[0030] 1. Compute the common component of the visible light image and the near-infrared image with a local minimum operator. Let V(i,j) denote the visible light image component and I(i,j) the near-infrared image component. The common component C(i,j) of the two images is then given by formula (1):

[0031] $$C(i,j) = V(i,j) \cap I(i,j) = \min\{V(i,j),\, I(i,j)\} \tag{1}$$
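A minimal Python sketch of formula (1), assuming the two inputs are already registered (pixel-aligned), have the same size, and are single-channel intensity images stored as lists of rows. The names `common_component`, `vis`, and `nir` are hypothetical and not taken from the patent; the "local minimum operator" is read here as the pixel-wise minimum that formula (1) writes out.

```python
from typing import List

# Hypothetical layout: a list of rows of integer intensities (e.g. 0..255).
Image = List[List[int]]

def common_component(vis: Image, nir: Image) -> Image:
    """Formula (1): C(i,j) = min{V(i,j), I(i,j)}, evaluated pixel by pixel."""
    # Assumption: the visible and NIR frames are registered and equally sized.
    if len(vis) != len(nir) or any(len(rv) != len(rn) for rv, rn in zip(vis, nir)):
        raise ValueError("visible and NIR images must have the same size")
    return [[min(pv, pn) for pv, pn in zip(rv, rn)] for rv, rn in zip(vis, nir)]

V = [[120, 30], [200, 0]]
I = [[100, 90], [200, 50]]
print(common_component(V, I))  # [[100, 30], [200, 0]]
```

Because the minimum is taken independently at each pixel, the cost is a single comparison per pixel with no neighborhood access, which is consistent with the real-time, simple-hardware goal stated for the method.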

[0032] 2. Subtract the common component of the visible light and near-infrared images from the visible light image component to obtain the unique component V*(i,j) of the visible light image, given by formula (2):

[0033] $$V^{*}(i,j) = V(i,j) - C(i,j) \tag{2}$$
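A minimal Python sketch of formula (2), assuming registered, equal-size, single-channel inputs stored as lists of rows; the function name `unique_visible` is hypothetical. It recomputes C(i,j) from formula (1) inline so that the example stands on its own.

```python
from typing import List

# Hypothetical layout: a list of rows of integer intensities (e.g. 0..255).
Image = List[List[int]]

def unique_visible(vis: Image, nir: Image) -> Image:
    """Formula (2): V*(i,j) = V(i,j) - C(i,j), with C(i,j) = min{V(i,j), I(i,j)}."""
    return [
        [pv - min(pv, pn) for pv, pn in zip(row_v, row_n)]
        for row_v, row_n in zip(vis, nir)
    ]

V = [[120, 30], [200, 0]]
I = [[100, 90], [200, 50]]
print(unique_visible(V, I))  # [[20, 0], [0, 0]]
```

Since C(i,j) never exceeds V(i,j), V*(i,j) is never negative and equals max(V(i,j) - I(i,j), 0). An implementation that stores pixels as unsigned 8-bit values therefore cannot underflow at this step.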

[0034] 3. Subtract the common component of the visible light and near-infrared images from the near-infrared image component to obtain the unique component I*(i,j) of the near-infrared im...
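Step 3 is cut off in this excerpt, but its text describes the near-infrared counterpart of formula (2), which is read here as I*(i,j) = I(i,j) - C(i,j). The sketch below uses a hypothetical function `decompose` and assumes registered, equal-size, single-channel inputs stored as lists of rows. It computes all three components only to show how they relate; it does not reproduce the fusion step that follows in the full patent.

```python
from typing import List, Tuple

# Hypothetical layout: a list of rows of integer intensities (e.g. 0..255).
Image = List[List[int]]

def decompose(vis: Image, nir: Image) -> Tuple[Image, Image, Image]:
    """Split a registered visible/NIR pair into (C, V*, I*).

    C  = min(V, I)  (formula (1))
    V* = V - C      (formula (2))
    I* = I - C      (step 3, assumed NIR counterpart of formula (2))
    """
    c = [[min(pv, pn) for pv, pn in zip(rv, rn)] for rv, rn in zip(vis, nir)]
    v_star = [[pv - pc for pv, pc in zip(rv, rc)] for rv, rc in zip(vis, c)]
    i_star = [[pn - pc for pn, pc in zip(rn, rc)] for rn, rc in zip(nir, c)]
    return c, v_star, i_star

V = [[120, 30], [200, 0]]
I = [[100, 90], [200, 50]]
C, V_star, I_star = decompose(V, I)
print(I_star)  # [[0, 60], [0, 50]]
```

Two properties follow directly from the definitions: V = C + V* and I = C + I* hold at every pixel, and at each pixel at least one of V*(i,j) and I*(i,j) is zero, because C(i,j) equals whichever input is smaller.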
