Stereoscopic vision matching method based on weak edge and texture classification

A stereo vision matching technology based on texture classification, applicable to image analysis, image data processing, instruments, and related fields. It addresses the large computational load, low matching accuracy, and difficulty of meeting real-time requirements found in existing algorithms, thereby reducing the algorithm's computational cost, improving its real-time performance, and improving matching accuracy.


Embodiment Construction

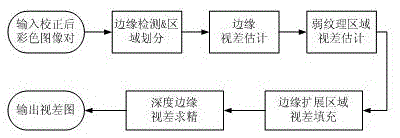

[0113] Referring to the accompanying drawings, the flow of the CETR algorithm proposed by the present invention is shown in Figure 1.

[0114] The basic idea is to divide the image into an edge expansion area and a weak texture area according to its edge distribution, and to estimate disparity in each type of area with a method suited to that area's characteristics. The disparity estimates of each area are then used to correct or fill in those of the other, and after an overall disparity refinement a complete dense disparity map is obtained.
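The partition into an edge expansion area and a weak texture area can be illustrated with a minimal sketch. It rests on assumptions the source does not state: a grayscale input (the patent works on a color image), a central-difference gradient threshold standing in for the unspecified edge detector, and a square dilation of a hypothetical radius `radius` defining the "expansion" around edges. The function names and the `threshold` parameter are likewise hypothetical.

```python
import numpy as np


def gradient_edges(gray: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean edge map from central-difference gradient magnitude.

    Assumption: a simple stand-in for the patent's (unspecified) edge detector.
    Border rows/columns get zero gradient and are never marked as edges.
    """
    g = gray.astype(np.float64)
    gx = np.zeros_like(g)
    gy = np.zeros_like(g)
    gx[:, 1:-1] = (g[:, 2:] - g[:, :-2]) / 2.0  # horizontal derivative
    gy[1:-1, :] = (g[2:, :] - g[:-2, :]) / 2.0  # vertical derivative
    return np.hypot(gx, gy) > threshold


def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation with a (2*radius+1) x (2*radius+1) square window."""
    h, w = mask.shape
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    out = np.zeros_like(mask, dtype=bool)
    # OR together every shifted copy of the mask within the window.
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def partition_regions(gray: np.ndarray, threshold: float = 10.0, radius: int = 2):
    """Split pixels into an edge expansion area and its complement, the weak texture area."""
    edges = gradient_edges(gray, threshold)
    edge_expansion = dilate(edges, radius)
    weak_texture = ~edge_expansion
    return edges, edge_expansion, weak_texture
```

For a 10x10 image whose right half is bright, the edge pixels fall in columns 4 and 5, the expansion area covers columns 2 to 7, and the remaining columns form the weak texture area. In this sketch the two areas are complementary by construction, so every pixel is handled by exactly one estimation method.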

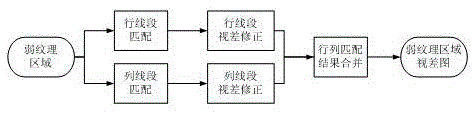

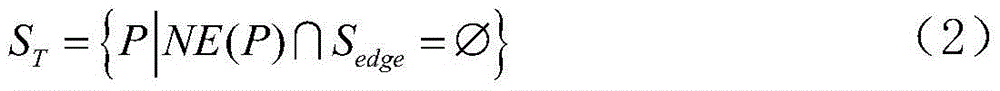

[0115] Algorithm flow: (1) Perform edge detection on the input rectified color image, and divide it into weak texture areas and edge expansion areas according to the detected edges; (2) Perform disparity estimation based on local matching for the edges in the reference image; after matching, unstable edge disparity values are eliminated by a repeatability test and a stability t...
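Step (2) can be illustrated with a minimal sketch of local matching restricted to edge pixels. Assumptions not taken from the source: grayscale rectified images, the left image as the reference, a sum-of-absolute-differences (SAD) cost over a square window with winner-take-all selection, and a standard left-right consistency check substituted for the repeatability and stability tests, whose exact definitions are cut off in this excerpt. All function and parameter names (`sad_disparity`, `edge_disparity`, `max_disp`, `half`, `tol`) are hypothetical.

```python
import numpy as np


def sad_disparity(ref, tgt, y, x, max_disp, half, direction):
    """Winner-take-all SAD disparity for pixel (y, x) of `ref`, searched in `tgt`.

    direction=-1 searches tgt at x - d (left image as reference);
    direction=+1 searches tgt at x + d (right image as reference).
    Returns -1 if the window does not fit inside the image.
    """
    h, w = ref.shape
    if y - half < 0 or y + half >= h or x - half < 0 or x + half >= w:
        return -1
    patch = ref[y - half:y + half + 1, x - half:x + half + 1]
    best_d, best_cost = -1, np.inf
    for d in range(max_disp + 1):
        xc = x + direction * d
        if xc - half < 0 or xc + half >= w:
            break  # candidate window left the image; larger d only goes further out
        cand = tgt[y - half:y + half + 1, xc - half:xc + half + 1]
        cost = np.abs(patch - cand).sum()
        if cost < best_cost:  # strict '<' keeps the smallest d on ties
            best_cost, best_d = cost, d
    return best_d


def edge_disparity(left, right, edge_mask, max_disp=16, half=2, tol=1):
    """Estimate disparity only at edge pixels; -1 marks rejected or unmatched pixels.

    A match is kept only if matching back from the right image lands within
    `tol` of the forward disparity (left-right consistency, used here as a
    stand-in for the patent's repeatability/stability tests).
    """
    left = left.astype(np.float64)
    right = right.astype(np.float64)
    disp = np.full(left.shape, -1, dtype=int)
    for y, x in zip(*np.nonzero(edge_mask)):
        d = sad_disparity(left, right, y, x, max_disp, half, -1)
        if d < 0:
            continue
        d_back = sad_disparity(right, left, y, x - d, max_disp, half, +1)
        if d_back >= 0 and abs(d - d_back) <= tol:
            disp[y, x] = d
    return disp
```

With a random-texture left image and a right image built as `np.roll(left, -3, axis=1)`, every masked interior pixel receives disparity 3, while pixels outside the mask stay at -1. Restricting matching to edge pixels is what keeps this stage cheap; the weak texture area would be handled by a different method in the patent's scheme.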
