Super-resolution reconstruction method based on total variation and a convolutional neural network

A convolutional neural network and super-resolution reconstruction technology, applied in the fields of instruments, graphics and image conversion, and computation. It addresses the problems of high computational complexity and poor reconstruction quality, and achieves a low calculation amount, simplified computational complexity, and suppression of the sawtooth (jagged-edge) effect.

Embodiment Construction

[0044] The present invention will be described in further detail below in conjunction with the accompanying drawings.

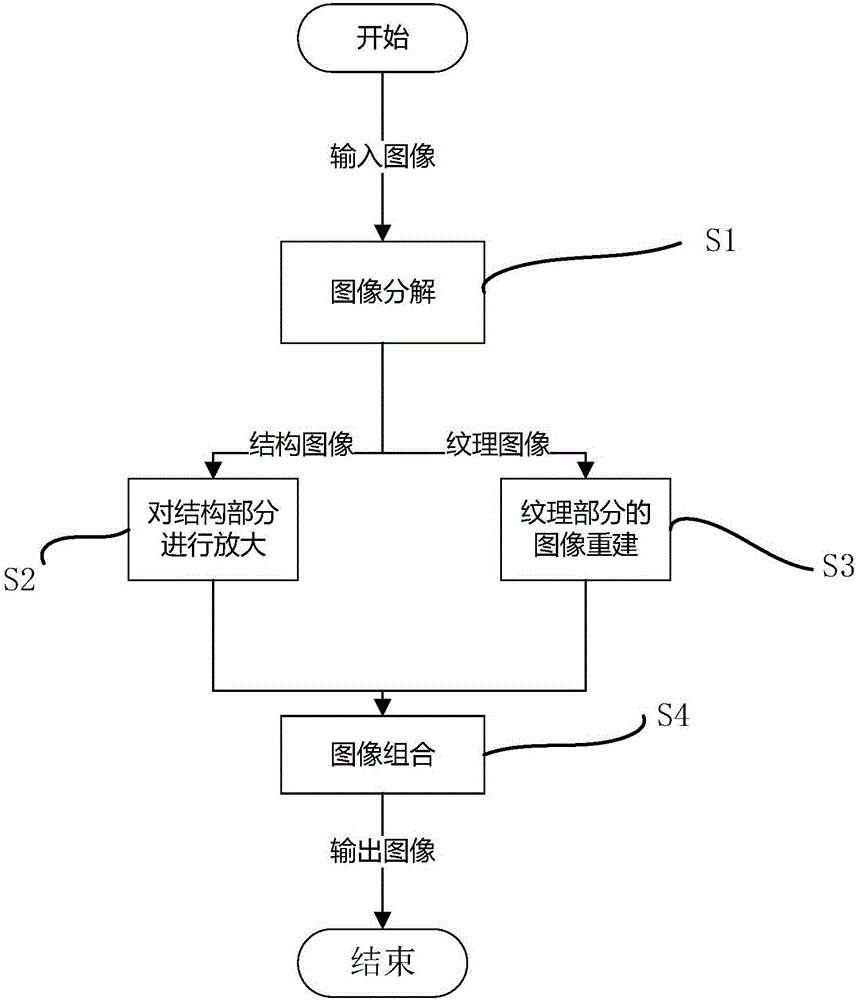

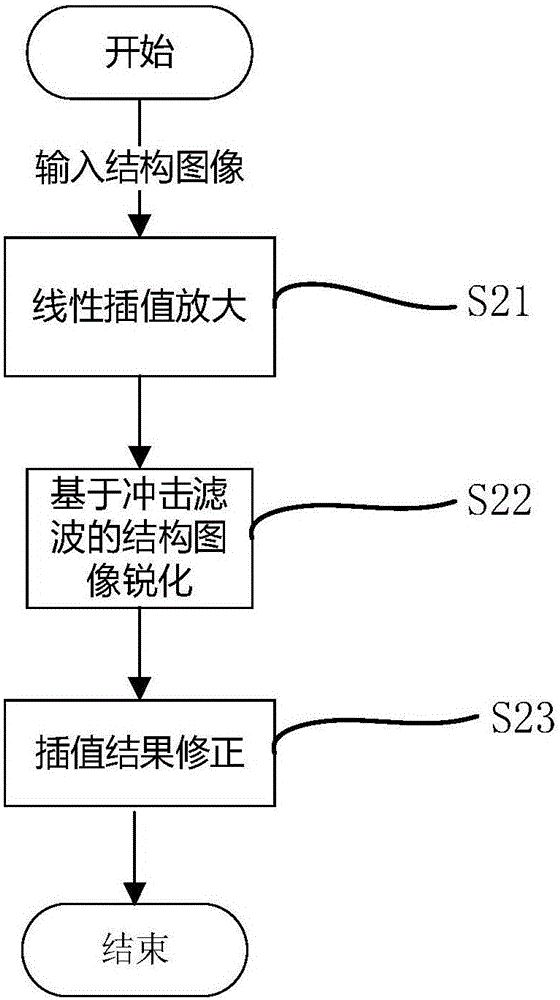

[0045] As shown in Figure 1, a super-resolution reconstruction method based on total variation and a convolutional neural network includes the following steps:

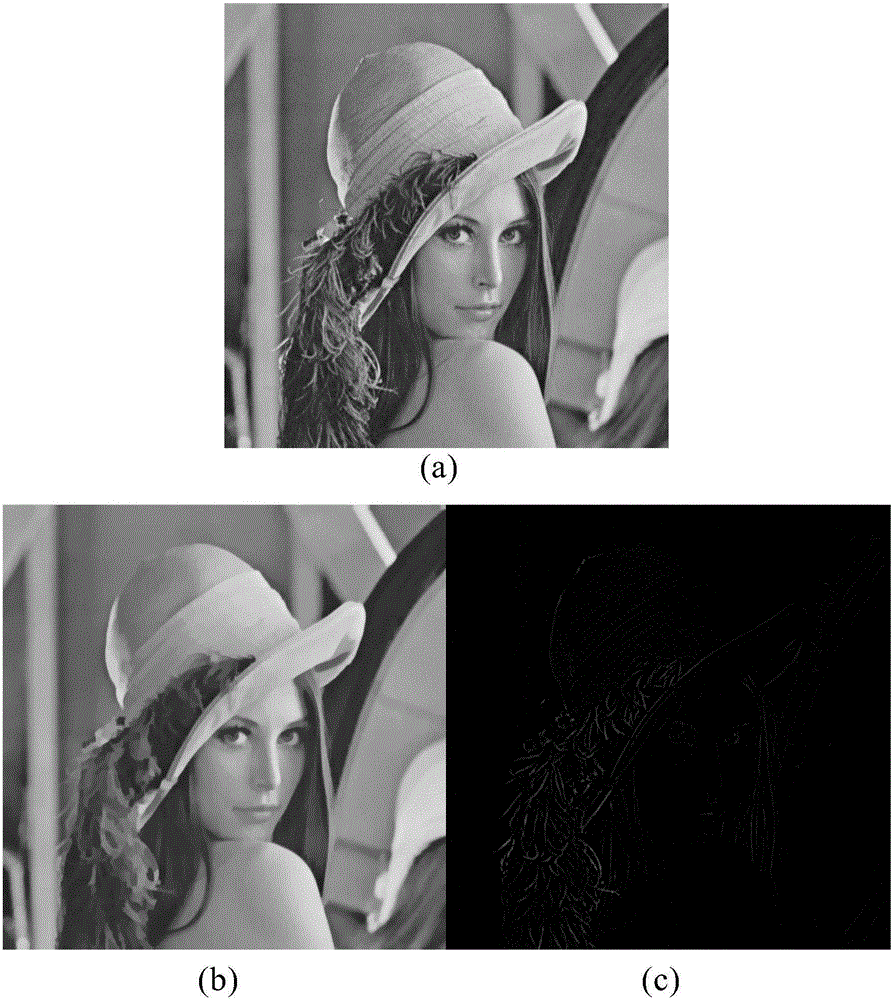

[0046] In step S1, image decomposition is performed: an image f is decomposed into a structure part u and a texture part v, such that f = u + v. The structure part is relatively smooth and has sharp edges, while the texture part contains the texture and fine details of the image. The decomposition uses a method based on total variation. Total variation measures the accumulated amount of change in a signal; for a two-dimensional image, it is the sum of the gradient magnitudes over the image. The image decomposition problem is solved by minimizing the following functional:

[0047] min u ∫ [ ...
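To make the f = u + v decomposition concrete, the following is a minimal sketch in Python with NumPy. Because the minimization formula above is truncated in this excerpt, the sketch does not reproduce the patent's functional. It assumes instead a standard ROF-style energy, a smoothed total variation of u plus a quadratic fidelity term (lam / 2) * ||u - f||^2, minimized by plain gradient descent. The function names `total_variation` and `decompose` and the parameters `lam`, `eps`, and `n_iter` are hypothetical, chosen only for illustration.

```python
import numpy as np


def grad(u):
    """Forward-difference gradient; the difference is zero at the far border."""
    dx = np.diff(u, axis=1, append=u[:, -1:])
    dy = np.diff(u, axis=0, append=u[-1:, :])
    return dx, dy


def div(px, py):
    """Backward-difference divergence (negative adjoint of grad above)."""
    return np.diff(px, axis=1, prepend=0.0) + np.diff(py, axis=0, prepend=0.0)


def total_variation(img):
    """Discrete isotropic total variation: sum of gradient magnitudes."""
    dx, dy = grad(np.asarray(img, dtype=float))
    return float(np.sum(np.sqrt(dx ** 2 + dy ** 2)))


def decompose(f, lam=1.0, eps=0.1, n_iter=200):
    """Split f into structure u and texture v = f - u.

    Assumed energy (not taken from the source):
        E(u) = sum sqrt(|grad u|^2 + eps^2) + (lam / 2) * ||u - f||^2
    """
    f = np.asarray(f, dtype=float)
    u = f.copy()
    # Step size 1/L, where L bounds the Lipschitz constant of the gradient of E.
    step = 1.0 / (8.0 / eps + lam)
    for _ in range(n_iter):
        dx, dy = grad(u)
        norm = np.sqrt(dx ** 2 + dy ** 2 + eps ** 2)
        # Gradient of E: minus divergence of the normalized gradient field,
        # plus the fidelity term pulling u back toward f.
        g = -div(dx / norm, dy / norm) + lam * (u - f)
        u -= step * g
    return u, f - u


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    f = np.zeros((32, 32))
    f[:, 16:] = 1.0                      # a sharp vertical edge (structure)
    f += 0.1 * rng.standard_normal(f.shape)  # fine-scale fluctuation (texture-like)
    u, v = decompose(f)
    print("TV(f) =", total_variation(f))
    print("TV(u) =", total_variation(u))
```

A smaller `lam` weights the fidelity term less, so u becomes smoother and more of the image moves into v. The smoothing parameter `eps` is there only to keep the gradient well defined where the image is flat; a dedicated solver (for example a primal-dual or Chambolle-type scheme) would normally replace this simple descent loop.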
