Motion estimation block matching method based on H.265 video coding

A motion estimation and video coding technology, applied in the fields of digital video signal modification, electrical components, image communication, etc. It addresses the problem that block matching in motion estimation is time-consuming. The stated effects are reduced computing time and reduced coding time, with image quality and transmission bit rate also listed among the affected properties.


Examples

Specific Embodiment 1

[0032] Specific Embodiment 1: This embodiment is described with reference to Figure 9. The motion estimation block matching method based on H.265 video coding in this embodiment proceeds as follows:

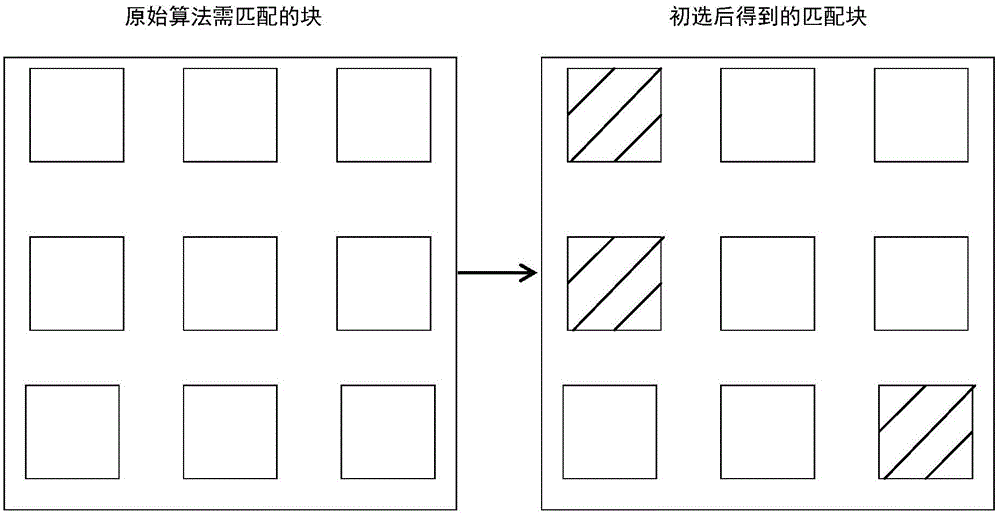

[0033] Figure 1 shows a schematic diagram of the preliminary selection.

[0034] Step 1, preliminary selection stage: according to the division characteristics of the inter prediction unit, select the corresponding down-sampling scheme, and select the candidate matching group from all matching blocks according to the set adaptive threshold;

[0035] Step 2, selection stage: evaluate the candidate matching group obtained in the preliminary selection stage using the rate-distortion optimization criterion, select the final matching block, and thereby complete the block matching process in motion estimation.

[0036] In Step 1, the preliminary selection effectively reduces the number of pixels to be matched and selects suitable matching blocks. In t...
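The two stages described above can be illustrated with a minimal sketch. It is not the patented procedure: the source does not give the adaptive threshold rule or the bit-cost model, so the `margin` parameter, the `mv_bits` callback, and the cost form J = D + lam * R (the usual rate-distortion cost, with SAD as the distortion D) are assumptions made for illustration only. Blocks are flat lists of pixel values.

```python
def preliminary_select(cur, candidates, sample_indices, margin):
    """Stage 1: keep candidates whose sub-sampled SAD is close to the best one.

    candidates: list of (motion_vector, reference_block) pairs.
    margin: hypothetical stand-in for the adaptive threshold in the source.
    """
    if not candidates:
        raise ValueError("no candidate matching blocks")
    scored = []
    for mv, ref in candidates:
        # Only the down-sampled pixel positions are compared here.
        sad = sum(abs(cur[i] - ref[i]) for i in sample_indices)
        scored.append((sad, mv, ref))
    threshold = min(s for s, _, _ in scored) + margin
    return [(mv, ref) for s, mv, ref in scored if s <= threshold]


def final_select(cur, group, mv_bits, lam):
    """Stage 2: pick the motion vector with the lowest cost J = D + lam * R."""
    def cost(item):
        mv, ref = item
        distortion = sum(abs(a - b) for a, b in zip(cur, ref))  # full SAD
        return distortion + lam * mv_bits(mv)
    return min(group, key=cost)[0]


# Usage with toy data; the bit estimate below is a made-up placeholder.
cur = [10, 20, 30, 40]
candidates = [
    ((0, 0), [10, 25, 30, 40]),
    ((1, 0), [10, 20, 31, 40]),
    ((4, 4), [50, 20, 90, 40]),
]
group = preliminary_select(cur, candidates, sample_indices=[0, 2], margin=4)
best_mv = final_select(cur, group, lambda mv: abs(mv[0]) + abs(mv[1]), lam=1)
```

The point of the split is that the expensive full comparison and rate estimate run only on the small group that survives the cheap sub-sampled comparison.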

Specific Embodiment 2

[0037] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in the preliminary selection stage of Step 1, in which the corresponding down-sampling scheme is selected according to the division characteristics of the inter prediction unit and the candidate matching group is selected from all matching blocks. The specific process is:

[0038] Step 1-1: the division modes of the inter prediction unit are shown in Figures 2a, 2b, 2c, 2d, 2e, 2f, 2g, and 2h. The coding standard defines eight kinds of prediction unit division, and the resulting shapes are either squares or rectangles;

[0039] The down-sampling templates for prediction blocks of different shapes are shown in Figures 3 and 4. For a square prediction unit, determine whether it is a 32x32 or 64x64 prediction unit (the down-sampling is shown in Figure 5); if yes, use the 米-shaped ("rice character", i.e. asterisk-like) down-sampling scheme; if not, use the down-sampli...
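To give a feel for how much a 米-shaped template can reduce the number of matched pixels, here is a small sketch. The actual templates are defined in Figures 3 to 5, which are not reproduced here, so the mask below is an assumption: it samples the middle row, the middle column, and both diagonals of an n x n block, which is the plain geometric reading of the 米 shape. The function names are hypothetical.

```python
def rice_mask(n):
    """Return an n x n boolean mask shaped like the character 米.

    Assumed layout (not taken from the patent figures): middle row,
    middle column, main diagonal, and anti-diagonal are sampled.
    """
    c = n // 2
    return [
        [(r == c) or (col == c) or (r == col) or (r + col == n - 1)
         for col in range(n)]
        for r in range(n)
    ]


def count_sampled(mask):
    """Count the pixels selected by a mask."""
    return sum(cell for row in mask for cell in row)


# Under this assumed layout a 32x32 block keeps 124 of its 1024 pixels.
sampled_32 = count_sampled(rice_mask(32))
```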

Specific Embodiment 3

[0045] Specific Embodiment 3: This embodiment differs from Specific Embodiment 1 or 2 in that the down-sampling template in Step 1-2 is selected as shown in Figure 5, from which the appropriate template is chosen. The absolute value of the single-pixel difference between the previous frame (time t) and the current frame (time t+1) in the prediction unit is then calculated using the following formula:

[0046] SAD_c=abs(piOrg[i]-piCur[i])

[0047] where piOrg is the pixel in the current frame, piCur is the pixel in the previous frame, abs denotes the absolute value, SAD_c is the absolute value of the single-pixel difference between the previous frame (time t) and the current frame (time t+1) in the prediction unit, and i is the index of the i-th pixel in the prediction unit.
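The per-pixel formula can be written out as a short sketch. The function `pixel_abs_diffs` follows the formula in [0046] directly; `block_sad`, which sums SAD_c over either all pixels or a chosen subset of indices, is an assumption about how the per-pixel values would be combined with a down-sampling template, since the visible text defines only the single-pixel term.

```python
def pixel_abs_diffs(pi_org, pi_cur):
    """Return SAD_c = abs(piOrg[i] - piCur[i]) for every pixel index i."""
    if len(pi_org) != len(pi_cur):
        raise ValueError("blocks must contain the same number of pixels")
    return [abs(o - c) for o, c in zip(pi_org, pi_cur)]


def block_sad(pi_org, pi_cur, sample_indices=None):
    """Sum SAD_c over all pixels, or only over sample_indices if given.

    The summation and the index subset are illustrative assumptions.
    """
    diffs = pixel_abs_diffs(pi_org, pi_cur)
    if sample_indices is None:
        return sum(diffs)
    return sum(diffs[i] for i in sample_indices)


# Usage: piOrg from the current frame, piCur from the previous frame.
pi_org = [10, 20, 30, 40]
pi_cur = [12, 18, 30, 50]
per_pixel = pixel_abs_diffs(pi_org, pi_cur)
```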
