Face Gender Recognition Method Based on Fireworks Deep Belief Network

A face gender recognition technology based on a deep belief network, applied in the field of face gender recognition. The fireworks algorithm optimizes the initial parameter space of the deep belief network, which yields global optimization, strong anti-interference capability and a high recognition rate.


Examples

Embodiment 1

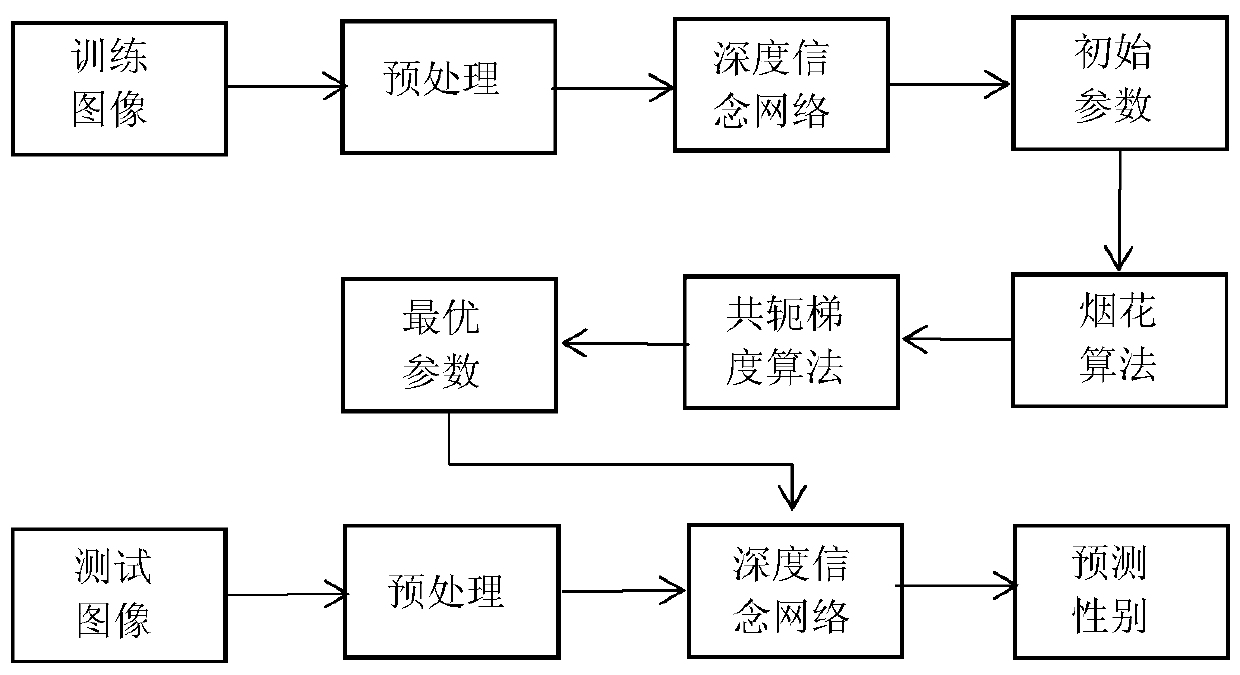

[0044] Taking the internationally recognized Extended Cohn-Kanade face database as the input images and MATLAB 2010b as the experimental platform, face gender recognition is performed as an example. As shown in Figure 1, the method is as follows:

[0045] 1. Raw image preprocessing

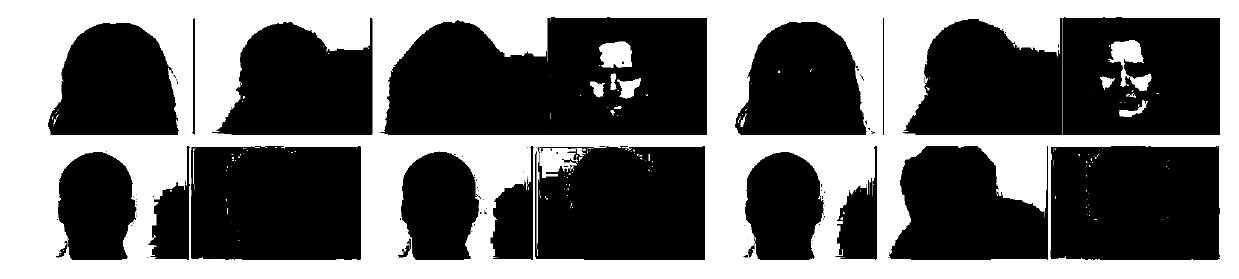

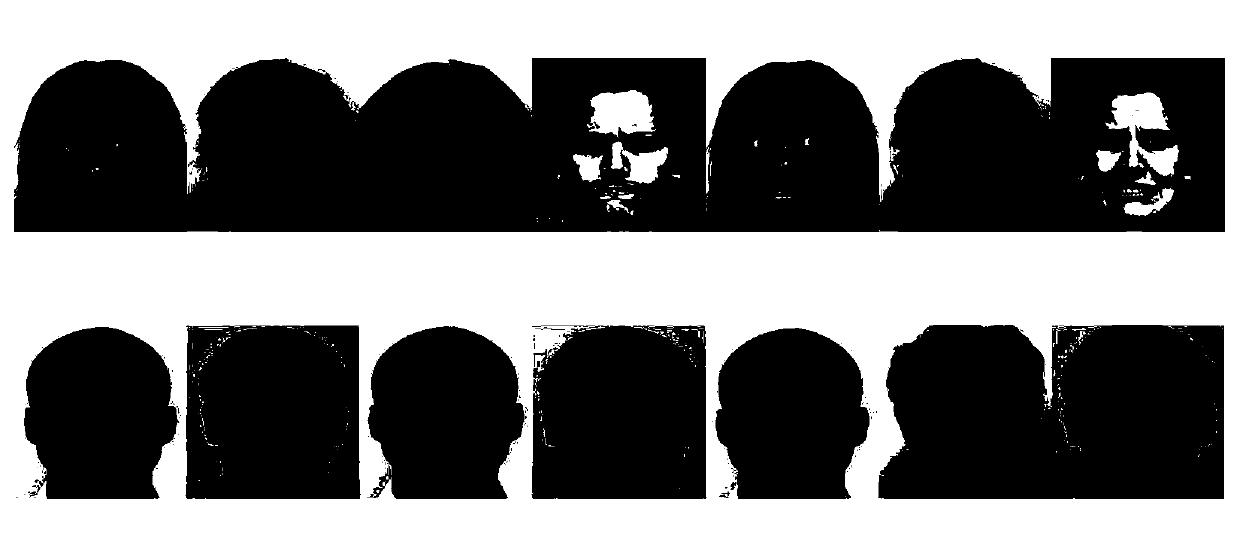

[0046] The Extended Cohn-Kanade face database provides 210 training images and 140 test images; some of them are shown in Figure 2. The original color images in Figure 2 are converted to grayscale, the face region is segmented, and each face image is resampled to 24×24 pixels by bicubic interpolation, as shown in Figure 3. Each segmented image is then converted into a one-dimensional vector, so that each row vector represents one image.
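The preprocessing steps above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' MATLAB code, and it rests on several assumptions: the face region is already cropped (the segmentation method is not specified here), grayscale conversion uses BT.601 luma weights, bicubic resampling uses the Keys kernel with a = -0.5 and omits the antialiasing filter that MATLAB's `imresize` applies when shrinking, and pixel values are scaled to [0, 1]. All helper names (`to_grayscale`, `resize_bicubic`, `preprocess`, `build_matrix`) are hypothetical.

```python
import math

def to_grayscale(rgb):
    """RGB rows of (R, G, B) tuples (0-255) -> grayscale using BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]

def cubic(t, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is the common bicubic choice."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0

def resize_bicubic(img, out_h=24, out_w=24):
    """Bicubic resampling with pixel-centre alignment and replicated borders
    (no antialiasing filter, unlike MATLAB's imresize when shrinking)."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        y = (i + 0.5) * in_h / out_h - 0.5      # source row coordinate
        y0 = math.floor(y)
        row = []
        for j in range(out_w):
            x = (j + 0.5) * in_w / out_w - 0.5  # source column coordinate
            x0 = math.floor(x)
            acc = 0.0
            for m in range(y0 - 1, y0 + 3):     # 4x4 neighbourhood
                wy = cubic(y - m)
                yy = min(max(m, 0), in_h - 1)
                for n in range(x0 - 1, x0 + 3):
                    xx = min(max(n, 0), in_w - 1)
                    acc += wy * cubic(x - n) * img[yy][xx]
            row.append(acc)
        out.append(row)
    return out

def preprocess(rgb_face, size=24):
    """Cropped RGB face -> flat list of size*size values in [0, 1]."""
    small = resize_bicubic(to_grayscale(rgb_face), size, size)
    # Bicubic can overshoot slightly, so clamp before scaling to [0, 1]
    return [min(max(v, 0.0), 255.0) / 255.0 for row in small for v in row]

def build_matrix(faces):
    """One row vector (24 * 24 = 576 values) per image."""
    return [preprocess(f) for f in faces]
```

Calling `build_matrix(train_faces)` on the cropped training faces would give one 576-element row per image, matching the 24×24 input layer described for the network.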

[0047] 2. Training Deep Belief Network

[0048] The deep belief network is configured with 1 input layer, 3 hidden layers and 1 output layer, where the number of node...
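A deep belief network with this layer structure is conventionally pretrained greedily, one restricted Boltzmann machine (RBM) per hidden layer, using contrastive divergence; the text here does not state which training rule the patent uses, so the sketch below shows generic CD-1 pretraining under stated assumptions. The hidden-layer sizes are hypothetical (the node counts are truncated above), inputs are assumed to lie in [0, 1], and the weights start from small random Gaussian values as a placeholder, whereas the method described here obtains its initial parameters from the fireworks algorithm. `RBM` and `pretrain_dbn` are hypothetical names.

```python
import math
import random

def sigmoid(x):
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

class RBM:
    """Bernoulli RBM; W[i][j] links visible unit i to hidden unit j."""

    def __init__(self, n_visible, n_hidden, rng):
        self.nv, self.nh, self.rng = n_visible, n_hidden, rng
        # Placeholder initialisation (small Gaussian noise), not the FWA result
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(self.nv)))
                for j in range(self.nh)]

    def visible_probs(self, h):
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(self.nh)))
                for i in range(self.nv)]

    def cd1_update(self, v0, lr=0.1):
        """One CD-1 step on a single sample; returns squared reconstruction error."""
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hiddens
        v1 = self.visible_probs(h0)                                 # reconstruction
        ph1 = self.hidden_probs(v1)
        for i in range(self.nv):
            for j in range(self.nh):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        for i in range(self.nv):
            self.b[i] += lr * (v0[i] - v1[i])
        for j in range(self.nh):
            self.c[j] += lr * (ph0[j] - ph1[j])
        return sum((a - r) ** 2 for a, r in zip(v0, v1))

def pretrain_dbn(X, hidden_sizes, epochs=5, lr=0.1, seed=0):
    """Greedy layer-wise pretraining: each RBM is trained on the hidden
    activations produced by the RBM below it."""
    rng = random.Random(seed)
    layers, data = [], X
    for nh in hidden_sizes:
        rbm = RBM(len(data[0]), nh, rng)
        for _ in range(epochs):
            for v in data:
                rbm.cd1_update(v, lr)
        layers.append(rbm)
        data = [rbm.hidden_probs(v) for v in data]
    return layers
```

For the 24×24 inputs, a call such as `pretrain_dbn(X, [100, 50, 20])` would build a 576-100-50-20 stack; those three hidden sizes are illustrative only. The pretrained layers would then normally be topped with the output layer and fine-tuned with labels.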

Embodiment 2

[0073] Taking the internationally recognized MORPH face database as the input images and MATLAB 2010b as the experimental platform, face gender recognition is performed as an example. As shown in Figure 1, the method is as follows:

[0074] 1. Raw image preprocessing

[0075] The MORPH face database provides 1400 training images and 1000 test images; some of them are shown in Figure 4. The original color images in Figure 4 are converted to grayscale, the face region is segmented, and each face image is resampled to 24×24 pixels by bicubic interpolation, as shown in Figure 5. Each segmented image is then converted into a one-dimensional vector, so that each row vector represents one image.

[0076] 2. Training Deep Belief Network

[0077] The deep belief network is configured with 1 input layer, 3 hidden layers and 1 output layer, where the number of nodes in the input layer is...
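The fireworks algorithm (FWA) named in the title is a population-based global optimizer: each firework "explodes" into sparks, fitter fireworks produce more sparks within a smaller amplitude, Gaussian mutation sparks add diversity, and the next generation is selected from fireworks plus sparks. The sketch below follows the basic FWA proposed by Tan and Zhu (2010) with two simplifications, stated plainly: out-of-range coordinates are clipped to the box rather than mapped back by modulo, and selection keeps the best location plus random survivors instead of the original distance-based rule. How the patent encodes the network's initial parameters as a firework and which fitness it minimizes are not given here, so `f` is a stand-in objective and `fireworks_optimize` is a hypothetical name.

```python
import random

def fireworks_optimize(f, dim, lo, hi, n_fireworks=5, m=50, a=0.04, b=0.8,
                       amp_max=None, n_gauss=5, iters=100, seed=0):
    """Minimise f over the box [lo, hi]^dim; returns (best_x, f(best_x))."""
    rng = random.Random(seed)
    eps = 1e-12
    amp_max = (hi - lo) if amp_max is None else amp_max

    def clip(v):
        return min(max(v, lo), hi)

    def random_dims():
        # Random non-empty subset of coordinates to perturb
        return rng.sample(range(dim), rng.randint(1, dim))

    fireworks = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_fireworks)]
    for _ in range(iters):
        fit = [f(x) for x in fireworks]
        y_min, y_max = min(fit), max(fit)
        sum_worse = sum(y_max - g for g in fit)
        sum_better = sum(g - y_min for g in fit)
        sparks = []
        for x, fx in zip(fireworks, fit):
            # Fitter fireworks (lower f) get more sparks, bounded to [a*m, b*m] ...
            s = m * (y_max - fx + eps) / (sum_worse + eps)
            s = int(round(min(max(s, a * m), b * m)))
            # ... and a smaller explosion amplitude
            amp = amp_max * (fx - y_min + eps) / (sum_better + eps)
            for _ in range(s):
                spark = list(x)
                h = amp * rng.uniform(-1.0, 1.0)  # same displacement on chosen dims
                for k in random_dims():
                    spark[k] = clip(spark[k] + h)
                sparks.append(spark)
        # Gaussian mutation sparks: scale chosen coordinates by g ~ N(1, 1)
        for _ in range(n_gauss):
            spark = list(rng.choice(fireworks))
            g = rng.gauss(1.0, 1.0)
            for k in random_dims():
                spark[k] = clip(spark[k] * g)
            sparks.append(spark)
        # Simplified selection: keep the best location, fill the rest at random
        pool = sorted(fireworks + sparks, key=f)
        fireworks = [pool[0]] + rng.sample(pool[1:], n_fireworks - 1)
    best = min(fireworks, key=f)
    return best, f(best)
```

To use this for network initialization, one would flatten the initial weights and biases into `x` and let `f` return, for example, the training error of the network built from `x`; that encoding is an assumption, not something stated in the text above. Keeping the best location in every generation makes the best fitness non-increasing across iterations.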

Embodiment 3

[0102] Taking the internationally recognized LFW face database as the input images and MATLAB 2010b as the experimental platform, face gender recognition is performed as an example. As shown in Figure 1, the method is as follows:

[0103] 1. Raw image preprocessing

[0104] The LFW face database provides 400 training images and 200 test images; some of them are shown in Figure 6. The original color images in Figure 6 are converted to grayscale, the face region is segmented, and each face image is resampled to 24×24 pixels by bicubic interpolation, as shown in Figure 7. Each segmented image is then converted into a one-dimensional vector, so that each row vector represents one image.

[0105] 2. Training Deep Belief Network

[0106] The deep belief network is configured with 1 input layer, 3 hidden layers and 1 output layer, where the number of nodes in the input layer is 24×24, and t...
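After training, each 576-dimensional test vector is propagated through the network, and the recognition rate is the fraction of test images whose predicted gender matches the label. The sketch below assumes a single sigmoid output unit thresholded at 0.5 with a hypothetical 1/0 gender encoding, because the output-layer node count is truncated above; `forward`, `predict_gender` and `recognition_rate` are hypothetical helper names.

```python
import math

def sigmoid(x):
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def forward(x, layers):
    """Propagate x through sigmoid layers; each layer is (W, c) with
    W[i][j] the weight from input i to unit j and c the unit biases."""
    for W, c in layers:
        x = [sigmoid(c[j] + sum(x[i] * W[i][j] for i in range(len(x))))
             for j in range(len(c))]
    return x

def predict_gender(x, layers, threshold=0.5):
    # Hypothetical encoding: output >= threshold -> label 1, else label 0
    return 1 if forward(x, layers)[0] >= threshold else 0

def recognition_rate(samples, labels, layers):
    """Fraction of samples whose predicted label equals the true label."""
    if not samples or len(samples) != len(labels):
        raise ValueError("need equal-length, non-empty samples and labels")
    correct = sum(predict_gender(x, layers) == y for x, y in zip(samples, labels))
    return correct / len(labels)
```

With the 200 LFW test images, for example, 180 correct predictions would correspond to a recognition rate of 0.9 (90%).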

