Near-duplicate video retrieval method incorporating global R features

A near-duplicate video retrieval technique that incorporates global information, applied in the field of near-duplicate video retrieval. It addresses three problems: local features carry only local texture information, they ignore the global information of feature points, and video retrieval accuracy is consequently low.


Embodiment Construction

[0074] The present invention will be described in detail below with reference to the accompanying drawings and specific embodiments.

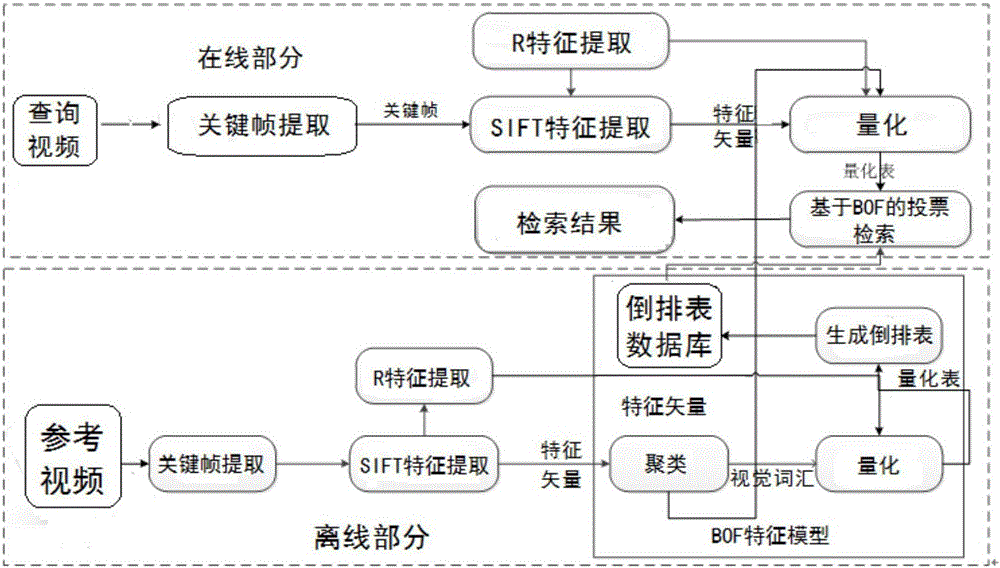

[0075] As shown in Figure 1, the framework of the near-duplicate video retrieval method fused with global R features is divided into two parts: an offline part and an online part. The offline part processes the target video database and generates the inverted index table required by the online query; the online part mainly carries out the query of a query video against the target video database.
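
The division of labor between the two parts can be illustrated with a minimal Python sketch. It assumes each key frame has already been quantized into a list of integer visual word IDs; the input format and the names `build_inverted_index` and `query` are hypothetical, and the simple shared-word vote stands in for whatever scoring the method actually uses, which this section does not specify.

```python
from collections import Counter, defaultdict


def build_inverted_index(reference_videos):
    """Offline part (sketch): map each visual word ID to the set of
    reference videos whose key frames contain that word.

    reference_videos: dict of video_id -> list of key frames, where each
    key frame is a list of visual word IDs (hypothetical input format).
    """
    index = defaultdict(set)
    for video_id, key_frames in reference_videos.items():
        for frame_words in key_frames:
            for word in frame_words:
                index[word].add(video_id)
    return index


def query(index, query_key_frames, top_k=3):
    """Online part (sketch): each distinct visual word in the query casts
    one vote for every reference video listed under it in the index.
    Returns up to top_k (video_id, votes) pairs, highest vote first."""
    votes = Counter()
    query_words = {w for frame in query_key_frames for w in frame}
    for word in query_words:
        # .get avoids inserting empty entries into the defaultdict
        for video_id in index.get(word, ()):
            votes[video_id] += 1
    return votes.most_common(top_k)


refs = {"A": [[1, 2, 3], [3, 4]], "B": [[5, 6], [6, 7]], "C": [[1, 5]]}
index = build_inverted_index(refs)
print(query(index, [[1, 3], [4]]))  # [('A', 3), ('C', 1)]
```

The offline step runs once over the reference library, while the online step reads only the posting lists of words that occur in the query, so query cost does not grow with the number of reference videos that share no words with the query.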

[0076] The offline part operates on the reference video library. It performs key frame extraction, SIFT feature extraction, R feature extraction, feature clustering analysis, and quantization of feature vectors into visual words, producing the visual vocabulary, the associated features, and the inverted index table used by the online query part.
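
The clustering and quantization steps can be sketched as a standard bag-of-visual-words pipeline. This sketch assumes plain k-means (Lloyd's algorithm) for the feature clustering analysis and squared Euclidean distance for assigning a descriptor to a visual word; the section names neither. Real SIFT descriptors are 128-dimensional, whereas the example below uses toy 2-D vectors, and `nearest`, `build_vocabulary`, and `quantize` are hypothetical names.

```python
import random


def nearest(vec, centroids):
    """Index of the centroid closest to vec (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, centroids[i])),
    )


def build_vocabulary(descriptors, k, iterations=20, seed=0):
    """Assumed clustering step: plain k-means (Lloyd's algorithm).
    The k resulting centroids act as the visual vocabulary."""
    rng = random.Random(seed)
    # Initialise centroids with k distinct descriptors chosen at random.
    centroids = [list(d) for d in rng.sample(descriptors, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for d in descriptors:
            clusters[nearest(d, centroids)].append(d)
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster empties
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def quantize(descriptors, centroids):
    """Quantization step: map each descriptor to its nearest visual word ID."""
    return [nearest(d, centroids) for d in descriptors]


descriptors = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
vocabulary = build_vocabulary(descriptors, k=2)
print(quantize(descriptors, vocabulary))
```

With these two well-separated groups, the first three descriptors receive one visual word ID and the last three receive the other; the resulting ID lists are the kind of input the inverted index is built from. For a real vocabulary of thousands of words, an optimized clustering library would replace this pure-Python loop.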

[0077] The online part completes the que...

