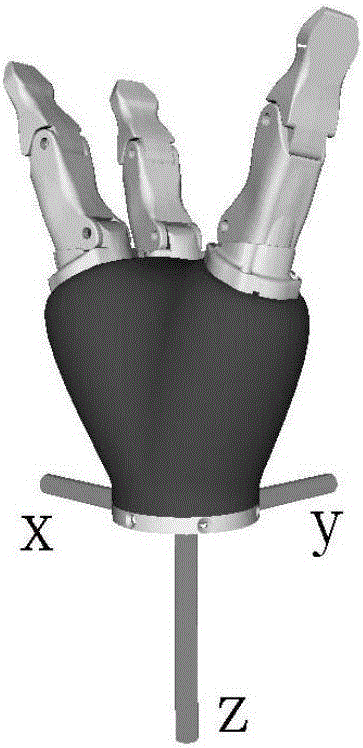

Robotic gripper grasp planning method based on depth projection, and control device

A technology relating to robotic grippers and control devices, applied in the fields of manipulators, program-controlled manipulators, manufacturing tools, and the like. It addresses problems such as slow planning speed, dependence on stable lighting, and unrealistic modeling, with the effects of improving overall efficiency, reducing online grasp-planning time, and reducing quantity.

Examples

Embodiment 1

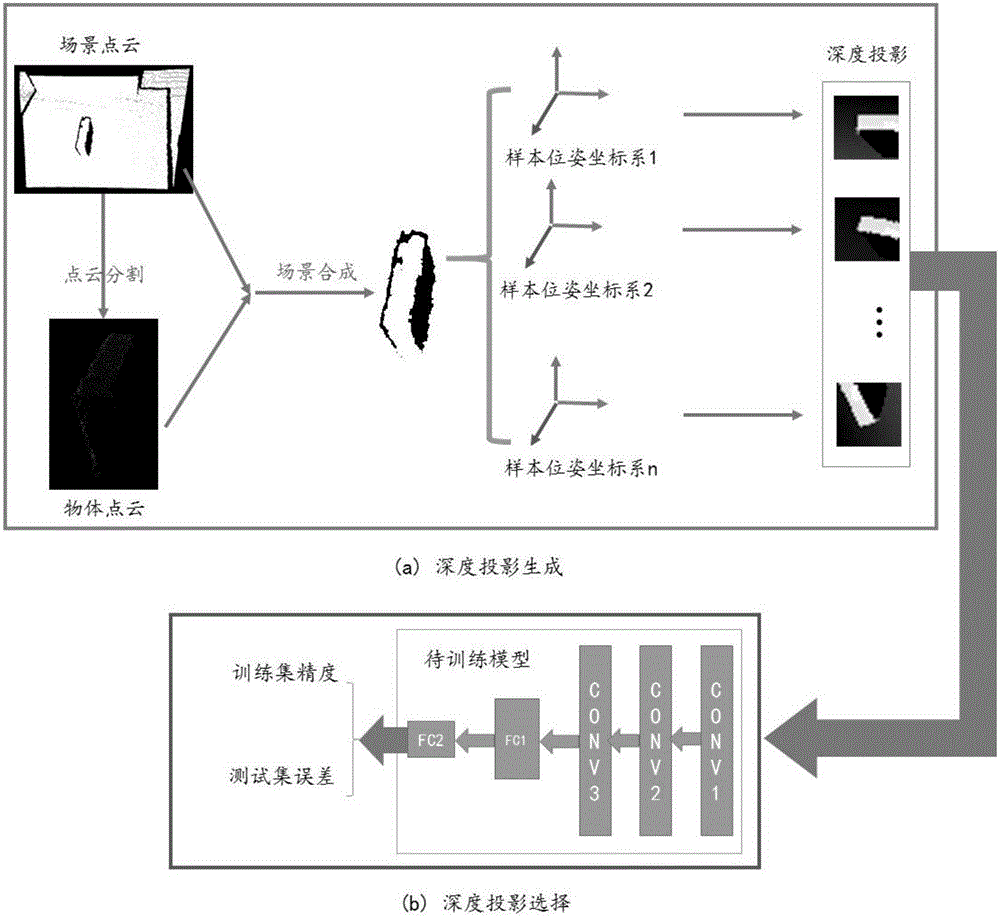

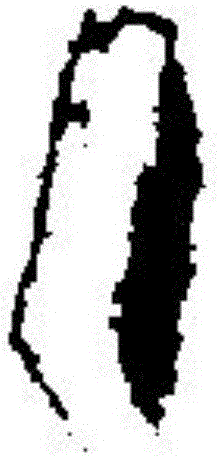

[0085] Embodiment 1: The control device includes a first computing module and a control module.

[0086] The first computing module generates candidate grasp pose samples from the depth information of the current scene and uses the trained grasp selection neural network to obtain the optimal grasp pose; the grasp selection neural network serves as the evaluation criterion for selecting the optimal grasp pose from the candidate grasp pose samples. The first computing module includes a first acquisition unit, a first pose sample generation unit, and a grasp selection unit. The first acquisition unit obtains the depth information of the current scene, uses it to generate the coordinate systems of the candidate grasp poses, and obtains the current scene depth information synthesized in the coordinate system of each candidate grasp pose; specifically, the first acquisition unit obtains the current scene point cloud information; from th...
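The depth-projection and selection steps can be illustrated with a minimal Python sketch. It assumes that each candidate grasp pose is given as a rotation matrix and translation in the scene frame, that the "depth information synthesized in the candidate grasp pose's coordinate system" is an orthographic depth image taken along the grasp z-axis, and that a caller-supplied `score` function stands in for the trained grasp selection neural network. The names `to_grasp_frame`, `depth_image`, and `select_best`, the grid size, and the window dimensions are hypothetical; the patent text shown here does not specify them.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]
# Hypothetical pose representation: (3x3 rotation matrix R, translation t), where
# the columns of R are the grasp frame's axes expressed in the scene frame.
Pose = Tuple[Sequence[Sequence[float]], Point]
DepthImage = List[List[float]]


def to_grasp_frame(points: Sequence[Point], pose: Pose) -> List[Point]:
    """Express scene points in the grasp frame: p_g = R^T (p - t)."""
    R, t = pose
    out: List[Point] = []
    for p in points:
        d = [p[i] - t[i] for i in range(3)]
        # Component j of R^T d is column j of R dotted with d.
        out.append(tuple(sum(R[i][j] * d[i] for i in range(3)) for j in range(3)))
    return out


def depth_image(points_g: Sequence[Point], size: int = 8,
                half_width: float = 0.05, max_depth: float = 0.2) -> DepthImage:
    """Orthographic projection along the grasp z-axis onto a size x size grid.

    Each cell keeps the nearest depth (smallest z in [0, max_depth));
    cells that receive no point keep the value max_depth.
    """
    img = [[max_depth] * size for _ in range(size)]
    cell = 2 * half_width / size
    for x, y, z in points_g:
        if not (0.0 <= z < max_depth):
            continue  # behind the gripper or beyond the assumed depth window
        col = int((x + half_width) // cell)
        row = int((y + half_width) // cell)
        if 0 <= row < size and 0 <= col < size:
            img[row][col] = min(img[row][col], z)
    return img


def select_best(points: Sequence[Point], candidates: Sequence[Pose],
                score: Callable[[DepthImage], float]) -> Tuple[Pose, float]:
    """Project the scene into every candidate frame and return the best-scoring pose."""
    scored = [(score(depth_image(to_grasp_frame(points, c))), i)
              for i, c in enumerate(candidates)]
    best_score, best_idx = max(scored)
    return candidates[best_idx], best_score
```

For example, with a toy `score` that simply counts occupied cells, a candidate placed just above a small cluster of points is preferred over one placed far to the side. One plausible reason for projecting into each candidate's own frame is that the evaluator then always sees the local geometry from the same canonical viewpoint, so it does not also have to learn the pose itself.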

Embodiment 2

[0089] Embodiment 2: In addition to the modules of Embodiment 1, the control device includes a second computing module. The second computing module uses scene depth information to generate positive and negative grasp pose samples offline and trains the grasp selection neural network. The second computing module includes a second acquisition unit, a second pose sample generation unit, and a training unit.
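The offline training step can be sketched as binary classification on labeled samples. The patent text shown here does not describe the network architecture or how positive and negative samples are labeled, so this sketch assumes that each sample is already a flattened depth image with a label (1 = positive, 0 = negative), and it substitutes a single-layer logistic classifier trained by stochastic gradient descent on binary cross-entropy for the grasp selection neural network. The names `train_grasp_classifier` and `grasp_score` and all hyperparameters are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

# (flattened depth image, label) with label 1 = positive grasp, 0 = negative grasp
Sample = Tuple[Sequence[float], int]
Model = Tuple[List[float], float]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_grasp_classifier(samples: Sequence[Sample], epochs: int = 200,
                           lr: float = 0.5, seed: int = 0) -> Model:
    """Fit weights w and bias b by SGD on binary cross-entropy."""
    rng = random.Random(seed)
    w = [0.0] * len(samples[0][0])
    b = 0.0
    order = list(range(len(samples)))
    for _ in range(epochs):
        rng.shuffle(order)
        for k in order:
            x, y = samples[k]
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            g = p - y  # gradient of the cross-entropy loss w.r.t. the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


def grasp_score(model: Model, x: Sequence[float]) -> float:
    """Estimated probability that the grasp represented by x is a positive sample."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
```

A function like `grasp_score` could then serve as the `score` used during online selection; a real system would replace the logistic model with the trained neural network.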

[0090] The second acquisition unit acquires the synthesized scene depth information; specifically, it acquires scene point cloud information, segments the point cloud of the object to be grasped and the pose information of the carrier from the scene point cloud, and uses the extracted object point cloud and carrier pose information to construct the synthesized scene depth information. The second pose sample generation unit...
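The segmentation step can be sketched under the assumption that the carrier (for example a table or tray) is planar: a RANSAC plane fit recovers the carrier's pose as a unit normal plus a point on the plane, and the points lying off that plane are taken as the object to be grasped. RANSAC is a substituted technique for illustration; the patent text shown does not say how the segmentation is performed, the function names and tolerance values are hypothetical, and constructing the synthesized depth information from these outputs is not shown.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[Point, Point]  # (unit normal, point on the plane)


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Point, b: Point) -> Point:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _dot(a: Point, b: Point) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def fit_plane_ransac(points: Sequence[Point], iters: int = 200,
                     tol: float = 0.005, seed: int = 0) -> Plane:
    """Return the plane through 3 sampled points that has the most inliers."""
    rng = random.Random(seed)
    best, best_count = None, -1
    for _ in range(iters):
        a, b, c = rng.sample(list(points), 3)
        n = _cross(_sub(b, a), _sub(c, a))
        norm = _dot(n, n) ** 0.5
        if norm < 1e-12:
            continue  # degenerate (collinear) sample
        n = (n[0] / norm, n[1] / norm, n[2] / norm)
        count = sum(abs(_dot(n, _sub(p, a))) < tol for p in points)
        if count > best_count:
            best, best_count = (n, a), count
    if best is None:
        raise ValueError("no non-degenerate plane found")
    return best


def segment_object(points: Sequence[Point], iters: int = 200,
                   tol: float = 0.005, seed: int = 0) -> Tuple[Plane, List[Point]]:
    """Split a scene into the carrier plane (its pose) and the off-plane object points."""
    n, p0 = fit_plane_ransac(points, iters, tol, seed)
    obj = [p for p in points if abs(_dot(n, _sub(p, p0))) >= tol]
    return (n, p0), obj
```

In this sketch the carrier plane's normal and reference point play the role of the carrier pose; a non-planar carrier would need a different model.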
