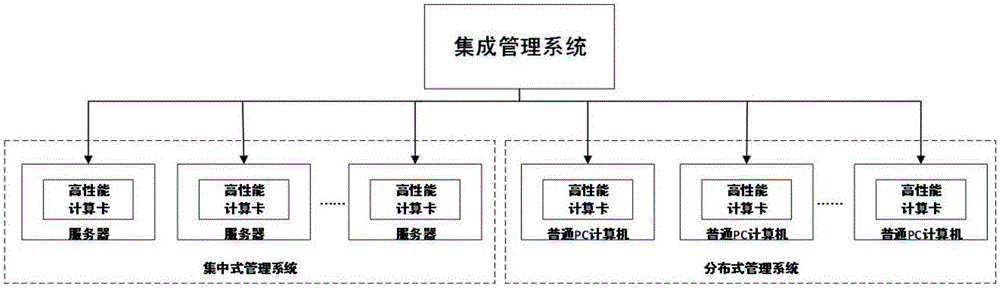

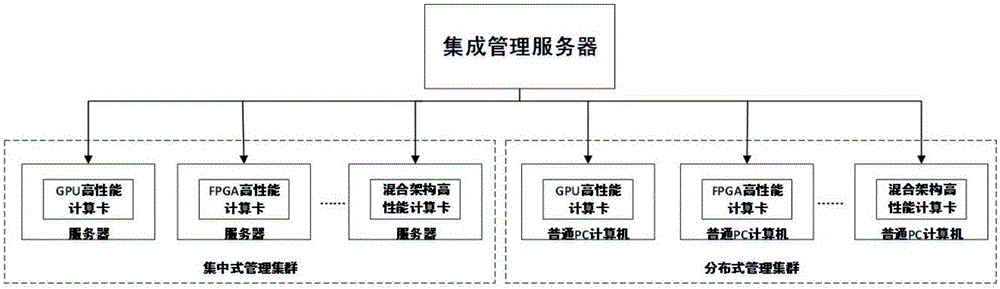

Distributed management framework based on extensible and high-performance computing and distributed management method thereof

A high-performance computing and distributed management technology, applied in electrical components, transmission systems, etc. It addresses the inability of existing approaches to meet larger data-computing requirements, and achieves the effects of avoiding single points of failure, improving utilization, and low computing power.


Examples

Example 1

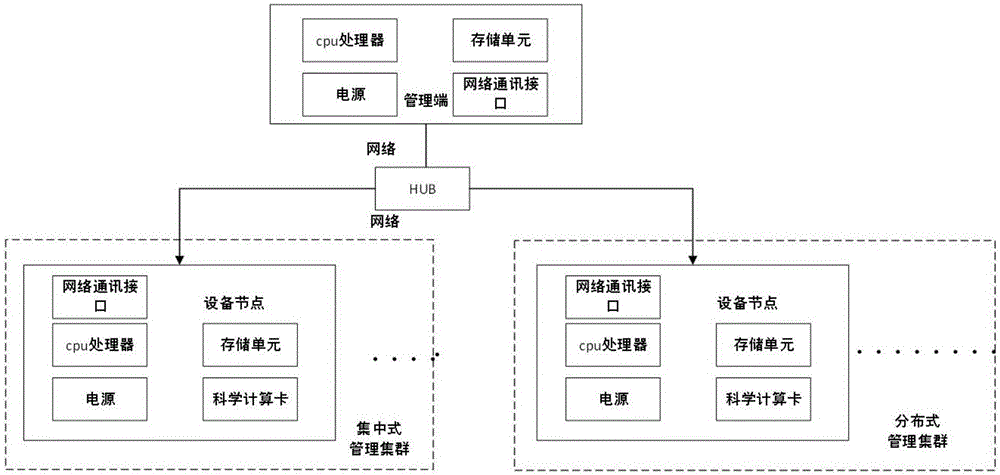

[0048] Example 1: The algorithm of task 1 is composed of two basic calculation cores: the SHA-1 calculation core and the AES-128 calculation core.
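Because a task is described by the basic calculation cores its algorithm uses, one way to picture the first thing the management terminal must know is which nodes can run those cores at all. The sketch below is a minimal illustration under assumptions: the `Task` type, the `capable_nodes` helper, and the per-node core inventory are all hypothetical and not taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """A task described by the basic calculation cores its algorithm uses."""
    name: str
    cores: tuple[str, ...]


def capable_nodes(task: Task, node_cores: dict[str, set[str]]) -> list[str]:
    """Return the nodes whose computing card provides every core the task needs."""
    required = set(task.cores)
    return sorted(node for node, cores in node_cores.items() if required <= cores)


# Hypothetical inventory of the cores each node's computing card provides.
inventory = {
    "node1": {"sha1", "aes128", "des", "md4"},
    "node2": {"sha1", "aes128", "des", "md4"},
    "node3": {"des", "md4", "sha1"},
}

task1 = Task("task 1", ("sha1", "aes128"))
print(capable_nodes(task1, inventory))  # ['node1', 'node2']
```

With this assumed inventory, only nodes 1 and 2 offer both cores of task 1, which matches the two nodes used in this example.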

[0049] Step 1: The user uploads the task to the management terminal, which analyzes task 1. By evaluating the computing resources of each computing node, the management terminal determines that load balancing is achieved when node 1 runs 30% of the total tasks and node 2 runs 70%.
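The patent does not state how the 30% / 70% split is derived from the nodes' computing resources. A simple assumption is to give each node a share proportional to a single capacity figure; the `balanced_shares` helper and the capacities below are hypothetical, chosen only to reproduce the split in this example.

```python
def balanced_shares(capacity: dict[str, float]) -> dict[str, float]:
    """Give each node a fraction of the task proportional to its capacity.

    Assumption: capacity is one throughput number per node (e.g. work units
    per second); the source does not specify the metric.
    """
    total = sum(capacity.values())
    if total <= 0:
        raise ValueError("total capacity must be positive")
    return {node: c / total for node, c in capacity.items()}


# Hypothetical capacities that reproduce the 30% / 70% split of Example 1.
shares = balanced_shares({"node1": 3.0, "node2": 7.0})
print({n: round(s, 2) for n, s in shares.items()})  # {'node1': 0.3, 'node2': 0.7}
```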

[0050] Step 2: Through the task sending unit, 30% of the tasks are sent to computing node 1 via the network port, and 70% of the tasks are sent to computing node 2 via the network port.
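Before the task sending unit can transmit anything, the task has to be cut into per-node batches that match the shares. The sketch below assumes the task can be divided into a list of independent work items (not stated in the source) and uses the largest-remainder method so the batch sizes always add up to the total; the network transfer itself is not shown, and `split_by_share` is a hypothetical name.

```python
import math


def split_by_share(items: list, shares: dict[str, float]) -> dict[str, list]:
    """Partition items into contiguous per-node batches matching the shares.

    Uses the largest-remainder method so batch sizes always sum to len(items).
    """
    n = len(items)
    exact = {node: share * n for node, share in shares.items()}
    counts = {node: math.floor(x) for node, x in exact.items()}
    leftover = n - sum(counts.values())
    # Hand any remaining items to the nodes with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda k: exact[k] - counts[k], reverse=True)
    for node in by_remainder[:leftover]:
        counts[node] += 1
    batches, start = {}, 0
    for node, count in counts.items():
        batches[node] = items[start:start + count]
        start += count
    return batches


work_items = list(range(1000))  # hypothetical: task 1 divided into 1000 items
batches = split_by_share(work_items, {"node1": 0.3, "node2": 0.7})
print({n: len(b) for n, b in batches.items()})  # {'node1': 300, 'node2': 700}
```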

[0051] Step 3: After the device node receives the data, the task is handed over to the scientific computing card for calculation. The scientific computing card obtains the operation result and uploads it to the management terminal through the network data port.
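On the node side, the computing card applies the task's cores to its batch and reports back. The sketch below stands in for the card in software and applies only the SHA-1 core (the AES-128 core is omitted because the Python standard library has no AES); the `run_on_card` function and the JSON report format are assumptions, not the patent's protocol.

```python
import hashlib
import json


def run_on_card(node_id: str, batch: list[bytes]) -> bytes:
    """Software stand-in for the scientific computing card: apply the SHA-1
    core to each item and serialise a result report for upload."""
    digests = [hashlib.sha1(item).hexdigest() for item in batch]
    report = {"node": node_id, "count": len(digests), "results": digests}
    return json.dumps(report).encode("utf-8")


payload = run_on_card("node1", [b"abc"])
print(json.loads(payload)["results"][0])
# a9993e364706816aba3e25717850c26c9cd0d89d
```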

[0052] Step 4: The management terminal obtains the data reported by each de...
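The description of Step 4 is cut off, so its exact behaviour is unknown. Assuming it collects the per-node reports and combines them, a minimal sketch could index results by node and refuse to finish while an assigned node is still silent; `merge_reports` and the report fields are hypothetical.

```python
import json


def merge_reports(payloads: list[bytes], expected_nodes: set[str]) -> dict[str, list[str]]:
    """Collect the JSON reports uploaded by each node and index results by node.

    Raises RuntimeError if a node that was assigned work has not reported.
    """
    merged: dict[str, list[str]] = {}
    for raw in payloads:
        report = json.loads(raw)
        merged[report["node"]] = report["results"]
    missing = expected_nodes - set(merged)
    if missing:
        raise RuntimeError(f"no report yet from: {sorted(missing)}")
    return merged


reports = [
    json.dumps({"node": "node1", "count": 1, "results": ["r1"]}).encode(),
    json.dumps({"node": "node2", "count": 1, "results": ["r2"]}).encode(),
]
print(merge_reports(reports, {"node1", "node2"}))  # {'node1': ['r1'], 'node2': ['r2']}
```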

Example 2

[0053] Example 2: The algorithm of task 2 consists of three basic calculation cores: the DES calculation core, the MD4 calculation core, and the SHA-1 calculation core.

[0054] Step 1: The user uploads the task to the management terminal, which analyzes task 2. By evaluating the computing resources of each computing node, the management terminal determines that load balancing is achieved when node 1 runs 30% of the total tasks, node 2 runs 30%, and node 3 runs 40%.
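One way to read "load balancing can be achieved" is that every node finishes its share at about the same time. The check below encodes that interpretation, which is an assumption rather than the patent's stated criterion: each node's estimated time is its share of the work divided by its throughput, and the split counts as balanced when the fastest and slowest nodes finish within a tolerance. The capacities and the 5% tolerance are hypothetical.

```python
def completion_times(shares: dict[str, float], capacity: dict[str, float],
                     total_work: float) -> dict[str, float]:
    """Estimated time for each node to finish its share (work / throughput)."""
    return {node: shares[node] * total_work / capacity[node] for node in shares}


def is_balanced(shares: dict[str, float], capacity: dict[str, float],
                total_work: float, tolerance: float = 0.05) -> bool:
    """True if the slowest and fastest nodes finish within `tolerance`
    (a fraction of the slowest node's time) of each other."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must add up to 1")
    times = completion_times(shares, capacity, total_work).values()
    slowest, fastest = max(times), min(times)
    return slowest - fastest <= tolerance * slowest


capacity = {"node1": 3.0, "node2": 3.0, "node3": 4.0}  # hypothetical throughputs
print(is_balanced({"node1": 0.3, "node2": 0.3, "node3": 0.4}, capacity, 1000))  # True
print(is_balanced({"node1": 0.5, "node2": 0.3, "node3": 0.2}, capacity, 1000))  # False
```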

[0055] Step 2: Through the task delivery unit, 30% of the total tasks are sent to computing node 1, 30% to computing node 2, and 40% to computing node 3, each via the network port.
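If the task is a numbered range of work items (an assumption; the source does not describe the data layout), the delivery unit can describe each node's portion as a half-open index range instead of copying the items. Computing boundaries from cumulative integer percentages keeps the ranges contiguous and guarantees every item is assigned exactly once. `dispatch_plan` is a hypothetical helper.

```python
def dispatch_plan(total_items: int, percent: dict[str, int]) -> list[tuple[str, int, int]]:
    """Map each node to a half-open index range [start, end) of the work items.

    Boundaries come from cumulative integer percentages, so the ranges are
    contiguous and cover every item exactly once.
    """
    if sum(percent.values()) != 100:
        raise ValueError("percentages must add up to 100")
    plan, cumulative, start = [], 0, 0
    for node, pct in percent.items():
        cumulative += pct
        end = total_items * cumulative // 100
        plan.append((node, start, end))
        start = end
    return plan


for node, start, end in dispatch_plan(1000, {"node1": 30, "node2": 30, "node3": 40}):
    print(node, start, end)
# node1 0 300
# node2 300 600
# node3 600 1000
```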

[0056] Step 3: After the device node receives the data, the task is handed over to the scientific computing card for calculation. The scientific ...
