Method for identifying human body behavior based on part clustering feature

A recognition method using clustering technology, applied in the fields of computer vision and pattern recognition, that addresses problems such as inaccuracy, failure to consider the independence of joint motion, and failure to consider the time series.

Embodiment Construction

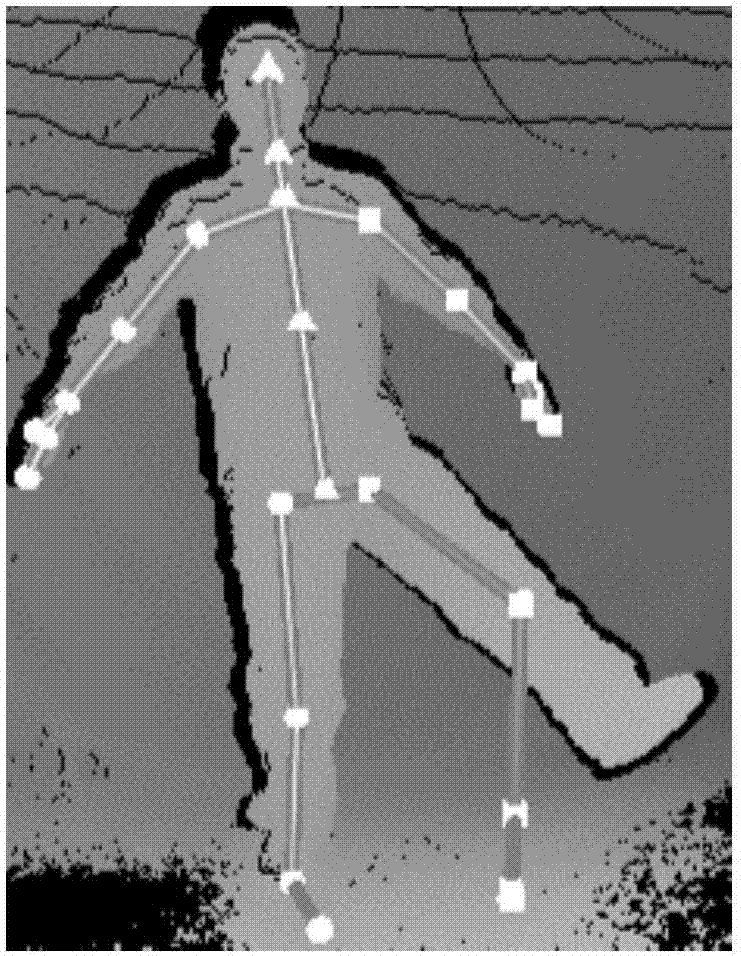

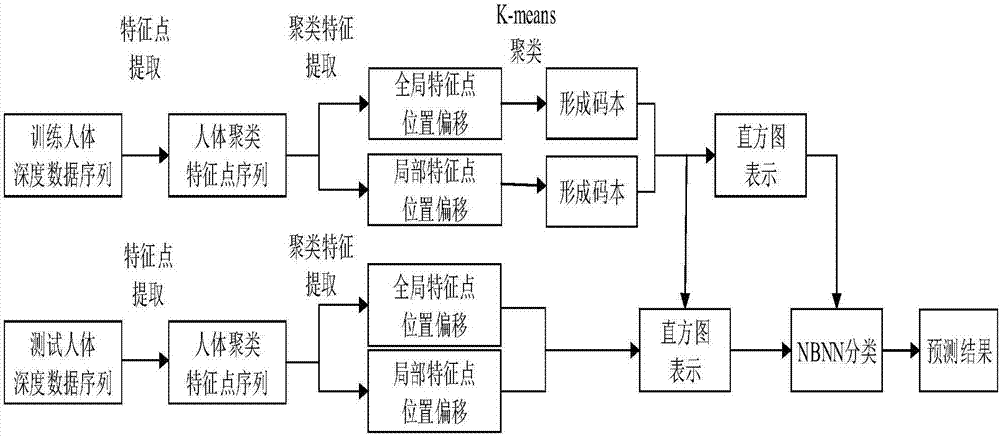

[0057] This embodiment of the present invention provides a human body behavior recognition method based on part clustering features. To avoid relying on inaccurate human body joint position information, the cluster centers of human body sub-parts are used as the feature points that represent the body posture. To exploit the global characteristics of the action sequence, the invention adds a global position offset to the sequence feature vector, compensating for the weakness of recognition that uses local position offset information alone. The key problems to be solved are therefore: extracting human body posture features; computing the feature vector of a human action sequence; and classifying the action.
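The first two problems above can be illustrated with a minimal sketch. It is not the patented procedure: the text does not define the offsets here, so the sketch assumes that each depth point already carries a sub-part label, that a "cluster center" is the mean of a sub-part's 3D points, that the local offset is the displacement of a center from the previous frame, and that the global offset is its displacement from the first frame. The names `part_centers` and `sequence_features` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Pose = List[Point]  # one center per body sub-part, in a fixed part order


def part_centers(points: Sequence[Point], labels: Sequence[int],
                 num_parts: int) -> Pose:
    """Mean 3D position of the points assigned to each sub-part.

    Assumption: `labels[i]` is the sub-part index of `points[i]`; how the
    labels are obtained is outside this sketch.
    """
    sums = [[0.0, 0.0, 0.0] for _ in range(num_parts)]
    counts = [0] * num_parts
    for (x, y, z), part in zip(points, labels):
        sums[part][0] += x
        sums[part][1] += y
        sums[part][2] += z
        counts[part] += 1
    if 0 in counts:
        raise ValueError("every sub-part needs at least one point")
    return [(s[0] / c, s[1] / c, s[2] / c) for s, c in zip(sums, counts)]


def sequence_features(poses: Sequence[Pose]) -> List[float]:
    """Concatenate local and global offsets for every frame after the first.

    Assumption: local offset = center(t) - center(t-1),
                global offset = center(t) - center(0).
    """
    if len(poses) < 2:
        raise ValueError("need at least two frames")
    first = poses[0]
    features: List[float] = []
    for prev, cur in zip(poses, poses[1:]):
        for p_first, p_prev, p_cur in zip(first, prev, cur):
            local = [c - p for c, p in zip(p_cur, p_prev)]
            global_ = [c - f for c, f in zip(p_cur, p_first)]
            features.extend(local + global_)
    return features
```

With a single sub-part moving along the x axis through 0, 1, and 3, the second frame contributes a local offset of 1 and a global offset of 1, and the third a local offset of 2 and a global offset of 3. The resulting fixed-order vector could then be passed to any classifier for the third problem, which this sketch leaves out.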

[0058] The present invention takes the depth image sequence of a moving human body as input data and computes the human action category as output. The core step of the computation is to use the offset...
