Gesture classification method based on tensor decomposition

A gesture classification method based on tensor decomposition. It addresses the low classification accuracy of existing approaches and produces decomposition results with a clear physical meaning.

Examples

Embodiment 1

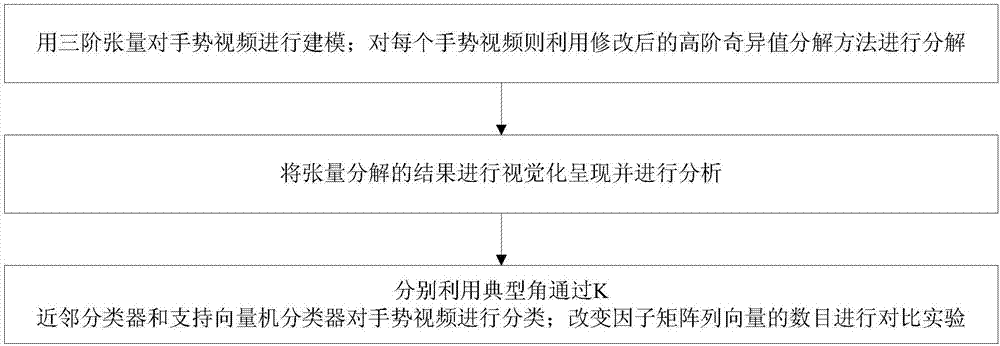

[0032] A gesture classification method based on tensor decomposition (see Figure 1) includes the following steps:

[0033] 101: Model gesture videos with third-order tensors; decompose each gesture video using a modified high-order singular value decomposition method;

[0034] 102: Visually present and analyze the results of tensor decomposition;

[0035] 103: Use canonical angles (principal angles) to classify gesture videos with a K-nearest-neighbor classifier and a support vector machine classifier; vary the number of column vectors in the factor matrix to run comparative experiments.
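The angle-based comparison in step 103 can be illustrated with a minimal sketch. It assumes that each video is summarized by a matrix whose columns span a subspace (for example, a factor matrix from the decomposition), that the angles are the principal angles between two such subspaces, and that the distance is the Euclidean norm of those angles. The function names `canonical_angles`, `subspace_distance` and `nearest_neighbor_label` and the choice of distance are hypothetical and are not taken from the patent text; only a 1-nearest-neighbor rule is shown, not the support vector machine.

```python
import numpy as np

def canonical_angles(a, b):
    """Principal angles, in radians, between span(a) and span(b)."""
    # Orthonormalize the columns of each basis.
    qa, _ = np.linalg.qr(a)
    qb, _ = np.linalg.qr(b)
    # Singular values of qa.T @ qb are the cosines of the angles.
    cosines = np.linalg.svd(qa.T @ qb, compute_uv=False)
    return np.arccos(np.clip(cosines, -1.0, 1.0))

def subspace_distance(a, b):
    """Assumed distance: Euclidean norm of the principal angles."""
    return float(np.linalg.norm(canonical_angles(a, b)))

def nearest_neighbor_label(query, train_bases, train_labels):
    """1-nearest-neighbor rule using the subspace distance above."""
    distances = [subspace_distance(query, basis) for basis in train_bases]
    return train_labels[int(np.argmin(distances))]

# Toy bases in R^3: the e1-e2 plane and the e1-e3 plane.
plane_a = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
plane_b = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]])
angles = canonical_angles(plane_a, plane_b)

# A query subspace that is a slightly tilted copy of plane_a.
query = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.05]])
label = nearest_neighbor_label(query, [plane_a, plane_b], ["a", "b"])
```

The two toy planes share one direction and are perpendicular in the other, so their angles are approximately 0 and π/2. Changing the number of columns kept in each basis changes how many angles enter the distance, which is one way to read the comparative experiment mentioned in step 103.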

[0036] In step 101, the gesture video is modeled as a third-order tensor as follows:

[0037] The first order of the tensor indicates the horizontal direction, the second order indicates the vertical direction, and the third order indicates the time axis;

[0038] Each image read from the sample is a matrix, and the matrices are concatenated in the...

Embodiment 2

[0046] The scheme of Embodiment 1 is described in more detail below, with specific calculation formulas and examples:

[0047] 201: Model gesture videos with third-order tensors;

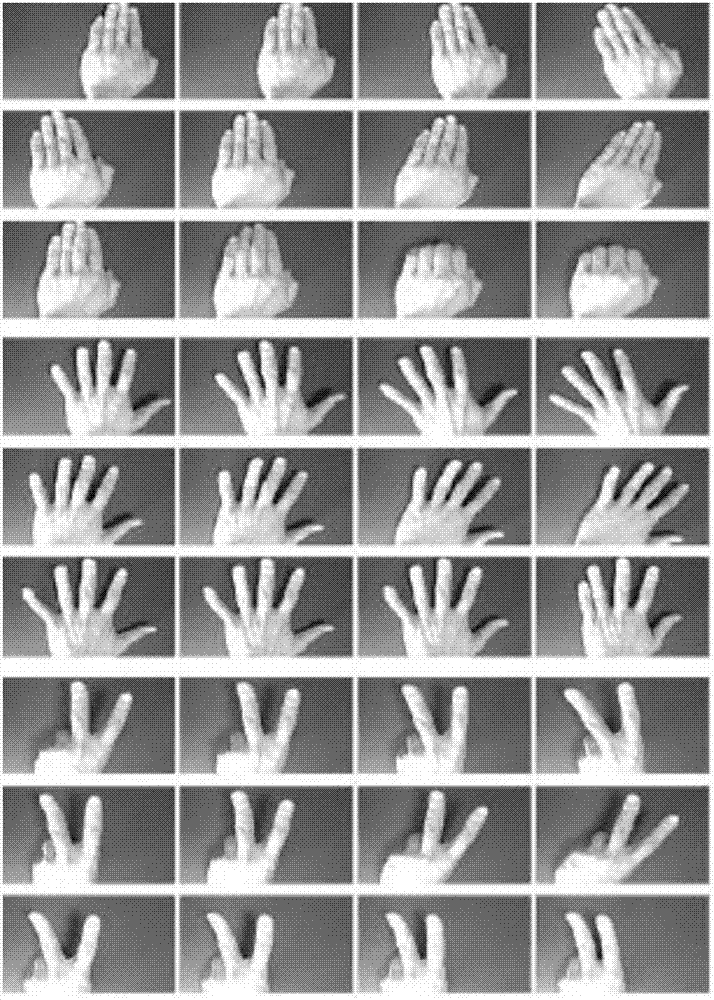

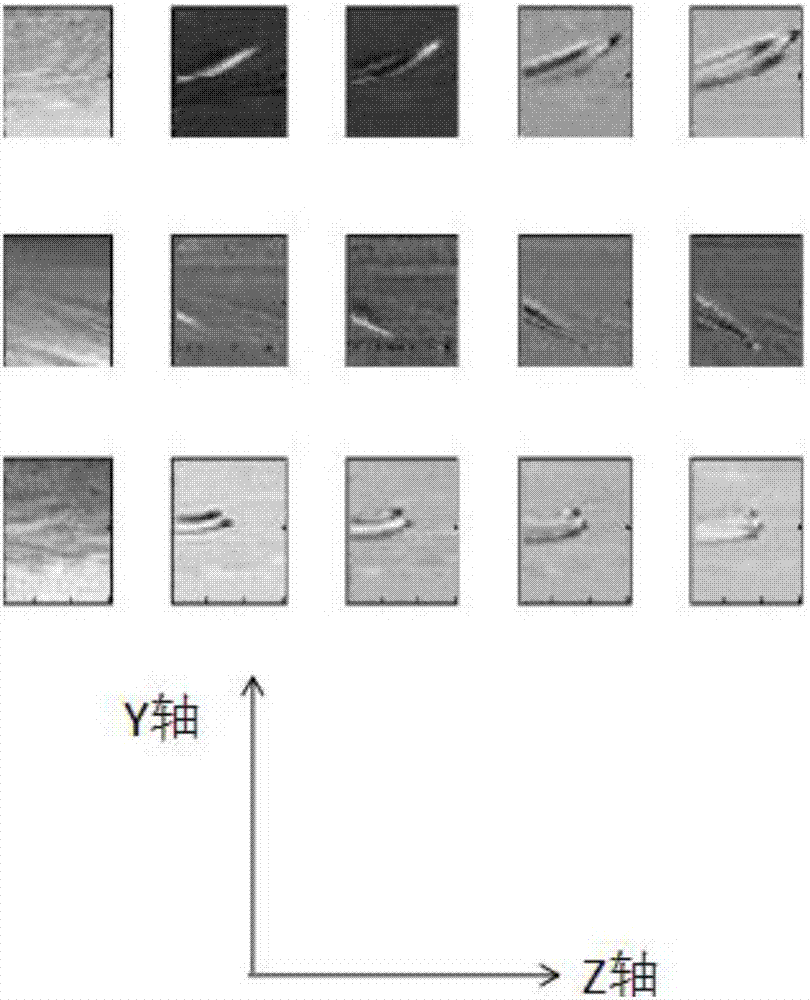

[0048] Specifically, this experiment uses the Cambridge Gesture Database, which includes 9 types of gestures, as shown in Figure 2. Each gesture video is represented as a third-order tensor, where the first order represents the horizontal direction, the second order the vertical direction, and the third order the time axis. The pictures in a sample are first read as matrices, and these matrices are then concatenated along the third order of the tensor, forming a third-order tensor that represents the video.
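The stacking described above can be sketched in NumPy as follows. The frames here are synthetic random arrays standing in for pictures read from a sample, and the helper name `frames_to_tensor` is hypothetical. Which of the first two axes counts as horizontal or vertical depends on how the image reader lays out its arrays, so that mapping is an assumption of this sketch.

```python
import numpy as np

def frames_to_tensor(frames):
    """Stack equally sized 2-D grayscale frames along a third (time) axis."""
    frames = [np.asarray(f, dtype=np.float64) for f in frames]
    if not frames:
        raise ValueError("at least one frame is required")
    shape = frames[0].shape
    if any(f.ndim != 2 or f.shape != shape for f in frames):
        raise ValueError("all frames must be 2-D and share one shape")
    # Result has shape (rows, cols, n_frames); the last axis is time.
    return np.stack(frames, axis=2)

# Synthetic stand-in for a gesture video: 5 frames of 4 x 6 pixels.
rng = np.random.default_rng(0)
video_frames = [rng.random((4, 6)) for _ in range(5)]
video = frames_to_tensor(video_frames)
print(video.shape)  # (4, 6, 5)
```

Slicing `video[:, :, t]` returns frame `t` unchanged, so the tensor is simply the sequence of pictures laid out along the time axis.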

[0049] 202: Decompose each gesture video using a modified higher-order singular value decomposition (HOSVD) method [7];

[0050] First, for an N-order (N-dimensional) tensor, carry out the matrix calcul...
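For orientation, the following is a minimal sketch of the standard HOSVD, not the modified variant of step 202, whose details are not available in this excerpt. It assumes the usual construction: unfold the tensor along each mode, take the left singular vectors of each unfolding as that mode's factor matrix, and project the tensor onto them to obtain the core. The names `unfold`, `hosvd` and `reconstruct` and the `ranks` parameter are illustrative.

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-n unfolding: move axis `mode` to the front, flatten the rest."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

def mode_product(tensor, matrix, mode):
    """Multiply `tensor` by `matrix` along axis `mode`."""
    result = np.tensordot(matrix, tensor, axes=(1, mode))
    return np.moveaxis(result, 0, mode)

def hosvd(tensor, ranks=None):
    """Standard (optionally truncated) HOSVD: core tensor and factors."""
    ranks = ranks or tensor.shape
    factors = []
    for mode, r in enumerate(ranks):
        # Left singular vectors of the unfolding span this mode's subspace.
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :r])
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)
    return core, factors

def reconstruct(core, factors):
    """Multiply the core by every factor matrix to rebuild the tensor."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out

rng = np.random.default_rng(0)
x = rng.random((4, 6, 5))
core, factors = hosvd(x)                      # full ranks: exact
small_core, small_factors = hosvd(x, ranks=(2, 3, 2))  # truncated
```

With full ranks the reconstruction matches the input up to floating-point error. Passing smaller `ranks` keeps fewer column vectors in each factor matrix, which corresponds to the quantity varied in the comparative experiments of step 103.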

Embodiment 3

[0080] The feasibility of the schemes in Embodiments 1 and 2 is verified below, with specific calculation formulas and examples:

[0081] The database used in this experiment is the Cambridge Gesture Database, which contains 900 samples in total. The samples are divided into 9 categories by gesture type and into 5 sets by illumination level: Set1, Set2, Set3, Set4 and Set5. Each illumination set contains all 9 gesture types: five fingers together to the left, five fingers together to the right, five fingers together closing into a fist, five fingers apart to the left, five fingers apart to the right, five fingers apart closing together, V-sign to the left, V-sign to the right, and V-sign closing. Each gesture type in each set contains 20 samples (9 × 5 × 20 = 900). Each sample contains several pictures, and the number of pictures varies between samples. One picture in the sample cor...
