A Dynamic Gesture Recognition Method Based on Hybrid Deep Learning Model

A dynamic gesture recognition method based on deep learning, applied in the fields of computer vision and machine learning, addresses problems such as loss of interaction information, large numbers of model parameters, and destruction of the structure of 2D images.


Embodiment Construction

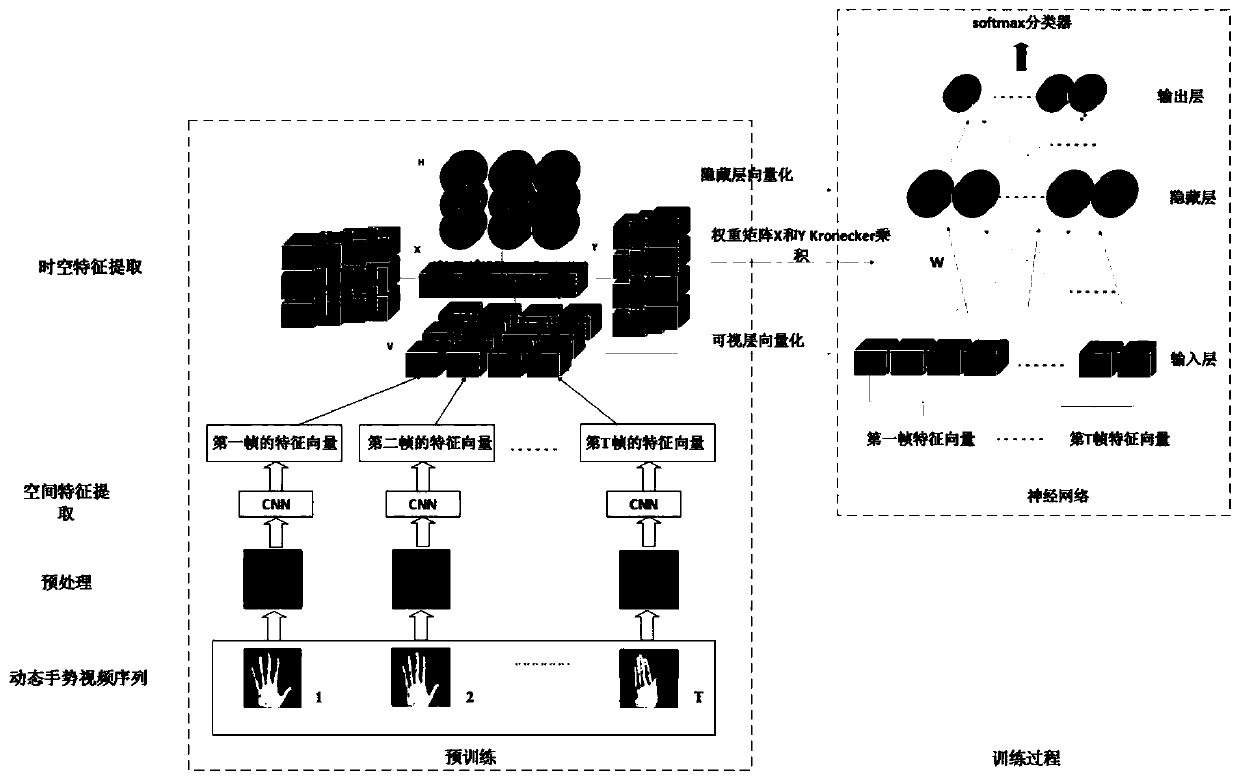

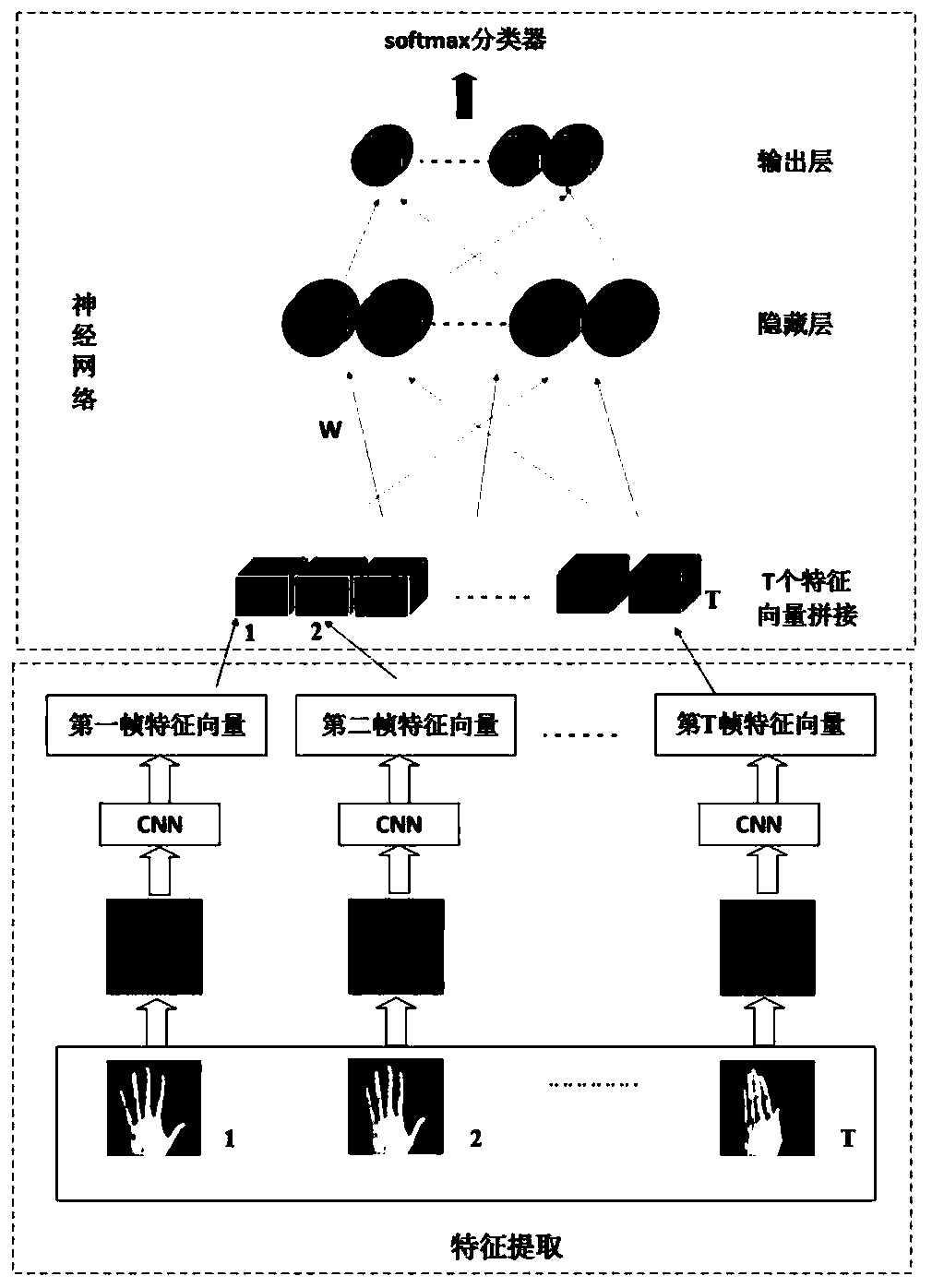

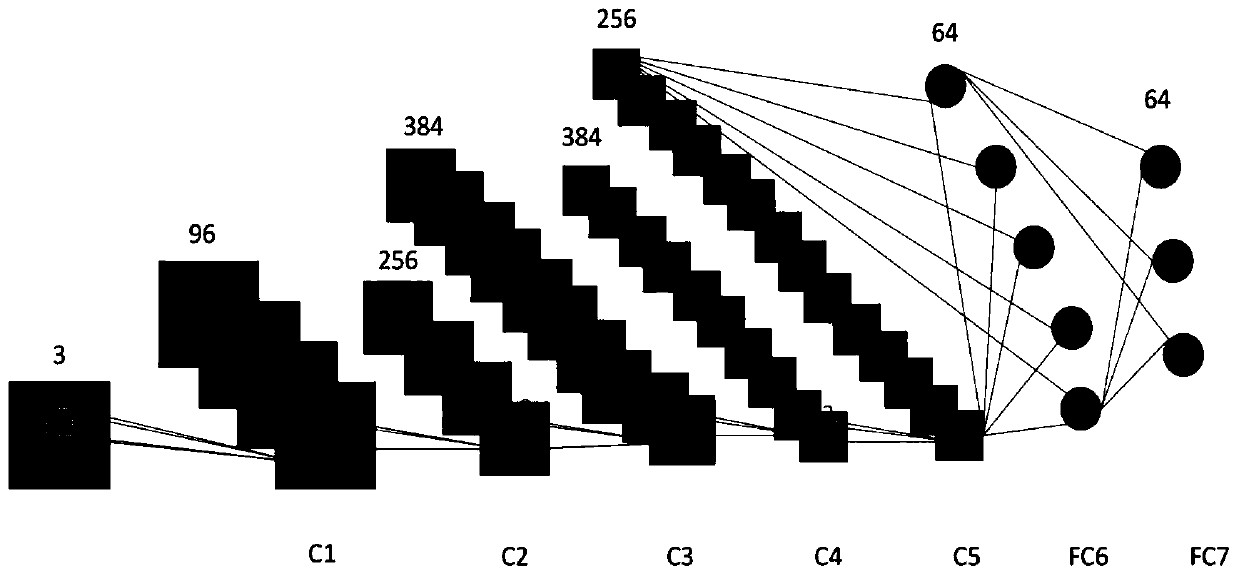

[0052] This embodiment of the present invention provides a dynamic gesture recognition method. Targeting the dynamic gesture recognition problem, the invention exploits the complementary strengths of a CNN and an MVRBM (matrix-variate restricted Boltzmann machine) to design a method for pre-training an NN model based on a CNN-MVRBM hybrid model. The method combines the CNN's ability to represent images with the MVRBM's ability to learn a reduced-dimensional representation of 2D signals and to pre-train on them. As a result, it achieves an effective spatiotemporal representation of 3D dynamic gesture video sequences and, at the same time, improves the recognition performance of a traditional NN.
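The representation idea in [0052] can be illustrated with a minimal NumPy sketch. It rests on several assumptions not stated in the text: each frame of a clip is mapped to a D-dimensional feature vector and the vectors are stacked into a T × D (time × feature) matrix; a fixed random projection (`cnn_features_stub`, a hypothetical name) stands in for the trained CNN; and the MVRBM uses Bernoulli units with the bilinear energy E(X, Y) = -tr(U^T Y V X^T) - tr(B^T X) - tr(C^T Y), trained with one-step contrastive divergence (CD-1). All names and sizes are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cnn_features_stub(frame, proj):
    """Hypothetical stand-in for a CNN: maps one H x W frame to a D-dim vector in (0, 1).

    A real system would use a trained CNN; a fixed random projection keeps the sketch runnable.
    """
    return sigmoid(proj @ frame.ravel())


def video_to_matrix(video, proj):
    """Stack per-frame features of a T x H x W clip into a T x D (time x feature) matrix."""
    return np.stack([cnn_features_stub(f, proj) for f in video])


class MVRBM:
    """Matrix-variate RBM sketch with Bernoulli units (assumed bilinear energy).

    Visible X is I x J, hidden Y is K x L, and
    E(X, Y) = -tr(U^T Y V X^T) - tr(B^T X) - tr(C^T Y).
    """

    def __init__(self, I, J, K, L, seed=0):
        self.rng = np.random.default_rng(seed)
        self.U = 0.01 * self.rng.standard_normal((K, I))  # row-mode (time) factor
        self.V = 0.01 * self.rng.standard_normal((L, J))  # column-mode (feature) factor
        self.B = np.zeros((I, J))  # visible biases
        self.C = np.zeros((K, L))  # hidden biases

    def hidden_prob(self, X):
        # P(y_kl = 1 | X) = sigmoid(C + U X V^T), a K x L matrix
        return sigmoid(self.C + self.U @ X @ self.V.T)

    def visible_prob(self, Y):
        # P(x_ij = 1 | Y) = sigmoid(B + U^T Y V), an I x J matrix
        return sigmoid(self.B + self.U.T @ Y @ self.V)

    def cd1_step(self, X, lr=0.05):
        """One CD-1 update on a single I x J input; returns the reconstruction MSE."""
        H0 = self.hidden_prob(X)
        Y0 = (self.rng.random(H0.shape) < H0).astype(float)  # sample binary hidden states
        X1 = self.visible_prob(Y0)  # mean-field reconstruction
        H1 = self.hidden_prob(X1)
        # Positive phase minus negative phase, computed with the pre-update U and V
        dU = H0 @ self.V @ X.T - H1 @ self.V @ X1.T
        dV = H0.T @ self.U @ X - H1.T @ self.U @ X1
        self.U += lr * dU
        self.V += lr * dV
        self.B += lr * (X - X1)
        self.C += lr * (H0 - H1)
        return float(np.mean((X - X1) ** 2))


# Usage: 4 synthetic clips of T=8 frames (16 x 16 pixels), D=32 features per frame
rng = np.random.default_rng(1)
T, D, K, L = 8, 32, 4, 6
proj = rng.standard_normal((D, 16 * 16)) / 16.0
clips = [rng.random((T, 16, 16)) for _ in range(4)]
mats = [video_to_matrix(v, proj) for v in clips]  # each is T x D

rbm = MVRBM(I=T, J=D, K=K, L=L)
for _ in range(20):
    errs = [rbm.cd1_step(X) for X in mats]
codes = [rbm.hidden_prob(X) for X in mats]  # K x L spatiotemporal codes
```

Because U acts on the time (row) mode and V on the feature (column) mode, the input matrix is never flattened; this is one way to read the claim that an MVRBM preserves 2D structure. The weight count is K·I + L·J (here 224) instead of I·J·K·L (here 6144) for a vectorized RBM with the same numbers of visible and hidden units.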

[0053] The CNN-MVRBM-NN hybrid deep learning model operates in two stages: training and testing. The training stage combines the CNN's effective image feature extraction, the MVRBM's ability to model 2D signals, and the NN's supervised classification. In the recognition stage, dynamic gesture recognition can be effective...
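The two stages in [0053] can be sketched as follows, as an illustration under assumptions rather than the patented procedure. The first NN layer is assumed to reuse the MVRBM's bilinear mapping sigmoid(C + U X V^T), which is how pre-training is assumed to transfer to the NN; a softmax layer on the flattened K × L code performs supervised classification; and the whole network is fine-tuned by per-sample gradient descent on cross-entropy. `U0`, `V0` and `C0` are random stand-ins for pre-trained MVRBM weights, and `BilinearNN` and `make_sample` are hypothetical names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()


class BilinearNN:
    """NN with an MVRBM-style bilinear first layer and a softmax output layer (hypothetical)."""

    def __init__(self, U, V, C, n_classes, seed=0):
        # Copy the (assumed pre-trained) MVRBM parameters into the first layer
        self.U, self.V, self.C = U.copy(), V.copy(), C.copy()
        rng = np.random.default_rng(seed)
        self.W = 0.01 * rng.standard_normal((n_classes, C.size))
        self.b = np.zeros(n_classes)

    def forward(self, X):
        H = sigmoid(self.C + self.U @ X @ self.V.T)  # K x L hidden code
        p = softmax(self.W @ H.ravel() + self.b)  # class probabilities
        return H, p

    def predict(self, X):
        return int(np.argmax(self.forward(X)[1]))

    def sgd_step(self, X, label, lr=0.1):
        """One cross-entropy fine-tuning step; returns the loss before the update."""
        H, p = self.forward(X)
        loss = -np.log(p[label])
        dz = p.copy()
        dz[label] -= 1.0  # d(loss)/d(logits)
        dH = (self.W.T @ dz).reshape(H.shape)
        dA = dH * H * (1.0 - H)  # back through the sigmoid
        # Gradients of the bilinear pre-activation A = C + U X V^T
        dU = dA @ self.V @ X.T
        dV = dA.T @ self.U @ X
        self.W -= lr * np.outer(dz, H.ravel())
        self.b -= lr * dz
        self.U -= lr * dU
        self.V -= lr * dV
        self.C -= lr * dA
        return float(loss)


# Usage with synthetic 8 x 32 inputs: class 0 is active early in time, class 1 late
rng = np.random.default_rng(2)
I, J, K, L = 8, 32, 4, 6
U0 = 0.3 * rng.standard_normal((K, I))  # stand-in for pre-trained U
V0 = 0.3 * rng.standard_normal((L, J))  # stand-in for pre-trained V
C0 = np.zeros((K, L))  # stand-in for pre-trained hidden biases


def make_sample(label):
    X = 0.2 * rng.random((I, J))
    if label == 0:
        X[: I // 2] += 0.8
    else:
        X[I // 2 :] += 0.8
    return X


train = [(make_sample(y), y) for y in [0, 1] * 10]
net = BilinearNN(U0, V0, C0, n_classes=2)
for _ in range(30):  # training stage
    losses = [net.sgd_step(X, y) for X, y in train]
test_preds = [net.predict(make_sample(y)) for y in (0, 1)]  # testing stage
```

Initializing the first layer from the generatively trained MVRBM, rather than at random, is the sense in which the NN is "pre-trained" in this sketch; fine-tuning then adjusts U, V and C together with the classifier.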
