Target recognition method based on a deep learning network, and live working robot

The deep-learning-network-based target recognition technique, applied to target recognition for live working robots, addresses the problems of cumbersome operation steps, low error tolerance, and slow operation, and achieves a high target recognition rate, good recognition performance, and fewer misrecognitions.

Examples

Embodiment Construction

[0036] It is easy to understand that, within the technical solution of the present invention and without departing from its essential spirit, those of ordinary skill in the art can conceive of various embodiments of the deep-learning-network-based target recognition method and the live working robot. Therefore, the following specific embodiments and drawings are only exemplary descriptions of the technical solution of the present invention, and should not be regarded as the whole of the present invention or as a limitation or restriction on its technical solution.

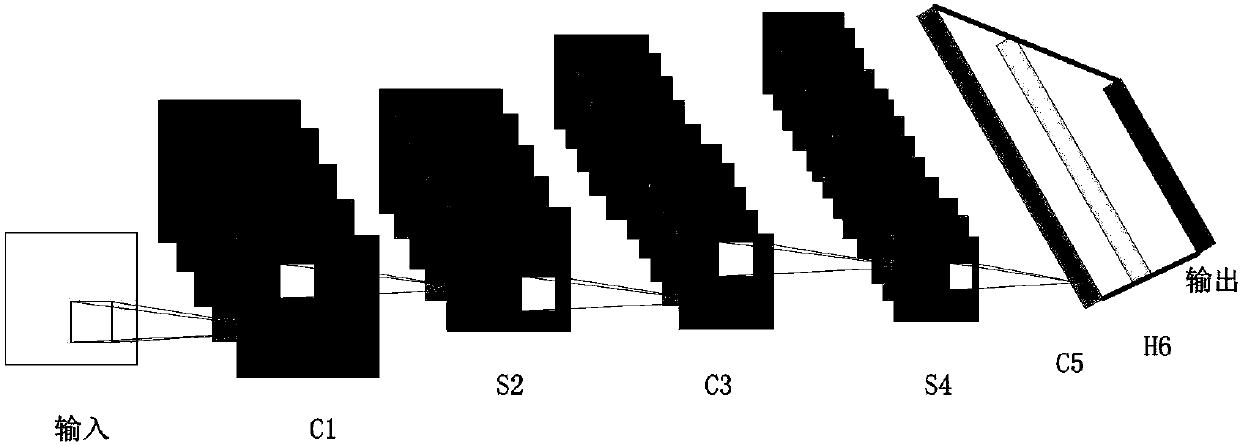

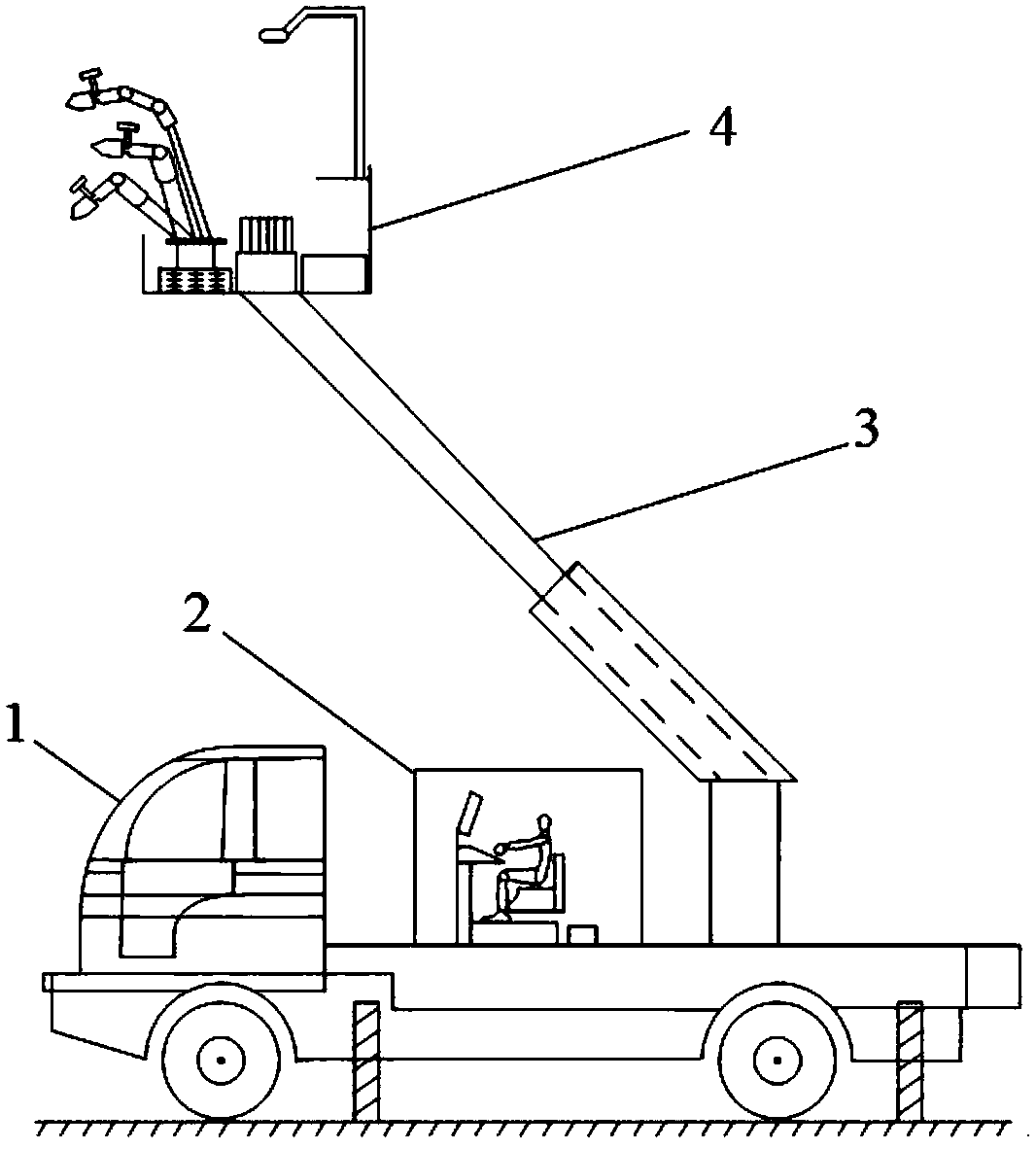

[0037] With reference to the accompanying drawings, a method for target recognition of a live working robot based on a deep learning network includes the following steps:

[0038] Step 1: Collect pictures of the job objects and establish a target database. The pictures of the job objects are gathered from the Internet and taken on site.

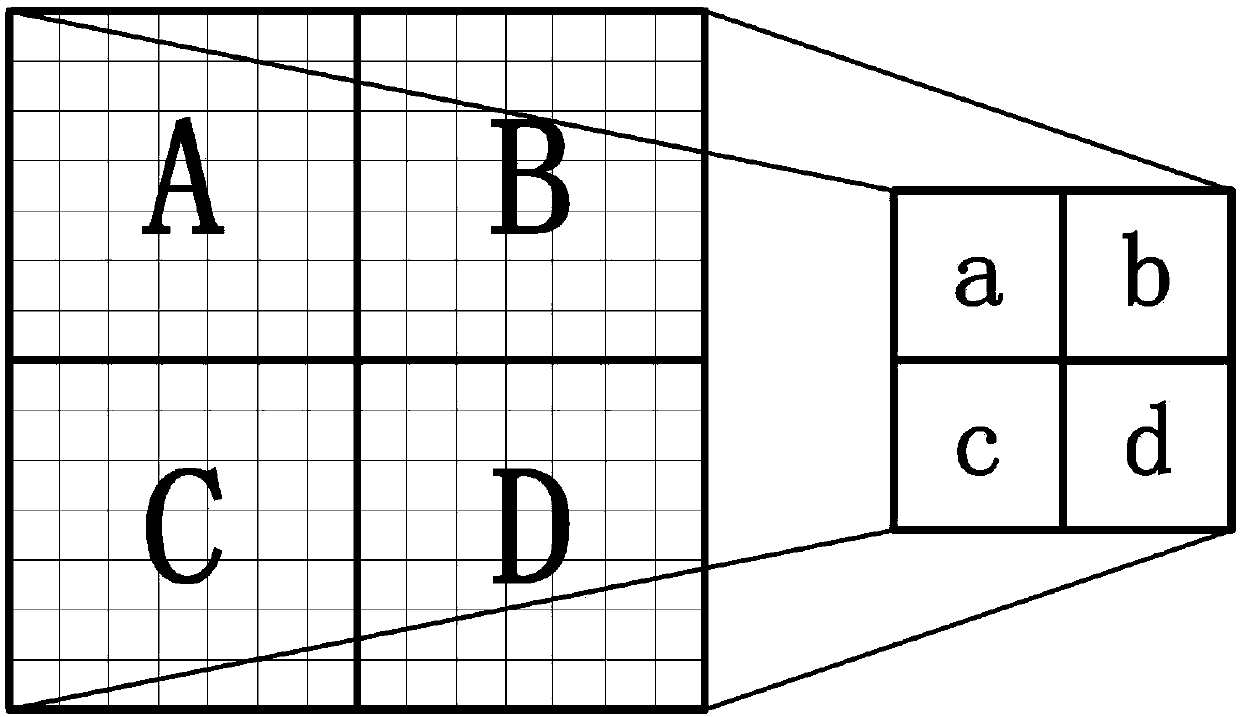
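As an illustration of Step 1, the following is a minimal sketch of how a target database could be indexed once the pictures have been gathered. It is not the patent's implementation. The directory layout `root/<source>/<label>/<image>`, the `TargetRecord` type, the function names, and the CSV format are all assumptions introduced here for illustration; the source only states that pictures come from the Internet and from on-site photography.

```python
import csv
from dataclasses import dataclass
from pathlib import Path

# Assumed set of image file extensions; adjust to the actual data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


@dataclass(frozen=True)
class TargetRecord:
    """One picture of a job object (hypothetical record layout)."""
    path: str    # path relative to the database root
    label: str   # job-object class, taken from the folder name
    source: str  # where the picture came from, e.g. "internet" or "on_site"


def build_target_database(root: Path) -> list[TargetRecord]:
    """Index every image stored as root/<source>/<label>/<file>."""
    records = []
    for image_path in sorted(root.rglob("*")):
        if not image_path.is_file():
            continue
        if image_path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        parts = image_path.relative_to(root).parts
        if len(parts) != 3:
            # Skip files that do not follow the assumed layout.
            continue
        source, label, _ = parts
        records.append(
            TargetRecord(
                path=image_path.relative_to(root).as_posix(),
                label=label,
                source=source,
            )
        )
    return records


def save_target_database(records: list[TargetRecord], csv_path: Path) -> None:
    """Write the index to a CSV file with a header row."""
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["path", "label", "source"])
        for record in records:
            writer.writerow([record.path, record.label, record.source])
```

Recording the source alongside the label keeps Internet and on-site pictures distinguishable, which may be useful when checking how well each source is represented in the database.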

[0039] Step 2: Divide the target d...
