Conversation control device and conversation control method

A dialogue control technology, applied in fields such as speech analysis, instrumentation, and electrical digital data processing, that addresses problems such as incorrect estimation of user intentions and reliance on manual inspection.

Examples

Embodiment 1

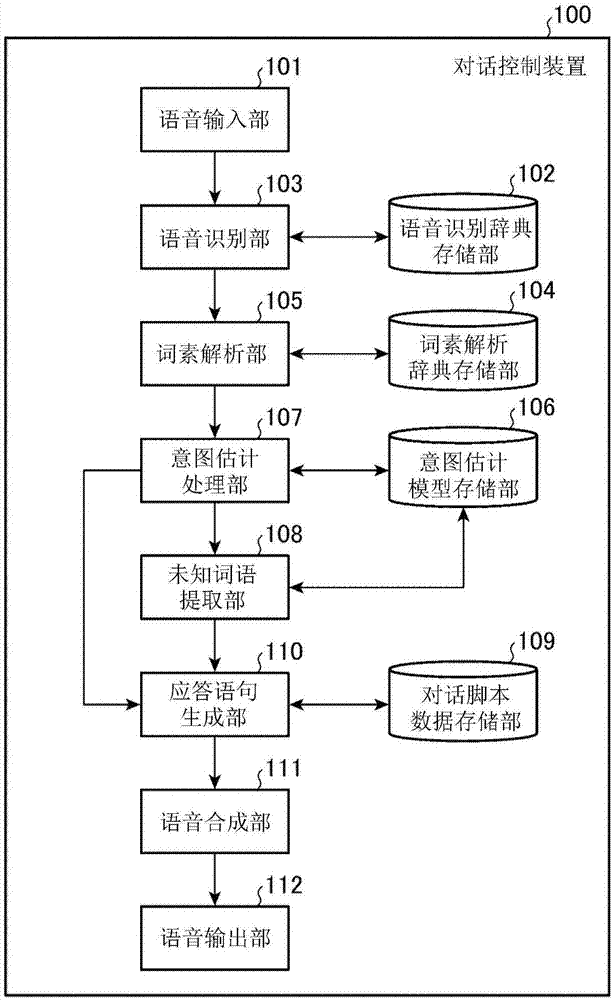

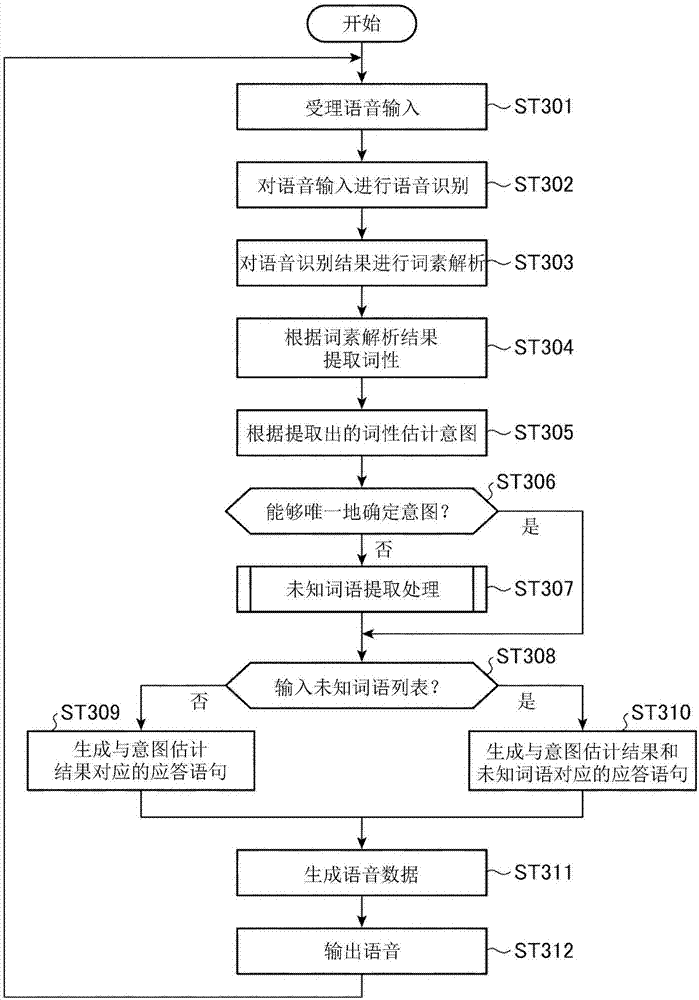

[0038] Figure 1 is a block diagram showing the configuration of the dialogue control device 100 according to Embodiment 1.

[0039] The dialogue control device 100 according to Embodiment 1 includes a speech input unit 101, a speech recognition dictionary storage unit 102, a speech recognition unit 103, a morpheme analysis dictionary storage unit 104, a morpheme analysis unit (text analysis unit) 105, an intention estimation model storage unit 106, an intention estimation processing unit 107, an unknown word extraction unit 108, a dialogue script data storage unit 109, a response sentence generation unit 110, a speech synthesis unit 111, and a speech output unit 112.
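The component list above suggests a processing pipeline, which can be sketched as follows. This is only an illustration under stated assumptions: the data flow (recognized text, then morphemes, then intent and unknown words, then a response) is inferred from the unit names, speech recognition and synthesis are left out so the sketch runs on plain text, and every function and variable name here is hypothetical rather than taken from the patent.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class DialogueTurn:
    """Intermediate results of one pass through the (assumed) pipeline."""
    morphemes: List[str]
    intent: str
    unknown_words: List[str]
    response: str


def run_dialogue_turn(
    recognized_text: str,
    analyze: Callable[[str], List[str]],                  # stands in for unit 105
    estimate_intent: Callable[[List[str]], str],          # stands in for unit 107
    extract_unknown: Callable[[List[str]], List[str]],    # stands in for unit 108
    generate_response: Callable[[str, List[str]], str],   # stands in for unit 110
) -> DialogueTurn:
    """Chain the stages in the order assumed from the component list."""
    morphemes = analyze(recognized_text)
    intent = estimate_intent(morphemes)
    unknown_words = extract_unknown(morphemes)
    response = generate_response(intent, unknown_words)
    return DialogueTurn(morphemes, intent, unknown_words, response)


# Toy stand-ins (assumptions for illustration only, not the patent's methods).
TOY_VOCABULARY = {"set", "destination", "to", "the", "station"}


def toy_analyze(text: str) -> List[str]:
    # Whitespace splitting replaces real morpheme analysis.
    return text.lower().split()


def toy_estimate_intent(morphemes: List[str]) -> str:
    # A keyword rule replaces the intention estimation model.
    return "set_destination" if "destination" in morphemes else "unknown_intent"


def toy_extract_unknown(morphemes: List[str]) -> List[str]:
    return [m for m in morphemes if m not in TOY_VOCABULARY]


def toy_generate_response(intent: str, unknown_words: List[str]) -> str:
    # Assumption: the response mentions words the system could not interpret.
    if unknown_words:
        return f"I do not know the word(s): {', '.join(unknown_words)}."
    return f"Executing intent: {intent}."
```

Passing the stages in as callables keeps the sketch independent of any particular recognizer, analyzer, or model; each stand-in can be swapped for a real component without changing `run_dialogue_turn`.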

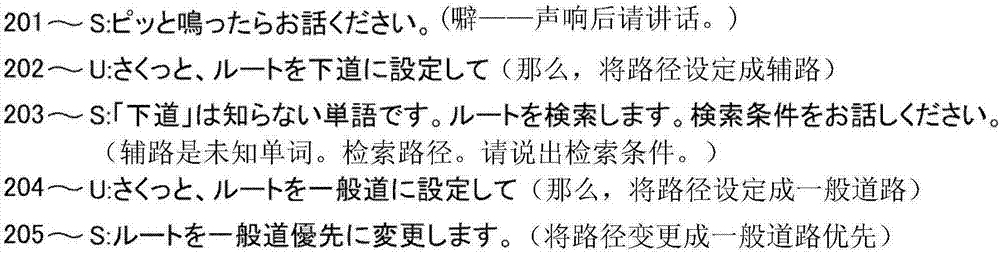

[0040] Hereinafter, a case where the dialogue control device 100 is applied to a car navigation system is described as an example, but the application is not limited to navigation systems and can be changed as appropriate. In addition, a case where the user communicates with the dialogue control dev...

Embodiment 2

[0085] Embodiment 2 shows a configuration in which syntactic analysis is additionally performed on the morphological analysis result, and unknown words are extracted using the result of the syntactic analysis.

[0086] Figure 9 is a block diagram showing the configuration of the dialogue control device 100a according to Embodiment 2.

[0087] In Embodiment 2, the unknown word extraction unit 108a further includes a syntax analysis unit 113, and the intention estimation model storage unit 106a stores a frequent word list in addition to the intention estimation model. In the following, the same reference numerals as those used in Embodiment 1 are assigned to the same or equivalent components as those of the dialogue control device 100 according to Embodiment 1, and descriptions thereof are omitted or simplified.
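One way to picture unknown word extraction with a frequent word list is the sketch below. It is not the patent's procedure, whose details are not given in this excerpt. It assumes that an unknown word is a content word that the syntax analysis marks as relevant and that does not appear in the frequent word list. The `Morpheme` type, the `relevant` flag (a stand-in for whatever the syntax analysis unit 113 outputs), and the part-of-speech labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Morpheme:
    surface: str           # word form as it appeared in the utterance
    pos: str               # part-of-speech label from morphological analysis
    relevant: bool = True  # hypothetical flag derived from syntax analysis


# Assumption: only content words are candidates for unknown words.
CONTENT_POS = {"noun", "verb", "adjective"}


def extract_unknown_words(
    morphemes: Iterable[Morpheme], frequent_words: Set[str]
) -> List[str]:
    """Return content words that are syntactically relevant and not in the list."""
    unknown: List[str] = []
    for m in morphemes:
        if m.pos not in CONTENT_POS:
            continue  # skip particles and other function words
        if not m.relevant:
            continue  # skip words the syntax analysis marked as irrelevant
        if m.surface in frequent_words:
            continue  # word is covered by the frequent word list
        if m.surface not in unknown:
            unknown.append(m.surface)  # keep first occurrence only
    return unknown
```

Filtering on the syntactic flag before the list lookup reflects the idea that syntax analysis narrows the candidates; a real system would derive that flag from dependency or phrase structure rather than set it by hand.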

[0088] The syntax analysis unit 113 also performs syntax analysis on the morphological analysis result analyzed by the morphological anal...

Embodiment 3

[0116] Embodiment 3 shows a configuration in which known word extraction, the counterpart of the unknown word extraction of Embodiments 1 and 2, is performed using the morphological analysis result.

[0117] Figure 15 is a block diagram showing the configuration of the dialogue control device 100b according to Embodiment 3.

[0118] In Embodiment 3, a known word extraction unit 114 is provided in place of the unknown word extraction unit 108 of the dialogue control device 100 according to Embodiment 1 shown in Figure 1. In the following, the same reference numerals as those used in Embodiment 1 are assigned to the same or equivalent components as those of the dialogue control device 100 according to Embodiment 1, and descriptions thereof are omitted or simplified.
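The relationship between known and unknown word extraction can be illustrated with a small sketch. It assumes, purely for illustration, that a word counts as known when it appears in a vocabulary associated with the intention estimation model; the `model_vocabulary` parameter and the function name are hypothetical, and the excerpt does not state how the known word extraction unit 114 actually makes this decision.

```python
from typing import List, Sequence, Set, Tuple


def split_known_unknown(
    morphemes: Sequence[str], model_vocabulary: Set[str]
) -> Tuple[List[str], List[str]]:
    """Partition morphemes into (known, unknown), preserving utterance order."""
    known = [m for m in morphemes if m in model_vocabulary]
    unknown = [m for m in morphemes if m not in model_vocabulary]
    return known, unknown
```

Under this assumption the two extractions are complements of each other, so a response could report either the words that were understood or the words that were not.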

[0119] The known word extraction unit 114 extracts parts of speech extracted by the morphological analysis unit 105 that are not stored in the intentio...
