Augmented neural network configuration, training method therefor, and computer readable storage medium

An augmented neural network architecture and training method in the field of deep learning. The technology addresses a known problem: when an original, already-trained convolutional neural network is trained further, its weights change and its recognition ability declines. The proposed architecture and training method aim to provide both good learning ability and good recognition ability.


Examples

Embodiment 1

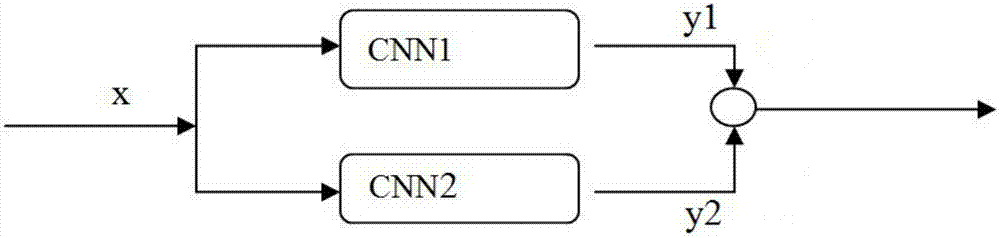

[0018] This embodiment provides an augmented convolutional neural network architecture and a training method thereof. The augmented convolutional neural network architecture includes a first convolutional neural network model CNN1 and a second convolutional neural network model CNN2 connected in parallel.
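The parallel connection of CNN1 and CNN2 can be pictured as a combinator that feeds the same input to both models and merges their outputs. The sketch below is illustrative only. The source does not say how the two outputs are fused, so concatenating the score vectors is an assumption. The helpers `parallel`, `cnn1`, and `cnn2` are hypothetical toy stand-ins, not real CNNs.

```python
from typing import Callable, List, Sequence

# A "model" here is any callable mapping an input vector to a list of class scores.
Model = Callable[[Sequence[float]], List[float]]


def parallel(*models: Model) -> Model:
    """Run every sub-model on the same input and concatenate their score vectors.

    Concatenation is an assumed fusion rule; the source does not state how the
    outputs of CNN1 and CNN2 are combined.
    """
    def combined(x: Sequence[float]) -> List[float]:
        scores: List[float] = []
        for model in models:
            scores.extend(model(x))
        return scores
    return combined


# Hypothetical stand-ins for CNN1 and CNN2 (real ones would be convolutional networks).
def cnn1(x: Sequence[float]) -> List[float]:
    return [sum(x), -sum(x)]          # two "original" classes


def cnn2(x: Sequence[float]) -> List[float]:
    return [max(x)]                   # one "new" class


augmented = parallel(cnn1, cnn2)
print(augmented([1.0, 2.0, 3.0]))     # [6.0, -6.0, 3.0]
```

Because each branch keeps its own slice of the output, adding CNN2 does not require touching CNN1.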

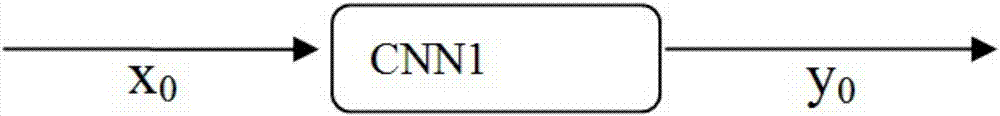

[0019] The first convolutional neural network model CNN1 is a neural network architecture that has already been trained on the original samples. As shown in Figure 1, $x_0$ is a sample in the original sample set; when $x_0$ is input to the first convolutional neural network model CNN1, the ideal output result $y_0$ is obtained. The first convolutional neural network model CNN1 can therefore recognize images of the types in the original sample set well.
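The summary names weight change in the original network as the problem being solved. One way to read this, offered here only as an assumption, is that CNN1 stays frozen while only the new branch is trained. The toy sketch below uses linear models in place of CNNs. `predict`, `train_branch`, and all weights and samples are hypothetical. It checks that CNN1's output $y_0$ on an original sample $x_0$ is unchanged after training the new branch.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def predict(weights: Vector, x: Vector) -> float:
    """Toy linear 'network': dot product of weights and input."""
    return sum(w * xi for w, xi in zip(weights, x))


def train_branch(weights: Vector, samples: Sequence[Tuple[Vector, float]],
                 lr: float = 0.05, epochs: int = 200) -> List[float]:
    """Squared-error gradient descent on one branch; returns the updated weights."""
    w = list(weights)
    for _ in range(epochs):
        for x, target in samples:
            err = predict(w, x) - target
            for i, xi in enumerate(x):
                w[i] -= lr * 2 * err * xi   # derivative of (w.x - t)^2 w.r.t. w_i
    return w


# CNN1 stand-in: already trained on the original samples, kept as an immutable tuple.
cnn1_weights = (0.5, -1.0)
x0 = (2.0, 1.0)
y0 = predict(cnn1_weights, x0)      # ideal output on an original sample: 0.0

# CNN2 stand-in: trained only on new samples; CNN1 is never passed to the trainer.
new_samples = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0)]
cnn2_weights = train_branch([0.0, 0.0], new_samples)

print(predict(cnn1_weights, x0) == y0)        # True: CNN1 output unchanged
print([round(w, 3) for w in cnn2_weights])    # [2.0, -1.0]
```

In a deep-learning framework the same effect is usually obtained by excluding CNN1's parameters from the optimizer.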

[0020] For example, the first convolutional neural network model CNN1 can be AlexNet, which can recognize more than 1,000 types of nautical objects in the original sample set ImageNet. Increase the recognition of more...
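If the outputs of CNN1 (for example, AlexNet's 1,000 ImageNet classes) and CNN2 are concatenated, a prediction has to be mapped back to the branch and class that produced it. The sketch below assumes concatenation and assumes 50 new classes for CNN2. Both are hypothetical, as is the helper `decode`.

```python
from typing import Sequence, Tuple


def decode(scores: Sequence[float], branch_sizes: Sequence[int]) -> Tuple[int, int]:
    """Map the argmax of a concatenated score vector back to (branch, local class)."""
    if len(scores) != sum(branch_sizes):
        raise ValueError("score vector length must equal the total class count")
    best = max(range(len(scores)), key=scores.__getitem__)
    offset = 0
    for branch, size in enumerate(branch_sizes):
        if best < offset + size:
            return branch, best - offset   # local index within that branch
        offset += size
    raise AssertionError("unreachable: best is always inside some branch")


# Branch 0: CNN1 with 1,000 classes; branch 1: CNN2 with 50 new classes (assumed).
scores = [0.0] * 1050
scores[1003] = 1.0
print(decode(scores, [1000, 50]))   # (1, 3): new class 3 from CNN2
```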

Embodiment 2

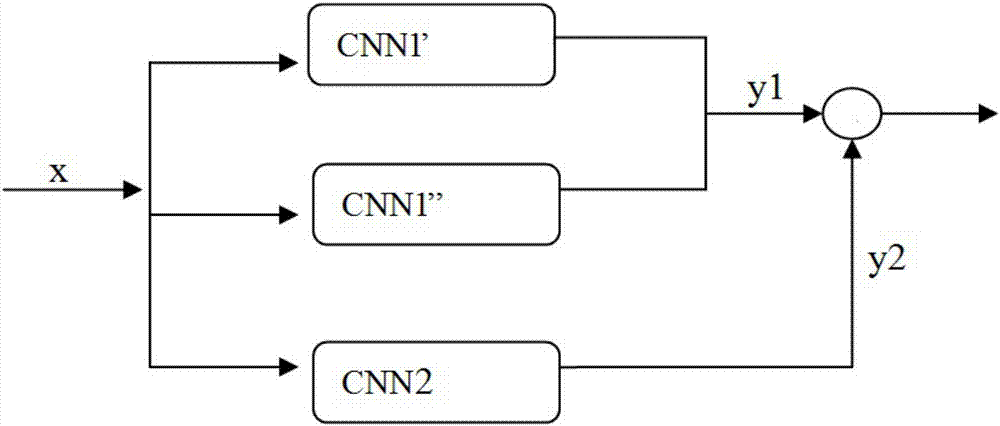

[0032] This embodiment differs from Embodiment 1 as follows. In Embodiment 1, the first convolutional neural network model CNN1 is a single convolutional neural network. In this embodiment, CNN1 is replaced by a first convolutional neural network model architecture, which consists of two or more convolutional neural networks connected in parallel. For example, the first convolutional neural network model architecture of this embodiment includes an attached convolutional neural network model CNN1' and a second attached convolutional neural network model CNN1''. The attached model CNN1' can recognize more than 1,000 types of navigation objects in the original sample set ImageNet, and the second attached model CNN1'' can identify more than 3,000 types of daily objects in the original sample set ImageNet. In ...
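Embodiment 2 nests one parallel architecture inside another: CNN1' and CNN1'' form the first architecture, which then sits in parallel with CNN2. The toy sketch below assumes concatenated outputs, exactly 1,000 and 3,000 classes (the source says "more than"), and 50 classes for CNN2. All of these sizes are assumptions, and `parallel` and `constant_model` are hypothetical helpers. It shows that the nested layout yields the same combined output as a flat three-branch one.

```python
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], List[float]]


def parallel(*models: Model) -> Model:
    """Concatenate the score vectors of sub-models run on the same input (assumed fusion rule)."""
    return lambda x: [s for m in models for s in m(x)]


def constant_model(num_classes: int, value: float) -> Model:
    """Hypothetical placeholder returning a fixed score for each of its classes."""
    return lambda x: [value] * num_classes


cnn1_prime = constant_model(1000, 0.1)         # CNN1': navigation objects (size assumed)
cnn1_double_prime = constant_model(3000, 0.2)  # CNN1'': daily objects (size assumed)
cnn2 = constant_model(50, 0.3)                 # CNN2: new classes (count hypothetical)

first_architecture = parallel(cnn1_prime, cnn1_double_prime)  # replaces CNN1
augmented = parallel(first_architecture, cnn2)

out = augmented([0.0])
print(len(out))   # 4050
```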

