Audio event classification method and computer equipment based on stacking base sparse representation

A sparse representation and classification technique, applied in speech analysis, speech recognition, instruments, and related fields, that addresses problems such as low classification accuracy, increased audio event classification, and insufficient training samples.

Embodiment Construction

[0073] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

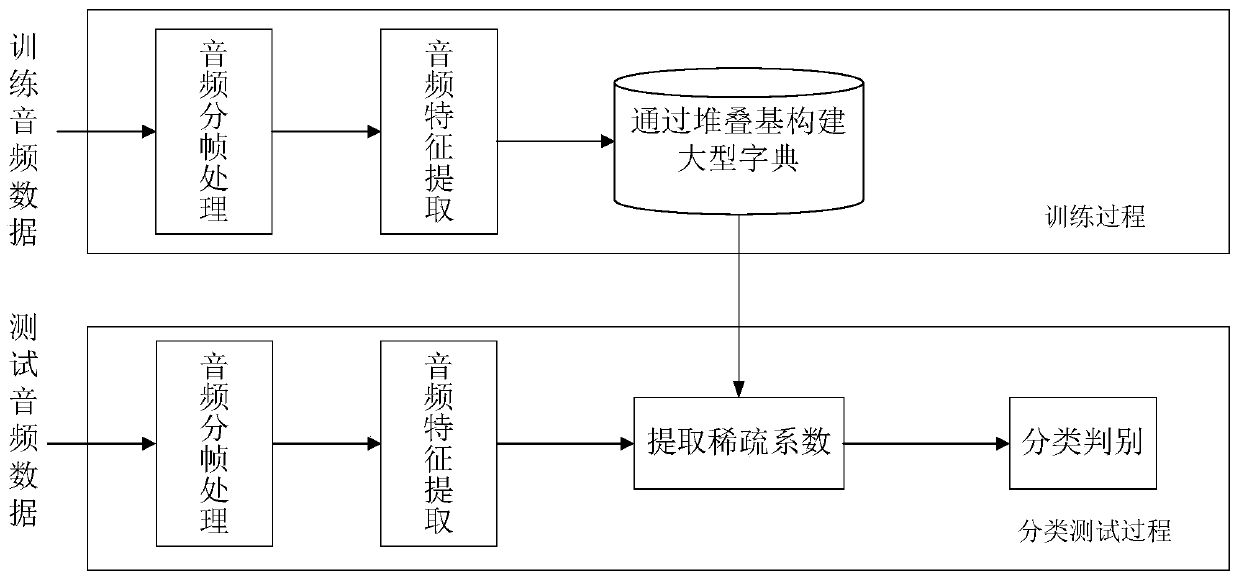

[0074] As shown in Figure 1, the audio scene recognition method proposed by the present invention is divided into two modules: a training process and a classification test process. The training process includes audio frame processing of the training data, audio feature extraction, and construction of a large audio dictionary by stacking bases. The classification test process includes four steps: audio frame processing, audio feature extraction, sparse representation coefficient extraction, and classification discrimination. Each part is described in detail below.
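The dictionary-stacking, coefficient-extraction, and discrimination steps can be illustrated with a minimal sketch. It is not the patented implementation, and everything specific in it is an assumption: per-class bases are taken to be matrices whose columns are feature vectors, "stacking" is taken to mean column-wise concatenation, the sparse coefficients are found with a simple greedy orthogonal matching pursuit, and the class is chosen by the smallest class-wise reconstruction residual. The names `stack_bases`, `omp`, `classify`, and `n_nonzero` are hypothetical.

```python
import numpy as np

def stack_bases(class_bases):
    """Concatenate per-class bases (features x atoms) column-wise into one dictionary.

    Assumption: every column is nonzero, so it can be scaled to unit norm.
    """
    labels = np.concatenate(
        [np.full(B.shape[1], c) for c, B in enumerate(class_bases)]
    )
    D = np.hstack(class_bases).astype(float)
    D /= np.linalg.norm(D, axis=0, keepdims=True)  # unit-norm atoms
    return D, labels

def omp(D, y, n_nonzero):
    """Greedy orthogonal matching pursuit (assumed solver, not from the source)."""
    y = np.asarray(y, dtype=float)
    residual = y.copy()
    support = []
    x = np.zeros(D.shape[1])
    for _ in range(n_nonzero):
        # Pick the atom most correlated with the current residual.
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break
        support.append(k)
        # Refit all selected atoms jointly by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    if support:
        x[support] = coef
    return x

def classify(D, labels, y, n_nonzero=3):
    """Return the class whose atoms reconstruct y with the smallest residual."""
    y = np.asarray(y, dtype=float)
    x = omp(D, y, n_nonzero)
    residuals = {}
    for c in np.unique(labels):
        mask = labels == c
        residuals[int(c)] = np.linalg.norm(y - D[:, mask] @ x[mask])
    return min(residuals, key=residuals.get)

# Toy usage: two classes, each with two atoms in a 4-dimensional feature space.
D, labels = stack_bases([np.eye(4)[:, :2], np.eye(4)[:, 2:]])
predicted = classify(D, labels, np.array([0.9, 0.1, 0.0, 0.0]))
```

In this toy setup the test vector lies entirely in the span of the first class's atoms, so the residual for that class is the smaller one. Real audio features would replace the identity columns used here.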

[0075] The training process is introduced first:

[0076] (1) Audio frame processing

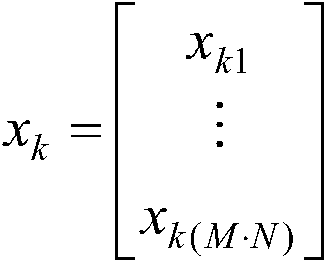

[0077] The training audio document is divided into frames, and each frame is treated as one audio sample. Following a rule of thumb, the present invention sets the frame length as...
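The framing step can be sketched as follows. This is a minimal illustration, not the patent's procedure: the frame length is left as a caller-supplied parameter because the source text does not give it here, and the optional `hop` parameter (allowing overlapping frames) is an assumption that does not come from the source. The function name `split_into_frames` is hypothetical.

```python
def split_into_frames(samples, frame_len, hop=None):
    """Split a sequence of audio samples into fixed-length frames.

    frame_len: samples per frame (hypothetical parameter; value not taken from the source).
    hop: step between frame starts; defaults to frame_len, i.e. no overlap (assumption).
    A trailing remainder shorter than frame_len is dropped.
    """
    if frame_len <= 0:
        raise ValueError("frame_len must be positive")
    hop = frame_len if hop is None else hop
    if hop <= 0:
        raise ValueError("hop must be positive")
    last_start = len(samples) - frame_len
    return [samples[s:s + frame_len] for s in range(0, last_start + 1, hop)]

# Each returned frame would then be treated as one audio sample.
frames = split_into_frames(list(range(10)), frame_len=4)
```

Dropping the incomplete final frame keeps every sample the same length, which simplifies later feature extraction; zero-padding the remainder would be an equally plausible choice.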
